The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On January 30, 2024, Denise Holt of Spatial Web AI was joined by Dr. John Henry Clippinger, co-founder of BioForm Labs, research scientist for the MIT Media Lab City Science group, and Founding Advisor for the Active Inference Institute.

I recently wrote an article, “Turning Point in AI — Leaders Rally for a Natural AI Initiative,” about a roundtable discussion sparked by a pivotal letter signed by 25 neuroscientists, biologists, physicists, policymakers, AI researchers, entrepreneurs, and directors of research labs. Their joint initiative proposes a radical rethinking of AI’s trajectory: the signatories advocate a shift away from the current deep learning paradigm toward a more natural approach to AI based on Active Inference.

Dr. Clippinger was a key figure in orchestrating that Letter, and I had the pleasure of interviewing him on my Spatial Web AI Podcast to discuss the significance of Active Inference and the move towards a more natural, principled AI, emphasizing the importance of interdisciplinary collaboration and a deeper understanding of intelligence.

Clippinger revealed that the inspiration for the letter stemmed from a growing concern over the misframing of AI in the public narrative. He observed that certain parties were exploiting this misframing to push their own business models and agendas. The letter aimed to provide an alternative, science-based perspective on the biological foundations of AI and to engage a broader community in this critical conversation.

The response to the letter was overwhelmingly positive, resonating with individuals across various disciplines. John stressed the importance of involving policymakers, technologists, and the general public in discussions about the development and regulation of AI technologies. By fostering open dialogue and collaboration, we can work towards creating AI systems that align with human values and societal needs.

One of the key issues addressed in the letter is the need to redefine our understanding of intelligence. Clippinger noted that extreme claims regarding the emergence of Artificial General Intelligence (AGI) are often based on misguided comparisons to human minds and do not consider the diverse forms of cognition found in nature.

He emphasized that intelligence should be understood as a spectrum, with various forms manifesting in different substrates. By broadening our perspective on intelligence, we can develop AI systems that complement and enhance human capabilities rather than attempting to replicate or replace them.

Dr. Clippinger highlighted the growing significance of digital twins and the need for interdisciplinary collaboration in AI development. Digital twins, which are virtual representations of physical systems, can serve as powerful tools for understanding and optimizing complex processes, from individual organisms to entire cities.

By bringing together experts from various fields, including computational neuroscience, biology, physics, and social sciences, we can create more accurate and comprehensive digital twins. These models can help us address pressing global challenges, such as climate change, by enabling more informed decision-making and facilitating the development of effective solutions.

To ensure the responsible development of AI technologies, John emphasized the importance of establishing a testable foundation based on scientific principles. He noted that current Deep Learning models often lack a solid scientific basis and are developed without clear performance standards or evaluation criteria.

Clippinger discussed his collaboration with experts, including Dr. Karl Friston, Chief Scientist at VERSES AI, to lay down a framework for AI development that is grounded in the principles of computational neuroscience, biology, and physics. By creating AI systems that are transparent, explainable, and auditable, we can foster trust and accountability in the development and deployment of these technologies.

During the interview, John and Denise explored the concepts of collective mental health and extended cognition in relation to natural intelligence. John suggested that by understanding the biological foundations of intelligence, we can gain insights into the factors that contribute to the well-being of individuals and communities.

Dr. Clippinger envisioned a future where AI systems, designed according to the principles of natural intelligence, could support and enhance collective mental health. By extending our cognitive capabilities and facilitating more effective communication and collaboration, these systems could help us address social and environmental challenges more effectively.

As AI systems become more sophisticated and integrated into our daily lives, the nature of human-machine interactions will evolve. Clippinger discussed the potential for AI systems based on natural intelligence to develop more symbiotic relationships with their human counterparts, adapting to individual needs and preferences while maintaining transparency and explainability.

He speculated on the development of synthetic languages that could facilitate more effective communication between humans and machines. These languages, emerging from the interactions between AI systems and their users, could enable more nuanced and contextually relevant exchanges, leading to more meaningful and productive collaborations.

Clippinger emphasized the importance of communication initiatives in elevating conversations about AI and bridging different groups. He discussed his efforts to bring together researchers, technologists, policymakers, and the general public to discuss the development and regulation of AI technologies.

By fostering interdisciplinary dialogue and knowledge sharing, we can work towards a more comprehensive understanding of the challenges and opportunities presented by AI. These conversations can help inform the development of ethical frameworks and regulatory guidelines that ensure the responsible development and deployment of AI technologies.

John Henry Clippinger’s interview offers a compelling vision for the future of AI, one that is grounded in the principles of natural intelligence and emphasizes the importance of interdisciplinary collaboration. His insights, along with the groundbreaking work of Dr. Karl Friston and VERSES AI, underscore the need for a fundamental shift in our approach to AI development. By embracing Active Inference and First Principles AI, we can create AI systems that are more efficient, adaptable, and transparent than current Deep Learning models.

As we move towards an increasingly AI-driven future, it is crucial that we prioritize scientific principles, interdisciplinary collaboration, and public discourse in shaping the development of these technologies. Only by working together can we ensure that AI serves as a tool for empowerment and progress, rather than a source of existential risk or centralized control.

Moving forward, it is crucial that we prioritize communication initiatives that foster open dialogue and knowledge sharing among diverse groups. By working together to shape the future of AI, we can harness the potential of these technologies to support collective mental health, extend human cognition, and create more meaningful human-machine interactions.

The shift towards Natural AI represents not only a technological breakthrough but also a philosophical and ethical imperative. By grounding AI in the principles that govern life itself, we can create systems that enhance rather than replace human intelligence, ultimately leading to a more sustainable, equitable, and beneficial future for all.

Connect with Dr. Clippinger:

LinkedIn: https://www.linkedin.com/in/john-henry-clippinger-5ab1b

Connect with Denise:

LinkedIn: https://www.linkedin.com/in/deniseholt1

Learn more about Active Inference AI and the future of Spatial Computing and meet other brave souls embarking on this journey into the next era of intelligent computing.

Join my Substack Community and upgrade your membership to receive the Ultimate Resource Guide for Active Inference AI 2024, and get exclusive access to our Learning Lab LIVE sessions.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind the scenes access to the most cutting edge AI news and information.

00:13

Denise Holt

Hi, and thank you for joining us again for another episode of the Spatial Web AI Podcast. Today I have a very special guest. John Henry Clippinger is with us today. He is the co-founder of BioForm Labs. He is also the founding advisor for the Active Inference Institute, and he is a research scientist for the MIT Media Lab City Science group. John, thank you so much for joining us today. Welcome to our show.

00:46

Dr. Clippinger

Well, thank you for having me. It’s great to be here. Let me give a little more context on my background, to see how it’s relevant to this. I’m at MIT, in the Media Lab City Science group. Before that I was with Sandy Pentland and his Human Dynamics group, and he and I started a foundation called Digital Institutions decentralization. We sort of anticipated blockchain, things like that. Much of that work is about privacy and self-sovereignty. Before that, I was at Harvard at the Berkman Center and founded something called Law Lab. I got my PhD a long time ago in AI and linguistics and started several companies around that. So I’ve had a long interest in artificial intelligence in its early forms and have been tracking it.

01:47

Dr. Clippinger

And so when it crossed this new threshold, I became really re-engaged and felt like, okay, this is a whole new era. It’s really beginning as a science; it’s not just a collection of programming techniques. So I became really interested in it, particularly in the work of Karl Friston. I felt that his work pulled it all together and pushed it over a whole other threshold. As I said, I’d been involved in several companies in artificial intelligence, particularly natural language processing, wrote a book, and was involved with the Santa Fe Institute, complexity science, and self-organizing systems, all of which tied into blockchain and decentralized autonomous organizations.

02:42

Dr. Clippinger

But it was really Karl’s work that I think pulled it together, tied it into physics, and gave it a principled way of thinking, and it was quite transformative for me. I first came to it when I discovered a lecture that Maxwell Ramstead gave. I got to know Maxwell and what he was doing, and I was really impressed. Then we got on a number of the calls and networks he had with Karl, to see what was going on. I worked with Maxwell on some projects and was very taken by what they were able to do. Then Maxwell went off; I actually introduced him to VERSES, the people of the Spatial Web Foundation.

03:31

Dr. Clippinger

I was very interested in the Spatial Web Foundation early on, because I think the whole idea of spatial computing as a principled basis for protecting data and for governance is really foundational. We’re moving into a new era where we explain things by means of digital twins, and that again ties into Karl’s work. So all of this comes together, and I think we’re moving into a whole new era. The motivation for... well, let me back up. I’m also a director of the Active Inference Foundation, and in that capacity I’m really interested in how you get people to understand it and where it’s going. I’ve always been involved in open source software.

04:22

Dr. Clippinger

I’ve been very interested in that, and in making a public good; that’s been part of my career and interests, and it’s part of the rationale for the Active Inference Foundation. I was also part of something called the Boston Global Forum, which is based in Boston but is really global. There the interest was increasingly artificial intelligence: how do we create safe artificial intelligence? How do we make it a public good? The interest spans not just the US but the whole world. The forum is an attempt to bring in people across disciplines. There’s a big disconnect, as you might imagine, between policy people and tech people.

05:15

Dr. Clippinger

I’ve been in government and I’ve done a lot of policy work, so I know they speak two different languages. It’s really important that policymakers not look at this as an afterthought, but really understand the principles and how transformative it is. That’s a little background and context for why I’m engaged in these things, particularly writing the letter that we did.

05:43

Denise Holt

Yeah. And just to back up to what you said about VERSES, I was actually speaking with Dan the other day, and I told him I would be interviewing you. He said they owe you so much gratitude, because you played a really significant part in their early days by introducing them to Maxwell, and therefore to Karl as well.

06:08

Dr. Clippinger

Thank you. I’m glad you said that. Yeah, I was really interested in what they were doing. They were really ahead of the trend, and the fact that it’s going through the IEEE, I think, is pretty significant. So I saw the connection there. But where I’m now thinking and working, and this is part of the idea of BioForm Labs and our collaborations with different people, is that we’re seeing this transition in AI. It’s going to be in everything, and we really do not have a principled governance approach to it. I was very much involved in privacy, in this whole area of self-sovereign identity and data, at the Berkman Center, and was involved with the World Economic Forum, working on some of those policies.

07:01

Dr. Clippinger

I was very concerned that regulation is always much slower. It’s an afterthought: suddenly they slap a lot of things on, and you get a lot of adverse effects. People don’t really understand the technology; they’re playing to the trends and things like that. So, as I said earlier, it’s really important to get a principled understanding of these things. The current narrative is so captured by extreme claims about AGI, superintelligence taking us over, Arnold Schwarzenegger, the Terminator, the end times. I think it actually is a very powerful technology, and it needs to be understood. In my view, intelligence and life, and what it means to be alive, are deeply interconnected. Karl makes this point, you know: it’s like, I’m alive because I can make predictions.

08:03

Dr. Clippinger

And I think Andy Clark’s new book makes this point very well. So what is information? What is energy? What is intelligence? What does it mean to stay alive? There are many kinds of intelligence, and you find that in the work of Michael Levin. It’s a very distributed thing, not one kind of thing. There’s no uniform, monolithic intelligence. I think a lot of this is just projecting onto the machine. Now we’re starting to see the limitations of large language models and transformers, and they’re becoming really apparent. I wrote a piece saying it could be called a parlor trick, like a Ouija board, because people are pushing it around. It’s a Ouija board with memory, and then people ascribe certain things to it.

08:57

Dr. Clippinger

And yet I do think some really fundamental, very profound things have been done as well. I think the transformer is very powerful for capturing semantics in ways we couldn’t before. I don’t want to dismiss that at all. But the current narrative is: okay, it’s only going to run on really big models, so you’ve got to give it to big tech. I don’t think you have to run big models. I think you need causal models. I don’t think you need all those parameters and so on. So I think we’re going to move into that new transition, the next generation that’s coming about.

09:38

Denise Holt

Yeah. And it’s interesting that you say that, because that’s how I see this, too. These autonomous systems are going to be extensions of human knowledge, and human knowledge grows through a diversity of many different minds, many different intelligences, many different levels of understanding and viewpoints, all converging and interacting, and that makes our global knowledge grow. You can’t do that with one brain or one big machine. You’ll just get a reiteration of the same information; the knowledge won’t expand from there. Is that how you see it as well?

10:30

Dr. Clippinger

I totally agree. You see this in nature. There are many different body types adapted to different situations, and different kinds of intelligence; intelligence and body type go together. Different life forms occupy different niches, and they combine to create different ecosystems. So we’re really starting to understand the biology of intelligence, the biology of what it means to be a living thing, and that’s very different from the mechanical, reductionist notion. That’s why I think it’s really important to keep this as open research.

11:14

Dr. Clippinger

We’re just at the beginning of understanding this. I think it’s analogous to where synthetic biology was when the first experiments came out: you really want to keep it open and growing, and be able to involve lots of people in developing and contributing to these intelligences. That’s been my posture, and that was the motivation for the letter as well.

11:40

Denise Holt

Yeah. And so I really do want to get to the letter; I was going to start in a different spot. But to continue with what you’re talking about, one of the things that really interested me in the letter was its point about these machine learning models, the transformers and LLMs: that engineering approaches and advancements were made with no scientific principles or independent performance standards referenced or applied to direct research and development. That’s a really important point. So maybe we can start there. Why is that a problem?

12:27

Dr. Clippinger

Well, I’ve been involved in AI for many years; I was at the MIT AI Lab way back. Really, there was not any underlying science. It wasn’t based on first principles. It was computer science being applied to a problem to see what we could do, and so you had many leaps and lurches. You had symbolic computing, or neural nets and what you could do with neural nets. And, yes, you had McCulloch and Pitts, whose neural net model approximated a physical model. But what you’re seeing now, and this again gets back to Karl, I have to give it to him, is different.

13:13

Dr. Clippinger

By going back to physics and first principles in physics, the Hamiltonian, the principle of least action, free energy, you can actually start to build systems based on first principles and test them against first principles. As a science, that’s a huge shift. If we can look at the physics of the mind and how the mind works with the same rigor with which we understand the physics of physical systems, or even biological systems, and make claims about it, then that really changes how we look at our social institutions and our economic institutions. The whole idea of extended cognition, I think, is extremely profound. And again, you need to base it on first principles.

13:57

Dr. Clippinger

We have been working with various people, not just Karl but also Michael Levin, Chris Fields, and others, asking: how do we lay down a foundation that’s testable in a scientific sense, so that we can make certain claims and then validate them? A very powerful notion is that these principles are scale-free and domain-free. Well, can we really prove that? Can we really show that the same principles work at different scales? If we can do that, it really changes the game, and I think we can. That’s why this is so important.

14:35

Denise Holt

Well, okay, so then let’s talk about the letter. You played a key role in writing it. What was the inspiration? What was its purpose? Why did you think now is the time? And what was the goal in writing this letter?

14:58

Dr. Clippinger

It was frustration at hearing the public narrative captured by a certain point of view. Having been in the field for a really long time and talking to other people, you’re saying, wait a second, this is being misframed. And then the misframing is being exploited by different parties to push a particular view and business model on top of it, which I don’t necessarily agree with. So I tested the waters. I said, am I the only one? And no, it resonated with a lot of...

15:36

Denise Holt

The others who joined you?

15:39

Dr. Clippinger

I mean, one of the first was Chris Fields, but then Karl came in, and Karl was very sympathetic to it too. Then there was a whole other group: some of the people in the active inference world, but it was broader than that. It reached out to a broader community, and through the Boston Global Forum we were also able to reach people on the policy side. I really wanted to make that link. I didn’t want it to be just a tech or science view; we were trying to bring together different people to think about this freshly and not have it captured by a small, particular interest spinning out of Silicon Valley, basically. That was really the motivation for it. The letter went through a number of iterations.

16:32

Dr. Clippinger

David Silbersweig, who was a dean at Harvard Medical School and also head of the neuroscience division at Harvard, was very active in that. He contributed because he’s very interested in developing a new way of thinking about mental health using these principles, looking at mental health beyond the skull, as collective mental health. What we’re asking is: can we link what’s going on? Is there a way to see these things together? So there’s Karl’s work on that, and there’s a bigger community that’s coming.

17:13

Denise Holt

Yeah, I was just going to say that what’s really interesting about that particular notion is that I think a lot of the mental health issues we’re experiencing these days are a result of our interaction with tech. Right?

17:28

Dr. Clippinger

I totally agree. I’m a little bit fanatical about that, because I think what we’ve done is create algorithms of exploitation, depression, and addiction at a social level, and we monetize that. And we don’t have a policy means of saying, hey, that’s an opiate; they’re selling opiates out there. Do we have a principled way, as we do in medicine, to say that is an opiate? Can we say that’s a cognitive opiate, that’s a cognitive addiction? So I’m working on a paper on the whole notion of when ideologies become pathologies. Can you really say an ideology is a collection of Bayesian beliefs that behave in a particular way? And do they exhibit the patterns of something addictive, or even something like cancer?

18:27

Dr. Clippinger

So can we really apply those terms rigorously to mental states and collective mental states? I think so, but you can’t just throw it out there as a metaphor. You really have to actually ground it, you know.

18:40

Denise Holt

Right.

18:40

Dr. Clippinger

So we’re looking at being able to do that. Karl started early work on this, and David’s involved in it too: being able to say, okay, we can do a scan of the brain and map its characteristics at a collective and individual level and see what’s happening. That changes policy big time. It also gets into misstatements. Is this a misstatement or not? Do we have a principled way of saying what hate speech is? What is the misuse of speech to create an addiction or something else? Can we base that on principles? Do we have a way of stating rigorously what a fact is? I could go on and on, because there’s a whole overlap between the scientific method and jurisprudence. In other words, what is a fact? How can I prove it in science?

19:37

Dr. Clippinger

And then in jurisprudence, what is evidence? What’s the consensus? What are the rules of evidence? What kind of conclusions can I draw? And can I have a computational model, something that represents that computationally in a rigorous form, so that I can replicate it and explain it? You can’t do that with LLMs; you can’t even come close. But you can do that with these new models.

19:59

Denise Holt

And what’s interesting about that is that we’re entering into a future where we’re going to have these intelligent agent assistants, like our life assistants, our teachers, our mental health professionals.

20:17

Dr. Clippinger

Well, maybe we’ll get a better sense of their mental health than of some of the mental health professionals. What I’m interested in is whether there’s a ground truth you can establish. It’s never an absolute truth, but you can update it, and you can understand what it’s based on. So, do I trust this agent? How far do I trust this agent? This, again, is where active inference is very good, because variational free energy gives you a measure of how much uncertainty you have about certain claims or data, and you can update that. So it’s very overt about what it doesn’t know. I think it codifies the scientific method, and that can be a principle of governance. That’s the other thing I’m interested in.
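To make the idea of free energy as a measure of uncertainty a little more concrete, here is a minimal sketch for a toy discrete model with two hidden states. It is our illustration, not code from the interview, BioForm Labs, or VERSES; the function names and the numbers (a 50/50 prior and a hypothetical claim whose evidence is 0.9 likely if true and 0.2 likely if false) are assumptions. It uses the standard definition F = Σ q(s) [ln q(s) − ln p(o, s)]: when the belief q equals the exact posterior, F equals the surprise −ln p(o), and any other belief gives a larger F, with the gap being how far the belief sits from what the evidence supports.

```python
import math

def free_energy(q, prior, likelihood):
    """Variational free energy F = sum_s q(s) * [ln q(s) - ln p(o, s)].

    q          -- approximate belief over hidden states (sums to 1)
    prior      -- p(s), prior over hidden states
    likelihood -- p(o | s) for the one observation o actually received
    Assumes p(s) * p(o | s) > 0 wherever q(s) > 0.
    """
    F = 0.0
    for qs, ps, ls in zip(q, prior, likelihood):
        if qs > 0:  # states with q(s) = 0 contribute nothing
            F += qs * (math.log(qs) - math.log(ps * ls))
    return F

def surprise(prior, likelihood):
    """Surprise -ln p(o), where p(o) = sum_s p(o | s) p(s)."""
    evidence = sum(ps * ls for ps, ls in zip(prior, likelihood))
    return -math.log(evidence)

def exact_posterior(prior, likelihood):
    """Bayes' rule: p(s | o) is proportional to p(o | s) p(s)."""
    joint = [ps * ls for ps, ls in zip(prior, likelihood)]
    total = sum(joint)
    return [j / total for j in joint]

if __name__ == "__main__":
    # Hypothetical example: is a reported claim "true" or "false"?
    prior = [0.5, 0.5]
    likelihood = [0.9, 0.2]  # p(evidence | true), p(evidence | false)

    naive = [0.5, 0.5]  # a belief that ignores the evidence
    posterior = exact_posterior(prior, likelihood)

    print("surprise      :", round(surprise(prior, likelihood), 4))
    print("F (naive q)   :", round(free_energy(naive, prior, likelihood), 4))
    print("F (posterior) :", round(free_energy(posterior, prior, likelihood), 4))
```

Full active inference agents add much more (generative models over time, policies, expected free energy), so treat this only as the single-observation core of the idea.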

21:07

Denise Holt

Yeah, and I’m sorry, because I interrupted your train of thought on the letter. For viewers who aren’t sure exactly which letter we’re speaking about, its title was “Natural AI Based on the Science of Computational Physics and Neuroscience: Policy and Societal Significance.” Right. Please continue with how you gathered all these people. You realized everybody was on the same page and saw the same need and significance of bringing this next generation of artificial intelligence to the public forum. Yeah, continue, please.

21:54

Dr. Clippinger

Well, what has happened is that it’s been picked up, and now it’s in another phase. We’re asking how we encourage different groups to adopt this particular perspective. We have been approached by universities and other research groups saying, yes, we like the central proposition of natural AI and keeping it open; how can we develop repositories and things that actually function this way? We’re in the early phases of that, but it struck a chord with a number of folks. And I really do think you’re seeing the cracks in the current model being exposed. Now people are asking these questions: where do we go from here, and how do we shape that?

22:51

Dr. Clippinger

And how do we really make sure that it doesn’t become captive, and that it grows as a public good, as a public resource? That’s where we are right now.

23:02

Denise Holt

Yeah. And in the letter you talk about the pro-social benefits of a natural intelligence approach. Maybe you could speak about that. What are the pro-social benefits?

23:17

Dr. Clippinger

Well, I think there are several things. We’ve mentioned the whole idea of collective mental health, or extended cognition, and having a new way of framing that. The other area I’m very interested in, and have been involved in over the years, is climate change. I’ve been involved in a company called ClearTrace. We actually worked with NFTs that validate certification of green energy production, primarily solar. And I’m one of the founding directors of the Open Earth Foundation, and now I’m working with some other projects. The big challenge in climate change is getting people to believe the measures you have: the ESGs and all these other measurements, plus measurements in different domains at different scales. How do you relate them? Is there an underlying organizing principle?

24:20

Dr. Clippinger

I actually talked to Karl; we organized a call with him a number of years ago about something like a Digital Gaia concept, because he wrote a piece on the Earth as a living system. I think that is the right perspective. The question is, how can you start with different kinds of measures that reflect their associated uncertainty, and tie that uncertainty to the allocation of a financial instrument? As you reduce the uncertainty, that affects the financial instrument. I worked on some projects with the government of Costa Rica on this. They have about 30% of their land mass set aside as reserves.

25:19

Dr. Clippinger

Is there a way they can value that as a natural asset? Can you have assets that are based on nature? How do you value them? And as you get better and better measures, how does that change the value of the instrument or the underlying asset? This is where active inference comes in: you can tie your measurement uncertainty to the risk associated with a financial instrument. I’m working with other people on that, and a number of groups are really interested. It’s a big issue. If you can crack that nut, you get a lot of people to contribute, because they believe in the metric. It’s explainable, so they can assign value to it, and then you can attract capital and scale it.

26:09

Dr. Clippinger

So I think that is the only way we’re really going to address climate change at scale. It comes back to having a valid way of measuring things: one that’s transparent, that updates itself to reflect what it doesn’t know, and that is reflected in a particular kind of bond or financial instrument.

26:27

Denise Holt

Yeah, it’s interesting, because that’s one of the things I’ve recognized about what the Spatial Web Protocol will do in taking us into these 3D digital twin spaces that are now programmable. Once everything becomes a digital twin, you can run simulations, and you can’t really argue with them. Right now you have scientists saying, we see this as the potential outcome, and then you have people going, well, I don’t believe it; maybe it will, maybe it won’t. But if you have concrete calculations that are telling you...

27:04

Dr. Clippinger

One of the things we experimented with was actually creating a digital twin of something, and as your data gets better, the resolution of the digital twin gets better. Think of a tree that starts out very highly pixelated; as you drill down, you understand more and more of the tree’s dynamics, you can predict more about its behavior, and therefore your data is more valid. You see that, and people can work toward a common goal. So this is like active inference: you can select policies, the observations or actions you take in order to reduce the uncertainty, and you can get compensated for that. So you have a principled way of compensating the people who contribute to an outcome. To me, that is a new kind of governance principle, and you can see it visually.

27:54

Dr. Clippinger

So this is where the spatial web protocol comes in. I can actually see something coming into being, I can work with other people, and I get a very good model of it. That has generated value, and therefore there should be some kind of allocation of value for it.

28:11

Denise Holt

Yeah, really. With a system like that, you can create bespoke futures. You know exactly what steps to take to get the outcomes you’re looking for, because you’re able to run the simulation and see the prediction. That’s huge.

28:29

Dr. Clippinger

You can explore a huge space that way. Autodesk is doing a lot here. I don’t know if you’re familiar with what they do, but it’s really worth looking into, because you can say, I can design a whole building and set certain parameters, and it’ll generate different variants of it. Then I can manage the constraints, see what I want, send it off to a 3D printer, and print it. That’s where we’re going.

28:56

Denise Holt

Yeah. Fascinating. Wow. Okay, so you were also talking about one of the important things about introducing this idea of a natural approach to these autonomous systems: that it’s going to focus us on the potential outcomes of AI as an opportunity for our future rather than a threat. And there are these existential threats that people are talking about. Do you think those really are potential outcomes, bad outcomes, if we stick with the direction we’re in, focusing just on machine learning and deep learning?

29:50

Dr. Clippinger

The analogy, I think, goes back to Ada Lovelace. She said, wait a minute, machines don’t think; you’re projecting onto the machine. And Joseph Weizenbaum saw the same thing with the ELIZA program: it was a very simple little program that simulated a shrink, and everyone projected everything onto it. I think there’s a lot of projection, good and ill. I don’t think the threat is necessarily inherent, because there’s no intention in its mind. So it’s a projection, and it’s a projection of the business model; people are just displaying what they’re about. That said, I did a certain amount of work with government, with the DoD, in a whole area of network warfare and decentralized command and control systems. When you look at that world, it’s hard to tell.

30:52

Dr. Clippinger

I mean, look at what’s happening with drones. I know people have done interesting things with drones, but you’re going to have autonomous drones, and the military is going to drive that. So it is a huge disruptor. How these things get shaped depends on the institutional context in which they’re developed. It’s not inherent; it’s the humans.

31:17

Denise Holt

That will be stupid with the technology.

31:20

Dr. Clippinger

Or they project their legacies. Now, ideally, our technology is going to make us smarter, and collectively smart. We’re going to get these multiple intelligences. We’re going to know what we don’t know, and we’re going to be accountable for what we don’t know. And that’s a big change. We’re enforcing accountability around our own stupidity. Well, that’s good, and we don’t have that now. Right now anyone can make any kind of claim and get away with it. And you say, wait a second, no, if we’re going to have really valid models, there’s a protocol for doing that, we understand it, and we’ll hold it to a high standard. So I think that is a great thing. I think that’s huge; that’s a very big plus. But you do have to be aware of the institutional contexts in which it’s developed.

32:08

Dr. Clippinger

And that’s part of my thinking: creating an institutional context that doesn’t get twisted, but actually realizes the full value of it.

32:18

Denise Holt

What are your thoughts on the misinformation side of it? Because with this...

32:21

Dr. Clippinger

I’m really big on that. I’m really big on trying to correct that. I’m working on a piece, again, about what a fact is. What is evidence? What can I rely upon? And we do have methods. I mean, the legal system absolutely depends upon that. That’s why you have a jury of peers who rule on the evidence. You have a whole set of processes to bring out evidence, but that is subject to all sorts of exploits. The whole point of the active inference model is to codify the scientific method in such a way that you can update, upgrade, and be explicit about what you’re doing. You’re not saying you have the 100% answer. There’s no such thing.

33:12

Dr. Clippinger

But you can say, I know this to a certain degree of confidence, I can upgrade it, I can scale it, and it’s totally transparent. I think eventually we’re going to depend upon these kinds of things for what we rely upon: trusted agents and certifications of fact. I think that’s going to be really important. So it’s the provenance of the data, where it came from, and being able to replicate that, to know to what extent I can rely upon it. We’ll have different tests, and then I’ll have agents that embody that and I’m willing to go with them, and at any point I can inspect them and ask them to explain themselves, unwind themselves, and go back and audit it. That becomes really critical. Again, you can’t do that with the current LLMs.

33:56

Denise Holt

No, they’re fed all kinds of information, including a lot of inaccurate information.

34:03

Dr. Clippinger

Absolutely. When they make it up, I mean, they just make it up.

34:07

Denise Holt

Because it’s funny, I tell people this all the time. They’re trained to spit out an appropriate output, but appropriate and accurate are two different things.

34:23

Dr. Clippinger

They’re designed to please. It’s like a yes man: okay, whatever you say. These poor lawyers have used it for legal work. I mean, there was a review; I think you saw that, at Stanford or somewhere, where it just generates things, so...

34:42

Denise Holt

You can lead the witness. Very easy.

34:45

Dr. Clippinger

So you really have to curate the databases; you really have to curate these things. But once you do that and you have a trusted, independent process that’s based upon first principles, that’s a real move ahead for us as a civilization. That’s a real opportunity.

35:04

Denise Holt

It’s interesting, because to me that’s the big advantage I see with this approach, especially when you consider what VERSES is doing with the Spatial Web Foundation and the spatial web protocol. That’s going to give these intelligent agents facts to develop their understanding of their environment, their frame of reference. It’s going to be based on actual real-time programmed data about all of the things and their interrelationships, and then they get real-time sensory data too. So they’re actually dealing with what’s real in the moment.

35:41

Dr. Clippinger

Yeah, the sensor data and the whole Internet of Things, zillions of things that are out there. I was interested in blockchain and all that distributed governance, and what you see with old networks: in a distributed system, you get trillions of these things out there.

35:41

Dr. Clippinger

And there’s something called SON, a self-organizing network protocol that’s actually in use right now, where these things correct themselves. If you can’t trust those things, then you’re really in trouble, right? So they have to do it at the edge, figure it out at the edge. And I think we absolutely depend upon a trusted network that can verify itself, which then allows the greater inferencing to take place on top of that.

36:34

Denise Holt

So how do these systems cut through the noise of all the signals? There are going to be so many sensors. I’d love to hear how you explain the self-optimization and the minimizing of complexity. If you could give your explanation of how this will function, I think our viewers would appreciate that.

37:06

Dr. Clippinger

Well, I think what you’re doing is creating models; you’re trying to identify causal models. That’s the whole underlying process. You’ve got a quantum reference space; you have something in multiple states in a Hilbert space. How are you able to project that into a three-dimensional space? And then how are you able to infer causal models, and how do you validate those causal models? And then you’re always updating them. So I do think that’s inherent in this whole process. I think the whole idea of being intelligent is also being able to compress complexity while maintaining levels of fidelity. Right. So that is the challenge. And then being able to know when to jump from one state or one belief into another, because you may get locked in. So it’s the exploit-versus-explore problem.

38:19

Dr. Clippinger

But there are principled ways of doing that. So I’m very positive about all that; I share with you the optimism that goes along with it. It’s just that we’re in an institutional context that does not yet see the economic value of that particular model, and I think that’s because they’re still in the extractive mode, with addictive algorithms rather than generative algorithms.

38:58

Denise Holt

What’s crazy about that to me, though, is that when you look at where the future of technology is going and the necessity for these smart city infrastructures and the possibility that we can build these smart cities that will become sustainable and inclusive and all these things that we need for a healthy population and a healthy planet, how could people not think this is, like, critical?

39:24

Dr. Clippinger

Very much so. This has been a lot of my involvement in Kent Larson’s group, the City Science group. They’re building models of cities, and you can change the models based upon the outcomes that you want and see the impacts. They’re trying to increasingly virtualize a city and then model different kinds of behaviors to see what impacts you have on, say, carbon sequestration or carbon generation, but also on pro-social behavior. That’s one of the things that got me interested. Again, implied in Karl’s work is the idea of modeling a community and asking: okay, how does the community function? How does it dysfunction? Say we want to change it in order to make it more equitable and open up opportunities.

40:19

Dr. Clippinger

What are the various things that affect those kinds of outcomes? We can start to model that, and I know Kent’s group is doing simulations of that. Kent Larson has been very involved in that. He’s working with a very famous architect, Norman Foster, and they actually just had a big event last week. So this is the next generation: reimagining cities, but also how to make them really local, make them enjoyable, create the right kinds of human interactions and the right kind of scale, and give people a sense of agency and significance within the context of a city. Make it really a living thing, not a machine thing. I’m very partial to that. I work in Italy, so I love the way that Italians do things. It’s a relational, artisanal economy.

41:15

Dr. Clippinger

People enjoy the moment and enjoy conversations about what they’re doing. How do you create a context that allows that to happen? This can definitely facilitate that.

41:27

Denise Holt

How do you see that kind of interaction between humans and machines in the future? Because we’re likely going to have robots in our homes. We’re going to have these virtual agents that are our assistants, but with an embodiment to them, a personification. So how do you see that kind of artisanal interaction, of how we might cooperate?

41:57

Dr. Clippinger

These are extensions or manifestations of ourselves. And an artisanal economy is one of building relationships and valuing differences in people. So you could have a very eccentric robot, like my very Italian friends. They have these really odd hobbies and things they carry to great extremes. They may be the world’s expert in a certain kind of wine from a particular terroir. Okay, that’s great. That’s a great conversation; I never knew I’d have that. I think the challenge for people, and it’s a profound challenge, is to feel that you’re dealing with something that is not alien, that’s authentic, rather than feeling that you’re living in a spoofed world. That I think is going to be really tricky, because right now people try to spoof you for everything, right? Phishing attacks and things like that.

42:59

Dr. Clippinger

But what happens when you get a robot that’s modeling your mind and then trying to play your mind to see what it can get you to do? It’s going to get tricky. It’s going to get really creepy. Right. You could have a facsimile of yourself showing up and arguing with you. It wouldn’t be that hard to have a replica of myself interrupting me and telling me to get off the call, right?

43:32

Denise Holt

Cloning with no genetics involved.

43:37

Dr. Clippinger

You could go to some really strange places, though, the other way. It’s going to shake a lot of foundational habits and beliefs. People are terrified of what’s going on right now for kids, and of how to bring up kids without having them be exploited. Just throwing something out there and saying, oh, sorry, I think that’s not good enough. You have to appreciate that.

44:14

Denise Holt

Okay, so if an autonomous intelligent agent can, for lack of a better term, clone your mind and really replicate you, where does identity lie in that, and what about proof of identity?

44:34

Dr. Clippinger

I don’t know. I was really interested because Karl came out with this paper on communication and language, and I think he’s coming out with another paper. One of the things that got me interested in AI was that language was a singularly human thing. No other creature has language. And through language we can imagine other worlds; we can simulate. So it really is our special power, I believe. Now, lo and behold, I was looking at Karl’s work, and I think you can have two agents come together and create synthetic languages. In linguistics you have something that is a pidgin, then a creole, and then a full language. A pidgin is like two people who never met each other: they point to different things, they establish certain kinds...

45:25

Dr. Clippinger

They’d be able to name things, distinguish things, establish certain predicates or relationships, and then out of that draw a creole language and then a full language. I think what he has shown is that there are these conventions, and they are reflected in the structure of the brain. This is how we do this. Now, if we simulate that for agents, then I can have a conversation with an agent over a period of time and develop a private language with that agent that would be very tuned to everything I do. It’s like a good dog that watches everything you do. A good agent will watch, and it’ll figure out where you’re going to go and what you’re going to do. It’ll figure it out, the same way. Does that make you feel better, or is that being watched? It depends upon context, right?

46:24

Dr. Clippinger

I mean, if it was a person and you really trusted that person, you say, I have a really intimate relationship. If you didn’t trust the person, you think, I’m being spied on, I’m being exploited. Right. You just have a very opposite response.

46:39

Denise Holt

Right. And it’s interesting what you say about language there, because with our closest friends and in our closest relationships, we do have these private bonds of communication, nicknames for each other and inside jokes. And you only have to say one or two words and the other person...

47:02

Dr. Clippinger

Exactly. The other friend completes it.

47:04

Denise Holt

That’s right. It brings you closer, and it solidifies that bond. So think of having these kinds of interactions with an autonomous agent: if that autonomous agent doesn’t have real emotion, will it be the same? Will it just be felt on the human side? I don’t know.

47:27

Dr. Clippinger

Well, I don’t know. But on emotion, look, we’re talking about living things, and we’re looking at different thresholds. I don’t think emotions are off the table for these autonomous living things. I don’t think they’re really cognitive. They have their own self-evidencing, and they have a self vested in being what they’re going to be. So they have fluctuations in their emotions, or their cortisol levels or whatever, that shape what they’re going to respond to. So you may have some with different personalities, and you may get along with one of them. You may have a more intimate relationship with the bot than with the person.

48:15

Dr. Clippinger

It was really interesting because I was judging a set of submissions in Vietnam for a bunch of high school kids, and they were all in special schools, and they were doing stuff in AI, and one of them had done a pretty exhaustive study of romantic relationships with avatars. It’s a thing.

48:44

Denise Holt

I know there’s the Replika app. Yeah.

48:47

Dr. Clippinger

And so they were talking about it and how they build the relationships, and how different types of avatars evoked certain kinds of feelings in kids, because the kids felt very lonely, and the avatar talked to them, and they really liked it. It’s like an imaginary friend for a kid. So it had real standing for them, and it was really interesting, and you got a sense of a lot of isolation among those kids. They’re drawn to something that just recognized them and was their own, that valued them for who they were. So do we treat people through surrogates, or do we treat people directly as people? I think it’s surfacing a lot of things.

49:40

Denise Holt

Yeah.

49:41

Dr. Clippinger

But I can imagine people get attached to their car.

49:50

Denise Holt

Children, babies, get attached to blankets and things.

49:55

Dr. Clippinger

Of course, and animals, and stuffed animals. There’s a real attachment there. So I think it’s inevitable at some point. I mean, it really is.

50:07

Denise Holt

Yeah. That’s going to be really interesting to see unfold, because, like you said, just in the things we are witnessing right now, like the Replika application, where you have an autonomous interaction with a bot: what’s really interesting is that for the first couple of years, I guess, they would let you upgrade to sexual interactions, as far as dialogue and stuff like that. And then at some point, and I think it was early last year, they cut that out, because of whatever problems or concerns were arising. Maybe it had to do with their board of directors, whoever it was, saying, no, we can’t really take it in that direction. So they cut it out.

51:01

Denise Holt

And the people who had been in those engagements with their own bots really suffered mentally over it, to the point where the company went back to allowing it for the legacy users who were already there, because there were people who were suicidal. They felt like they had this relationship ripped away from them.

51:24

Dr. Clippinger

This is really fascinating. It’s what we think is real. So that’s real, right? It’s real. It’s something physical. And we haven’t quite made that leap into saying it’s a reality there. Yeah. No, I think that’s going to be difficult. And I think there are going to be generational divides on this and cultural divides and stuff like that, when you think about how it disseminates, how rapidly it spreads, and the adoption patterns of what’s allowed and what’s not allowed. Yeah. It’d be really tricky to be a parent and think through all this.

52:15

Denise Holt

Oh, I so agree with that. Honestly, I think we’re seeing so much of that already. My kids are all in their early twenties, and even when they were preteens and teenagers, they had to deal with the social media stuff and all of the craziness and the damage control that they were susceptible to every day. You had these anonymous apps. I remember when my daughter was in high school, there was this one app, and I forget what it was called, but it was literally anonymous, so anybody could make a statement on it about somebody. It started going through their school, and it was tearing these kids apart, because they were literally sitting there, you know, having to do damage control against all these rumors and all this stuff that was...

53:10

Dr. Clippinger

No, go on, I wasn’t aware of that. That was one of the things: I was at Harvard when Facebook was started, and I was one of the first people on Facebook, and I took big objection to the anonymity at that time, and to the fact that they didn’t protect kids. It was a big debate. But this idea of an anonymous commentator: there’s so much power in it that it taps into a darker side of people and rewards that whole adolescent venue. It’s really destructive, and there are no antibodies.

53:58

Denise Holt

You know what was fascinating to watch, though? This site came out, right, and it was this storm for like three months or something. And then all of a sudden, all of the kids, at the same time, reached this consensus of, like, forget it.

54:20

Dr. Clippinger

I’m very interested in that, in how that naturally forms. So can you do that? What is the threshold that allows it? This gets into my idea of pathologies and ideologies. So how did they develop a natural immunity to that?

54:37

Denise Holt

Yeah, I think they all just got so frustrated, and something happened, and they all realized they were fighting the same demon, one that just wasn’t there if they walked away. And so they all just...

54:50

Dr. Clippinger

Oh, that’s very interesting. Yeah, that’s a good way of phrasing it. If they walk away, it disappears. That’s really interesting. Yes, that’s right. Because so many conspiracies, so many fear-based things, are constructed that way, exploited that way, and now it’s on the national scene, I think. Oh, that’s really interesting.

55:13

Denise Holt

Yeah, it was fascinating watching that, because as a mom, I’m sitting there going, oh, my baby, all the time. And to me, one of the critical things I saw at that point was that I needed to make sure I was aware of what was happening, kind of parenting from inside the huddle, so that I knew what was going on. I knew it was necessary: she would need my wisdom, my discernment, to help guide her through this, rather than me being outside the huddle and not knowing what was going on. And that’s a delicate balance, too.

55:54

Dr. Clippinger

That’s really interesting. So if you could have an agent that could be a companion to kids, one they trust to provide that discernment, then you could help inoculate them against these kinds of things. No, I don’t like that. Wow.

56:11

Denise Holt

I do not envy any parents going forward. They’ve got even more to deal with. Oh, my gosh. Okay, so back to the letter, and the effort of bringing this to public awareness. Where do you see that going from here? What do you see as the next important step to get this onto more of a public stage?

56:40

Dr. Clippinger

There are a couple of things we’re interested in. One is this whole idea of establishing first principles. We’re claiming that there is a science underpinning this, and it’s a testable science, and therefore we can make certain claims. So we’re trying to pull together different people to identify those first principles, while also having some kind of tests or experiments to validate them. That’s at the high level. At the other level, it’s to educate people as to what this is about, to help them understand the differences in what we’re talking about, a natural AI of intelligences, and also across different disciplines. And I think it’s starting to take hold within, quote, the AI community. I know you put up the debate between Yann and Karl and all that. I think that was rubbish.

57:44

Dr. Clippinger

Karl never says anything bad about anybody. They had to really force it out of him: so, okay, I’ll call it rubbish.

57:53

Denise Holt

Well, yeah, I love the introduction to that, where the moderator was like, okay, we’ve been far too civil for far too long. And he was like, I actually see us as partners in crime, but we’ll make this adversarial for entertainment purposes.

58:08

Dr. Clippinger

Exactly. It’s so English to call something rubbish. It’s so true to form. But that was at Davos, and that’s an elevation. I think Meta is certainly headed in that direction, rebranding and talking in its own terms that way. And so all of this is getting clearer. I also think that running smaller models on smaller platforms is going to be a big deal again. That could change the whole ecology of this thing. Rather than having to be centralized, it’s what we talked about: decentralized, with a whole network of validated nodes and things like that. All that becomes really important. So it’s really incrementally working with people, bringing them together, and elevating the conversation across different kinds of groups. I think there are certain talking points. You’re very good at the talking points.

59:11

Dr. Clippinger

How to explain this. You know, when it started out, I’d point people to Karl’s lecture: here, listen to that. And they’d say, why did you do that to me? But I like him. I like everything. It doesn’t always carry, though. We’re really in an early phase of how this thing is going to get reformed. But a year from now, it will be, I think, much more mainstream.

59:46

Denise Holt

Yeah. This year I started a Substack channel, because I wanted to have a communication hub. But I cannot stand Discord; for some reason, it does not resonate with me. So I was thinking Substack, because my articles can go there, but we can also organize other things around it. One of the things I’m starting, with the first one next week, is a monthly educational presentation around active inference and the spatial web and where this technology is headed. I’m calling it the Learning Lab Live. It’ll be live streams with a live Q&A chat, whatever.

01:00:37

Denise Holt

But I figured that might be a really good way to just create some discourse around this, make it to where people start to gain more understanding, but also bring some conversation.

01:00:52

Dr. Clippinger

I also think that when there is a reference technology application out there that really embodies this, distinguishes itself, and is commercially successful on that basis, then everyone’s eyes are going to go up, right? People see the limitations of current language models, and then, actually, here’s one I can use. I can let it loose, I can count on it, and it’s not going to do squirrelly things. And it actually helps me transform my business; I think of my business in a very different way than I’ve ever thought about it before. And I think you’re starting to see those conversations come around. So in another year or so, I think the world will be in a different place.

01:01:40

Denise Holt

Yeah. Okay. So I know we’re kind of running out of time here, but I have one last question for you. What excites you most about the future of this natural path to AI, and particularly Friston’s work? Where do you see it five years from now? Ten years from now?

01:02:02

Dr. Clippinger

Ten years? I can’t imagine. That’s a whole other thing. Who knows? To me, this has just pulled everything together. There are a number of different fields, and I think it’s applicable to those different fields. Where it has the biggest impact for me is this whole socioeconomic crisis and ecological crisis we’re in. For me, this is a very powerful technique for dealing with a real existential challenge in terms of climate change. If it could get applied there, and we can really come together at scale and address these things, and not be extractive but be generative to the climate, we’re creating more living things. We have a whole way of organizing ourselves that’s based upon life, not the destruction of life. And that becomes our new organizing principle that could change our economy. That can be extremely positive.

01:03:10

Dr. Clippinger

So to me, that is the best thing about it, applied in that direction. That’s what I’m interested in, and I do think it could work there, but I have no idea if I’ve got the right way; I’m working on different pieces of it. But some way it’ll coalesce, because it’s inherent in how... It’s inherent in having the best method of thinking about intelligence, and in social organization and economic organization being based upon these principles. That’s Friston. That’s good.

01:03:46

Denise Holt

Yeah, I agree 100%. So, John, how can people reach out to you? Where can they find you on the Internet if they have any questions or would like to know more?

01:04:02

Dr. Clippinger

Well, I’ll give you two emails; I’ll send those to you. And I’m on LinkedIn, and I always answer on LinkedIn. I’m not a big figure on the Internet; I’m not adroit at being an influencer. You can reach out through those means. That’s fine. And I generally respond.

01:04:38

Denise Holt

I can include those in the show notes so that people can reach out. So, John Henry Clippinger, thank you so much for joining us here today.

01:04:48

Dr. Clippinger

Well, it’s great to have a chat with you, and good luck. And I enjoyed it. Certainly.

01:04:54

Denise Holt

Thank you so much. And thank you to everyone for joining us. And we’ll see you next time.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

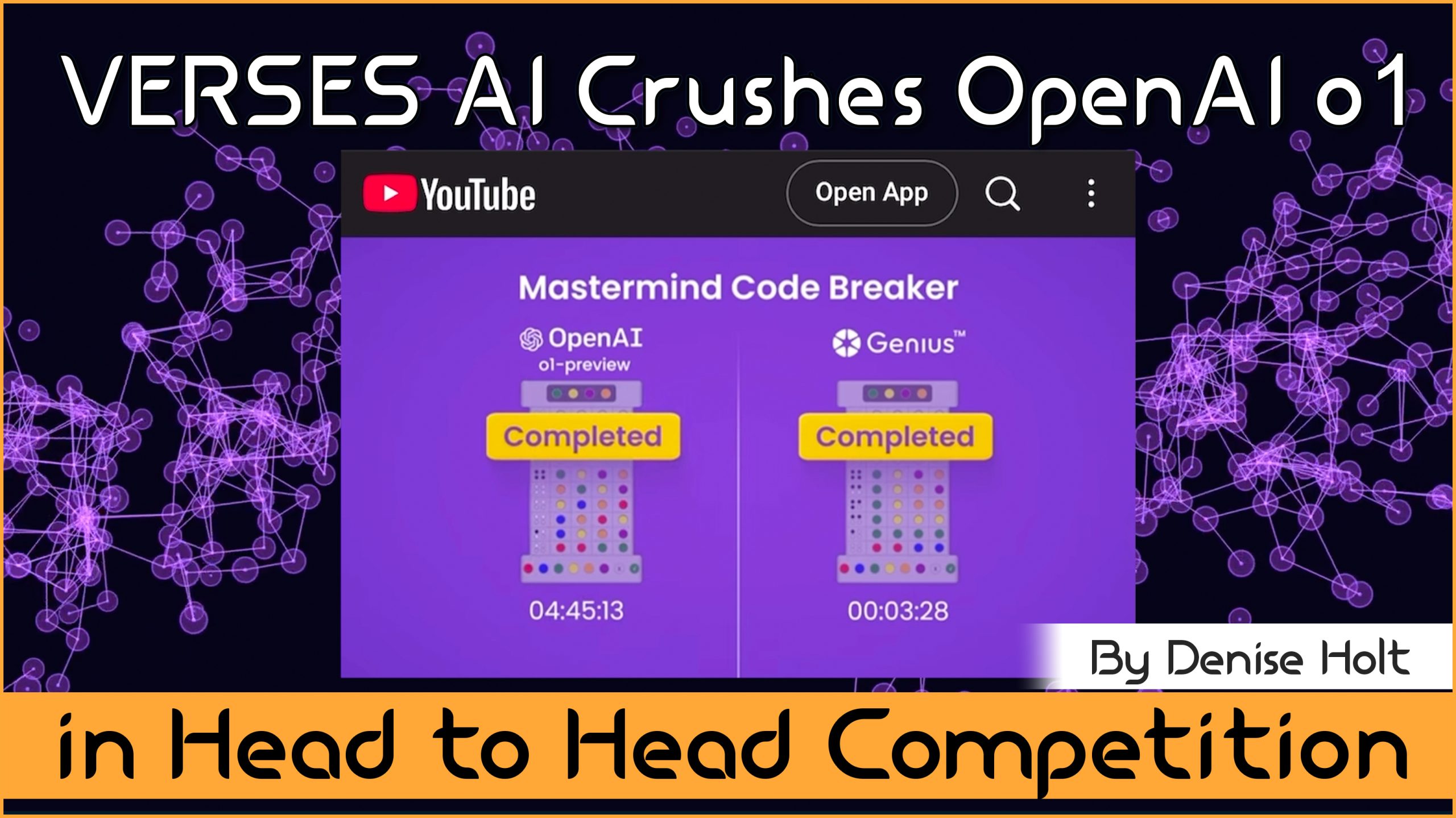

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...