The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

In an unprecedented move accompanying today’s announcement of a breakthrough that reveals a new path to AGI based on ‘natural’ rather than ‘artificial’ intelligence, VERSES AI took out a full-page ad in The New York Times with an open letter to the Board of OpenAI, appealing to its stated mission “to build artificial general intelligence (AGI) that is safe and benefits all of humanity.”

Image by permission from VERSES AI

Specifically, the appeal cites a clause in OpenAI’s charter which, in pursuit of the mission “to build artificial general intelligence (AGI) that is safe and benefits all of humanity,” raises concerns about late-stage AGI development becoming a “competitive race without time for adequate safety precautions. Therefore, if a value-aligned, safety-conscious project comes close to building AGI before we do, we commit to stop competing with and start assisting this project.”

VERSES has achieved an AGI breakthrough along its alternative path to AGI, Active Inference, and is appealing to OpenAI “in the spirit of cooperation and in accordance with [their] charter.”

According to their press release today, “VERSES recently achieved a significant internal breakthrough in Active Inference that we believe addresses the tractability problem of probabilistic AI. This advancement enables the design and deployment of adaptive, real-time Active Inference agents at scale, matching and often surpassing the performance of state-of-the-art deep learning. These agents achieve superior performance using orders of magnitude less input data and are optimized for energy efficiency, specifically designed for intelligent computing on the edge, not just in the cloud.”

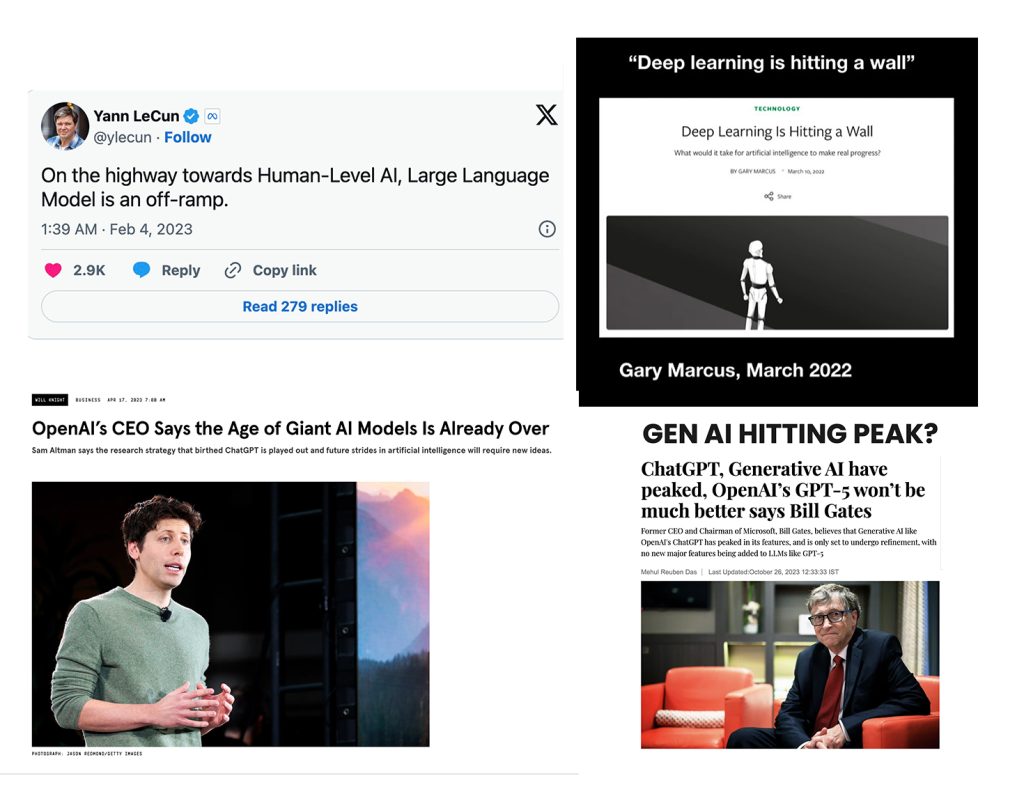

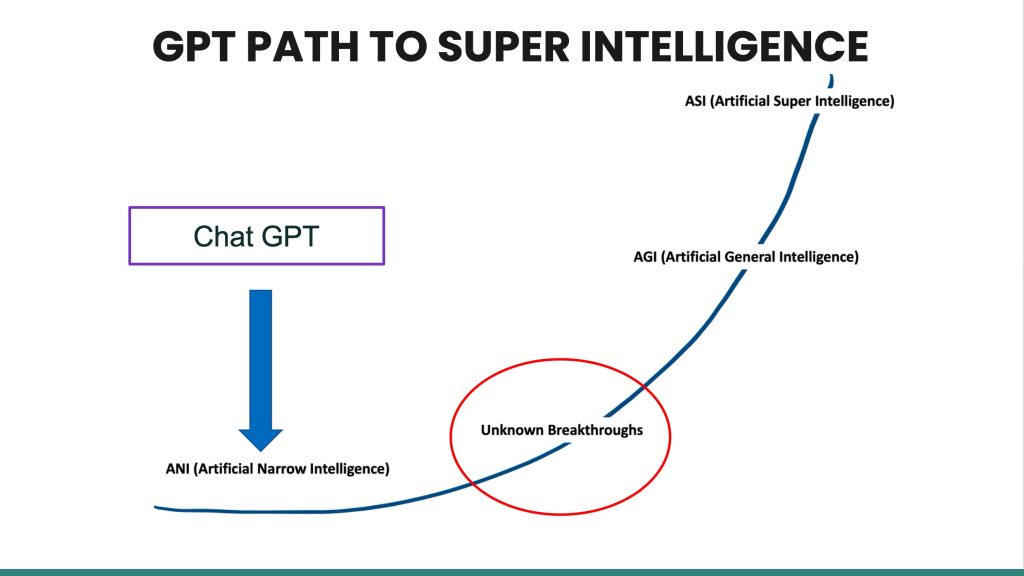

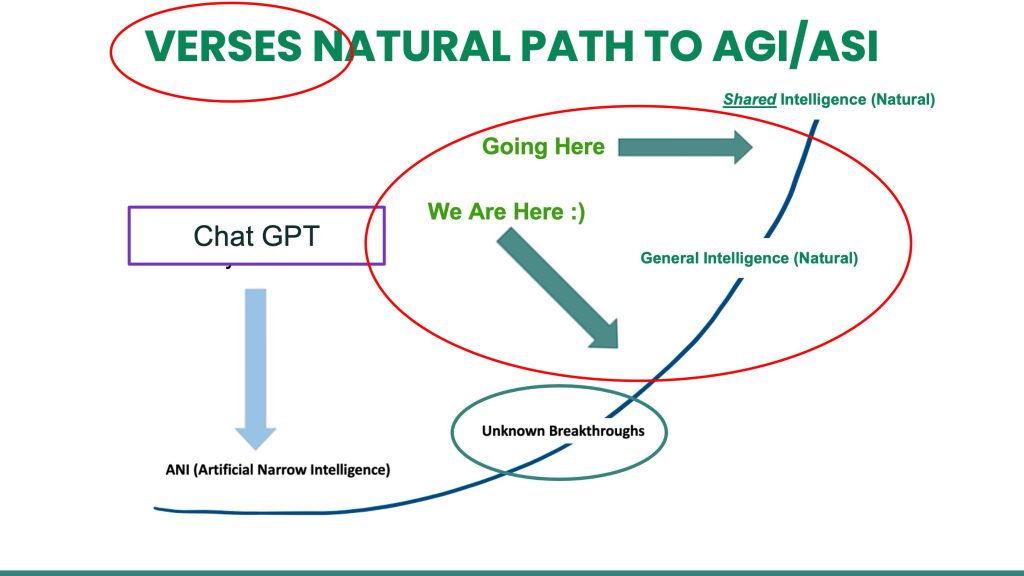

In a video titled “The Year in AI 2023,” published today as part of the announcement, VERSES looks back at the incredible acceleration of AI over the past year and what it suggests about the current path from Artificial Narrow Intelligence (where we are now) to Artificial General Intelligence, or AGI (the holy grail of AI automation). The video notes that the major players in deep learning have publicly acknowledged throughout 2023 that “another breakthrough” is needed to get to AGI. For many months now, there has been overwhelming consensus that machine learning and deep learning cannot achieve AGI; Sam Altman, Bill Gates, Yann LeCun, Gary Marcus, and many others have said so publicly.

Image by permission from VERSES AI

Just last month, at the Hawking Fellowship Award event at Cambridge University, Sam Altman was asked whether LLMs are capable of achieving AGI, and replied that “another breakthrough is needed.”

Image by permission from VERSES AI

Even more concerning are the potential dangers of continuing in the direction of machine intelligence, as evidenced by the “Godfather of AI,” Geoffrey Hinton, a pioneer of backpropagation and deep learning, leaving Google earlier this year over his concerns about the potential harm to humanity of continuing down the path to which he had dedicated half a century of his life.

Image by permission from VERSES AI

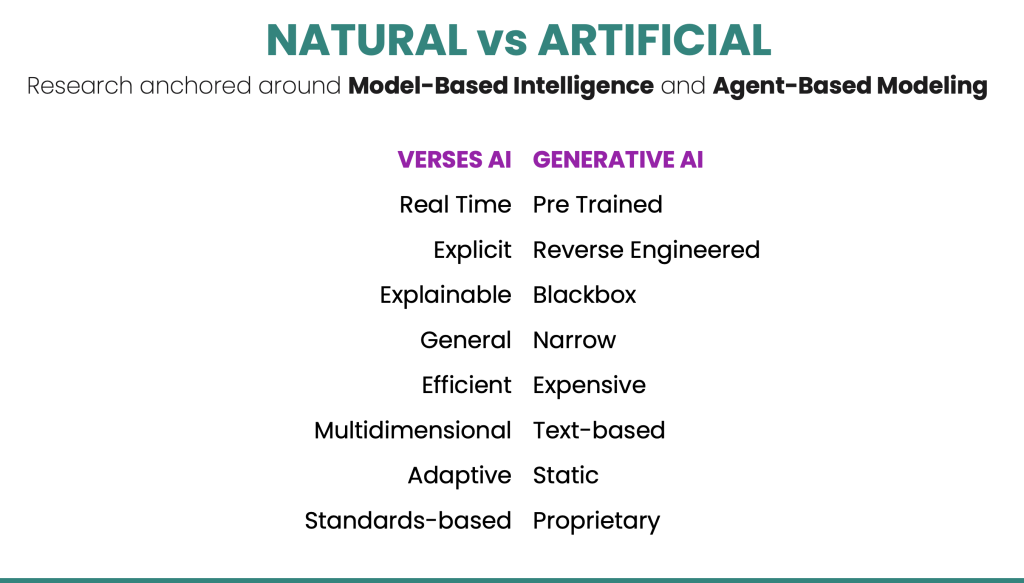

The problems behind these potential dangers of continuing down the current path of generative AI are many, and they are compelling and quite serious:

· Black box problem

· Alignment problem

· Generalizability problem

· Hallucination problem

· Centralization problem — one corporation owning the AI

· Clean data problem

· Energy consumption problem

· Data update problem

· Financial viability problem

· Guardrail problem

· Copyright problem

Image by permission from VERSES AI

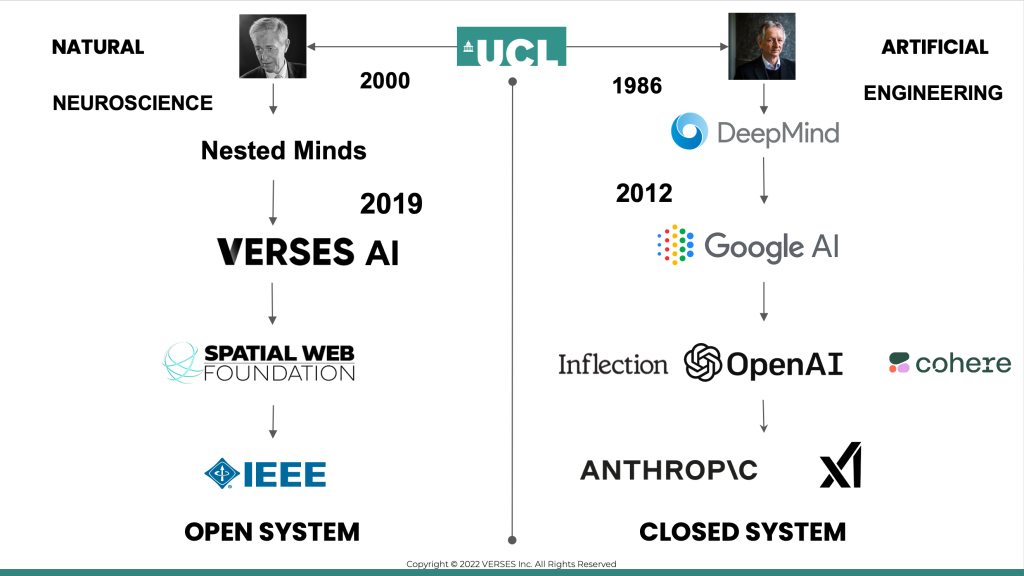

At University College London, two professors worked right next door to each other: Geoffrey Hinton and Karl Friston.

Professor Hinton pioneered deep learning, taking an engineering approach to artificial intelligence that resulted in the handful of monolithic foundation models on which all of generative AI is built today.

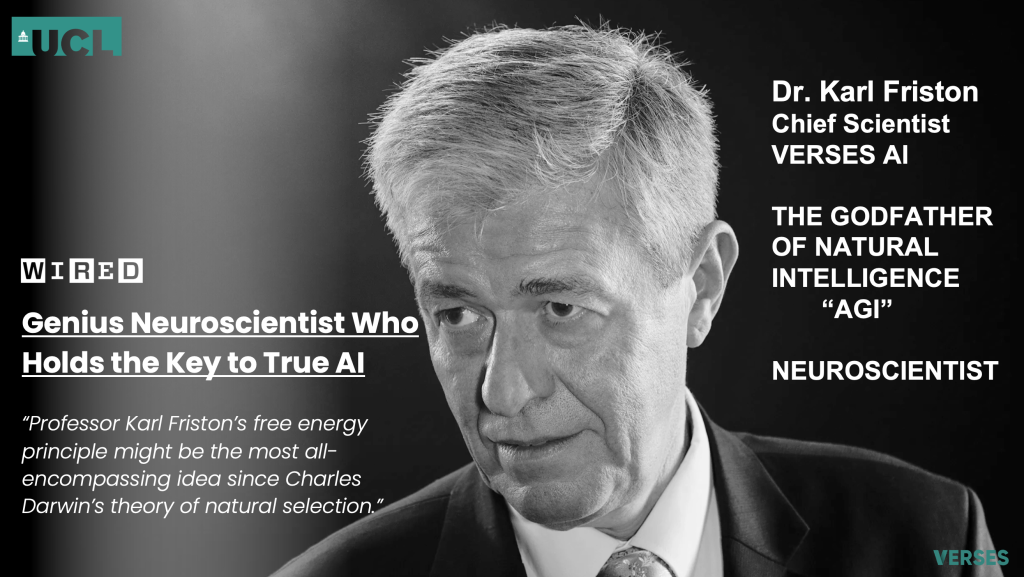

Dr. Friston, Chief Science Officer at VERSES and one of the most renowned neuroscientists in history, had other ideas. He pioneered a method called Active Inference, based on a principle he discovered called the Free Energy Principle, opening up an entirely new path to AGI that is grounded in physics and based on the mathematics of natural, biological intelligence, rather than mere machines and algorithms.

In November 2018, WIRED published an article titled, “The Genius Neuroscientist Who Might Hold the Key to True AI,” stating, “Karl Friston’s Free Energy Principle might be the most all-encompassing idea since the theory of natural selection.”

Image by permission from VERSES AI

In the video released today, Professor Friston says, “You look at machine learning, and you just look at the trajectory. It’s all a trajectory measured in terms of big data, or how many billions of parameters can your large language model handle? That’s exactly the wrong direction.”

In today’s announcement, VERSES explains, “Building on this breakthrough, we developed a novel framework to facilitate the scalable generation of agents with radically improved generalization, adaptability and computational efficiency. This framework also features superior alignability, interoperability and governability in accordance with and complemented by the P2874 Spatial Web standards being developed by the Institute of Electrical and Electronics Engineers (IEEE).”

Image by permission from VERSES AI

VERSES AI is taking a first-principles approach to AI, grounded in the understanding that the path to AGI must be based on natural intelligence. This direction follows the premise that intelligence is multidimensional, adaptive, efficient, and cooperative. The technology they have built along this path lays out a systematic blueprint for achieving AGI and even superintelligence. This is the breakthrough everyone has been waiting for.

Image by permission from VERSES AI

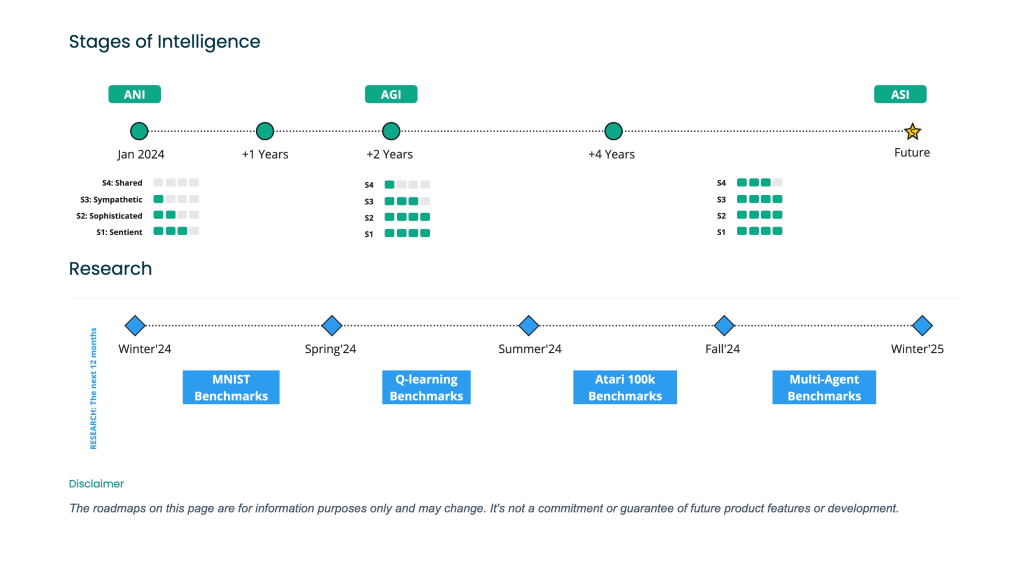

VERSES also publicly unveiled its research roadmap and timeline today, outlining the stages of development for Active Inference AI:

S0: Systemic Intelligence — This is contemporary state-of-the-art AI; namely, universal function approximation (mapping from input or sensory states to outputs or action states) that optimizes some well-defined value function or cost of (systemic) states. Examples include deep learning, Bayesian reinforcement learning, etc.

S1: Sentient Intelligence — Sentient behavior or active inference based on belief updating and propagation (i.e., optimizing beliefs about states as opposed to states per se); where “sentient” means behavior that looks as if it is driven by beliefs about the sensory consequences of action. This entails planning as inference; namely, inferring courses of action that maximize expected information gain and expected value, where value is part of a generative (i.e., world) model; namely, prior preferences. This kind of intelligence is both information-seeking and preference-seeking. It is quintessentially curious, in virtue of being driven by uncertainty minimization, as opposed to reward maximization.

S2: Sophisticated Intelligence — Sentient behavior — as defined under S1 — in which plans are predicated on the consequences of action for beliefs about states of the world, as opposed to states per se. i.e., a move from “what will happen if I do this?” to “what will I believe or know if I do this?”. This kind of inference generally uses generative models with discrete states that “carve nature at its joints”; namely, inference over coarse-grained representations and ensuing world models. This kind of intelligence is amenable to formulation in terms of modal logic, quantum computation, and category theory. This stage corresponds to “artificial general intelligence” in the popular narrative about the progress of AI.

S3: Sympathetic Intelligence — The deployment of sophisticated AI to recognize the nature and dispositions of users and other AI and — in consequence — recognize (and instantiate) attentional and dispositional states of self; namely, a kind of minimal selfhood (which entails generative models equipped with the capacity for Theory of Mind). This kind of intelligence is able to take the perspective of its users and interaction partners — it is perspectival, in the robust sense of being able to engage in dyadic and shared perspective taking.

S4: Shared Intelligence — The kind of collective that emerges from the coordination of Sympathetic Intelligences (as defined in S3) and their interaction partners or users — which may include naturally occurring intelligences such as ourselves, but also other sapient artifacts. This stage corresponds, roughly speaking, to “artificial super-intelligence” in the popular narrative about the progress of AI — with the important distinction that we believe that such intelligence will emerge from dense interactions between agents networked into a hyper-spatial web. We believe that the approach that we have outlined here is the most likely route toward this kind of hypothetical, planetary-scale, distributed super-intelligence.
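To make the “planning as inference” idea from S1 a little more concrete, here is a minimal Python sketch of how an agent might score candidate actions by expected information gain plus expected value (prior preferences), assuming a tiny discrete generative model. The function names (`expected_free_energy`, `select_action`), the likelihood matrices, and the preference values are all hypothetical illustrations. This follows the textbook discrete-state formulation of expected free energy and is not VERSES’s Genius implementation.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


def predict_outcomes(A, qs):
    """Predicted outcome distribution q(o) = sum_s p(o|s) q(s).

    A[o][s] holds the likelihood p(o|s) of outcome o given hidden state s.
    """
    return [sum(A[o][s] * qs[s] for s in range(len(qs))) for o in range(len(A))]


def expected_free_energy(A, qs, log_prefs):
    """Expected free energy of one action: -(information gain + expected value).

    A         -- likelihood p(o|s) the agent expects under this action (hypothetical)
    qs        -- predicted beliefs about hidden states after taking the action
    log_prefs -- log prior preferences over outcomes, ln P(o)
    """
    qo = predict_outcomes(A, qs)
    # Expected value: how well predicted outcomes match prior preferences.
    expected_value = sum(q * lp for q, lp in zip(qo, log_prefs))
    # Expected information gain: mutual information between states and outcomes,
    # i.e. entropy of predicted outcomes minus the expected ambiguity H[p(o|s)].
    ambiguity = sum(
        qs[s] * entropy([A[o][s] for o in range(len(A))]) for s in range(len(qs))
    )
    info_gain = entropy(qo) - ambiguity
    return -(info_gain + expected_value)


def select_action(options, log_prefs):
    """Return the action with the lowest expected free energy, plus all scores.

    options maps an action name to (A, qs) for that action.
    """
    scores = {
        action: expected_free_energy(A, qs, log_prefs)
        for action, (A, qs) in options.items()
    }
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    uncertain = [0.5, 0.5]  # the agent does not know which state it is in
    options = {
        "stay": ([[0.5, 0.5], [0.5, 0.5]], uncertain),  # uninformative observation
        "look": ([[1.0, 0.0], [0.0, 1.0]], uncertain),  # observation reveals the state
    }
    flat_prefs = [math.log(0.5), math.log(0.5)]  # no preferred outcome
    best, scores = select_action(options, flat_prefs)
    print(best, scores)
```

Because the preferences in this toy example are flat, expected value is identical for both actions, so the agent chooses “look” purely because it expects that action to reduce its uncertainty. That is the sense in which such an agent is curious, driven by uncertainty minimization rather than reward maximization; with sharper preferences, the expected-value term would pull it toward preferred outcomes instead.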

Image by permission from VERSES AI

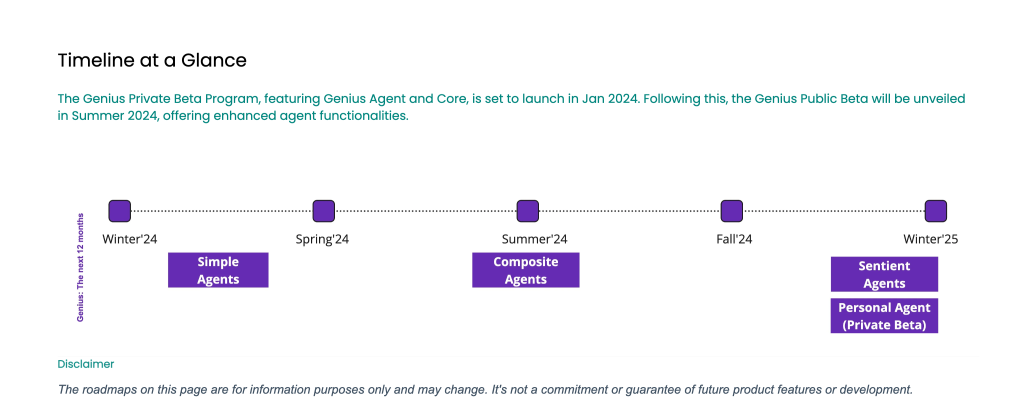

Today, for the first time, VERSES made public its ambitious timeline for the 2024 rollout of its platform. The GENIUS™ Private Beta Program officially launches in January 2024 with previously announced Beta partners such as NASA, SimWell, Cortical Labs, and NALANTIS, with more announcements to come soon. The GENIUS™ Public Beta is set for Summer 2024, with enhanced agent capabilities. The timeline also includes a projected Private Beta of Sentient Agents as “Personal Agents,” planned for winter 2024.

Image by permission from VERSES AI

Not only are LLMs unlikely to achieve AGI, they are highly likely to bring about the worst outcomes for humanity. At the very least, they create confusion and misinformation, and compound many of the things we already struggle with as a human race.

The good news is that Active Inference AI can benefit these machine learning models, which can inherit qualities from Active Inference that address many of their issues.

Consider a newly discovered path to AGI that is programmable, explainable, self-evolving, and governable, and that scales while preserving individual cultural differences and preferences in alignment with human values; that operates securely, overcoming the security issues and obstacles of using LLMs in an unsecured environment like the centralized World Wide Web; and that can specify and adhere to rules and regulations for the various types and stages of intelligence and the governance needs of a wide range of Autonomous Intelligent Systems. With such a path, we have the potential to do things ‘right’ from the beginning, and to embark on this crucial next evolution of technology in cooperation between humans and machines, for the ultimate good of the future of human civilization.

From its inception, OpenAI has assured the public that its mission to develop “AGI that benefits all of humanity” would trump all else, including its own for-profit aspirations. Its charter states very clearly that it would set aside competition to support any leading project that adheres to that mission. VERSES AI is presenting technology and a timeline that fit the criteria set out in that charter. By reaching out to the OpenAI board today to appeal to this spirit of cooperation within the AI community, VERSES AI is demonstrating its own commitment to the public welfare. In a field rife with fear of a corporate race for domination, it’s hard to imagine a more meaningful gesture than that.

At the end of “The Year in AI 2023,” the video released today, VERSES CEO Gabriel Rene, who narrates it, states, “We have achieved the breakthrough we believe leads to smarter, safer, and more sustainable general intelligence systems. This isn’t just about achieving AGI. It’s about what we can achieve with it. The natural path invites us to realign our relationship with technology, nature, and each other. And it calls on us to come together to imagine a smarter world and then build it.”

Full video “The Year in AI 2023,” released today — by permission from VERSES AI

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind the scenes access to the most cutting edge AI news and information.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

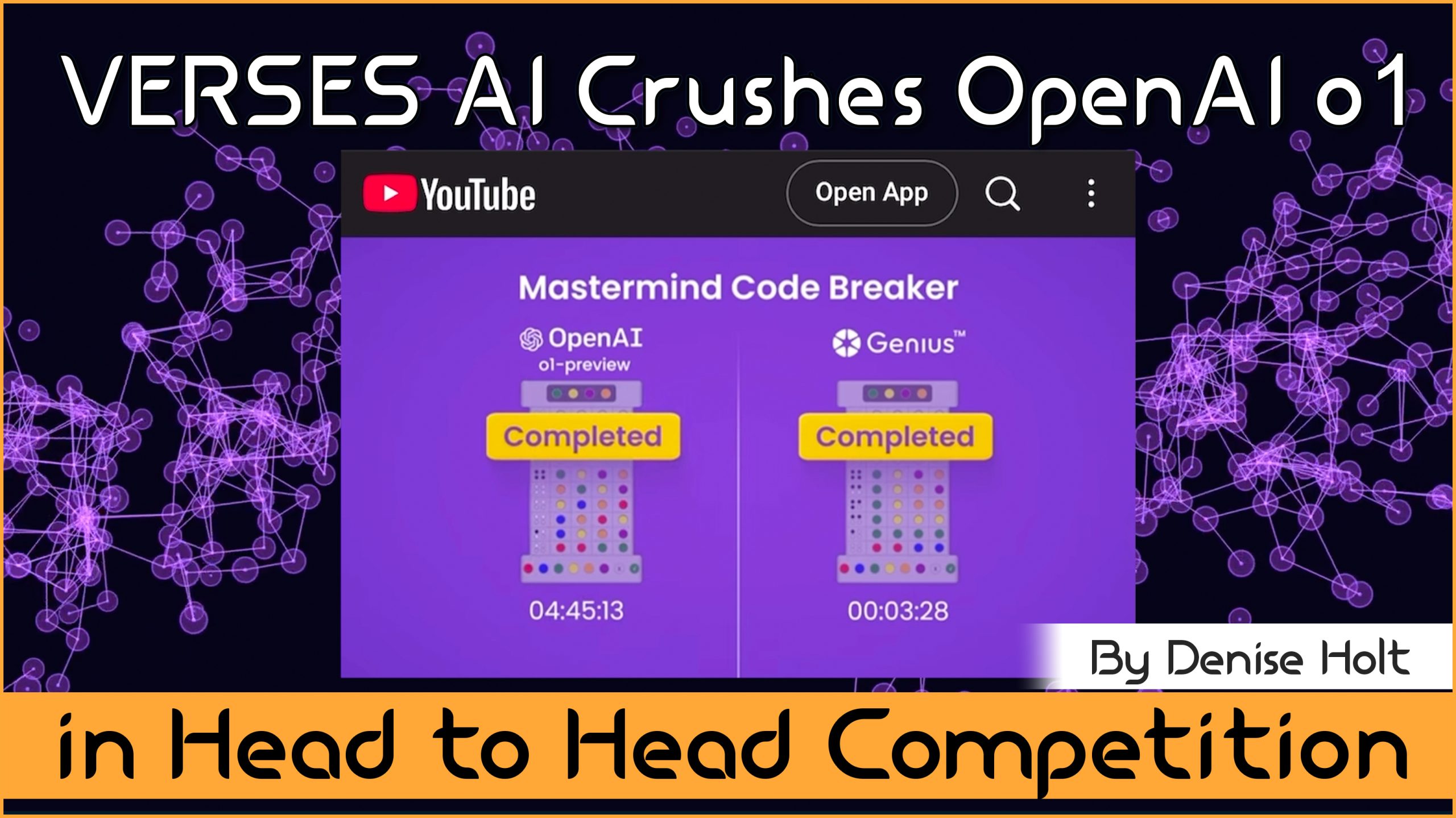

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...