The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

A groundbreaking new artificial intelligence paradigm is gaining momentum, thanks to a major discovery proving its underlying scientific theory.

What if I told you there is a framework for an entirely new kind of artificial intelligence that can overcome the limitations of machine learning AI? I’m talking about an AI that is knowable, explainable, and subject to human governance: one that operates in a naturally efficient way and does not require big data training.

What if I said that its underlying principles have just been proven to explain the way neurons learn in our brain?

This may seem too good to be true, but it’s not. In fact, it’s happening right now.

In December 2022, VERSES AI set forth a sweeping vision for artificial intelligence based on a distributed, collective, real-time knowledge graph composed of Intelligent Agents operating on the physics of intelligence itself. In their whitepaper, titled “Designing Ecosystems of Intelligence from First Principles,” they propose a profound departure from the standard notion of AI as a monolithic system.

Authored by Chief Scientist Dr. Karl Friston and the VERSES AI research team, the paper puts forth the concept of “shared intelligence,” which treats intelligence as emerging from interactions among diverse, distributed nodes (Intelligent Agents), each contributing localized knowledge and unique perspectives.

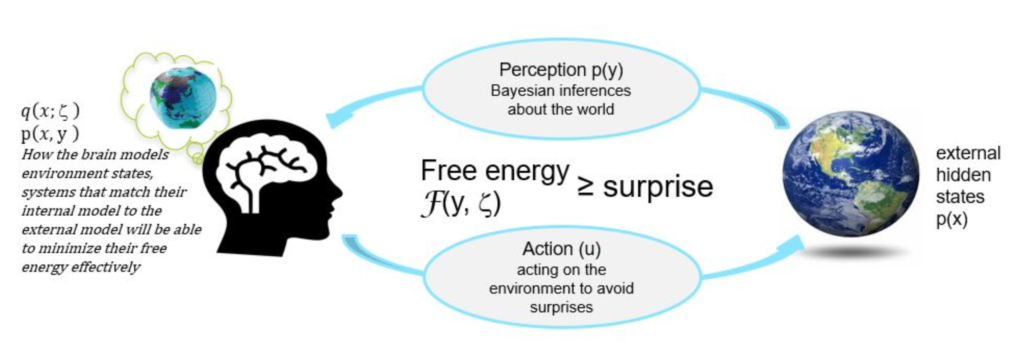

Central to this framework is Friston’s Free Energy Principle (FEP). FEP proposes that neurons are constantly generating predictions to rationalize sensory input. When something doesn’t make sense, these “mismatches” drive learning by updating the brain’s internal model of the world to minimize surprise and uncertainty. This theory neatly explains the remarkable feat of perception and learning in the brain.
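To make the prediction-error idea concrete, here is a minimal Python sketch of a textbook predictive-coding update. It assumes a single hidden cause, Gaussian beliefs, and an identity mapping from cause to observation; the function names and numbers are hypothetical, and this illustrates the general idea rather than reproducing VERSES’ or RIKEN’s code. The belief `mu` starts at the prior expectation and is repeatedly nudged by the precision-weighted mismatch between the observation and the prediction.

```python
"""Toy predictive-coding update: a belief is nudged by prediction errors.

Assumptions (illustrative only): one hidden cause, Gaussian prior and
likelihood, and an identity mapping from cause to observation.
"""


def free_energy(mu: float, obs: float, prior_mean: float,
                prior_prec: float, sens_prec: float) -> float:
    """Laplace-style free energy (up to a constant): precision-weighted
    squared prediction errors on the observation and on the prior."""
    sensory_error = obs - mu
    prior_error = mu - prior_mean
    return 0.5 * (sens_prec * sensory_error**2 + prior_prec * prior_error**2)


def update_belief(obs: float, prior_mean: float = 0.0, prior_prec: float = 1.0,
                  sens_prec: float = 4.0, lr: float = 0.1, steps: int = 200) -> float:
    """Gradient descent on free energy with respect to the belief mu."""
    mu = prior_mean  # start from what we already expect
    for _ in range(steps):
        sensory_error = obs - mu        # "mismatch" between input and prediction
        prior_error = mu - prior_mean   # deviation from the prior expectation
        grad = -sens_prec * sensory_error + prior_prec * prior_error
        mu -= lr * grad                 # move the belief to reduce free energy
    return mu


if __name__ == "__main__":
    belief = update_belief(obs=2.0)
    print(f"updated belief: {belief:.3f}")  # updated belief: 1.600
```

With a prior mean of 0, an observation of 2, and sensory precision four times the prior precision, the belief settles at the precision-weighted average (4 × 2 + 1 × 0) / 5 = 1.6: the mismatch is absorbed by updating the model.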

https://www.kaggle.com/code/charel/learn-by-example-active-inference-in-the-brain-1

This offers a unified theory of brain function, in which perception, learning, and inference emerge from internal models that are constantly updated in this way, and this self-organization follows a pattern that always minimizes the variational free energy of the system. Think of free energy as the “space of the unknown” between what you expect to happen, based on what you already know, and what actually happens, including things you may not expect.
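For readers who want the formal version, this is the standard definition of variational free energy used in the FEP literature, written for a belief q(s) over hidden states s given observations o (the notation is chosen for this sketch):

```latex
\begin{aligned}
F[q, o] &= \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] \\
        &= \underbrace{D_{\mathrm{KL}}\big[q(s)\,\|\,p(s)\big]}_{\text{complexity}}
           - \underbrace{\mathbb{E}_{q(s)}\big[\ln p(o \mid s)\big]}_{\text{accuracy}} \\
        &= D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o)
           \;\ge\; -\ln p(o).
\end{aligned}
```

Here -ln p(o) is the “surprise” of the observation. Because a KL divergence is never negative, free energy is an upper bound on surprise, and it reaches that bound when the belief q(s) equals the true posterior p(s | o).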

Friston shows how this physics principle can be applied through “Active Inference” to create Intelligent Agents that continually refine their world models by accumulating evidence through experience. In this way, intelligence arises from the process of resolving uncertainty — an intelligence that is self-organizing, self-optimizing, and self-evolving.

The trailblazing ideas in the VERSES whitepaper have just gained substantial empirical validation. In a study published in Nature Communications on August 7, 2023, researchers at Japan’s RIKEN research institute demonstrated that real neuronal networks self-organize in line with Friston’s Free Energy Principle, experimentally validating the theory.

By delivering signals to brain cell cultures, researchers showed the emergence of selective responses attuned to specific inputs — confirming predictions of computational models using Active Inference.

This concrete proof in biological neural networks lends tremendous credence to the VERSES AI whitepaper’s proposal of grounding AI in the same neuroscience-based principles.

Mainstream AI today relies on training neural networks on huge data sets to recognize patterns. However, human cognition operates differently, building an internal intuitive model of how the world works.

The proposed Active Inference agents similarly maintain generative models rationalizing observations via dynamic beliefs about their causes. This equips AI with common sense and reasoning abilities beyond today’s data-hungry approaches, mitigating machine learning issues like brittleness and opacity.

Rather than brute-force machine learning, agents continually update internal models — beliefs of what they know to be true — by interacting with the environment to minimize surprise. Just like humans gather evidence to resolve uncertainty and support our worldview, this allows more efficient and adaptable learning, balancing curiosity and goal achievement.
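The balance between curiosity and goal achievement is often formalized as expected free energy, which in one standard decomposition is risk (how far predicted outcomes fall from preferred outcomes) plus ambiguity (how uncertain outcomes are expected to be). The Python sketch below scores two hypothetical one-step policies this way and converts the scores into choice probabilities with a softmax. The model, policy names, and probabilities are invented for illustration; real Active Inference agents plan over sequences of actions and learn these quantities.

```python
import math

# Hypothetical generative model, for illustration only.
# LIKELIHOOD[o][s] = p(o | s); rows are outcomes, columns are hidden states.
# State 0 produces a clear signal; state 1 produces a coin-flip (ambiguous) one.
LIKELIHOOD = [
    [0.9, 0.5],  # p(o=0 | s=0), p(o=0 | s=1)
    [0.1, 0.5],  # p(o=1 | s=0), p(o=1 | s=1)
]

# Prior preferences over outcomes: the agent's "goal" is to observe o=0.
PREFERENCES = [0.8, 0.2]

# Hypothetical one-step policies and the state distribution q(s | policy) each predicts.
POLICIES = {
    "go_to_informative_place": [0.9, 0.1],
    "go_to_ambiguous_place": [0.1, 0.9],
}


def entropy(p):
    return -sum(x * math.log(x) for x in p if x > 0)


def kl_divergence(p, q):
    return sum(x * math.log(x / y) for x, y in zip(p, q) if x > 0)


def expected_free_energy(q_s):
    """Risk + ambiguity form of expected free energy for a single step."""
    n_states, n_outcomes = len(q_s), len(LIKELIHOOD)
    # Predicted outcomes: q(o | policy) = sum_s p(o | s) q(s | policy)
    q_o = [sum(LIKELIHOOD[o][s] * q_s[s] for s in range(n_states))
           for o in range(n_outcomes)]
    # Risk: divergence of predicted outcomes from preferred ones (goal-seeking).
    risk = kl_divergence(q_o, PREFERENCES)
    # Ambiguity: expected outcome uncertainty given states (information-seeking).
    ambiguity = sum(
        q_s[s] * entropy([LIKELIHOOD[o][s] for o in range(n_outcomes)])
        for s in range(n_states)
    )
    return risk + ambiguity


def select_policy(policies):
    """Score each policy by G and turn -G into choice probabilities via softmax."""
    scores = {name: expected_free_energy(q_s) for name, q_s in policies.items()}
    norm = sum(math.exp(-g) for g in scores.values())
    probs = {name: math.exp(-g) / norm for name, g in scores.items()}
    best = min(scores, key=scores.get)
    return best, scores, probs


if __name__ == "__main__":
    best, scores, probs = select_policy(POLICIES)
    for name in POLICIES:
        print(f"{name}: G={scores[name]:.3f}, p={probs[name]:.3f}")
    print("chosen:", best)
```

In this toy setup the informative option also best matches the agent’s preferences, so both terms favor it; changing `PREFERENCES` so that the agent wants `o=1` makes the risk term pull against the ambiguity term.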

To enable seamless collaboration, individual agents then connect within an ecosystem of diverse intelligences: a distributed network of nested collective intelligence called the Spatial Web, which mimics the distributed functionality of the brain. Knowledge and beliefs are exchanged frictionlessly through a common protocol, the Hyperspace Transaction Protocol (HSTP), and a modeling language, the Hyperspace Modeling Language (HSML), which serves as a “lingua franca” for AIs to communicate their generative models of the world and link diverse intelligences.

HSML represents all people, places, and things as digital twins in 3D space, informing Intelligent Agents of the ever-changing state of the world. This equips systems with context beyond data, supporting intuitive reasoning.

Storyblocks Asset ID: SBV-347494173, used with permission

With communication grounded in their internal models, networked AIs develop social abilities like perspective taking and goal sharing. This facilitates recurrent self-modeling and value alignment, overseen by human input. The result is a transparent, decentralized intelligence that dynamically self-organizes, bridging individual capabilities in cooperation with each other.

The authors of this whitepaper propose that the key to achieving advanced AI lies not in simply scaling up deep learning neural networks to handle more data and tasks. Rather, true intelligence emerges from the interactions of diverse, distributed nodes, the Intelligent Agents, each contributing localized knowledge and unique perspectives.

Storyblocks Asset ID: SBV-346749102, used with permission

Complex intelligences arise from combinations of simpler intelligences across levels, just as the human mind emerges from interactions among neurons. This allows knowledge to be aggregated while preserving diversity. Each Intelligent Agent in the network contributes its own localized “frame of reference” shaped by its unique experiences and embodiment.

Unique localized viewpoints are preserved, as diversity provides selective advantages for the collective system. Intelligence emerges in a distributed way that is transparent, scalable, and collaborative.
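One simple, generic way to picture knowledge being aggregated while individual viewpoints are preserved is precision-weighted pooling of Gaussian beliefs. The Python sketch below is that textbook technique, not the mechanism specified in the whitepaper; it assumes each hypothetical agent holds an independent Gaussian belief about the same quantity. The pooled estimate leans toward the more confident agent, while each agent’s own belief stays intact.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    """A hypothetical agent's Gaussian belief about one shared quantity."""
    agent: str
    mean: float
    precision: float  # inverse variance: higher means more confident


def pool_beliefs(beliefs):
    """Precision-weighted fusion of independent Gaussian beliefs.

    Assumes the beliefs are independent and share a flat prior. Returns the
    pooled (mean, precision); the input beliefs are frozen and left untouched,
    so each agent keeps its own local view.
    """
    total_precision = sum(b.precision for b in beliefs)
    pooled_mean = sum(b.precision * b.mean for b in beliefs) / total_precision
    return pooled_mean, total_precision


if __name__ == "__main__":
    # Hypothetical agents and readings, for illustration only.
    agents = [
        Belief(agent="drone", mean=10.0, precision=1.0),
        Belief(agent="ground_sensor", mean=12.0, precision=3.0),
    ]
    mean, precision = pool_beliefs(agents)
    print(f"pooled mean={mean}, precision={precision}")  # pooled mean=11.5, precision=4.0
```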

One of the most fascinating aspects of Dr. Karl Friston’s Active Inference AI, based on the Free Energy Principle, is how naturally it minimizes complexity. Instead of relying on massive training datasets, it can take whatever data is available and make it useful within the distributed network, as individual Intelligent Agents continuously update their internal models.
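The claim about minimizing complexity has a precise form: variational free energy can be written as complexity (how far a belief moves from the prior) minus accuracy (how well it explains the data). The Python sketch below, using a made-up two-state model, compares three candidate beliefs after a single observation; the model and numbers are hypothetical.

```python
import math

# Hypothetical two-state model: prior over hidden states and the likelihood
# p(o | s) of the one observation the agent actually received.
PRIOR = [0.5, 0.5]
LIKELIHOOD_O = [0.8, 0.3]


def complexity(q):
    """KL[q(s) || p(s)]: how far the belief departs from the prior."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, PRIOR) if qi > 0)


def accuracy(q):
    """E_q[ln p(o | s)]: how well the belief explains the observation."""
    return sum(qi * math.log(li) for qi, li in zip(q, LIKELIHOOD_O) if qi > 0)


def free_energy(q):
    """Variational free energy = complexity - accuracy."""
    return complexity(q) - accuracy(q)


def exact_posterior():
    """Bayes' rule: p(s | o) = p(o | s) p(s) / p(o)."""
    joint = [pi * li for pi, li in zip(PRIOR, LIKELIHOOD_O)]
    evidence = sum(joint)  # p(o)
    return [j / evidence for j in joint], evidence


if __name__ == "__main__":
    posterior, evidence = exact_posterior()
    candidates = {
        "stay with prior": PRIOR,
        "exact posterior": posterior,
        "overconfident": [0.99, 0.01],
    }
    for name, q in candidates.items():
        print(f"{name:16s} complexity={complexity(q):.3f} "
              f"accuracy={accuracy(q):.3f} F={free_energy(q):.3f}")
    print(f"surprise -ln p(o) = {-math.log(evidence):.3f}")
```

The overconfident belief explains the observation best (highest accuracy) but pays a large complexity cost, so its free energy is the highest of the three; the exact posterior attains the minimum, which equals the surprise -ln p(o).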

Much as the brain runs on remarkably small voltages, this self-organizing approach mirrors natural intelligence and has correspondingly lower energy requirements.

The adult human brain is remarkably energy efficient, operating on just 20 watts, less power than a lightbulb. In contrast, popular AI systems like ChatGPT have massive energy demands. When first launched, ChatGPT consumed as much electricity as 175,000 brains. With its meteoric rise in use, it now requires the monthly energy of 1 million people. This large carbon footprint stems from the vast computational resources machine learning needs to process huge amounts of training data. The University of Massachusetts Amherst reported that “training a single A.I. model can emit as much carbon as five cars in their lifetimes.” That figure refers to a single training run, yet models are trained repeatedly as they are improved.

The exponential growth of AI is colliding with sustainability. Active Inference AI offers a route to intelligence that minimizes complexity and energy use, promising a profoundly scalable and cost-effective path to artificial general intelligence. By mimicking biology, we can nurture AI that thinks and learns while protecting the planet.

The blueprint laid out in the VERSES whitepaper aligns firmly with our understanding of natural cognition. By distributing intelligence while retaining variety and knowledge gained through individual frames of reference, it provides a pathway beyond narrow AI towards adaptable, trustworthy and beneficial systems exhibiting general intelligence. It’s a system that safeguards and respects individual belief systems, socio-cultural differences, and governing practices.

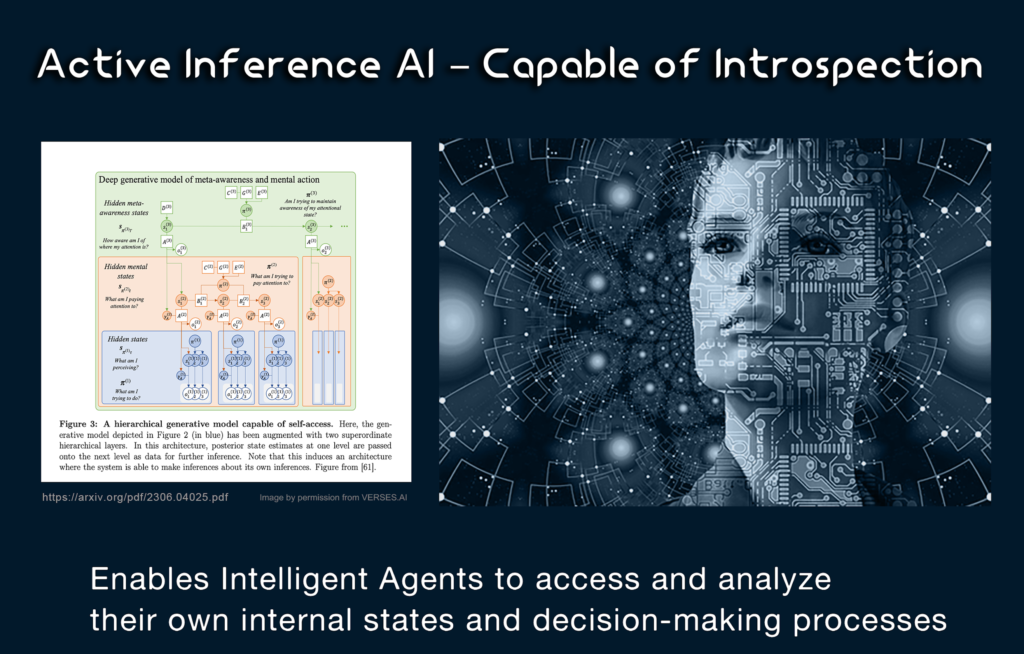

Crucially, Active Inference AI has also demonstrated the ability to introspect and report how it reaches its conclusions. This kind of “Explainable AI” allows a level of human oversight that opaque machine learning models do not.
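Part of what makes such models inspectable is that every conclusion traces back to explicit priors and likelihoods. The Python sketch below is a plain Bayesian update, assumed here for illustration, that returns an audit trail alongside its answer; the valve scenario, hypothesis names, and numbers are hypothetical, and this is not VERSES’ self-reporting mechanism.

```python
import math


def explain_update(prior, likelihoods, observation):
    """Bayesian belief update that returns a human-readable audit trail.

    prior:        dict mapping hypothesis -> p(h)
    likelihoods:  dict mapping hypothesis -> {observation: p(o | h)}
    """
    joint = {h: prior[h] * likelihoods[h][observation] for h in prior}
    evidence = sum(joint.values())  # p(o)
    posterior = {h: j / evidence for h, j in joint.items()}
    # One line per hypothesis showing exactly how its posterior was obtained.
    report = [
        f"{h}: prior {prior[h]:.2f} x likelihood {likelihoods[h][observation]:.2f}"
        f" -> posterior {posterior[h]:.2f}"
        for h in prior
    ]
    report.append(f"surprise of {observation!r}: {-math.log(evidence):.3f} nats")
    return posterior, report


if __name__ == "__main__":
    # Hypothetical scenario, for illustration only.
    prior = {"valve_open": 0.5, "valve_closed": 0.5}
    likelihoods = {
        "valve_open": {"flow_detected": 0.9, "no_flow": 0.1},
        "valve_closed": {"flow_detected": 0.2, "no_flow": 0.8},
    }
    posterior, report = explain_update(prior, likelihoods, "flow_detected")
    print("\n".join(report))
```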

Image by Author

Combined with HSML for translating human law or norms into code, the Spatial Web architecture enables human governance to scale alongside AI capabilities.

The recent results from Riken substantially boost the credibility of this futuristic framework for AI based on the physics of the brain. The empirical confirmation of the biological mechanisms behind human learning lends increased confidence that systems built on the same principles can achieve human-like robust and generalizable intelligence.

By rooting AI firmly in the fundamental laws governing living cognition, this pioneering approach ushers in a new era of explainable, programmable, and collaborative AI, providing a compass for navigating the promise and perils of artificial general intelligence, with an unprecedented cooperation between human and machine cognition.

Spearheaded by VERSES AI, this groundbreaking whitepaper outlines a revolutionary roadmap for artificial intelligence based on collective, distributed intelligence unified through shared protocols within the Spatial Web.

This approach fundamentally diverges from current machine learning techniques, which train standardized neural networks on big data.

Powered by Dr. Karl Friston’s Free Energy Principle, now proven to drive learning in the brain, their vision promises more human-like AI that is energy efficient, scalable, adaptable, and trustworthy. The Spatial Web architecture provides a framework for frictionless knowledge sharing and oversight through explainable AI models. Combined with the recent neuroscience findings and breakthroughs, this bio-inspired approach could represent the future path to beneficial Artificial General Intelligence beyond narrow AI, with human values guiding its growth through integrated governance. It could catalyze the next evolution of artificial intelligence toward more human-like capabilities.

Visit VERSES AI and the Spatial Web Foundation to learn more about Dr. Karl Friston’s revolutionary work with them in the field of Active Inference AI and the Free Energy Principle.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind the scenes access to the most cutting edge AI news and information.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

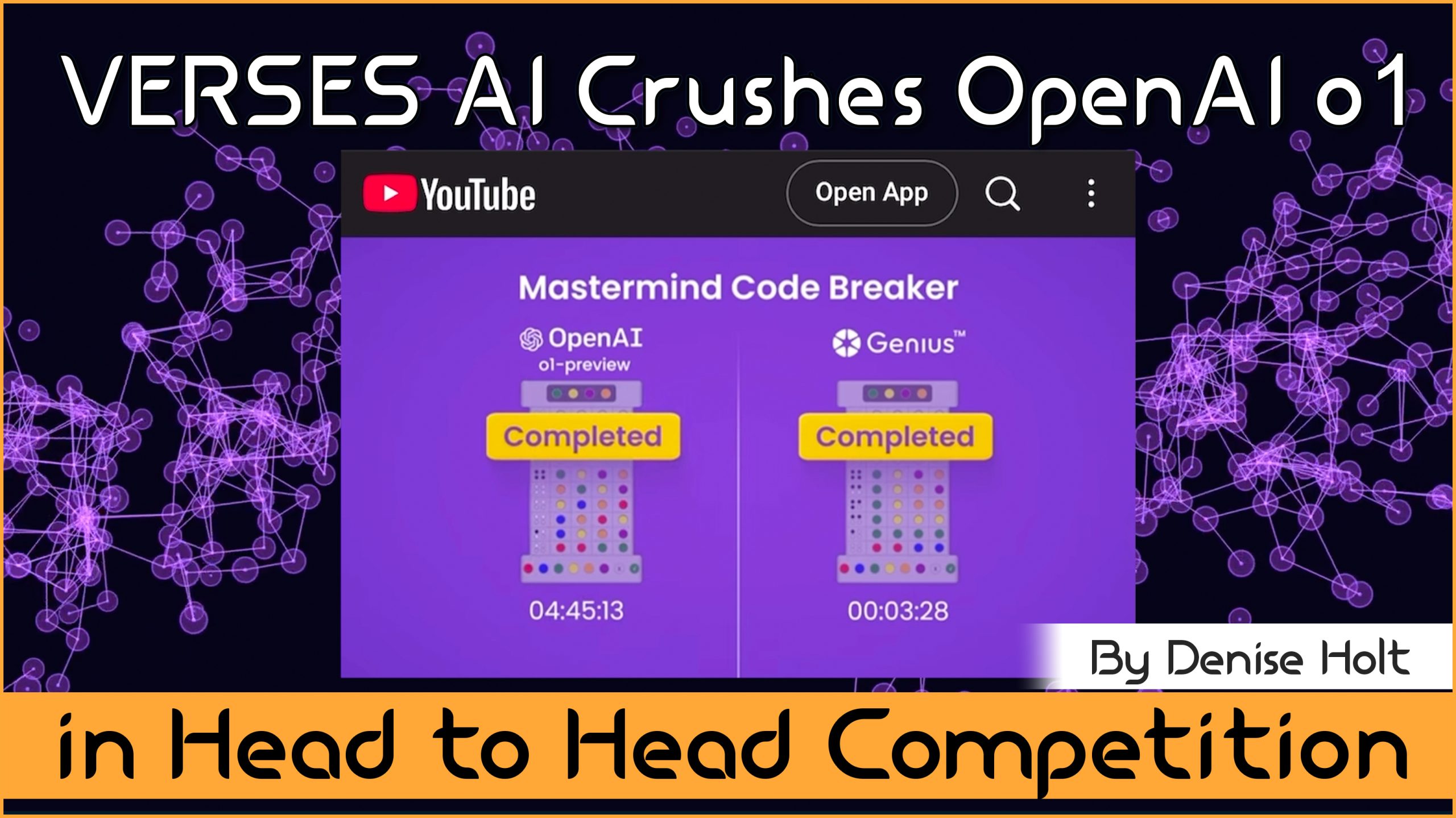

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...