Active Inference & The Spatial Web

Web 3.0 | Intelligent Agents | XR Smart Technology

Exclusive Inside Look: One-on-One with Cortical Labs' Chief Scientist - From DishBrain to CL1

Spatial Web AI Podcast

- By Denise Holt

- April 16, 2025

The concept of merging biological components with traditional silicon-based computing might sound like pure science fiction, but for Cortical Labs, it represents the cutting edge of what is possible in “artificial ‘actual’ intelligence.” In my recent in-depth, one-on-one conversation with Brett Kagan, Chief Scientist at Cortical Labs, we explored the field they call synthetic biological intelligence (SBI) and the technology behind their latest innovation, the CL1. The CL1 is the world’s first commercially available biological computer, fusing lab-grown human brain cells to silicon chips.

Origins and Vision

Cortical Labs was founded in 2019 around a core question: what fundamentally drives intelligence? As Kagan explains, “While people, especially back then, were looking at a lot of different silicon methods, at the end of the day, the only ground truth we had for true generalized intelligence were biological brain cells.” That observation is the central motivation behind Cortical Labs’ approach.

“We asked ourselves the question, what if we could actually leverage these instead of just modeling them? Would that give us a more powerful processor?” — Brett Kagan, Chief Scientific Officer, Cortical Labs

From DishBrain to CL1

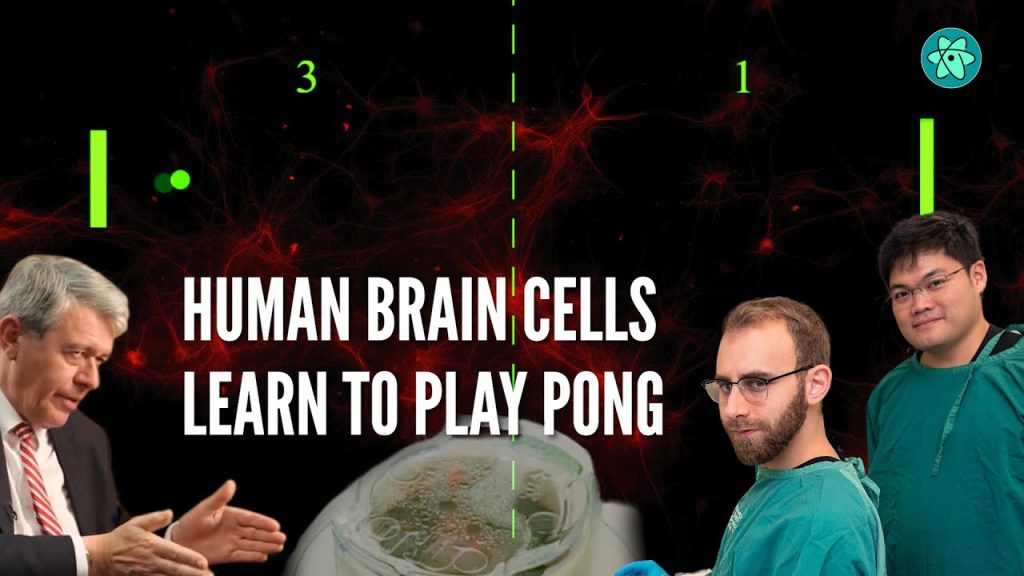

Cortical Labs first captured attention with their “DishBrain” experiment in 2022, where lab-grown neurons successfully learned to play Pong by processing predictable versus chaotic electrical signals. This experiment validated their concept but left questions about scalability and practical applicability.

Notably, the experiment’s training methods were designed as a test of Professor Karl Friston’s Free Energy Principle — a theory suggesting intelligent systems act to minimize surprise or unpredictability in their environments.

Cortical Labs developed an approach where neurons received unpredictable signals every time they “got it wrong,” compelling them to adapt their behavior. Kagan described this as a critical experiment: because the team had agreed in advance with the theory’s proponents that it was a fair test, it would either provide evidence in support of the Free Energy Principle or challenge its validity. According to Kagan, the results provided remarkable support for the theory, indicating that the neurons structured their behavior to minimize unpredictability.

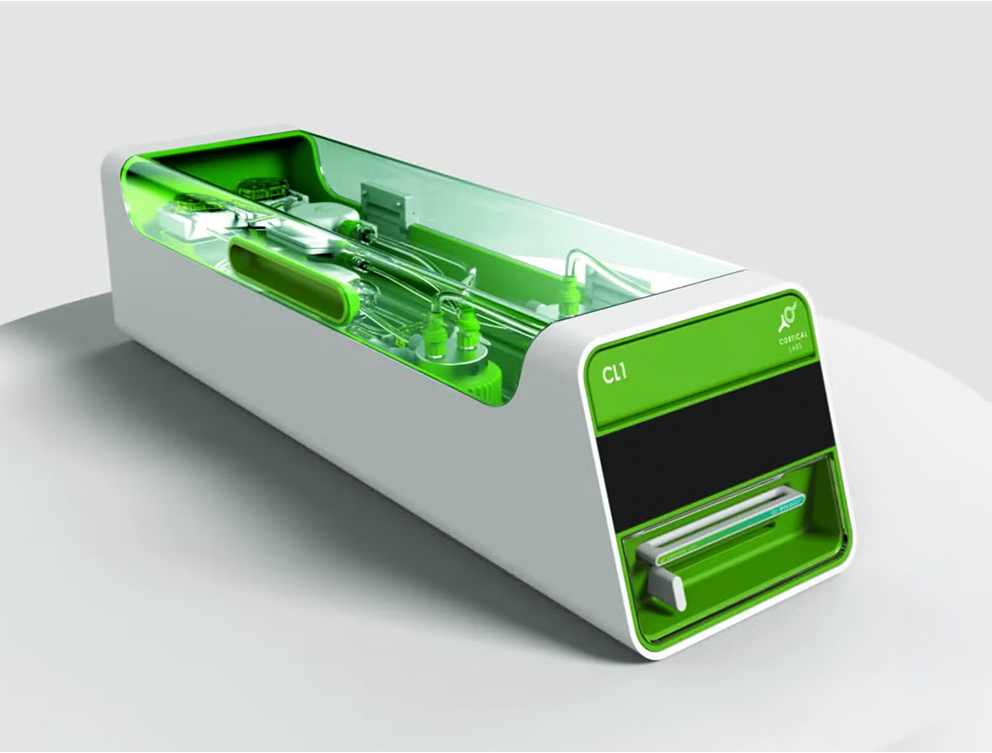

In March 2025, the company announced its new computing system, the CL1, described as a self-contained “body in a box.” The system integrates living neurons with silicon chips, creating adaptive, self-learning networks placed on a grid of 59 electrodes. The neurons originate from induced pluripotent stem cells (iPSCs), which can develop into any type of cell. In this case, they are differentiated into neurons that form learning networks.

The Science Behind CL1

At the heart of CL1 is the interplay between biology and silicon, bridged by electricity — the “shared language” of both neurons and microchips.

“One of the really nice things is that the shared language between neural cells and silicon computing is electricity. Both of them ultimately use electricity in some way to communicate. And so it’s this bridge.” — Brett Kagan, Chief Scientific Officer, Cortical Labs

The neurons are kept alive in a specially engineered environment mimicking human physiological conditions, utilizing carefully managed media for nutrients, temperature control, and filtration systems. Remarkably, neurons in the CL1 routinely survive for six months or more, with aspirations to extend that lifespan significantly.

According to Kagan, keeping cells alive for more than six months is standard, and the team has regularly maintained cultures for over 12 months. He adds that most experiments run for a couple of weeks to one or two months, so current survival times already cover the large majority of use cases in areas such as pharmaceuticals, robotics, and personalized medicine.

Training and Learning: Active Inference and the Free Energy Principle

Training neurons to learn and adapt hinges significantly on theoretical insights from Professor Karl Friston’s Free Energy Principle and Active Inference frameworks. These theories propose that intelligent systems naturally act to minimize surprise or unpredictability within their environment. In practical terms, Cortical Labs employs methods where neurons experience predictable and random signals as feedback, prompting the networks to adjust their behavior toward more predictable outcomes.
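To make the feedback idea concrete, here is a minimal toy sketch in Python. It is not Cortical Labs’ code or API. The electrode count, the function names, and the binary stimulation patterns are all hypothetical, chosen only to illustrate the contrast between predictable feedback after a “hit” and random feedback after a “miss,” and how that contrast shows up as information entropy.

```python
import math
import random
from collections import Counter

N_ELECTRODES = 8  # hypothetical number of feedback electrodes, not the CL1 layout

# Hypothetical "predictable" feedback: the same pattern on every hit.
PREDICTABLE_PATTERN = (1,) * N_ELECTRODES


def feedback_stimulus(hit, rng):
    """Return one stimulation pattern (a tuple of 0/1 values, one per electrode).

    A hit yields the fixed pattern; a miss yields random noise.
    """
    if hit:
        return PREDICTABLE_PATTERN
    return tuple(rng.randint(0, 1) for _ in range(N_ELECTRODES))


def empirical_entropy(patterns):
    """Shannon entropy in bits of the observed distribution of patterns."""
    counts = Counter(patterns)
    total = len(patterns)
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


rng = random.Random(0)  # seeded so the sketch is repeatable
hit_feedback = [feedback_stimulus(True, rng) for _ in range(200)]
miss_feedback = [feedback_stimulus(False, rng) for _ in range(200)]

print("entropy after hits:  ", empirical_entropy(hit_feedback))
print("entropy after misses:", empirical_entropy(miss_feedback))
```

In this toy setup, the feedback after hits carries no entropy because it never varies, while the feedback after misses is high in entropy. A system that acts to reduce unpredictability in its inputs would therefore be pushed toward actions that produce hits, which is the intuition Kagan describes.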

Interestingly, while other companies like FinalSpark have employed neurotransmitters like dopamine as chemical rewards in neuron training, Kagan explains Cortical Labs’ strategic focus is on scalability and nuanced control.

“I think the way you can go with that ultimately is fairly limited, especially if you want to start to reward discrete units… So I think rewarding neurons with dopamine is perfectly good as a lab experiment. But how would you scale this into a more complex device?”

They prefer to understand the fundamental biological mechanisms behind neurotransmitter signaling and replicate them through electrical feedback rather than directly introducing chemical rewards.

Energy Efficiency and Sustainability

An exciting advantage of synthetic biological intelligence lies in its inherent energy efficiency. Kagan notes that CL1 racks consume less than 1 kilowatt of power — a mere fraction of the power required by conventional AI data centers. This dramatically reduced energy footprint could significantly influence the future of sustainable computing, making large-scale deployments economically and environmentally feasible.

Practical Applications: Transforming Industries

The potential applications of CL1 are vast. Key areas of impact include:

- Drug Discovery & Disease Modeling: Rapidly accelerates research while reducing reliance on animal testing.

- Personalized Medicine: Utilizes patient-derived cells for customized treatment strategies.

- Robotics: Enhances robotic intelligence with biological learning capabilities, providing human-like adaptive responses.

The flexibility of CL1, complete with a programmable bidirectional interface and a robust Python API, empowers researchers to design and conduct a wide array of custom experiments. This functionality is available both through physical unit purchases for labs and remotely via Cortical Labs’ “wetware as a service” cloud-based model.

Ethical Considerations

Brett Kagan addressed the ethical implications of Cortical Labs’ research openly, stressing that, despite their biological origins, these neuron networks are not conscious. He stated that the very first paper Cortical Labs published was an ethics paper, and that since then the company has collaborated with numerous independent international bioethicists, philosophers, and regulatory experts to continuously address ethical concerns. On their commitment to responsible development, he states:

“We pretty much always have a paper ongoing in this area, working with independent people to make sure that this research can be developed and applied responsibly… We’ll continue to work on it because it’s the right thing to do.”

However, they openly acknowledge the complex ethical questions raised by their research, ensuring transparency and responsible oversight as their technology advances.

“I’m not going to say that there aren’t ways where companies could do things unethically, and I do have some concerns about some things going on with what other people are doing in other areas. But you can also do it correctly and openly. And that’s the approach we’re taking.”

Future Directions: Scaling Up and Beyond

As Cortical Labs moves forward, scalability remains a priority. The ultimate vision includes fully integrated “server racks” of CL1 units capable of supporting complex research and industrial applications. Challenges remain, particularly in refining life-support systems and optimizing neuron integration, but the trajectory is clear — synthetic biological intelligence is positioned to revolutionize the technological landscape.

Looking further ahead, Kagan envisions synthetic biological intelligence dramatically transforming our approach to AI and computing over the next decade, offering unprecedented adaptability and efficiency.

Cortical Labs’ CL1 represents a radical leap into a future where biology and technology fuse seamlessly. By harnessing the adaptability, energy efficiency, and inherent intelligence of living neurons, synthetic biological intelligence promises a new era of technological advancement with profound implications across industries.

Don’t miss my full podcast interview with Brett Kagan on the Spatial Web AI Podcast where we go deep into these topics, and more!

Huge thank you to Brett Kagan for being on our show!

Connect with Brett Kagan:

LinkedIn: https://www.linkedin.com/in/brett-kagan-6ba996146/

To learn more about Cortical Labs, visit: https://corticallabs.com/

Episode Transcript:

Speaker 2 – 00:13 Hi, everyone, and welcome to the Spatial Web AI Podcast. Today we have a very special guest, Brett Kagan. He is the chief scientist for Cortical Labs. And if you’re not sure who Cortical Labs is, they just had a huge announcement back in March that they’ve launched the first biological computer, a technology that they call synthetic biological intelligence. And how they’ve achieved that is by fusing live neurons to silicon chips. So, Brett, welcome to our show. I’m so excited to have you here today, and I can’t wait to talk about this technology with you.

Speaker 1 – 00:54 Thanks so much. It’s a pleasure to be on the show.

Speaker 2 – 00:56 Yeah. So tell me, what is Cortical Labs? You’re the chief scientist there. How long have you been there? How long has Cortical Labs been building in this space? And, you know, how did you guys come to the choice of using live neurons with silicon chips?

Speaker 1 – 01:16 So lots of questions there. Cortical Labs really kicked off in 2019, and there were just a couple of us, and we kind of had this question of what is the core thing that leads to intelligence? While people, especially back then, were looking at a lot of different silicon methods, at the end of the day, the only ground truth we had for true generalized intelligence were biological brain cells. We asked ourselves the question, what if we could actually leverage these instead of just modeling them? Would that give us a more powerful processor? And so we started off, and we wanted to see, could we actually, one, grow brain cells in a dish, which academic labs have been doing for a while? I did a PhD in that area.

Speaker 1 – 02:00 But then, two, the more challenging part, interact with them in real time to teach them to do anything in particular. So we started out with this game Pong, because it was just this classic game. DeepMind did it for their first reinforcement learning agent.
And we thought if we could get them to get better at Pong, that would be a really compelling proof of concept. And so this is sort of the journey we started down.

Speaker 2 – 02:25 That’s awesome. I actually remember the DishBrain announcement back in 2022, I think, and I found it so fascinating. So can you tell me a little bit of how this works? How do you take these live neurons? How do you keep them alive? How do you merge them with the silicon? Like, how does that work? It sounds really complicated.

Speaker 1 – 02:52 Look, there’s always a lot of complexity in some of these things. The nice thing when you’re working with biology, though, is, as I was saying, there is this core of knowing what can happen. So we know, for example, that brain cells can grow and live. Like, human brain cells we know can live 120, 122 years, at least whatever the oldest human is, so we know it’s possible. Now, we can’t do 122 years in the lab yet, but that means we’re starting from a position of how, not if. And so, for example, and again, there is a history of cell biology in huge numbers of academic labs who have actually done a lot of this difficult work and laid the groundwork that we can build on to keep cells alive.

Speaker 1 – 03:36 For example, there is what’s called media, which is a special nutrient-rich liquid that the cells actually sit in. We can use that, we can put cells down, keep them in special temperature-controlled incubators that manage their gas and everything else. We can use media that we then have to manually and very carefully, using proper techniques, replace in the dish. Then we can basically make sure that we maintain these cells. To interact with them and to keep them actually growing on the silicon, we have to use special molecules that actually allow the cells to adhere and attach to this glass substrate.
But once you do that, one of the really nice things is that the shared language between neural cells and silicon computing is electricity. Both of them ultimately use electricity in some way to communicate. And so it’s this bridge.

Speaker 1 – 04:29 And what we had to work on in this DishBrain protocol, which is our first prototype that we did, and as you said, announced in 2022, was figure out how do you best shape that language to get something useful out of both systems. That’s what we set out to do. And we worked with some amazing scientists, both domestically as well as internationally, particularly with Professor Karl Friston, who’s based at UCL, who had some really interesting theories on how cells will adapt. With that, we were able to basically explore: if you pattern the right information into the cells, would they adapt their behavior, in this case their electrical activity, to actually get better at playing the game Pong?

Speaker 2 – 05:10 So interesting. So then can you go a little bit deeper into how you managed that with these neurons? How does the free energy principle play into that? How were you able to get them to learn the game?

Speaker 1 – 05:27 Yeah, sure. So essentially we were interested in creating something called embodiment. Now, embodiment sounds kind of like one of these scary words when you talk about this, but it’s not. This doesn’t mean consciousness or anything like that. But a body, ultimately, when we talk about a body, you can talk about a cell body, a human body, whatever. It’s ultimately just this concept of a barrier between an internal and an external world, statistically speaking. And this is talked about in the free energy principle, using different words, but it’s a very similar concept. And so ultimately, what is it then that creates embodiment? Having an internal action that can be differed from the external information.
And when you tie together the external action and the internal information, you can create this embodiment, this closed loop, which is something we all live in.

Speaker 1 – 06:22 We all live in a closed loop. When I move my hand, I can feel the resistance of the air. I get my mechanosensory, kinesthetic sensations that feed back through it. All of these things show that there’s an action and a response to the decision I’ve made internally. Now, we were interested firstly in, can we create this closed loop? And so for that, what we had to do was be able to send electrical information into a dish and then, in real time (in this case, when I say real time, we’re talking about a 5 millisecond loop, although we’ve improved that significantly, as we can discuss), process what that electrical activity does to the neurons, and then apply that response from the neurons to an external world, which then will update the information we’ll put in in the future.

Speaker 1 – 07:15 And so this circular loop, the same as we all experience, we had to create that for neurons in a dish. Now, a closed loop in and of itself, or embodiment in and of itself, is not enough to trigger learning. Right. You actually need to give them some reason. Why would they change their behavior? I mean, there’s no innate Pong-playing drive in brain cells. It would be bizarre if there were. So this is where we touched base with great computational neuroscientists like Professor Karl Friston, and we discussed their theories, such as the free energy principle. And what he basically has proposed and done significant work on is this idea that neurons, or not just neurons, but any intelligent system, will actually work to minimize what he calls the surprise or the information entropy of how it acts in the environment.

Speaker 1 – 08:10 Now what does that mean in more concrete terms? It basically means that again, there’s an internal state trying to predict the external world.
And the more that it can align its predictions with the world, the more control, the less surprise and the less of what we formally would call information entropy arises between these two states. So we looked at that and we said, all righty, this is all well and good, but how would you actually operationalize this? And the simplest, silliest idea we could come up with, it didn’t work. But the second simplest and silliest idea we came up with was, well, what if we just treat it this way: random noise is something that can’t be predicted. And so if our goal was to predict the environment, there’s two things we can do to get better.

Speaker 1 – 09:06 We can either be able to get better at predicting the outcome. So again, let’s say I reach over for a cup and I pick the cup up. I predicted that this action would lead to this outcome. Now if I knock the cup over, I can either get better at predicting that moving in this way will knock the cup over, not ideal, but fine, or I can actually get better control in my action. So what we said is, what if we make it that every time it gets it wrong, it gets information that is different, that can’t be predicted. The only thing the system could do would therefore be to change its actions. So this is what we thought we’d do. We thought we’d just give it random information every time it got something wrong.

Speaker 1 – 09:50 And if the cells don’t like that, they’ll change what they’re doing to actually get better at playing the game. And I say if because we didn’t know. And this is one of the reasons we wanted to work with leading experts in theories, because we thought, we’ll do the test. If we all agree it’s a good experiment, we have to accept the result. And the free energy principle is somewhat controversial. Some of the theories from it, such as active inference, do raise some controversy in the field.
And so we thought either we’ll find evidence supporting it, in which case that’ll be really exciting for people, or we’ll find evidence against it. And because we’ll have agreed in advance with the scientists working on this that this is a good test, they’ll have to accept the results, that there are challenges for the theory.

Speaker 1 – 10:32 Now, in the end, we actually did find remarkable support for this at multiple levels that we won’t go into in depth; the papers have been published. So we actually did find strong results, which was super exciting. But for us, our main focus was set up a good experiment and see what those results were. And ultimately we found that when you do structure the information landscape, the world, for the neurons in such a way that they have these contingencies, they will actually get better at playing games like Pong over a pretty rapid time course. And that was exciting.

Speaker 2 – 11:03 That’s really cool. So let me ask you then, so DishBrain, this was back in ’22, when you were doing these experiments. And now you’ve launched CL1. Right. And so maybe talk a little bit more about what CL1 is as opposed to what DishBrain was and what happened internally in your company. What was the growth like from there to there? What can CL1 do that has come about with your technology?

Speaker 1 – 11:37 Yeah. So like, if we think back before, what were the questions you asked me first up? How do you keep them alive? Right. How do you interact with them? How do you make this easy? And you know, basically I had to give you, I tried to scale it down as much as I could, but a pretty technical answer. Right now, if I was to say, like, hey, you know, here’s some cells, go interact with them, you’re going to be like, what am I going to do with this, Brett? Right. So we basically wanted to solve this problem. We wanted to say, how can we make this technology accessible for people to be able to use it?

Speaker 1 – 12:10 And so we wanted to build a device that abstracted away the complexity of programming an environment for the cells, improve the capabilities, bring down that closed loop latency, because 5 milliseconds is good, but we want to have it. That’s not, we don’t operate on such a lag. We actually work far quicker. So we wanted to bring that down. We wanted to be able to have the cells be kept alive at the right temperature without having to keep them in an incubator and manually change their media every day or two. We basically wanted to build a device that just made this easy for ourselves. And then we realized as we were building it for ourselves, it could be for everyone because we’re taking away all these difficulties. And for us this really mirrored the nascent computer industry.

Speaker 1 – 12:55 So when you started out, they had computers back in the 60s, 70s, but they were these things that sat in laboratories and required a team of PhDs to run. And that’s kind of where we’re at, or were at until very recently, with the idea of synthetic biological intelligence. So we could grow it and we could have kept doing that and we could have kept making advancements. That would have been fine, but not at scale and not accessibly for other people who weren’t experts. So we wanted to build out the CL1 device that would be self-contained and simplify the interactions with the cells, even if people weren’t experts, and abstract away the need to grow cells yourself. We can actually put these onto the cloud and people can access living biological neurons through the cloud.

Speaker 1 – 13:43 Or if you have a wet lab, if you’re a scientist or a pharma company, you can buy server racks of these because we’ve dropped the price many times over what it would have cost before to be able to scale this and have it in your hands.
Eventually we want to make this accessible enough that people could have it even without a wet lab, ideally in their home. That’s still a little bit away. But for now people have cloud access and those with labs can afford some of these devices at a scale that was previously just too expensive.

Speaker 2 – 14:13 So how long do the neurons stay alive in the CL1? And is it something that even if someone buys an individual unit, do they have to do anything to help keep these alive?

Speaker 1 – 14:26 Yeah. So at the moment, as I say, to buy a CL1, you have to have a wet lab, you have to have a biosafe, biosecure facility to grow them in. So it’s really for scientists and biotechs and pharmas at the moment. We hope to change that in the future, but not in a future we’re going to put a timeline on.

Speaker 2 – 14:48 Yeah, yeah, of course, yeah.

Speaker 1 – 14:50 Like whether that’s two years or 20 years away, we couldn’t answer. Right now we have some pretty good ideas on how to do it, but it depends on a whole bunch of things. But keeping cells alive, you know, is not the challenge. As I was saying, like you can do it even just with traditional methods. We have regularly kept cells alive for over 12 months. Keeping them alive more than six months is incredibly standard. Often by that time we’ve finished our experiments or finished whatever work we’re doing with them. So we have previously kept cells in our prototype units again for about that five and a half month period, at which point we find we need to replace our filters.

Speaker 1 – 15:28 We are doing some more longer term validations with the actual CL1 devices now, not anymore the prototypes, as we’ve just started manufacturing them. So we expect again that sort of five, six month survival is going to be very readily achievable. Our goal is to be able to get to ideally a two to five year survival.
But there’s going to be a lot of work still to go into improving some of these methods. But for most people, experiments go over a couple of weeks to one or two months at most. So we should be resolving what 99.9% of people want to do with what we already have.

Speaker 2 – 16:05 So I think it’s important to kind of let the audience know where these neurons come from. And I know you guys are using stem cells that could be turned into any kind of cell, right? So you’re turning them into essentially brain cells, is that correct?

Speaker 1 – 16:22 Yeah, yeah. So we can grow a variety of different brain cells. So what we would call neurons and a range of those neurons, and then also the supporting cell type, you know, what people often refer to as glia and astrocytes and similar things like that.

Speaker 2 – 16:37 And why would you want to use different types? Like what would be the use cases for different types of brain cells?

Speaker 1 – 16:47 So ultimately our brain consists of many different types of cells and they have to work together to actually achieve the complexity that we have. And not only do we not fully understand it, I’d say we probably don’t even mostly understand a lot of this. Some cell types, we have a pretty good understanding of some of their actions. The role of dopaminergic neurons in Parkinson’s, for example: we know that Parkinson’s is a loss of a very specific type of neuron. Although there’s again a lot of complexity there when you dive into it. But we do know that one single cell type doesn’t make the brain. And we’ve referred to this sometimes as the search for the minimal viable brain, what I like to call sometimes the MVB. And we’re trying to figure out exactly what that is.

Speaker 1 – 17:31 What is the least number of cells we can do to have a stable and controllable system, ideally one that also holds some amount of memory.
So for example, with our Pong game, we actually weren’t able to hold any meaningful memory in these cells because it was all very short term plasticity. Now there’s pros and cons to that. A lot of people just view the cons and go, well, it can’t remember. No, but it did learn incredibly rapidly each time. And the ability for it to not actually store too much meant that you could, let’s say for drug discovery, you don’t want to have to grow a whole new neural culture every time you try a new drug. The fact that these systems actually reset themselves almost back to a naive state pretty quickly can be advantageous.

Speaker 1 – 18:14 If you want to do drug discovery or if you want to iterate through more devices. If they remembered everything they ever went through in such a way that it changed the system forever, it would actually be very expensive and time consuming to have to grow a new culture every time. On the other hand, there are obvious drawbacks if the system doesn’t hold memory, because you have to retrain it every time. So there’s pros and cons to it. And ideally what we want to do is have the choice for what type of culture we grow for each purpose. And so we’re currently searching for that: how do we grow it to have it integrate memory? Can we improve the performance? Because while we did get learning in Pong, it wasn’t beating, you know, you or me in the game by any means.

Speaker 1 – 18:53 I suspect a motivated mouse would probably still be outperforming these neurons, as it should, because we only did plate down about a bee’s worth of neurons, and a flat bee at that. We had an incredibly simple system. We want to improve that complexity. We don’t think the answer to that is simply go bigger and bigger, as some people have proposed. I think that’s a very crude approach because ultimately, as I said, we had a bee’s number of cells, but not a bee’s level of intelligence.
Again, that’s because bees are highly structured with a number of different cell types that all work really well together. So that’s what we’re currently searching for, this minimal viable brain. What is that? And from that we can then grow once our understanding has matured.

Speaker 2 – 19:36 I have so many questions. Okay, so one question, and I don’t even know if this is really even an appropriate question as far as the technology goes, but since there’s this struggle to keep these neurons alive, will these neurons ever get to the point where they’re self-replicating?

Speaker 1 – 19:53 Neurons are what we call post-mitotic, which means that in nearly all cases they don’t replicate. There are small pockets in the brain that do grow new neurons, but it’s by far an exception, not the rule, and quite limited in its capability. This is in many ways a good thing. And especially when people talk about safety, even though we’re very far away from that, obviously, with our capabilities, people do worry, oh, you know, what if this gets out of control? And so it’s not going to, because again, it’s not self-replicating. You build a device and it has to work in its own way.

Speaker 1 – 20:39 Now, is there a future where you could imagine setting up pipelines of stem cells to neurons? Because stem cells are, what we often call some of them anyway, an immortalized source, so they can be divided and treated. And you could imagine a pipeline to accelerate this, but we’re not doing that at the moment. We’re not there yet. It may not even be the right approach anytime in the foreseeable future. And in any case, as I said, these things do remain incredibly controllable.

Speaker 2 – 21:09 Yeah. So let me ask you then too, back to the concept of the minimum viable brain and what you were talking about with, you know, having these neurons that basically just start all over again and don’t really contain the ability to have memory.
And then I saw something about, you know, one of the potential use cases that are being explored with this. So, you know, there’s a lot of stuff with drug discovery and lab work, but also there’s a robotics use case. Right. So is that something that you’re exploring and does that have to do with this minimum viable brain? Because then I imagine you’re going to want robots that can have memory, you’re going to want them to be able to access different types of learning.

Speaker 1 – 22:01 We definitely want the capability for that. Although, and I think this is the future really of the technology. A lot of people think of the idea of, oh, we want to have. So I say the word minimal viable brain and that’s one context, but the other context is that we don’t need to be constrained by physiology. Our brains are the size and shape that they are, because if they were any larger, we couldn’t be born. Evolutionarily, this is the case. Basically human biology, when you get down to it, and our development is centered entirely around how big can you get the head, as much brain as possible in the best way as possible, prune back everything you don’t need.

Speaker 1 – 22:41 I mean, we’re born without a whole bunch of stuff that our brain then has to go and develop that takes up space, and still, how big can you get it and still be born? We don’t need to have that limitation, but also we don’t even need to have the framework of a human brain. And so this is an approach that I’ve lately taken to calling bioengineered intelligence. So, we were part of a group led out of Johns Hopkins University, we were part of this publication that talked about something called organoid intelligence. Organoid intelligence is this idea of we want to have a brain in a dish for brain-like purposes, which is great. It’s a super valuable area, especially I think in the areas of disease modeling and drug discovery, because it does mimic as closely as we can do.

Speaker 1 – 23:26 And people want to get closer and closer to human physiology. But for robots, that’s not necessarily the case. We can take this bioengineered intelligence approach to actually build out a system of neurons, which we use as basically very smart information processors. There’s a whole bunch of deep theory we could dive into about why these are very smart and why they work more energy efficiently and more computationally efficiently than anything we can do with silicon alone. But it doesn’t have to be a human brain in a dish. That means you can then integrate it much better with existing silicon. So you end up with this biohybrid system where maybe we want to store some memory in there. But you know what, frankly, hard drives are pretty good at storing memory in a highly preserved manner.

Speaker 1 – 24:13 What if you had the information processing, the dynamic, quick, fuzzy data processing, occur in neurons, which are optimized for it, while memory is stored in some sort of lossless system, and they just work together?

Speaker 2 – 24:30 Interesting.

Speaker 1 – 24:31 People often talk about how human memory is amazing. No doubt about it. It’s also faulty.

Speaker 2 – 24:38 Yeah, I was just going to say it’s the most unreliable thing.

Speaker 1 – 24:42 Yeah, look, there are certainly limitations to it. I mean, our ability to recall what we can recall over such long periods of time is amazing. The length of time we can store memory actually does exceed anything that we have built so far. I think the next closest thing you get to is having to physically transcribe things to get close to how long humans can preserve it. Hard drives tend not to last as long as that. But yeah, as you say, our memories are subject to error. We’re talking about LLMs having hallucinations, which they do, but we do that as well with our memories.
The number of times my wife and I have a discussion along the lines of: “You said this.” “I don’t know that we said that.”

Speaker 1 – 25:28 “No, we agreed to this.” We’re both intelligent people. She’s a scientist as well. And yet we’re like, what happened two days ago? What did we agree to have for dinner?

Speaker 2 – 25:39 Yeah, I was just going to say, even if you take two siblings in a family, they’ve experienced the same things through their life in their family, but they will tell it differently.

Speaker 1 – 25:49 Exactly, exactly. So that’s not to say that we’re not interested in memory, or that I don’t think it would be good or useful, but it is to say, and I say this a lot, let’s use the right tool for the right job. I think the problem we have at the moment in AI research, and this has been the problem for a while, is that fads come and go, and when the fad is there, that’s the hammer, and everything then becomes a nail. At the moment, it’s transformers and LLMs, and you can do amazing things with them. But the solution to the problems people have is now: let’s just get a bigger hammer. Let’s not run it with a thousand GPUs. Let’s run it with a hundred thousand GPUs. A million GPUs. Okay.

Speaker 1 – 26:35 I mean, you can definitely get some merit from scale, but I think what people, even on the mainstream media side, realize now is that those performance gains are going to tail off, and you can only achieve so much by building a bigger hammer.

Speaker 2 – 26:49 Right, right.

Speaker 1 – 26:51 So it’s about exploring other parts of the toolset. Particularly with this bioengineered approach, you can create these highly structured collections of neurons that may bear very little resemblance to a human brain or a mouse brain or anything like that, but could be tightly integrated as part of a biohybrid system to achieve other things.

Speaker 2 – 27:10 Wow.
Now, you mentioned something a few minutes ago that I’d like to explore a little bit, and that’s the energy efficiency of this particular direction of synthetic intelligence. So maybe you could talk a little bit more about that. How is this different from what you see with deep learning, the giant data centers and all the GPUs, and how is this more energy efficient?

Speaker 1 – 27:37 I would say there are broadly two big ways that it’s more energy efficient. One is just cooling. I think we saw a tweet from Sam Altman the other day complaining that their GPUs were melting because of people’s excitement about ripping off Studio Ghibli art, which makes for very pretty pictures, but I don’t know if that’s a reason to be generating so much CO2 on the planet. But cooling is a factor. Biological cells need to be kept at a stable temperature, but they don’t overheat when they’re active, not in any reasonable sense. So you don’t have to cool these systems, and that’s a huge benefit. The second benefit is simply that the energy to run them is negligible. They basically, as do we, run off glorified sugar water.

Speaker 1 – 28:26 As I like to say, an apple gives us energy. We basically just create compost and we’ve got energy, so it’s negligible CO2 emissions. Obviously there’s a bit generated in the process of making these devices, but that’s the same for everything, and we can greatly improve that at scale. Other than that, the energy is almost negligible. We’re talking about hundreds of thousands to hundreds of millions of times less energy than your comparable GPU, and way less than the amounts being used now. It’s not even worth comparing.

Speaker 2 – 29:10 So what do you see as far as the future with this, as far as use cases?
Because I read that with the CL1 you’re creating these stacks, and you were talking a few minutes ago about offering the cloud service, or people can purchase them for their own wet labs. What do you see as far as the scaling for your company in this and the future? What does this look like, and who should be paying attention?

Speaker 1 – 29:38 I think the nice thing about this is that it’s a platform technology, so there are short-, medium-, and long-term horizons that we can unlock. The immediate ones, and we’ll hopefully have a paper coming out fairly soon once it’s through the peer review process, are basic neuroscience, drug discovery, and disease modeling. The paper is on drug discovery and disease modeling, in fact, but we’ve already published some work on basic neuroscience. Commercially these are actually not tiny markets, and they’re incredibly important markets as well. I know a lot of the time people get excited about, well, what can I, the everyday person, grab and hold right now? The reality is that all these great inventions that we can grab and hold right now came out of basic science research.

Speaker 1 – 30:26 So that’s the immediate step. The more medium-term step is more abstract information processing architectures, especially ones that we can integrate into silicon, as well as more advanced personalized medicine angles. For example, we currently use a type of stem cell called an induced pluripotent stem cell. What “induced” basically means is that you create the stem cell from an adult donor. So that is some blood, the same as you’d give to a doctor. That’s what we use. Some people use skin cells from a small skin sample. From that you can create this renewable stem cell line. Now the nice thing about that is it has your genetics.
Speaker 1 – 31:11 So if you have a disease, for example: my brother-in-law is unfortunately both non-verbal autistic, with an intellectual component to it, and has more recently developed epilepsy. They’ve been struggling to figure out which antiepileptic works best for him. Every time he has an epileptic fit, because of his comorbidities, he has a lot of challenges. The family has a lot of challenges. It’s difficult to get him to go to hospital. And the only thing they can do is use him as a guinea pig and see how often the seizures are happening. This is a complicated case, but it’s true for anyone who’s diagnosed with pretty much any neurological or psychiatric disease. They’re used as guinea pigs, trying a whole bunch of different drugs at a whole bunch of different dosages.

Speaker 1 – 32:00 Quite often, in fact, it takes years to even identify a working option. Most psychiatrists and neurologists generally agree that this is unlikely to be the best case, but it’s too hard to figure out what the best case is. What if we could grow cells from you that, because they have your exact DNA, express some components of how you would respond to these medications? We could just do a screen of 30 medications at a range of different doses, and then say in three or four months, hey, these are the top three candidates and doses. Now, we’re still very early in this journey, but what we have seen with our proofs of concept is pretty supportive of this.

Speaker 1 – 32:45 It’s a reasonable assumption to have. Personalized medicine has been explored in a lot of other labs, which have some pretty compelling evidence for it. And honestly, it would be affordable for healthcare systems to take this approach, compared with the amount of time and money that patients and doctors have to spend on this trial-and-error approach. So that’s something that can help as well.
As well, as I said, there’s some computational stuff that opens up in the more medium term. Long term, the big shiny goal that, as I said at the very start, inspired us was this idea of generalized intelligence. Whether it’s us or bees or my cat, who has just jumped onto my lap, they all show generalized intelligence to differing degrees. What if we can unlock that?

Speaker 1 – 33:31 It doesn’t have to be bound to physiology, but the properties, the fundamental building blocks of intelligence, could be harnessed to give us something far more powerful than what we can achieve with silicon alone.

Speaker 2 – 33:44 Wow. You have to admit, and I’m sure you get it a lot, that so much of this sounds incredibly science fiction. I’m sure that you get a lot of different reactions as far as public perception. So if you could say anything to the skepticism or any pushback that you’ve received around the public perception of what you’re doing versus what you actually are doing, what would you say?

Speaker 1 – 34:14 There are two areas, from very different perspectives, funnily enough, where people have some concerns, and we engage very openly with these concerns. One is, as you said, that it sounds like science fiction. To that I say the difference between science fiction and science is that in science, people are working on the problem. Science fiction ends and science begins when you start working on the problem. And we’ve been doing that. We’re not claiming that we’re there yet. We try to keep our claims grounded in fact, and we’re always very honest when we say: this is what we’ve done, this is the data we have, this is what we have some evidence for, and this is what we believe is possible, so people understand that there are those differences.

Speaker 1 – 34:56 And when we have proof of something, we’ll publish it openly, as we’ve done this whole time.
So that’s what I’d say to that: science fiction ends and science begins when you start working the problem. We’re working on it, and we’re working on it openly with multiple collaborators around the world. We hope to be able to make that progress. The other side, which is quite funny, often comes from people who dive more into the science fiction than the science, and they say, oh, well, what if these cells are conscious? What if they’re suffering? They’re forgetting that these are incredibly simple systems. As I said, we used a bee’s worth of neurons, but without a bee’s structure or the number of different cell types that a bee would have. So it’s even simpler than a bee. A cockroach has, say, a similar number.

Speaker 1 – 35:40 Maybe a cockroach is a better analogy for this. Most people don’t mind spraying a cockroach or stomping on it if it comes into the house. Quite often I say to someone, “Have you killed a cockroach or a mosquito?” “Oh, yeah.” “So you don’t actually care about a couple of hundred thousand cells, right?”

Speaker 2 – 36:00 I’m one of those people who needs someone else to kill it for me, because I can’t stand the crunching sound. So I’m not the right person to ask.

Speaker 1 – 36:10 But, you know, the ethics is a fair point. And despite often...

Speaker 2 – 36:14 I get what you’re saying.

Speaker 1 – 36:15 ...putting it into context, we do still engage really closely with the ethics. In fact, the first paper we ever published from Cortical Labs was an ethics paper. We’ve since worked with a large number of independent and international bioethicists, philosophers, and regulatory experts. We pretty much always have a paper ongoing in this area, working with independent people to make sure that this research can be developed and applied responsibly. So for the people with those concerns, we often have to bring it back to something closer to reality.
But to that extent, we are working on it, and we’ll continue to work on it because it’s the right thing to do. Often people are concerned because we’re a company. But actually a lot of the ethical benefits we talk about come out better because we’re a company.

Speaker 1 – 37:01 For example, people generally agree that accessibility and equitable access are good things, but the reality is that an academic lab will never make this technology equitable to access, because why would they? If they have an edge in research, they’re going to want to keep that edge. They’re also just not set up to make these things, even if they wanted to. But a company benefits by making its technology accessible to as many people as possible. It’s the same with personalized medicine: the more accessible we can make it, the better it is for us commercially. So this idea that it’s more concerning because we’re a company is actually misplaced. It’s the exact opposite. Helping as many people as we can is, we generally think, an ethically good thing.

Speaker 1 – 37:48 And in doing so, we also do the good thing commercially. So this is the interesting case where you can actually progress things in a good way as long as you take that approach. I’m not going to say that there aren’t ways companies could do things unethically, and I do have some concerns about what other people are doing in other areas. But you can also do it correctly and openly, and that’s the approach we’re taking.

Speaker 2 – 38:11 Yeah, and that’s very important. One of the things I saw that this opens up is the possibility of doing more tests without using animals in lab research. You’re able to test on these neurons.
And, you know, I think that when you’re talking about ethics, that sounds like a much more ethical way to go.

Speaker 1 – 38:37 You’re not testing on animals, and that is a big focus for us. I did do a lot of animal testing during my education, training, and PhD. And the reality is there are a lot of people who don’t like animal testing, and a lot of them are scientists. We do it when necessary, because sometimes it is necessary. My PhD was on trying to find ways to help babies who have had a stroke either just before or during birth, which is heartbreaking. You have to weigh those up and say, well, we want to fix this issue, so some short-term animal testing may be necessary. But what if it wasn’t necessary? What if you could avoid testing on animals altogether?

Speaker 1 – 39:20 Pretty much no scientist who does this enjoys it. And if they did, they’d be ostracized, frankly, because it’s an uncomfortable thing to do. So most scientists are eager for any alternatives to animal testing, because it’s a better approach and a more ethical approach. That’s one thing that we think this could be super helpful with in the long term. And the reality is, when you get down to it, animal testing for a lot of neurological and psychiatric issues is not even particularly good. It’s the best we have, and we’ve had to work with it. But what if we can build something better? That’s one of the big things that inspires us.

Speaker 1 – 40:06 We can reduce animal testing as well as improve the chance of getting better drugs and better understandings of diseases, and all these things as well.

Speaker 2 – 40:14 Yeah, I agree 100%. So what advice would you give to researchers or entrepreneurs who are looking to get into this field of biocomputing?
What advice would you have for them?

Speaker 1 – 40:32 I’d say reach out and say hi, because what we’ve done is try to build a way to make that as accessible as possible for them. Once you have the tools, you can go away and build with them. Within reason, we’re generally not here to dictate what people should and shouldn’t do. We are here to try and make it accessible. So if you think that this is going to be a great tool to help with, say, looking for drugs for depression, we can help with that. If you think that this is going to be a great tool for mining Bitcoin, it’s probably not what we’d pick, but maybe you have a way we never thought to approach it. We’re not going to be able to cover all the applications ourselves.

Speaker 1 – 41:13 So we need to approach that with humility and say, great, let’s share. Let’s see what we as a community can build. When we talked about those short, medium, and long terms, I told you that I don’t know if some of these things are 2 years or 20 years or 50 years away. Who knows?

Speaker 2 – 41:28 Right.

Speaker 1 – 41:28 The thing that will dictate it is how much the community comes together to solve these problems. That’s going to be, I think, the big difference.

Speaker 2 – 41:37 Yeah, that’s a great point. Okay, so you said to reach out to you. How can people do that?

Speaker 1 – 41:43 If you go to www.corticallabs.com, or just Google us or use whatever your preferred search engine is, we’ll pop up. There are forms there you can fill out, or you can send an email, and we’ll be in touch with you to see how we can progress things.

Speaker 2 – 42:02 Well, Brett Kagan, thank you so much for being on our show today. This has been a fun conversation and I’m sure our listeners are going to be fascinated.

Speaker 1 – 42:12 It’s been a pleasure to chat with you.
Speaker 2 – 42:14 Yeah, it was really great having you.

Speaker 1 – 42:15 And thanks so much.

Speaker 2 – 42:16 Yeah, pleasure to chat. Thanks everyone for tuning in.