The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

In a revelation poised to shake up the world of AI as we know it, VERSES AI, in collaboration with Dentons, the world’s largest multinational law firm, and the Spatial Web Foundation, today published a landmark industry report titled “The Future of Global AI Governance.” The report offers an innovative solution to the challenges facing AI governance and regulation, particularly the lack of explainability in current generative AI models.

The debate so far has seemed to offer only a few choices: unhindered innovation in AI technology; governmental oversight that holds AI developers responsible for the explainability of their AI systems’ results (an impossible task for machine learning AI applications); or the AI Act, the EU’s attempt at assigning “risk” category levels to various AI applications as an arbitrary means of mitigating potential risks without stifling AI advancement. The findings in this report offer another solution: grounding AI through a combination of global socio-technical and core technical standards that can be programmed into the AI itself, within a common network of intelligence, to provide intelligent agents with an accurate understanding of the world “in a meaningful, reliable, and explainable manner.”

According to today’s report, “Grounding [AI] facilitates meaningful understanding, collaboration, and ethical decision-making by bridging the gap between AI systems and humans, ensuring alignment with societal norms and values,” while enabling adaptability as the relationship between humans and AI continues to grow and evolve toward future advancements.

The explosion of Generative AI tools in the past seven months has sparked very serious concerns about what it means for human civilization if AI evolution proceeds unchecked.

Today, VERSES AI, Dentons, and the Spatial Web Foundation set forth an outline of the problems facing global AI governance, why we need to act now, and how to reconcile our sociocultural differences with a cutting-edge socio-technical solution. The report ends with a proposed call to action: The Prometheus Project, a global regulatory sandbox in which we can test emerging AI technologies in a controlled environment.

We’ve heard many calls for regulation. We’ve witnessed petitions to pause innovation until we can figure out how to control what appears to be uncontrollable.

Our uneasiness over the idea and possibility of AI technology evolving beyond our control is largely due to the fact that we are acutely aware of the difficulty in mastering that which is unknowable.

Machine Learning AI models are akin to “black boxes.” The processing within the neural net structure of transformer AIs like LLMs is obscured. The inner workings are a mystery. We cannot see how these layered algorithms crunch the input data, pass it through the patterns they learned from their training data, and then come to their conclusions for output. These are extremely complex and opaque programs.

So how do you manage an operation in which the processing is unobservable? How do you audit a program when the activities are unalterable? And how do you align a system of these machines, such as autonomous vehicles, with human values?

Much of the call for AI governance centers around the ability to explain the outcomes, i.e., holding the tech developers accountable for false, defamatory, or otherwise harmful information generated by these AI tools. Yet it is also acknowledged that this is asking for the impossible.

We also face many other serious considerations regarding regulatory or governance approaches for AI. Humanity exists over a vast international ecosystem. How do you determine one rule of law to protect or appease all, when there are so many governmental, cultural, ethical, and belief system differences from one country or region to the next? How do you create a fair and equitable approach, when needs and goals vary from one society to the next? How do you even begin to enforce alignment of human values without common ground?

The vast differences in various AI technologies also need to be taken into account. We will have AIs with various abilities and at different stages of development. How do you adjust the regulation requirements according to situational need?

These challenges are very real, and the concern is warranted, but the proposed solutions fall short of addressing these issues on a global scale.

Yet there is an urgency to find a solution and come to agreement; otherwise the market drives the solution, which raises a slew of other concerns. Given the rapid acceleration of AI technology, large, centralized corporations like Google, Meta, and Microsoft, along with a few capital-rich AI startups throughout the world, are naturally driven by profit. They charge ahead of one another in competition, seemingly without consideration of the potential future effects on humanity, for fear of losing the race to AI domination.

Lacking a coordinated approach, the regulatory landscape is fragmented, evidenced by divergent strategies and policies across various countries and organizations. Several initiatives aim to address these challenges, such as the G7’s call for international discussion on AI governance, the US White House’s proposal for an AI Bill of Rights, the EU’s AI Act, and efforts from the Future of Life Institute. However, these endeavors face serious issues around enforcement, comprehensiveness, and inclusivity, especially considering the rapid advancement and global implications of AI technology.

What we are left with is a lack of consensus and cooperation as to the optimal path forward, resulting in a medley of non-interoperable and arbitrary, unenforceable AI guidelines.

Oh, and did I mention the other elephant in the room? Logic tells us the path of evolution and innovation with cognitive architectures leads to an end goal of autonomous, self-governing systems. If we establish legal deterrents for non-compliance with these governing agreements, those deterrents may not influence a machine’s adherence to the law the way they would a human’s. Humans are largely influenced by forms of suffering like fines, punishment, and restrictions, whereas a machine that is self-governed is unaffected by such external penalties.

In today’s report, “The Future of Global AI Governance”, VERSES AI, Dentons, and the Spatial Web Foundation break new ground, suggesting an entirely new approach: one that is based on socio-technical standards along with core technical standards.

Their approach: rather than focusing regulation on the companies developing the AI tools, regulate the AI systems themselves through a “formula for adaptive AI governance,” resulting in adaptive and self-regulating AI.

Further, the report points out, “For AI systems to reach their full potential, they must be capable of interoperability and coordination, while also respecting privacy and data protection norms.”

This requires a specific framework to encode the guiding principles directly into the AI machines. This framework already exists, and what’s more, it has been tested with great success.

VERSES AI has created a global, all-inclusive cognitive architecture that facilitates a system in which AI and AIS (Autonomous Intelligent Systems) can gain understanding and act accordingly. Active Inference within this architecture facilitates explainable AI: AI that is capable of self-examination and self-reporting. Within the computational geo-spatial architecture of this system, human law can be transformed into computable law that AI can indeed comprehend, abide by, and enact within its decision-making process, a process that is fully auditable, knowable, and updated in real time.

In their recent industry report titled “Designing Explainable Artificial Intelligence with Active Inference: A Framework for Transparent Introspection and Decision-making,” VERSES AI proposed a revolutionary architecture for “explainable AI”, revealing the aspects of their research that demonstrate the capacity of Active Inference AI to be introspective, giving Intelligent Agents the power to access and analyze their own internal states and decision-making processes, and the ability to report on themselves.

At the heart of this computable governance are the core technical standards of the Spatial Web Protocol, the computing protocol that evolves the internet as we know it into a distributed and unified global network of intelligence. HSTP (Hyperspace Transaction Protocol) and HSML (Hyperspace Modeling Language) enable a system of distributed intelligence that takes its cues directly from human input. This protocol facilitates what VERSES AI terms “law by code”: the ability to translate human laws and guidelines into a programming language that AI can read, comply with, and act on.

It is not possible to expect a machine that is only capable of pattern recognition to interpret or adhere to laws, when that machine was trained on datasets geared toward performing a particular function and bears no ability to possess any awareness or understanding of the data itself, nor how that data relates to anything occurring outside of it. It’s not possible to expect a machine to be able to adhere to “laws” when the machine itself is uncontrollable and impossible to rein in if it steers down an undesirable path of data processing.

In late May 2023, Sam Altman, CEO of OpenAI, said his company could quit operating in Europe if it is not able to comply with the EU’s proposed AI Act: “If we can comply, we will, and if we can’t, we’ll cease operating… We will try. But there are technical limits to what’s possible.”

It’s not hard to see where the concern comes from.

HSML (Hyperspace Modeling Language), the programming language of the Spatial Web, acts as a cipher for context, informing the intelligent agents across the network of the ever-changing details and circumstances within a nested hierarchy of spaces, objects, and entities, and of the interrelationships between them. All the while, HSML is translating the real-time data inputs from IoT sensors, cameras, machines, and devices, simultaneously updating the AI’s awareness and perception, giving it a baseline for what it knows to be true at any given moment in time.
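To make the idea of a nested hierarchy of context a little more concrete, here is a minimal sketch in plain Python. It is not HSML and does not reflect any VERSES AI or Spatial Web API; the `Space` class, its methods, and every value below are hypothetical, chosen only to illustrate how an agent might read context from the innermost space outward while live inputs keep that context current.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Space:
    """A hypothetical node in a nested hierarchy of spaces (illustrative, not HSML)."""
    name: str
    parent: Optional[Space] = None
    context: dict[str, Any] = field(default_factory=dict)

    def update(self, key: str, value: Any) -> None:
        # Stands in for a real-time input (sensor, camera, device) changing local context.
        self.context[key] = value

    def lookup(self, key: str) -> Any:
        # Walk outward from this space until some enclosing level defines the key.
        node: Optional[Space] = self
        while node is not None:
            if key in node.context:
                return node.context[key]
            node = node.parent
        raise KeyError(key)


# Illustrative values only; they are not taken from any regulation or from the report.
country = Space("country", context={"max_altitude_m": 120})
city = Space("city", parent=country)
hospital_zone = Space("hospital_zone", parent=city, context={"max_altitude_m": 60})

print(city.lookup("max_altitude_m"))           # inherited from the enclosing country
print(hospital_zone.lookup("max_altitude_m"))  # overridden locally

city.update("wind_speed_ms", 14.0)             # a new reading arrives for the city
print(hospital_zone.lookup("wind_speed_ms"))   # nested spaces see the update
```

The design choice worth noticing is that a rule or reading set on an outer space applies to everything nested inside it unless an inner space overrides it, which is one simple way to model context that changes from place to place and moment to moment.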

Spearheaded by VERSES AI, and funded by the European Union’s Horizon 2020 program, Flying Forward 2020 (FF2020) is a groundbreaking urban air mobility (UAM) project enabling drones to navigate urban airspace by employing geospatial digital infrastructure with the Spatial Web Standards. One of the most remarkable aspects of this FF2020 project is the translation of existing air traffic control laws for UAM into HSML.

FF2020 uses Spatial Web Standards to govern and enforce legally compliant behavior of autonomous drones, ensuring that each drone acts in accordance with all local rules and regulations without the need for human intervention. The drones were also able to navigate the geography and topography of the terrain while complying with rules defined by the European Union Aviation Safety Agency. Having demonstrated the ability to traverse between countries at various altitudes through controlled and uncontrolled airspaces, the autonomous vehicles can remain compliant in all real-time situations while adjusting seamlessly to changing circumstances.

According to this report, “The Future of Global AI Governance”:

“Through machine-readable models and data integration from various sources, FF2020 enables autonomous drones to comprehend and seamlessly comply with laws and conditions. The project has successfully translated existing EU laws for Urban Air Mobility into Hyper-Spatial Modeling Language (HSML), allowing autonomous drones to adhere to parsed rules and regulations automatically, even those that inherently contain ambiguities. For example: “UA operators must fly safely.” But how is “safely” defined? HSML can explicitly identify ambiguous laws and provide a feedback mechanism for developing future analog laws with machine readability in mind. This creates a virtuous two-way loop between analog law and digital law.

FF2020 has demonstrated its effectiveness through real-world use cases, including emergency delivery, infrastructure maintenance, and security monitoring. The project’s approach ensures compliance with local rules and regulations without manual intervention or human involvement. It also enables autonomous drones to navigate airspace, avoid security and no-fly zones, and understand and enforce regulations set by the European Union Aviation Safety Agency (EASA).”
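The two ideas in that passage, rules a machine can check on its own and ambiguous rules flagged for feedback, can be sketched in a few lines of ordinary Python. This is only an illustration under stated assumptions: it is not HSML, not FF2020 code, and not EASA's actual rule set. The `Rule`, `DroneState`, and `evaluate` names, and the thresholds used, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class DroneState:
    altitude_m: float
    in_no_fly_zone: bool


@dataclass(frozen=True)
class Rule:
    rule_id: str
    text: str  # the human-readable ("analog") law
    # A measurable test for the rule; None means no computable definition exists yet.
    check: Optional[Callable[[DroneState], bool]] = None


# Hypothetical rules for illustration; the numbers are assumptions, not quoted law.
RULES = [
    Rule("R1", "Stay at or below 120 m.", lambda s: s.altitude_m <= 120),
    Rule("R2", "Do not enter no-fly zones.", lambda s: not s.in_no_fly_zone),
    Rule("R3", "UA operators must fly safely."),  # 'safely' has no measurable test
]


def evaluate(state: DroneState, rules: list[Rule]) -> dict[str, list[str]]:
    """Sort rule IDs into satisfied, violated, and ambiguous so the outcome can be audited."""
    report: dict[str, list[str]] = {"satisfied": [], "violated": [], "ambiguous": []}
    for rule in rules:
        if rule.check is None:
            report["ambiguous"].append(rule.rule_id)  # surfaced as feedback to lawmakers
        elif rule.check(state):
            report["satisfied"].append(rule.rule_id)
        else:
            report["violated"].append(rule.rule_id)
    return report


report = evaluate(DroneState(altitude_m=150.0, in_no_fly_zone=False), RULES)
print(report)
```

Because every decision comes back as a list of rule IDs rather than a bare yes or no, the result can be traced to the specific law that was satisfied, violated, or too vague to test, which is the kind of auditability the report describes.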

The Spatial Web offers an all-inclusive networked system of distributed intelligence for AI to function throughout. Machine learning neural nets are program applications, whereas the Spatial Web is a network for all entities, programs, and operations to seamlessly interact within.

As AI researcher David Shapiro says, “One neural net will not reach AGI… A brain in a jar is useless.” AGI will inevitably evolve within a system mimicking similar systems that we can observe in biology and nature.

VERSES AI has developed a networked ecosystem that allows Intelligent Agents, both humans and machines, to grow in tandem with each other, learning, adapting, and adjusting as needed along the way. This system provides interoperability and cooperation between humans and AI.

This cognitive architecture and the proposed method of AI governance in today’s report offer us a way to get AI right the first time, granting us the ability to shape and guide these Autonomous Intelligent Systems while retaining control over the outcome.

This is the system most likely to instill a sense of trust between human and machine.

Additionally, VERSES AI, Dentons, and the Spatial Web Foundation propose a global regulatory sandbox called The Prometheus Project, based on core technical standards. This public/private initiative offers a collaborative landscape that can be used for testing and aligning emerging AI technologies with suitable regulatory frameworks, using advanced AI and simulation technology, creating an environment where we can plan for the future and test socio-technical standards before their widespread adoption.

This sandbox enables governments, technology developers, and researchers to iteratively deploy, evaluate, and discuss the impact of new technologies and standards, providing valuable insights into how emerging technologies can comply with existing regulations and how new regulatory frameworks might need to be adjusted. It also provides an environment for stakeholders to experiment, gain insights, and adapt regulations, thereby reducing barriers to entry and promoting innovation. This initiative, leveraging expertise and resources from both public and private sectors, aims to provide an efficient regulatory framework for increasingly autonomous AI systems, positioning AI as an opportunity rather than a threat.

As this report suggests, “The future of AI governance, therefore, is not merely about restrictions and controls designed to avoid the potential perils of AI. It should also provide us with enormous benefits by steering AI systems toward their most promising capabilities…Smarter AI governance aligns AIS with our most vital human values while empowering these systems to operate more autonomously, more intelligently, and more harmoniously at global scale.”

VERSES AI and the Spatial Web Foundation offer us the framework in which we can build an ethical and cooperative path forward for AI and human civilization, charting a prosperous future in which all humans and all machines can coexist peacefully, safely, and abundantly.

Where can I find the full report from VERSES AI on AI Governance? Click HERE.

Want to learn more about the Spatial Web Protocol and Active Inference AI? Visit VERSES AI and the Spatial Web Foundation.

All content for Spatial Web AI is independently created by me, Denise Holt.


The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

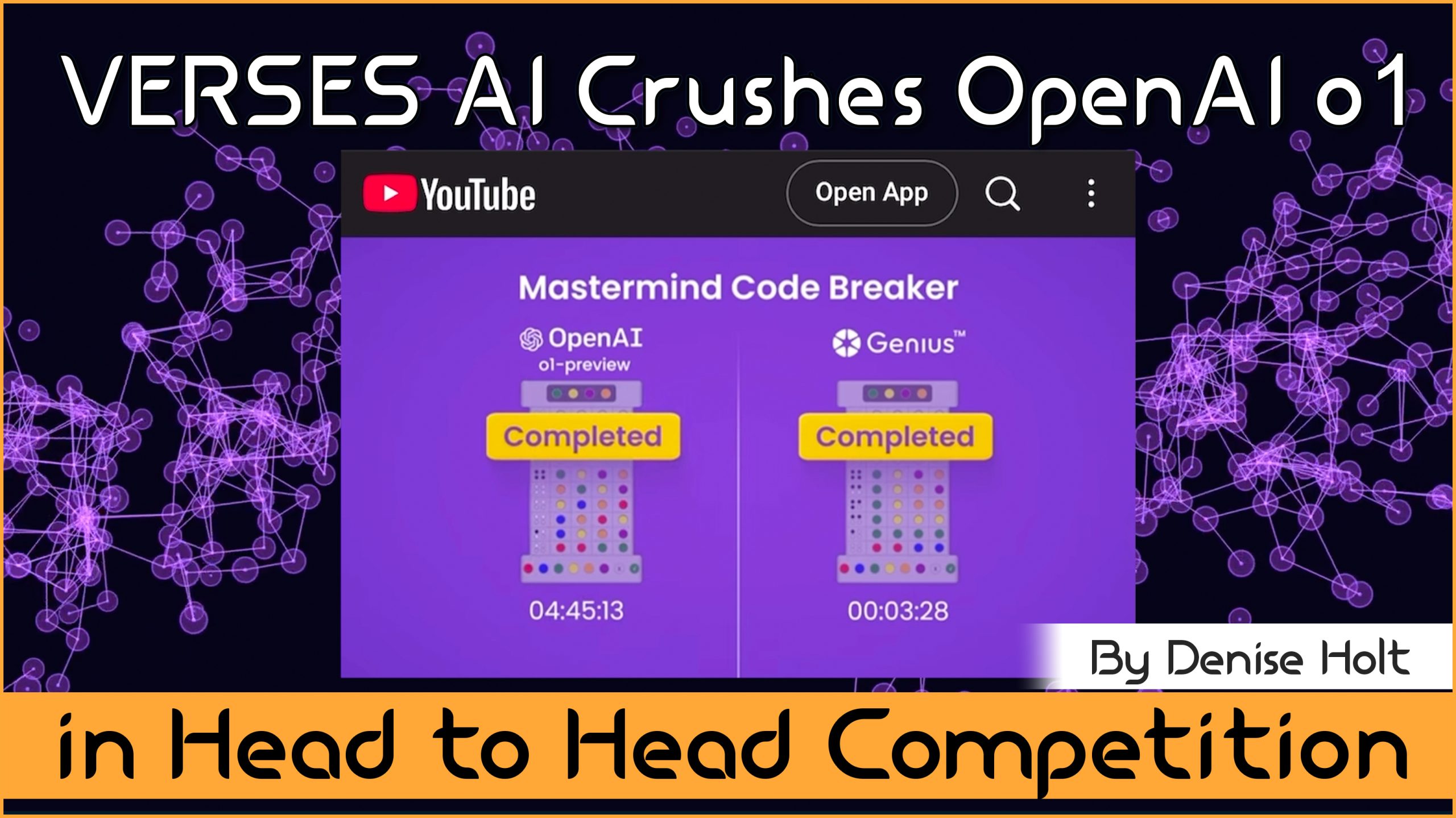

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...