The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

VERSES AI has developed a unique methodology that combines Active Inference AI with the Spatial Web Protocol. Its Intelligent Agents share a common network and learn from real-time data about real-world events as they occur and unfold over time.

OpenAI offers a multimodal approach to generative Machine Learning AI, built on LLMs and trained on text/image pairs.

In a mere three-question conversation, ChatGPT4, the OpenAI bot, provided a solid basis for understanding three things: the greatest issues facing Machine Learning LLMs; the advantages of Active Inference AI over LLMs; and how the Spatial Web, the next evolution of the internet protocol, can function as a nervous system for a company, a city, or even larger systems when combined with Active Inference AI.

ChatGPT Replies:

Active Inference AI and Large Language Models (LLMs) are quite different types of AI. Active Inference AI from VERSES AI has a number of unique characteristics that can solve many of the problems found in LLMs like ChatGPT.

ChatGPT Replies:

Active Inference combined with the Spatial Web could indeed function as a “nervous system” for a company, a city, or even larger systems, in the sense that it could perceive, process, and respond to real-time information in a way that’s similar to how biological nervous systems operate.

Here’s how this could work:

For example, in a city, this system could monitor traffic flow, energy usage, air quality, and other key metrics in real-time. It could then make decisions to optimize traffic routing, energy distribution, and environmental management based on the current state of the city and its understanding of the city’s dynamics.

In a company, it could monitor production, inventory, sales, and other key metrics, and make decisions to optimize resource allocation, supply chain management, and strategic planning.

In both cases, the combination of the Spatial Web and Active Inference AI could provide a powerful tool for autonomously managing complex systems, with the potential to greatly improve efficiency, responsiveness, and overall performance.
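The perceive, process, and respond cycle described in the city example can be sketched in a few lines of Python. This is a toy illustration only: it uses a plain Bayesian belief update followed by an expected-cost decision, which is a simplified stand-in for Active Inference and not the VERSES AI implementation or any Spatial Web API. The state names, sensor probabilities, and cost values are all hypothetical numbers chosen for the example.

```python
# Toy perceive-update-act loop for one road segment.
# All names and numbers here are illustrative assumptions.

STATES = ("free_flowing", "congested")

# Assumed sensor model: P(observation | hidden state).
LIKELIHOOD = {
    "low_speed": {"free_flowing": 0.2, "congested": 0.8},
    "high_speed": {"free_flowing": 0.8, "congested": 0.2},
}

# Assumed cost of taking each action in each state (lower is better).
COST = {
    "keep_routes": {"free_flowing": 0.0, "congested": 4.0},
    "reroute": {"free_flowing": 1.0, "congested": 1.0},
}


def update_belief(belief, observation):
    """Bayes' rule: posterior is proportional to likelihood times prior."""
    unnormalized = {s: LIKELIHOOD[observation][s] * belief[s] for s in STATES}
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}


def choose_action(belief):
    """Pick the action with the lowest expected cost under the belief."""
    def expected_cost(action):
        return sum(belief[s] * COST[action][s] for s in STATES)

    return min(COST, key=expected_cost)


def run(observations, prior=None):
    """Process observations one at a time, acting after each update."""
    belief = dict(prior or {"free_flowing": 0.5, "congested": 0.5})
    actions = []
    for obs in observations:
        belief = update_belief(belief, obs)  # perceive and process
        actions.append(choose_action(belief))  # respond
    return belief, actions


if __name__ == "__main__":
    final_belief, actions = run(["high_speed", "low_speed", "low_speed"])
    print(final_belief, actions)
```

Under these assumed numbers, the agent keeps the existing routes after the first fast reading and switches to rerouting once slow readings raise its belief that the road is congested. A full Active Inference agent would go further, for example by also weighing how much an action is expected to reduce its uncertainty.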

ChatGPT4 provided a point-by-point comparison that demonstrates the clear advantages that Active Inference AI has over LLMs. Combined with the Spatial Web network, Active Inference AI enables a generative model of the world that is grounded in actual occurrences and updated continually in real time. This gives the AI an efficient and accurate basis of evolving information with which to learn, adapt, and make decisions.

The Spatial Web provides a foundation for an evolving network that unites programmable spaces with human activity and real-world, real-time data insights, enabling and empowering a network of Intelligent Agents to perceive, interpret, and carry out actions with a high level of reliability and accuracy.

VERSES AI has developed an artificial intelligence methodology with capabilities that are simply not possible with LLMs: a system of programmable nested intelligence that can sense the world around us, with the potential to monitor and manage complex systems great and small.

Special thanks to Dan Mapes, President & Co-Founder, VERSES AI, and Director of the Spatial Web Foundation.

You can learn more about the Spatial Web Protocol and Active Inference AI by visiting the VERSES AI website, https://www.verses.ai, and the Spatial Web Foundation, https://spatialwebfoundation.org.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind-the-scenes access to the most cutting-edge AI news and information.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

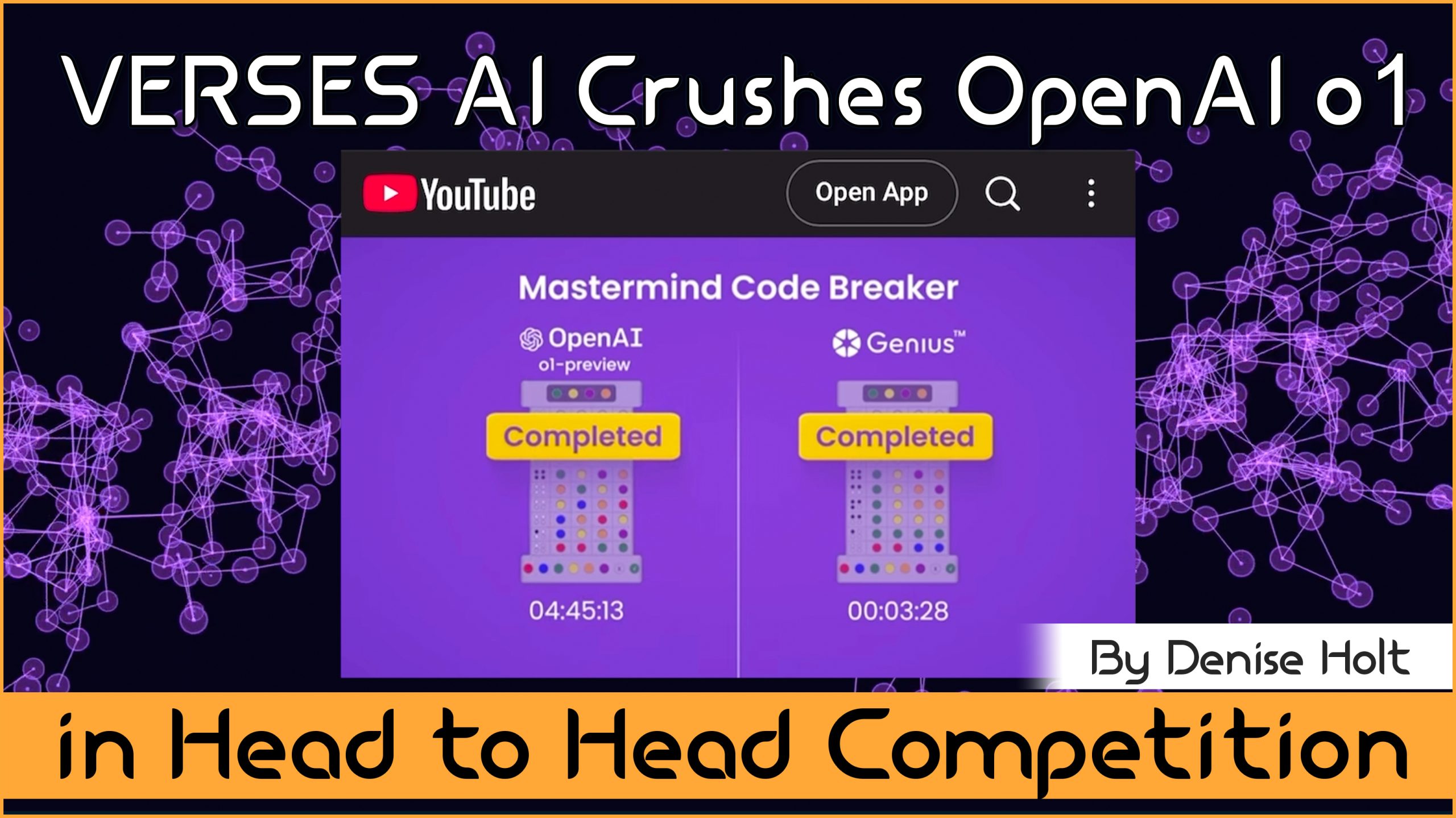

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...