The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

In a recent episode of the Spatial Web AI Podcast, host Denise Holt had an enlightening conversation with Dr. Jacques Ludik, a global AI expert and smart technology entrepreneur. This discussion ventured into various realms of AI, offering insights into its future, ethical implications, and transformative impact on society.

Dr. Ludik shared his journey in the field of AI, with a focus on machine learning and neural networks. His work in building recurrent neural networks and exploring their applications in various industries underscores the current state of AI technologies. Active Inference AI, a concept central to Dr. Ludik’s Massively Transformative Purpose, is poised to revolutionize our understanding of networked synthetic intelligence – a self-evolving, self-organizing, and self-optimizing approach that is far more advanced than current AI.

A significant part of the conversation revolved around the Free Energy Principle, developed by Dr. Karl Friston. This concept, crucial to understanding how AI can mimic human learning processes, offers a new perspective on AI development. Dr. Ludik’s insights on this topic highlight the potential for more advanced and efficient AI systems.

Dr. Ludik and Denise Holt delved into the ethical aspects of AI, discussing the importance of AI governance. The conversation highlighted the need for responsible AI development, ensuring that emerging technologies are guided by ethical principles and governance frameworks.

The podcast touched upon broader societal implications of AI, including its role in empathy and privacy. Dr. Ludik emphasized AI’s potential in enhancing the quality of life and its transformative impact on various sectors, from healthcare to industrial processes.

Dr. Ludik praised the work of Karl Friston and other leading figures in the AI field. Their contributions have been instrumental in shaping the current and future landscape of AI, providing a roadmap for innovative and beneficial AI applications.

The discussion also covered HSML (Hyperspace Modeling Language) and VERSES, highlighting their role in the next evolution of AI technologies. These tools are essential in building more comprehensive and interconnected AI systems, facilitating the creation of digital twins and more.

Dr. Ludik, with his extensive experience in AI, emphasized the need for democratizing AI technology. He advocates for making AI accessible and beneficial for all, aligning with his vision of a world where AI serves humanity’s broader goals.

This episode of the Spatial Web AI Podcast with Dr. Jacques Ludik provided a profound look into the future of AI, stressing the importance of ethical development, governance, and the application of AI for the betterment of society. As we continue to navigate the ever-evolving landscape of artificial intelligence, conversations like these are vital in shaping a future where AI enhances human life in sustainable and ethical ways.

Connect with Dr. Ludik: Website: https://jacquesludik.com/

Episode Chapters:

00:12 – Introduction to Dr. Jacques Ludik

01:23 – Dr. Ludik’s AI Background and Journey

07:00 – Future of Smart Technologies

10:30 – Massive Transformative Purpose in AI

15:08 – Vision for a Thriving Civilization

20:50 – AI’s Impact on Jobs and Empathy

23:47 – Merging Technology with Humans

26:09 – Active Inference and Bayesian Approach

30:51 – Digital Twins and Spatial Computing

34:57 – Intelligent Agents and HSML

41:16 – AI Governance and Trustworthiness

48:23 – Future of AI and Decentralized Data

53:00 – Active Inference in World Models

59:38 – Overcoming Sunk Cost Bias in AI

01:05:03 – Personalized AI and Future Possibilities

01:10:01 – Wrap Up

All content for Spatial Web AI is independently created by me, Denise Holt.

00:12

Speaker 1

Hi, and welcome to another episode of the Spatial Web AI Podcast. I’m your host, Denise Holt, and we have a wonderful guest with us today: global AI expert Dr. Jacques Ludik, a smart technology entrepreneur and founder of multiple AI companies, an AI ecosystem builder, and an award-winning AI leader with a PhD in artificial intelligence and three decades of experience in AI and its applications in multiple industries across the globe. Jacques is also the author of Democratizing Artificial Intelligence to Benefit Everyone. So, Jacques, welcome to our show. Thank you so much for being here with us today. I’m so excited about this conversation we’re about to have.

01:00

Speaker 2

Absolutely, Denise, I’m very excited as well, and it was lovely talking to you before. This is going to be great. Looking forward to it.

01:09

Speaker 1

Yeah, definitely. So why don’t we start off with a little bit of your background. I’d love the audience to understand a little bit about your experience and kind of your thoughts and direction with AI at the moment.

01:23

Speaker 2

Yeah, I was always interested in computers and mathematics and all of these kinds of things. And I was fortunate to be introduced to machine learning, I would say in my first, second, third year. And then I just fell in love with it, and I wanted to understand the brain better as well. I was really interested in human intelligence and natural intelligence and those kinds of things. And then my honors, master’s, and PhD were absolutely focused on machine learning, neural networks actually, but we covered the whole spectrum, so it was fuzzy logic, genetic algorithms, and programming in Prolog and Lisp and all of the different types of languages at that time. I finished my PhD in the mid-nineties. And I also collaborated with Professor Dan Stein at that time, one of my research partners.

02:22

Speaker 2

So I was actually an academic, and we wrote, or rather co-edited, a book called Neural Networks and Psychopathology. It’s also on my website. I’ve got it there at the back.

02:35

Speaker 1

That sounds heavy.

02:37

Speaker 2

But I was just interested, so I was more the AI expert. And Dan Stein, he’s a world-renowned guy in OCD and psychiatry. He’s probably one of South Africa’s top researchers. He’s really a world-class guy, and he produced so many books and publications afterwards. But in that particular one, we built neural network models of brain disorders like OCD and those kinds of things. My focus was on recurrent neural networks, so I created these recurrent neural networks, made lesions in the networks, and did all sorts of things to try and simulate some experiments as well. So it was fascinating. And I also started to collaborate with the business school and chemical engineering and electronic engineering, because I was more on the computer science side.

03:27

Speaker 2

And it was so fascinating, just the application of this kind of technology. And this was before deep learning, so I saw the scalability. I remember even with my master’s degree, I had one chapter focused on the parallelization of neural networks, and we used transputers at that time. I remember speeding it up, trying to build the kind of deep learning stuff, but you were limited by the compute and the data. But I was parallelizing neural networks, so I got that kind of experience. So it was fascinating. And then I just realized, yeah, I can follow an academic career, but there’s so much to do out there. And I started my first company, CSense Systems, with a co-founder who had a PhD in chemical engineering.

04:15

Speaker 2

And we did quite a bit of work everywhere, the financial services sector, all of it. But we decided we were going to focus on minerals, metals, and mining, the industrial space, just because in South Africa and Africa you actually have quite a lot of mines, and there’s some manufacturing and so forth. And then we built it out, Africa, Australia, North America, everywhere, and then moved not only from continuous processes in minerals, metals, and mining to discrete and batch processes in manufacturing, with a lot of customers across the globe. And we started to collaborate with Wonderware and General Electric and all of these kinds of companies. And then, I think towards 2009, we concluded an OEM agreement with General Electric, because they were just seeing us everywhere. And we were the AI layer on top of the SCADA systems, the industrial application layer.

05:09

Speaker 2

We added an AI layer on top of the human-machine interface. And what we did was things like predictive maintenance, but also real-time causal identification of process problems. So we were building models of processes and pieces of equipment, and if you can improve the throughput, the yield, or the quality by one, two, three percent, it translates to millions of dollars. So the business case was there, and we built a proper business. And it was an incredible experience going global, appointing distribution partners and implementation partners around the globe, and then spending quite a bit of time at GE, also on the GE-for-GE business, because they are in energy and healthcare and all sorts of different spaces. And at that time they were like Apple and Google. Around 2010, up to 2011 and 2012, they were really dominant.

06:06

Speaker 2

So it was an incredible time, spending time in San Ramon and, obviously, at their university; they’ve got their own university in New York. And GE Intelligent Platforms was actually headquartered south of Boston, in Foxborough, so I spent quite a bit of time there. And then when we were acquired, I spent quite a bit of time in Chicago, and it was kind of a global experience, because I spent time in China and Australia and all the different places. But it was wonderful applying it and just learning all the time. And in the GE environment, the shareholder transaction deal was two years and the retention bonus was three years. I was there for almost five years, and that was an incredible experience. But I’m a smart technology entrepreneur, so I wanted to do more things. I couldn’t just be part of a corporate.

07:00

Speaker 2

And so from there on, I started the next phase. And there’s more to talk about on those things as well, if you’d like to know.

07:07

Speaker 1

Yeah, absolutely. Because I’m really fascinated with smart technologies, too, and especially with the coming future of all of these technologies converging together. And it’s funny that you talk about one of the biggest challenges in the past was compute power, and we have bandwidth issues and all kinds, and it’s like it’s all converging into this ecosystem that will let everything just explode.

07:37

Speaker 2

Exactly. It’s wonderful. Yeah, you’re right. And for me, I’m thinking even AI, Web three, the next generation of the Internet, and I wrote an article on that. It’s almost like we’re creating all these building blocks in the smart technology toolbox that will allow us to do this incredible stuff. And then also VERSES, and we will definitely get into that, the spatial web, that kind of evolution, which is actually defining Web three for me in a proper way, because of spatial computation as well. So I’m excited about that. But obviously we’ll get to that as well.

08:12

Speaker 1

Well, it’s interesting that you say that too, because there’s definitely a distinction between Web three and Web 3.0. And a lot of people ask, what is the difference? Aren’t they the same? But in my perspective, it’s like Web three is the technologies in that space, but Web 3.0 is like the evolution of our global network into that space where everything comes together.

08:39

Speaker 2

I like that. Yeah, these are all terms that we use and labels that we place to describe things, but at least that’s part of the sense-making process, because there are technologies, as you say, and there is evolution. So I like that.

08:55

Speaker 1

Well, it helps me to compartmentalize it all, because there are so many awesome things going on in the Web three space, and Web three includes so many other technologies. You have all the XR technologies and then the distributed ledger and blockchain technologies. I don’t know. It’s interesting, because I think one of the biggest issues with all of those technologies is that they’re not interoperable. It’s not easy to make them interoperable. They need our global network to evolve to include them all, so that they have a common language and they can interact and actually become an ecosystem, because they all contribute to what we envision as this future ecosystem.

09:49

Speaker 2

Exactly. That’s what’s so nice about this. It’s almost like connecting the dots, putting all the Lego blocks together in a proper way. And that’s what I love about VERSES as well, and even the book; I’ve got the book as well. So just to have that vision and understand the big picture of what’s happening here and what the missing pieces are, and if something is missing, let’s fill that gap. They’re really on that path as well. So for me, that’s awesome. So great to see.

10:30

Speaker 1

So while we’re still on the subject of you, I would love to ask you because I’ve read several things about you. I’ve seen some of your interviews, and you talk about having a massively transformative purpose within this AI sphere. So I would love for you to talk about that. What is that all about?

10:52

Speaker 2

Yes, absolutely. So I do talk about it in the book, and I wrote the book during COVID. It was before ChatGPT. Yes, I wrote the book during COVID and had time to reflect and think. But these are obviously things that had been coming for a long time, because I’m thinking about what we are doing here. It’s about legacy, and it’s about thinking about civilization. And for me, it’s always important to make a positive contribution. Can we cause positive ripples in the fabric of civilization, even in our humble little way, each one of us? We can’t move the needle too much as individuals. But if you participate, if you can maybe influence things, and if you collaborate with other people, you can do big things.

11:40

Speaker 2

Even Elon Musk, with just a few very specific visionary statements and questions that he was asking, is able to make some positive impact and attract top talent. So it’s very important to get the narratives right, to push civilization in the right direction. And in my book, I’ve got a chapter that talks about what it means to be human in the 21st century. And I had to go back to philosophy and Man’s Search for Meaning. And Lex Fridman has got these kinds of questions as well, that he always asks in the podcast: what is the meaning of life? And I tried to make a synthesis of, I think it was about 150 of these episodes, where I tried to figure out which are the most important ones. And I’ve captured and extracted that in chapter ten as well.

12:28

Speaker 2

But then further on, Daniel Schmachtenberger, he’s a systems thinker, and he talks about the metacrisis and the problematic trajectory that humanity is on. And he’s talking about all the generative functions for this and all of these kinds of things. And I think there’s a lot of merit there. So I was trying to make sense of it and figure out all the different pieces. And then I looked at Max Tegmark, who wrote a book called Life 3.0. And I thought it was very good, because he was laying out the various possibilities of where humanity is potentially going. I tried to use that as input as well. And then I went on to really focus on beneficial outcomes for humanity.

13:12

Speaker 2

And I just realized it’s important that we have a purpose, a massive transformative purpose, as humanity, and we are a hyperconnected civilization and society. So it’s very important that, as a civilization, we’ve got a purpose and we understand where we’re going. And you see it a little bit with some of the countries that are doing a little bit longer-term thinking. In China, for instance, there is good and bad, but there are really good aspects there. And I see this in some other countries as well. And I think that’s quite important.

13:45

Speaker 2

And I think with capitalism, this quarterly-growth, Wall Street type of thing, and also politics, if you think about the whole dynamics around political systems, governance systems, and democratic systems, we’re almost hurting ourselves. There’s so much good there, because we need to protect the freedom of the individual, but we also have to think about the collective. So I do think those kinds of things are important. In Africa, they talk about Ubuntu, which is really about thinking about us as well. So it’s a focus on the collective. There’s something in South America around that too. And then the United Nations actually has a really incredible vision, if you think about it: the vision that they’ve laid out for 2030 and the 17 Sustainable Development Goals are pretty good. The problem is that we’re struggling to implement them.

14:42

Speaker 2

And what I did was to say, okay, let’s define a massive transformative purpose that’s aligned with that. And I actually have slides where I can maybe quickly show that as well, if you want.

14:54

Speaker 1

Absolutely. I think I’ve got it to where you can share. Yeah, absolutely.

14:58

Speaker 2

Okay, great. Okay, so what I wanted to quickly show, you can see I’ve got some of the VERSES slides in here as well, that I talk about.

15:05

Speaker 1

Nice.

15:08

Speaker 2

I’m utilizing these, and I’m actually promoting this, because I do think that, apart from the technology itself, the broader vision is so good. Anyway, so this is what we need. We need visionary leadership, collective sense-making, wisdom, and practical actions to ensure that humanity and our civilization are moving in the right direction as we work towards unlocking the tremendous potential of AI and other smart technologies. In terms of the purpose here, and I can put this in full screen if it helps, it’s very simple on one level, because it says: evolve a dynamic, empathic... I think it’s so important that we’ve got empathy and understanding of cultures with one another, because we are one. The problems that we face as humanity go across borders.

15:58

Speaker 2

It will be very interesting to see in 500 years’ time if we still have countries as defined now. It might just be smart cities, smart towns, and local communities connected like nodes in the Internet. And it will be fantastic if it’s like the spatial web and everything is properly contextualized. I can just see that kind of world happening: a thriving, self-optimizing civilization that benefits everyone in sustainable ways and in harmony with nature. And I actually looked at the four parts of this. You need to look at things holistically, at a systems level, and I’ve just tried to summarize it. The first is decentralized adaptive systems to benefit all, driving beneficial outcomes for all life through decentralized systems. This is again where Web three, blockchain, all of these kinds of technologies come in. It has to be adaptive and agile.

16:52

Speaker 2

What does that mean? What is it we’re talking about? We need to reengineer the economic, social, and governance systems. And this could also help solve the thing around jobs and those kinds of things, and meaning. What I think we should be doing is reward active participation and positive contributions to society and civilization, but also help. That could be for anything that people do: give them incentives, give them tokens, give them all sorts of things so they can survive. Obviously, if we live in a Star Trek kind of abundant world, it will be unbelievable. But it’s almost like your body as well: if you’ve got a problem in your knee or somewhere, you want to make sure that you address that.

17:39

Speaker 2

So it’s almost like, if there are problems in a country or in certain towns or cities, let’s help one another. If there’s COVID, if there are viruses, let’s see what we can do as a collective to protect ourselves. So I think we need to keep peace and protect humanity from any potential harm in elastic ways that respect individual freedom and privacy. So that kind of summarizes, for me, the whole thing around systems. And I bring in democratized smart tech to benefit all as well. So I’m thinking about not just smart tech, but knowledge, science. I think we want to use that in human-centric ways.

18:14

Speaker 2

We don’t need to create a world that’s dominated by technology, where it dictates everything. I think we need to think about humanity, about human-centric ways that are based in wisdom, good values, and ethics, to dynamically solve problems, create opportunities and abundance, and share the benefits with everyone. Now you will see this ties in with the VERSES evolution of intelligence as well, which I absolutely love, because it provides a framework in terms of sympathetic and shared intelligence, and creating these intelligent agents that help us. Synthetic and human intelligent agents work together to solve problems. And I actually built slides that show that. And then, for me, one of the most important things, and I think I mentioned this to you before: I don’t think we should necessarily optimize just for GDP.

19:09

Speaker 2

We should optimize quality of life to benefit all, maximizing quality of life, community building, virtues, character strength development, sense-making, standard of living, well-being, and meaningful living for everyone. And if we measure that and we’re really making progress there, I think we’re going to create a better world. So the purpose and the goals are very important. And then finally, a sustainable, livable planet and exploring the universe. Obviously that’s super important, and it ties in with the Sustainable Development Goals. And I actually have this in here, even a place in the universe. And I actually have specific goals that also talk about exploring the universe, like Elon Musk with Mars and all of those kinds of things. And I think we should do that. That’s why I talk about a sustainable, livable planet and exploring the universe. Anyway, so that’s the high level.

19:56

Speaker 2

I’m not going to go into much further detail, but there are some very specific goals for each of these four buckets. And these also map to the development goals, as you can see. Anyway, maybe a last comment on this: Kai-Fu Lee did quite a bit of work, I think, with Apple, but he’s based in China now. He also wrote a book, and he actually had a TED talk where he talks about the future of AI. And I love this as well, because you can position jobs and all sorts of things on an x-axis, going from optimization to creativity and strategy. But if you add other dimensions, and there could be a lot more dimensions, but if you say we add compassion, what kinds of jobs?

20:50

Speaker 2

More kind of human-centric. Then you can create quadrants where you place tasks and things that people do, where it’s utilizing AI but with the warm embrace of humans. I think we need to navigate this; we need visionary leadership to go in this direction.

21:06

Speaker 1

Anyway, that’s brilliant. Okay, so what’s really interesting is you brought up several points there, which I would love to unpack a little bit. What’s interesting in actually manifesting that kind of vision is, I think, that’s the kind of thing that’s going to ease a lot of people’s minds, especially when you’re talking about the need for empathy and compassion and having these be at the forefront of this evolution. I think people envision AI as machines, right? And they think that’s what humans are going to start thinking like. And it’s like, no. It’s not going to supersede human thinking. It’s coming in cooperation with, alongside, in collaboration with humans. So we will bring all of those necessary elements to the evolution of this next era of technology. I truly believe that.

22:08

Speaker 2

I’m with you there. It’s almost like these doomers and all sorts of people. I try to just make sense of it, and it’s almost like a pendulum: people are afraid, and they overreact. And this is typical of the narratives. And if you think about how the brain works, and Joscha Bach, I can talk about the people who are influencing a lot of my thinking: David Deutsch and Joscha Bach and a few others as well, and Karl Friston, obviously, in terms of the brain as this prediction engine. And Joscha Bach talks about this story that we create of ourselves, living in this universe. And as humanity, as a civilization, we’re creating all these abstract narratives and constructs as well. But we need to navigate this. We need to take ownership and let the tools be an enabler for us.

23:00

Speaker 1

This is a total sidebar, but it’s really funny, because when I think of empathy and where my sci-fi brain sees technology going, and this is going to make a lot of people uncomfortable, it’s the human brain-computer interface kind of thing, like Star Trek, all of that. What if we merge with technology on a biological level, which is what we’re starting to see, right? Years ago, I remember, one of my really close girlfriends and I hadn’t seen each other in a while. We were laughing, catching up and everything. And in my mind I just had this vision of, like, what if in the future... reality shows, people tune into those, right?

23:47

Speaker 1

But what if you could just tune into somebody’s conversation, you know? And you think, well, we would never allow that to happen. But I remember when Facebook came on and it was still stranger danger: don’t put your picture on the Internet. People adapt and get used to things, so you never know what society will get used to. But if you envision this future where we will gain a deeper understanding of the thoughts of others, I think that’s going to increase empathy tenfold, like a millionfold, because we’ll understand that everybody is so much the same on the inside. Our thoughts are the same. We have the same level of depravity in our thoughts, and the same judgment and insecurities.

24:37

Speaker 2

It doesn’t matter who you are. You see it if you travel a lot and you meet all the different kinds of people from different cultures. I was very fortunate to do that right through my career, both academic and business. And I was just recently in Seoul, in South Korea, for the first time as well. And it was fascinating, just the kind-heartedness and the empathy of so many of the people there. And there are so many things that we have in common, because we live within these constraints: we live and then we die, and we know about all these things, and we want to make the most of this as well.

25:19

Speaker 2

And this is where I’m very passionate about whether we can create a personalized AI that’s helping us with sense-making, better decision-making, those kinds of things. I think we’ve got to be careful of dependence and lock-in, so we don’t want that necessarily, but just something that really boosts us, helps us make better sense, steel-mans other approaches, and helps us maybe to understand the other person’s perspective better as well. I use ChatGPT and Bard and all these kinds of tools extensively for sense-making, to push, to try and figure things out. I know there are hallucinations here and there, but I’m trying to figure out the different feelings, even with things like active inference and LeCun and so on.

26:09

Speaker 2

And I’ve come up with interesting perspectives on where one can integrate and combine things as well, just because you look at what the weaknesses are and what the strengths are of different kinds of approaches. But you can apply it to everything in life, to relationships. I’m just thinking of the wars and the problems that we face here in civilization. It’s so sad, actually, because it’s driven by certain narratives. If you really zoom out, in 500 years’ time you will probably look back at, say, and I know it’s complicated, Israel and Palestine, what’s happening there, and also Russia and Ukraine and so forth. But for me, it’s so unnecessary, and there are people’s lives at stake here, and it’s driven by certain political agendas and all sorts of different things.

27:04

Speaker 2

If we’ve got leaders who are also making better sense and having a better understanding, and it’s not just about power grabs and all of these kinds of things... I would love to see civilization up the wisdom levels of as many people as possible. And obviously, we need to do this in the leadership, with the people who are really leading among the various stakeholders. That’s why I’m even thinking about Africa as well. You don’t want Africa to be left behind; it is part of this global, hyperconnected community and civilization. So there as well, you want to say, how can we leapfrog? And education is so important, lifelong, lifewide learning. That’s why we need to disrupt this factory model. They talk about the fourth educational revolution. Anyway, there are so many things there.

27:53

Speaker 1

Oh, true. I’m nodding, agreeing, because, yeah, it’s all so important. But it’s really interesting, because that’s one of the things with the VERSES technology that is fascinating to me. You have the spatial web protocol, which gives us this programmable language that can program context into every space and all the things in all the spaces, right? And then it can also be the language where the AI can be programmed with human laws, with human guidelines. So it’s governable. And the active inference AI is an explainable AI, right? It has the ability of self-introspection. It can report on how it comes to its conclusions and its decisions, on its processing. So when you think about having a governable AI like that, and you consider that HSML is literally giving us digital twin spaces of everything, right?

29:07

Speaker 1

Dan Mapes refers to the spatial web as a nervous system for the planet. So when you talk about digital twins on any scale, you can do simulations, and when you can do simulations, you can actually show people what the outcome is. You’re not just saying, I think the outcome is going to be this, based on my understanding and my knowledge and my science. You’re actually showing it evolve in a digital twin space, and you really can’t refute that. So when you talk about even peace efforts, and things that we can do to alter our climate and reverse damage to our planet, to put ecosystems back into balance, and then when you talk about smart cities and what that can do for inclusive, sustainable, smart... it’s really interesting to me.

30:05

Speaker 2

It is. There are also so many things there. I think the digital twin thing is so interesting as well, because initially, even at my first company, CSense, we built a kind of digital twin. It was not with spatial computing, but it was building models of processes and of pieces of equipment. And if you latch them all together, you can effectively create a digital twin of a whole factory or plant. And then you can zoom out and create a model not just of that, but also of the whole supply side and demand side as well. And this is, by the way, some of the things that we were talking about. One of our customers was De Beers, which is part of the Anglo American group, and they were looking specifically at diamonds as well.

30:51

Speaker 2

So we were looking at the demand side and building these kind of models, and it’s all about instrumentation. So the reason we were able to build these kind of models of processes and plants is because, obviously, in the industrial space you’ve got temperature sensors, flows and pressures and all sorts of stuff in real time. You’ve got real-time data available and it’s all being captured. And the same with machines and equipment as well, it’s heavily instrumented. But now if you instrument the world, especially the Internet, you’ve got a lot more data available, even for demand side, supply side, all of those kind of things.

31:29

Speaker 2

And if you think about ERP systems, MES systems and all of these kind of things, they’ve got data. Now suddenly you’ve got lots of data. Now adding spatial web and spatial computing, where you can say, I’ve got more information, I’ve got actually three-dimensional information in real time, that is adding a next layer to this. And it’s almost like, what’s the missing piece? So to me, it’s an absolute no-brainer. And by the way, on active inference, I absolutely love the fact that there’s something with this Bayesian type of approach around expected free energy minimization. Think about it: you want to minimize complexity, and you obviously want to minimize inaccuracy. That’s what the free energy principle is trying to do. But on the policy side, the action side, it’s obviously trying to reduce risk and ambiguity and all of those kind of things.
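
As a rough illustration of the decomposition mentioned here, the Python sketch below computes a variational free energy as complexity minus accuracy, and an expected free energy as risk plus ambiguity, for a tiny discrete model. The function names, the two-state model, and all of the probabilities are assumptions made up for this example; it is not taken from VERSES or from any particular active inference library.

```python
import math

def kl_divergence(q, p):
    """KL[q || p] for two discrete distributions given as lists."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def free_energy(q, prior, likelihood, obs):
    """Variational free energy = complexity - accuracy.

    q          : approximate posterior over hidden states
    prior      : prior over hidden states
    likelihood : likelihood[s][o] = p(o | s)
    obs        : index of the observation actually received
    """
    complexity = kl_divergence(q, prior)
    accuracy = sum(qi * math.log(likelihood[s][obs])
                   for s, qi in enumerate(q) if qi > 0)
    return complexity - accuracy

def expected_free_energy(q_states, likelihood, preferred_obs):
    """Expected free energy of a policy = risk + ambiguity.

    q_states      : states the policy is predicted to lead to
    preferred_obs : distribution over observations the agent prefers
    """
    n_obs = len(preferred_obs)
    q_obs = [sum(q_states[s] * likelihood[s][o] for s in range(len(q_states)))
             for o in range(n_obs)]
    risk = kl_divergence(q_obs, preferred_obs)
    ambiguity = sum(q_states[s] * entropy(likelihood[s])
                    for s in range(len(q_states)))
    return risk + ambiguity

# Hypothetical two-state, two-observation model (illustrative numbers only).
PRIOR = [0.5, 0.5]
LIKELIHOOD = [[0.9, 0.1],
              [0.2, 0.8]]

if __name__ == "__main__":
    evidence = sum(p * LIKELIHOOD[s][0] for s, p in enumerate(PRIOR))
    posterior = [p * LIKELIHOOD[s][0] / evidence for s, p in enumerate(PRIOR)]
    print("F at prior:    ", free_energy(PRIOR, PRIOR, LIKELIHOOD, 0))
    print("F at posterior:", free_energy(posterior, PRIOR, LIKELIHOOD, 0))
```

In this toy setting the free energy is lowest when the belief equals the exact Bayesian posterior, where it equals the negative log evidence, which is one way to read "minimizing surprise". A policy whose predicted observations match the preferred ones and whose states give unambiguous observations gets a lower expected free energy.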

32:28

Speaker 2

And I like the way they... I think it’s just so great to see that you see this kind of Markov blanket, internal and external kind of systems, kind of everywhere, so it resonates as well. And as you say, to explain things, the fact that you’re using this kind of probabilistic Bayesian type of approach and creating these kind of structures makes it possible to figure out the why, the introspection kind of things, because you can now look at awareness: what am I trying to look at? What am I paying attention to? And all of these kind of things.

33:06

Speaker 1

Yeah, there’s so much we can learn from that, just applying that to systems.

33:11

Speaker 2

Exactly. I’m excited about that, because with the deep learning approach, you’ve got a little bit of a black box. Obviously, I come from kind of the deep learning, transformers, those kind of areas. I understand what we’re doing there. And even with my PhD, we tried to understand the underlying behavior. The PhD was focused on training dynamics and complexity of architectures, specifically recurrent neural networks. So I was trying to figure out how, and it’s always using backpropagation, similar to what’s happening anyway with this main algorithm used in deep learning and transformers and so forth. But you can try to figure things out, but when you create so much complexity, it’s really difficult because you don’t know the emergent kind of properties that you get. How is it really getting to that?

33:59

Speaker 1

Right.

34:00

Speaker 2

But anyway, I love that kind of active inference approach, where we’re trying to figure things out and make it more explicit as well. We can go into more details there, because I’ve got questions, even questions for Karl Friston and others as well, around the creation of world models and how to represent this. I think I’m getting some answers from LeCun in that regard as well: how do we learn from observations, world models, in a proper way? Because you can obviously use the Hyperspace Modeling Language to define kind of the context for an agent in terms of its world model, and obviously have active inference doing the reasoning, interacting with this world model and looking at the observations and stuff. But it is a bit of a bottom-up approach.

34:57

Speaker 2

I can see, for example, with Genius, that nice demo, it’s really great to see it. You can see for those types of intelligent agents that it’s fairly simplistic and stuff, but you can do this, and it provides the environment where you can build more complex systems. And I think this is maybe where it’s going, because I think about the human brain as well. If you’ve got a bunch of these kind of systems that are collaborating, working together, and that’s exactly what’s happening. Maybe you’re sharing world models. If you’re just focusing on the auditory part or the visual part, whatever it is, you can start putting pieces together, but it could become quite complex. But I think we’re still in the early days with this.

35:46

Speaker 1

Yeah, no, it’s so true. But you hit the nail on the head because that’s literally what they’ve envisioned and what they’re building. Because the Spatial Web Protocol, basically it’s going to evolve our Internet, right? The same 40 billion computers that are connected right now, it evolves the capabilities to take us out of the World Wide Web into a much more secure network space that includes spaces and things. But it’s built on distributed ledger technology. It enables zero-knowledge proofs, and the transactions are taking place at every touch point. So then you have this network that can be comprised of multitudes of intelligent agents, all these independent intelligent agents learning about their environment from their own frame of reference and then communicating with each other with that same active inference. It’s based on a holonic structure with Markov blankets and stuff.

36:57

Speaker 1

So you have these nested intelligences too. And so it’s really interesting when you think of that, because I feel like when they let loose with their platform, then people are going to build all kinds of intelligent agents, and all these agents are going to be aware of each other as a network, and it’s just going to grow, just like collective intelligence grows, right? Our world knowledge grows, and it depends on the diversity of all of the multitude of the intelligences among the human species, right? That’s how we learn and grow, and that’s how our knowledge bank grows, right? So that’s why to me, when I look at what they’re doing, I’m like, okay, this is the AI that can get us to ASI. They’re creating an ecosystem, a framework to grow knowledge. Knowledge that actually can grow in tandem with humans.

37:58

Speaker 2

Absolutely. And right now, if you look at the toolbox, and I think I’ve asked Jason as well, he’s CTO at VERSES, around the use of, say, LLMs, because currently they’ve got their purpose, because it’s kind of this human-computer interface that natural language processing can handle. So you will probably need to go... It’s not clear how you can create exactly that type of intelligence with active inference right now, because you have to build that up and it’s so many layers. But I can see practical applications where active inference based intelligent agents are key, core to it, and they can deal with real-time information and adapt and reason and minimize surprise and do all these things, and then communicate with humans utilizing LLM type of infrastructure that plugs into the Hyperspace Modeling Language, at least the interface of the intelligent agent, for communication purposes.

39:01

Speaker 2

So that’s a simple kind of application. So that’s why it’s a bigger toolbox and Lego blocks that we put in place. But obviously with active inference, the fact that you’re adding spatial web is making such a difference because it’s making things practical right now, which I love. So from an engineering perspective, you want to solve problems, and it’s nice to have these kind of things that make sense from a biological perspective, but to have systems that actually work, and this is where we are getting with Transformer LLMs, it is practical. The generative AI stack is there.

39:40

Speaker 2

So from my perspective, when I think about it as kind of a global AI leader, I would love to collaborate with VERSES and companies in that ecosystem, because like you, I believe this is absolutely key, to build it bottom-up and to create the digital twins. I think it’s going to be very important, the adoption of the protocols. That kind of ecosystem is going to be super important, and I would love to see how we can fast-track that. So I think communication is going to be super important, even what you’re doing, just spreading.

40:17

Speaker 1

Thank you. I’m just a tech geek girl who is really good friends with one of the founders, so I’ve known what they were doing, and I’ve been watching it for the last six years or so. So it’s exciting to see it all coming to fruition. What you were talking about, it’s interesting, because the way that I understand it is that all of these other AIs, the machine learning AIs, those are tools that will fold right into this network, and they’ll get a lot of the attributes of the active inference as kind of a meta AI over the network. And so they’ll be able to become more accurate because of the explainability in the meta AI of the network they’ll be able to integrate into.

41:16

Speaker 1

So it’s almost to me like, that’s what I was trying to say earlier, I see this as kind of like the network that everything comes into, and all of a sudden it’s all functioning well together. And all of these tools are then going to be able to fall into a system that can be governed. And I don’t know if you saw, VERSES published a report, I want to say in July, and I think it was called a path to global AI governance.

41:49

Speaker 2

Absolutely. There’s a webinar as well. I think it’s tonight or tomorrow.

41:55

Speaker 1

I think it’s tomorrow. Yeah. Well, that might be today for you because you are a day ahead. It’s nighttime for me, and you’re first thing in the morning. Thank you. It’s 10:49 p.m. right now. I should be having a glass of wine. I’ll have wine with your coffee. But what’s really interesting to me with what they propose is that it’s very common sense, because in their estimation, you’re going to have all kinds of AI systems. They’re all going to be at different stages of intelligence, they’re all going to be at different capabilities. So how do you govern that? How do you specify what kind of governing system would be allowable when there’s a diversity among the actual systems themselves and their capabilities?

42:56

Speaker 1

And so it makes a lot of sense what they’ve proposed, because they’ve literally kind of broken it out into different levels of intelligence, I think five different levels, and then different types of governance that would apply to the different levels. Meaning that the ones that are trustworthy and capable of autonomy and whatnot, they can enter this more decentralized, distributed, autonomous governance. But for things like the machine learning tools that are still going to be hallucinating or having issues and are not really trustworthy, or different things within robotics, all kinds of stuff where human life is at stake, then there are different levels of how much human interaction needs to be there for that. And that just makes a lot of sense to me, 100%.

43:58

Speaker 2

And I think it also has to do with this connecting the dots on a holistic level, this whole evolution of autonomous, intelligent agents, because I haven’t seen it really laid out like that before, and going from systemic, sentient, sophisticated, sympathetic, and not talk about super intelligent, because we’re obsessed around superintelligence, this monolithic type of thing.

44:23

Speaker 1

Yeah, and their point is there’s a path to that we have to govern.

44:29

Speaker 2

Exactly. It’s almost like leading with the protocols, leading with a proper vision for the evolution of autonomous intelligent agents. And I 100% agree with you. I think that’s why the framework is so nice, because you can actually say, where do LLMs or transformers or these types of generative AI models fit in with this kind of framework as well. Because I do think, even if you think about Yann LeCun’s energy-based, self-supervised learning, where he’s also using kind of probabilistic approaches, there are some very interesting things. What I really love about that is it is like a model that can learn from observations, that can learn future states in trying to build a world model as well. But even for that, you want trustworthy AI guardrails. And I know there’s been quite a bit of work done there.

45:29

Speaker 2

I’m going to have a look at that governance report, I definitely need to look at that. But I’ve looked at trustworthy AI frameworks, which consist of ethical, robust, lawful AI. Ethical AI is looking at autonomy, no harm, fairness, explicability. And I think Europe, the European Union, actually started with these seven foundational requirements for trustworthy AI: human agency and oversight, technical robustness and safety, privacy, transparency, diversity and all of those kind of things. And I’m sure VERSES, given their holistic approach, will incorporate all of that into this kind of governance framework.

46:14

Speaker 1

Well, the beauty of it, are you familiar with the Flying Forward 2020, the European Union program, that they were involved in the drone project for the last three years?

46:26

Speaker 2

No. I would love to see. I’m getting more involved in AI driven drones. And I was actually in Seoul, South Korea, talking to Dr. Drone. Dr. Kwan, there’s so much to learn. So, you know, tell me.

46:42

Speaker 1

Yeah, okay. So one of the really interesting things that came out of that project, because it was a three-year-long project, and they were brought in as one of the companies. It was among, I think, eight different countries in Europe. And it was, how do we get these drones to obey differences in airspace laws, when you cross country borders and things change, and do it in real time? And they did all kinds of proofs of concept: delivering medical supplies to a hospital, perimeter security, just all kinds of stuff. And what they found in every case, what they proved, is that through HSML, the modeling language for the Spatial Web Protocol, you can take human law and make it programmable to where the AI understands and can comply in real time. In real time.

47:39

Speaker 1

It makes sense that Dentons, the largest law firm in the world, collaborated with them on this governance report, because they saw what they were doing with that drone project. They’re like, oh, my gosh. So when you think about what you can do with that, then you have AI that can be governed. So all these things, like the regulation standards that the European Union is coming up with, what the United States is coming up with for these guidelines and these different things, through HSML these can be programmed into the AI and it’ll comply. That’s what’s needed, because that’s the missing link.
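
To make the idea of "programmable law" a little more concrete, here is a minimal Python sketch of an agent checking machine-readable airspace rules as it crosses a border. This is not HSML syntax and not how the Flying Forward 2020 project implemented it; the `AirspaceRule` structure, the jurisdictions, and every limit below are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class AirspaceRule:
    """A hypothetical machine-readable rule for one jurisdiction."""
    jurisdiction: str
    x_min: float               # western edge of the zone (arbitrary units)
    x_max: float               # eastern edge of the zone
    max_altitude_m: float      # made-up altitude ceiling
    night_flight_allowed: bool

def active_rule(rules: List[AirspaceRule], x: float) -> Optional[AirspaceRule]:
    """Return the rule whose zone contains position x, if any."""
    for rule in rules:
        if rule.x_min <= x < rule.x_max:
            return rule
    return None

def violations(rules: List[AirspaceRule], x: float,
               altitude_m: float, is_night: bool) -> List[str]:
    """List every rule the drone would break at this position and time."""
    rule = active_rule(rules, x)
    if rule is None:
        return ["no rule covers this position; do not fly here"]
    problems = []
    if altitude_m > rule.max_altitude_m:
        problems.append(
            f"{rule.jurisdiction}: altitude {altitude_m} m exceeds "
            f"limit {rule.max_altitude_m} m")
    if is_night and not rule.night_flight_allowed:
        problems.append(f"{rule.jurisdiction}: night flight not permitted")
    return problems

# Two invented jurisdictions with different limits on either side of x = 100.
RULES = [
    AirspaceRule("Country A", 0.0, 100.0, 120.0, True),
    AirspaceRule("Country B", 100.0, 200.0, 60.0, False),
]

if __name__ == "__main__":
    for x in (50.0, 150.0):
        print(x, violations(RULES, x, altitude_m=100.0, is_night=True))
```

The point of the sketch is only that the same flight plan can be legal on one side of a border and illegal on the other, and that an agent holding the rules as data can re-check compliance at every step.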

48:23

Speaker 2

And you know what, Denise? That excites me, because that’s why people yesterday in a podcast, humble mind and stuff, and you have people that were pessimistic and optimistic and the whole spectrum. But the reason why I’m also optimistic about this, obviously, as an entrepreneur, you have to be optimistic. You’ve got to look at the silver lining. But it is because of these kind of things, because I can see a path. And that’s why we can actually have a responsible, trustworthy implementation of AI. And we don’t need to go to the doom and gloom. And it’s so incredible to see some of the godfathers of, say, deep learning, how people think about these kind of things, and Machine Learning Street Talk, Tim Scarfe and those guys. That’s why I’m so aligned with Tim as well.

49:22

Speaker 2

And I feel like they’re also trying to really get to the bottom, the truth, what makes sense, what not. So if I listen to even Yann LeCun, I don’t agree with everything, or even Karl Friston, I’m trying to figure it out. And there was a wonderful discussion between Karl Friston and Stephen Wolfram as well about this. And it was like an eye opener for him around, oh, the brain. It could be this whole free energy principle and the fact that intelligent agents want to minimize surprise. And it’s like this predictive thing. It was amazing for me to see that Stephen Wolfram is doing incredible work, but that it was something that he hadn’t really thought about as well. And it was amazing to see that kind of interaction. So I just feel there’s so many missing pieces.

50:10

Speaker 2

You can have Geoff Hinton there, and even between people that were in the same area. And my PhD was also, like Yann LeCun and others, in those kind of areas. So I’ve got an understanding of how it evolved. But it’s so interesting where people end up, because there are still narratives running, and then there’s doom, and there’s real worry. The solutions are there.

50:38

Speaker 1

Yeah. And like you said, we’re in the very beginnings of this, and it has to evolve, and you can’t expect it to be where we want it to be in the beginning. We have to guide it and make sure that it gets there. It’s interesting with what you were just saying, because it reminded me of something that Dan Mapes, one of the founders of VERSES, said to me a while back. He said, when active inference becomes available and people are using it, in the beginning, it’s not going to be super clear. The big difference between it and the machine learning, it’s not going to be that clear. But he described it this way, and I love this analogy. He said, think about the machine learning as a chimpanzee and the active inference as like a two- or three-year-old.

51:27

Speaker 1

The difference is that two or three year old is going to grow in.

51:31

Speaker 2

Intelligence, because this is the bottom up. It is a whole ecosystem. That’s why it’s so smart, the whole thing. And I was so impressed when I was first introduced to this. It was so interesting because Dan, at that time, when he reached out to me, I was building the Machine Intelligence Institute of Africa and building the community in Africa, and busy with my next-generation AI companies. And there was a lot of focus on AI-driven wellness platforms and stuff like that as well. And right now, I’m very keen on personalized AI based on the right building blocks as well. But Dan was saying at that time, we’re just reaching out and stuff.

52:10

Speaker 2

But actually, only after I wrote the book and everything, when he reached out again and I came back to him, he said I should read The Spatial Web and all of these things. And when I saw this, I just said, wow, these guys are connecting the dots here. And then I went and did my own kind of research on Karl Friston. It was so interesting. Oh, it was such a surprise for me to see that they’re actually looking at Karl Friston. Somebody’s actually looking seriously at it. Anyway.

52:45

Speaker 1

Yeah. And from what I understand, when Karl saw the potential of the spatial web protocol, it was like, okay, that’s the world.

53:00

Speaker 2

But the thing is, I was contemplating this yesterday as well, thinking about how we build scalable world models with active inference, because right now, and I’m sure in the Genius demo, they’re just programming it into the Hyperspace Modeling Language. It sits there, and then this active inference is plugged into this, and you’ve got the reasoning and everything with it. But I’m thinking about the human mind. We don’t necessarily have something that we just programmed, like the Hyperspace Modeling Language, where we just program it there. There are obviously a lot of engineering advantages, because you can share it, you can do many things. But I’m also interested in how we build world models in the network itself, not just the external structures that you need for that as well.

53:57

Speaker 2

I’m at the point where I want to figure things out, and I’m looking at what Yann LeCun is doing, because there’s something interesting there. He reckons that transformer LLMs will be replaced by these kind of self-supervised, energy-based kind of learning, because he sees the limitations. It’s clear as daylight, the limitations. But anyway, yeah, it is.

54:19

Speaker 1

It’s really fascinating. It’s going to be interesting to watch how it all unfolds. So what really makes sense to me, when you think of the free energy principle being how neurons learn, and if you think about human learning, take an infant, right? An infant is born, and all it knows is its mother. That’s its world model. And then it starts to open its eyes and it starts to take in things through its senses, right? It’s taking in all the sensory information, then it starts to learn about the environment. Oh, if I drop this on me, things like that, and it just continues to evolve with what it knows about its environment. And as humans, as we get older, then we get specialized intelligence and all different kinds of things. We learn about other humans, we learn from all kinds of interactions and stuff.

55:19

Speaker 1

To me, that’s what really makes sense, as far as we are in this constant feedback loop of taking in this sensory information and measuring it against what we know to be true, what we’ve already established as our world model, and we’re just constantly updating. And to think that AI is going to be able to do that through the sensory information of IoT and all that kind of stuff, and measure it against what it knows to be true, the context that it understands about the environment through the programmed context, I mean, it’s going to be really interesting.
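
The feedback loop described here, taking in a sensory reading, comparing it with the current world model, and updating, can be sketched as repeated Bayesian updating. The Python below is a toy version under made-up assumptions: a two-state world ("occupied" vs "empty"), a noisy motion sensor, and invented probabilities. It stands in for the general idea only and is not an implementation of any VERSES component.

```python
import math

def update_belief(belief, likelihood, observation):
    """One Bayesian update of a belief over hidden states.

    belief      : current probabilities over hidden states
    likelihood  : likelihood[s][o] = p(o | s)
    observation : index of the sensor reading just received
    Returns (new_belief, surprise), where surprise = -ln p(observation).
    """
    unnormalized = [b * likelihood[s][observation]
                    for s, b in enumerate(belief)]
    evidence = sum(unnormalized)
    new_belief = [u / evidence for u in unnormalized]
    return new_belief, -math.log(evidence)

def run_loop(belief, likelihood, readings):
    """Feed a stream of readings through the update, keeping a history."""
    history = []
    for obs in readings:
        belief, surprise = update_belief(belief, likelihood, obs)
        history.append((belief, surprise))
    return history

# Hypothetical room sensor: states are [occupied, empty],
# observations are [motion, no motion]. All numbers are invented.
SENSOR = [[0.8, 0.2],
          [0.3, 0.7]]

if __name__ == "__main__":
    for belief, surprise in run_loop([0.5, 0.5], SENSOR, [0, 0, 1, 0]):
        print([round(b, 3) for b in belief], round(surprise, 3))
```

Each reading nudges the belief toward the state that best explains it, and readings that fit the current belief produce less surprise than readings that contradict it.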

55:54

Speaker 2

I fully agree. That’s why, for me, I’m looking at various fundamental approaches to get to, not necessarily artificial general intelligence, but more intelligent kind of systems. And we have to look at what the human mind is doing, because it’s giving us so many clues in terms of what kind of intelligence we create, and how to learn from observations or from sensor data and build it up. And this is why I like Yann LeCun as well, because he’s really trying to figure it out from that, because the current systems are not doing that properly. And what I like about active inference is that it’s a bottom-up approach that’s really looking at the fundamental building blocks to actually do that in a proper way. And it’s going to be very interesting.

56:38

Speaker 2

Joscha Bach came up with this kind of artificial general intelligence, vectors of intelligence, that go from task-specific, to broad and flexible, to general, but it’s got these nine dimensions. And it would be very interesting to look at active inference intelligent agents and how well they are doing in terms of, say, reasoning. And I think it’s got all the infrastructure now to do really nice reasoning and introspection and stuff, the learning side. But then the representation is going to be interesting for me as well, because this is what Yann LeCun is doing with these joint embeddings, where basically, instead of predicting the exact thing, you’re actually predicting the representation. So from an input-output mapping. So it’s joint embeddings that you do as hierarchical, but you are predicting it. So I think there’s something about representation. Yeah. Which I don’t see yet.

57:33

Speaker 2

Obviously, I’m learning, trying to figure out exactly how we can combine the best approaches, integrate things as well. But then knowledge, language, collaboration, autonomy, embodiment, perception, these are the nine kind of vectors. I’ve got a nice slide showing that, but it’s going to be very interesting to see the evolution of, as I’ve mentioned, active inference based intelligent agents. So very excited about that. There are so many things, if you look at the checkboxes, that I just check around this whole kind of approach, because like Bert de Vries and many others, and like you as well, you also had that question: why don’t people really pick up on this? Really, they don’t see it, but this is science and discovery, and it’s these kind of boxes that people put themselves in.

58:35

Speaker 2

And it’s like, even with, if you think about string theory and physicists, it’s just, I put my whole career there. I’m going to just paint my narrative, my point of view and all my work that I’ve done. And I’m just saying, no, let’s look at it. It’s like the Elon Musk kind of approach, this first principles thinking here. Let’s say which kind of approaches have got real merit here, which is closer to reality, to truth. And that’s why I’m excited about this as well. And even people like, he doesn’t care about different kinds of approaches. He’s a cognitive scientist. I think he works for Intel there in California. You know, you just see some of his interviews and stuff. I don’t agree with everything, but there is a fascinating perspective on things, the way he’s kind of bringing things together as well.

59:38

Speaker 1

It’s interesting that you say that way, though, because to me, what that brought to mind is we’re living in a world of example right now for what the AI is going to be doing with each other as they’re learning, right? Because as humans, that’s how our knowledge grows. We have all these wonderful people, wonderful scientists, technologists in the world that are working on the same goal, but they all have their approaches to it, but that all folds together in what becomes the. I mean, that’s science, right? I mean, that’s how science works.

01:00:13

Speaker 2

Exactly. And you know what I love? There’s something else, and I’ve got a slide, but I won’t show it now just to keep it. But I don’t know if you know David Deutsch. He’s another guy. He wrote a book called The Fabric of Reality, and the last book was The Beginning of Infinity, where it’s talking about explanations. So basically, he’s talking about kind of a worldview, if you think about epistemological empiricism in support of conjecture and criticism, to lead to better explanations, reality. But anyway, he’s got this theory of kind of everything where he says quantum physics and the multiverse is one part, then theory of knowledge, Popper, Karl Popper’s stuff around that, theory of evolution, then theory of computation. And that also ties in with Stephen Wolfram and Joscha Bach talking about computational functionalism, theory of computation.

01:01:11

Speaker 2

And now they’re busy with the theory of universal constructors, but it provides a nice holistic framework. I love that kind of view. And Joscha Bach has also got this interesting view of a unified model of cognition. So what I’m trying to do is put the pieces together, because I find it surprising that you’ve got brilliant people who are not aware, because they’re not zooming out. They focus on an area, but they’re not connecting the dots. And I see, obviously, with VERSES, on a certain level they’ve connected the dots and are creating the framework, and it just shows the importance of zooming out and putting the pieces, the Lego blocks, together on a certain level, anyway.

01:01:52

Speaker 1

Yeah, that’s so true. That’s such a great point. And it’s funny, too, because we touched on this in our conversation right before we started. But one of the things that I think is happening, especially in the AI space, is sunk cost bias. People kind of feel like they’re so invested in one direction that they don’t even want to hear about another direction, even if it could complement or help or raise it all to a next level. They’re so focused. And honestly, I think that’s one of the reasons why a company like VERSES was able to come in and do what they’re doing. Because when you have larger corporations, it’s a lot harder for them to get buy-in from the executives to change directions or do something that’s unproven or a totally brand new way of thinking. So it’s kind of interesting.

01:02:47

Speaker 2

It is. No, it’s fascinating. Oh, man, there’s so many things to cover here as well. I can’t wait for the journey here, because it almost helps to create meaning, you feel. It was so interesting. When I listened to Stephen Wolfram and Karl Friston, he was almost saying, what we are doing here is almost like the opposite of homeostasis and being in comfort zones and what active inference is trying to do. But then Karl Friston was just saying, no, actually, to try and understand uncertainty and stuff is also minimizing surprise, to a large extent, the curiosity of humans. And especially if you’re a scientist or engineer or someone that’s just curious about the world, maybe you still operate on guardrails and you want to be in comfort zones, but you want to explore.

01:03:48

Speaker 2

That kind of notion of curiosity fits in still as well.

01:03:52

Speaker 1

Yeah. And a big part of the free energy principle, it’s about rationalizing the cause of what you’re taking in. Like, what is causing this? Whatever I’m sensing, whatever I’m seeing through my senses, what is the cause for that? So then you know how to interpret it and how to internalize it, and that’s what protects you. That’s what starts to give you those guardrails and give you a better sense of direction and what you should do, how you should. I don’t know.

01:04:26

Speaker 2

I’m with you. I think we’ve talked about the building blocks. I just wanted to say, the thing that I’m passionate about is, how do we make a difference in people’s lives with this? And I’m concerned where technology is being controlled by tech giants and various players, and whether we can get to a decentralized world where we’ve got personalized AI. And I’ve got this one slide where I say the age of personalized AI has arrived. Trustworthy. I’ll share some of my presentations with you as well, so you can get a better idea.

01:05:02

Speaker 1

I would love that.

01:05:03

Speaker 2

Yeah. Explainable, again, this is what active inference is doing anyway. But that’s what you want. Private. Private is very important. Just imagine if everyone has their own personalized AI that’s protecting them. It’s almost like a gatekeeper for their data vault. And if data is being monetized, or services or whatever, then it could assist in that. So while you sleep, you can maybe have an AI agent that’s actually doing things on your behalf and helping you to monetize things with a configuration that’s been specified for you. And I know with the Hyperspace Modeling Language and active inference, I can see a path where we can create this kind of stuff, but make sure it’s human-centric, it’s user-controlled, and it can train on your own data.

01:05:48

Speaker 2

And I think this is exactly the kind of, that’s why I’m excited about active inference and what VERSES is doing, because it’s creating kind of a proper building block for that as well. So it’s a nice basis for that. And then if we can just imagine, this is what I talk about in my book as well, Chapter twelve. And I’ve got Sapiens Network, where I also talk about this a little bit. It’s not just your personal AI-powered assistant, but you can actually create a network of AI assistants that can interact with one another like intelligent agents. And maybe your assistant consists of multiple AI agents, but it’s coherent and it’s doing things for you. But this thing can then talk to other, maybe, family members or within a community, et cetera.

01:06:31

Speaker 2

And you can have this on all these levels of, say, individuals, families, communities, smart towns, smart cities, companies, organizations, all sorts of different things, up to governments as well. But I do think the world is going to be more decentralized. I’m not sure if there’s going to be regions and all of it. You want to protect cultures and those kind of things as well, but if you’ve got agents that interact with one another and try to optimize on an individual level and a community level, so those are the stated goals within trustworthy AI guardrails, and it’s trying to uplift as well. And if there are areas where, say, people are suffering, or the community is suffering, there could be other communities with other AI agents that are trying to see how they can help and assist.

01:07:21

Speaker 2

It’s almost like the human body trying to heal. Almost. So I can see a world where they incentivize people for their positive contributions to civilization. All of those kinds of things speak to this MTP for humanity and the United Nations SDGs. That’s why it’s great. So for me, it’s kind of clear. The way I’ve connected the dots is just that I’ve seen these wonderful building blocks and frameworks being put in place. Now it’s about the applications and how we can really make a difference. As I’ve mentioned, can we help shape a better future and democratize AI to benefit everyone as well? I would love to see that kind of future. And that’s where I put a lot of my focus right now.

01:08:07

Speaker 1

I love that, because that’s the kind of future that I see, too. It’s interesting because everybody is talking about how all of this next era of technology is just going to usher us into this kind of realm of abundance. And I see that. And when you talk about decentralization and you talk about all of the technologies that fold into that, the Spatial Web, the HSTP is a transaction protocol. Yeah. So when you’re talking about rewarding society, rewarding human beings, individuals, for good behavior, for social contribution, for everything: for years now, we’ve had these conversations, even with government officials, on universal basic income and different things like that. I don’t think it’s going to be government that’s going to be doing that.

01:09:06

Speaker 1

I think the system we’re moving into with this technology is going to be such a system of abundance, and it will be a rewarding system. It’s going to be gamified. I mean, that’s kind of the whole thing: we’re living in a game anyway, it’s just structured differently.

01:09:26

Speaker 2

Elon Musk also talks about these kinds of sunset clauses and laws, and being more dynamic around this. And when you talked about Denton, I can just see this all implemented in smart contracts, facilitated by this. And then if it doesn’t work, we adapt quickly, we change the configuration. It’s people standing in the way of all of these kinds of things. I feel the tools are there, so we just need to move in the right direction around this. Yeah, but anyway, very exciting.

01:10:01

Speaker 1

Jacques, I know we’ve been talking for a good hour plus here. I don’t want to take too much of your time, but I have enjoyed this conversation so much. And again, thank you so much for coming on my show. This has been such a treat for me. How can people reach out to you? How can they find out more about you?

01:10:24

Speaker 2

Yeah, I’ve got a website, jacquesludik.com. There’s a little bit of a blog there, but it’s mostly on social media that I’m publishing stuff: on LinkedIn, and I’m obviously on X as well, and a bit of stuff on Facebook, but more LinkedIn, X, and the website. So they’re welcome to reach out to me and connect via any of those. Yeah, okay, great.

01:10:54

Speaker 1

Yeah. And I would love to continue this conversation in the future and just kind of monitor things, follow it as it goes along. So thank you.

01:11:06

Speaker 2

Absolutely. Me too. And I think you see so many other deeper levels to unpack as well. Personalized AI, but we can also go deeper on tech stuff, given that you’re a tech girl, a geek, a total geek, and I’m learning all the time about active inference. I’m looking forward to the whole journey with VERSES as well. Anyway, it’s very exciting.

01:11:37

Speaker 1

Well, I can’t wait for our next conversation.

01:11:42

Speaker 2

Thank you very much and have a good night.

01:11:47

Speaker 1

Thank you so much. And thank you, everyone, for tuning in. We’ll see you next time.

01:11:51

Speaker 2

Fantastic. Bye bye.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

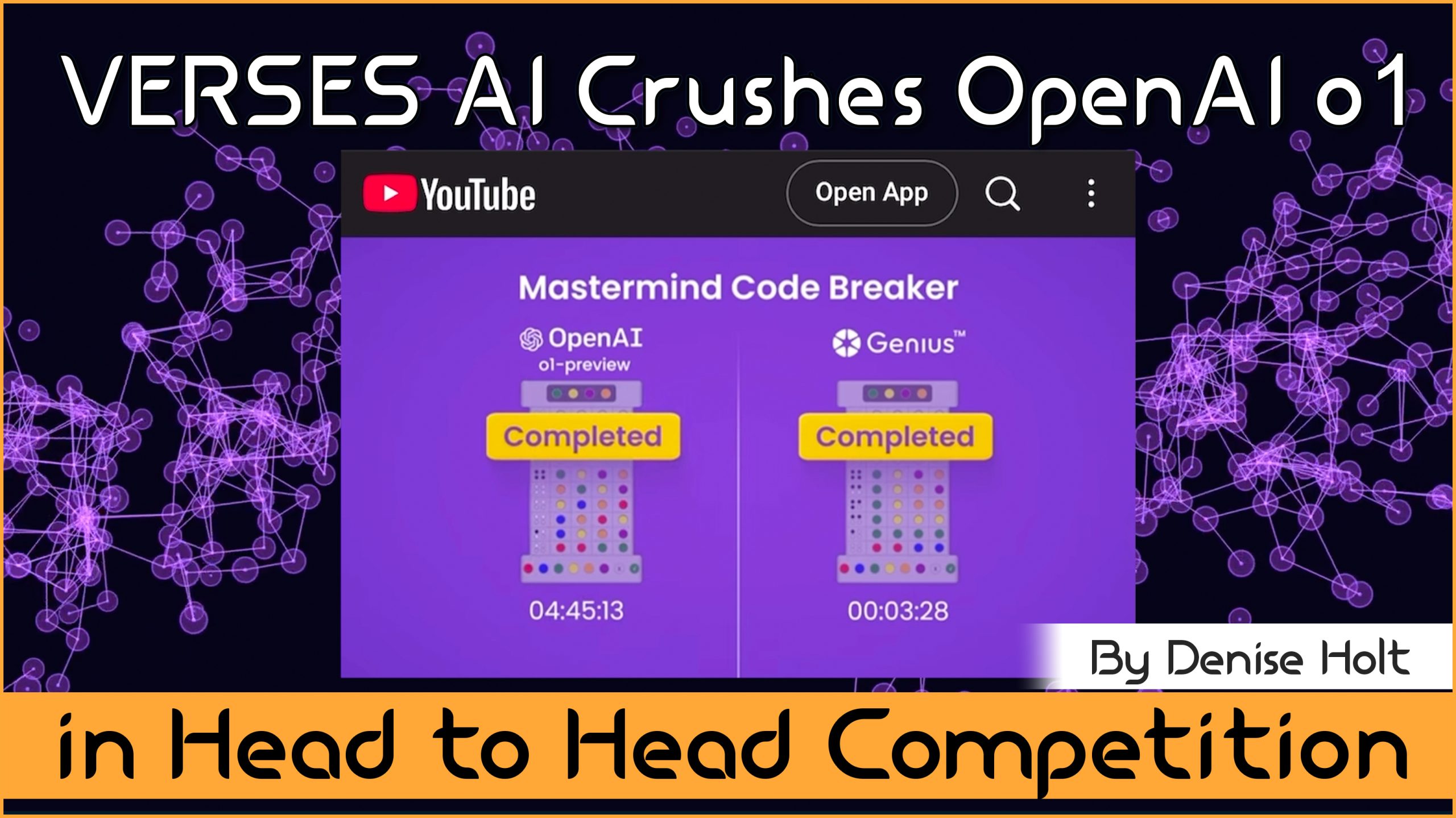

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...