
The World of AI Has Changed Forever

Dr. Karl J. Friston is the most cited neuroscientist in the world, celebrated for his work in brain imaging and physics-inspired brain theory. He is also the Chief Scientist at VERSES AI, working on an entirely new kind of AI called Active Inference AI. It is based on the Free Energy Principle (FEP), Karl’s theory of how the brain learns, which researchers in Japan have now validated experimentally.

Until now, most AI research has centered on machine learning models, which face well-known challenges. From the unsustainable practice of loading massive datasets for training to the lack of interpretability and explainability in their outputs, machine learning algorithms are viewed as unknowable, uncontrollable tools. Although they are good at pattern matching, no actual “thinking” is taking place.

The work that Dr. Friston is doing with VERSES is radically different. Active Inference AI and the FEP, coupled with the new Spatial Web Protocol, are laying the foundation for a unified system of distributed collective intelligence that mimics the way biological intelligence works throughout nature. They have created an entirely new cognitive architecture that is self-organizing, self-optimizing, and self-evolving. And yet, it is completely programmable, knowable, and auditable, enabling it to scale in tandem with human governance.

This is the AI that will change everything you think you know about artificial intelligence.

Have you ever wondered how your brain makes sense of the constant flood of sights, sounds, smells, and other sensations you experience every day? How does it transform that chaotic input into a coherent picture of reality that allows you to perceive, understand and navigate the world?

Neuroscientists have pondered this question for decades. Now, exciting new research provides experimental validation of a groundbreaking theory called the “Free Energy Principle,” by Dr. Karl J. Friston, that explains the profound computations behind effortless perception.

The study, published on August 7, 2023 in Nature Communications by scientists from the RIKEN research institute in Japan, provides proof that networks of neurons self-organize based on this principle. Their findings confirm that brains build a predictive model of the world, constantly updating beliefs to minimize surprises and make better predictions going forward.

“Our results suggest that the free-energy principle is the self-organizing principle of biological neural networks. It predicted how learning occurred upon receiving particular sensory inputs and how it was disrupted by alterations in network excitability induced by drugs.” – Takuya Isomura, RIKEN

To understand why this theory is so revolutionary, we need to appreciate the enormity of the challenge facing your brain. At every moment, your senses gather a blizzard of diverse signals — patterns of light and shadow, sound waves vibrating your eardrums, chemicals activating smell receptors. Somehow your brain makes sense of this chaos, perceiving coherent objects like a face, a melody or the aroma of coffee.

The process seems instant and effortless. But under the hood, your brain is solving an incredibly complex inference problem, figuring out the probable causes in the outside world generating the sensory patterns. This inverse puzzle — working backwards from effects to infer hidden causes — is profoundly difficult, especially since the same cause (like a person’s face) can create different sensory patterns depending on context.

The Free Energy Principle, formulated by renowned neuroscientist Karl Friston, proposes an elegant explanation for how brains handle this. It states that neurons are constantly generating top-down predictions to explain away the incoming sensory data. Any mismatches result in “prediction errors” that update beliefs to improve future predictions. Your brain is an inference machine, perpetually updating its internal model of the world to minimize surprise and uncertainty.
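The loop described above (predict, compare, correct) can be sketched in a few lines of Python. This is a minimal illustration only, not the model used in the study or by VERSES AI. The function name `infer_hidden_cause`, the learning rate, and the noise level are all assumptions chosen for the example.

```python
import random

def infer_hidden_cause(observations, learning_rate=0.1, initial_belief=0.0):
    """Revise a single belief by repeatedly correcting it with prediction errors.

    Hypothetical toy model: the belief is one number, and the prediction
    for each sensory sample is simply the current belief.
    """
    belief = initial_belief
    errors = []
    for obs in observations:
        prediction = belief               # top-down prediction of the input
        error = obs - prediction          # mismatch: the prediction error
        belief += learning_rate * error   # nudge the belief to shrink future errors
        errors.append(abs(error))
    return belief, errors

# Assumed setup: a fixed hidden cause observed through noisy senses.
rng = random.Random(0)
true_cause = 5.0
observations = [true_cause + rng.gauss(0.0, 0.5) for _ in range(200)]

belief, errors = infer_hidden_cause(observations)
early = sum(errors[:20]) / 20    # average surprise at the start
late = sum(errors[-20:]) / 20    # average surprise after learning
print(f"belief={belief:.2f}, early error={early:.2f}, late error={late:.2f}")
```

The belief starts far from the hidden cause, so early prediction errors are large. As the belief converges, the remaining errors reflect only sensory noise, which is the sense in which the model "minimizes surprise."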

The Free Energy Principle synthesizes many observations about perception, learning, and attention within a single unifying framework. But direct experimental validation in biological neuronal networks has been lacking.

To provide such proof, the Japanese team created micro-scale neuronal cultures grown from rat embryo brain cells. They delivered electrical patterns mimicking auditory sensations, generated by mixing signals from two “speakers”.

Initially the networks reacted randomly, but gradually self-organized to selectively respond to one speaker or the other, like tuning into a single voice at a noisy cocktail party. This demonstrated the ability to separate mixed sensory signals down to specific hidden causes — a critical computation for perception.
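To make the "two speakers" idea concrete, here is a hedged Python sketch of the inference problem itself: two hidden on/off sources are mixed into a set of input channels, and an ideal Bayesian observer works backwards to the most probable sources. The channel count, the 75/25 mixing ratio, and every function name are illustrative assumptions. Unlike the cultured neurons, this observer is handed the mixing rule rather than learning it.

```python
import random
from itertools import product

MIX = 0.75        # assumed chance that a channel follows its preferred source
N_CHANNELS = 32   # assumed number of input channels

def channel_on_prob(channel, s1, s2):
    """Probability that a channel is active given both hidden sources."""
    # First half of the channels prefer source 1, second half prefer source 2.
    preferred, other = (s1, s2) if channel < N_CHANNELS // 2 else (s2, s1)
    return MIX * preferred + (1 - MIX) * other

def sample_inputs(s1, s2, rng):
    """Generate one mixed sensory pattern from the two hidden sources."""
    return [int(rng.random() < channel_on_prob(c, s1, s2)) for c in range(N_CHANNELS)]

def posterior(inputs):
    """Exact Bayesian posterior over (s1, s2), assuming a uniform prior."""
    weights = {}
    for s1, s2 in product((0, 1), repeat=2):
        likelihood = 1.0
        for c, x in enumerate(inputs):
            p = channel_on_prob(c, s1, s2)
            likelihood *= p if x else (1 - p)
        weights[(s1, s2)] = likelihood
    total = sum(weights.values())
    return {state: w / total for state, w in weights.items()}

rng = random.Random(1)
trials = 200
correct = 0
for _ in range(trials):
    s1, s2 = rng.randint(0, 1), rng.randint(0, 1)
    post = posterior(sample_inputs(s1, s2, rng))
    guess = max(post, key=post.get)   # most probable hidden cause
    correct += guess == (s1, s2)

accuracy = correct / trials
print(f"recovered both sources in {accuracy:.0%} of trials")
```

No single channel identifies either source, but pooling evidence across all channels lets the observer separate them reliably, which is the computation the networks had to discover on their own.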

Notably, the researchers showed that this self-organization matched the quantitative predictions of computer models based on the Free Energy Principle. By reverse engineering the implicit computational models employed by the living neuronal networks, they could forecast their learning trajectories based solely on initial measurements. Mismatches from top-down predictions drove synaptic changes that improved predictions going forward.

https://www.nature.com/articles/s41467-023-40141-z

The team also demonstrated that manipulating neuron excitability, consistent with pharmacological effects, altered learning as predicted by disrupting the networks’ existing models. Overall, the study provides compelling evidence that the Free Energy Principle describes how neuronal networks perform Bayesian inference, structuring synaptic connections to continually update top-down generative models that best explain sensory data.
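For readers who want the quantity behind the name, the standard textbook form of variational free energy is given below. The notation is an assumption made for this sketch and may differ from the paper's: $o$ stands for sensory observations, $s$ for hidden causes, $p(o, s)$ for the generative model, and $q(s)$ for the network's current beliefs about the causes.

```latex
F[q, o]
  = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big]
  = D_{\mathrm{KL}}\big[\,q(s)\,\|\,p(s \mid o)\,\big] - \ln p(o)
  \;\ge\; -\ln p(o)
```

Because the KL divergence is never negative, free energy is an upper bound on surprise, $-\ln p(o)$. Lowering $F$ by adjusting $q(s)$ pulls beliefs toward the true Bayesian posterior $p(s \mid o)$, which is why minimizing free energy amounts to approximate Bayesian inference.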

Understanding the exquisite computational abilities of biological neural networks has important practical implications. In Dr. Friston’s work as Chief Scientist at VERSES AI, Active Inference and the Free Energy Principle, together with the Spatial Web Protocol, are being deployed to build an entirely new kind of AI. It is based on biomimetic intelligence rather than the brute-force backpropagation of machine learning, and it aims for the efficiency and generalizability of human perception.

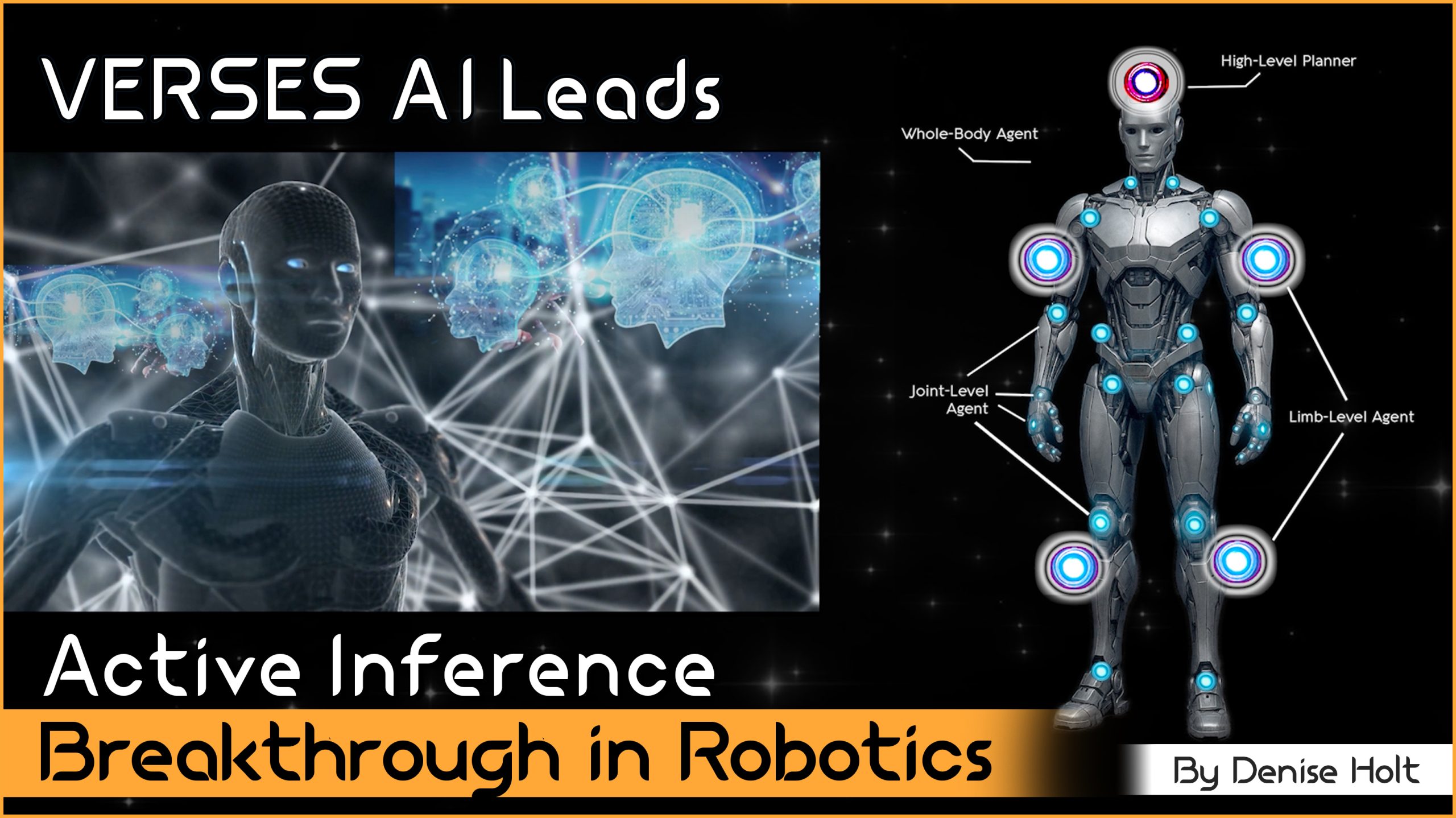

Image by permission from VERSES AI

As the researchers suggest, Active Inference AI and the Free Energy Principle, through Bayesian inference, allow a neural network to self-optimize by taking in new real-time sensory data and continuously updating on it, while still weighing its previously established outputs and determinations. The resulting predictive models enable the creation of brain-inspired artificial intelligences (Intelligent Agents) that learn as real neural networks do.

An ensemble of these Intelligent Agents, each originating from its own vantage point with specialized intelligence gained from its own frame of reference, operates within a unified global network of context-rich nested digital twin spaces. Together they afford the contextual world model that has been missing from practical AI applications. This is a critical piece of the puzzle in advancing AI research and achieving AGI (artificial general intelligence) or ASI (artificial superintelligence). Advancing such neuromorphic computing systems is a vital goal as we seek to emulate the versatility and adaptability of biological cognition in machines.

So, while understanding how your own brain works may seem abstract, this pioneering research brings practical artificial intelligence applications closer, and confirms the truly revolutionary work that VERSES AI has introduced and is leading in the world of AI. The Free Energy Principle provides a unifying theory of cortical computation, and its experimental validation in living neuronal networks marks an important milestone on the path toward building truly brain-like artificial intelligences.

Visit VERSES AI and the Spatial Web Foundation to learn more about Dr. Karl Friston’s revolutionary work with them in the field of Active Inference AI and the Free Energy Principle.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind the scenes access to the most cutting edge AI news and information.
