The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

NEW EPISODE: Denise Holt, host of the Spatial Web AI Podcast, welcomes David Shapiro, an AI researcher and thought leader, as today’s special guest.

David provides a balanced perspective on AI, recognizing its transformative possibilities alongside the associated challenges. He underscores the importance of strategic deployment and anticipates problems arising from new technological advancements. With a nod to history, David comments on society’s capacity to adapt to technological disruptions, drawing parallels with the present transition towards AGI (Artificial General Intelligence) and ASI (Artificial Super Intelligence).

David touches upon the potential shifts in the labor landscape due to rapid AI advancements, hinting at a future where the concept of ‘work’ could undergo a substantial redefinition. This brings forth the notion of a ‘post-labor economy’, prompting discussions on how individuals might find value and purpose beyond traditional work.

The conversation takes a closer look at spatial web technologies, emphasizing their utility in enhancing accountability across various domains. The dialogue navigates the intricacies of managing extensive data within a spatial web framework, underscoring the importance of context-driven AI learning and addressing pertinent privacy concerns.

Drawing insights from science fiction narratives like Ghost in the Shell and Westworld, the discussion explores the potential of brain-computer interfaces and the ethical implications of technology that can interpret human thoughts.

AI governance emerges as a significant topic of discussion, with reference to initiatives by global entities such as the United Nations and the European Union. The emphasis is on ensuring that AI development remains within ethical boundaries, with GDPR serving as a potential model for global regulatory standards.

David sheds light on the prospects of aligning AI with human values, recognizing the challenges and yet remaining hopeful about our collective capacity to guide AI’s trajectory responsibly. The dialogue emphasizes the strategic importance of embedding human ethics into AI systems, ensuring their optimal and safe deployment.

Chapters:

00:13 – Introduction to David Shapiro

01:53 – AI: Polarizing Perspectives and Realistic Approach

05:33 – Challenges and Opportunities in AGI and ASI Transition

10:58 – Future Implications of the Spatial Web and AI

17:02 – Data Governance and Personal Data as Public Good

23:02 – AI and Privacy Concerns in the Spatial Web

30:35 – Finding Meaning in an AI-Driven World

38:23 – Personal Autonomy and Choice in the Age of AI

44:06 – Managing Overwhelm in the Digital Age

50:06 – Future of AI Governance and Ethics

56:20 – Human Alignment with AI

01:03:05 – AI’s Potential Consciousness and Sentience

01:08:57 – Myths and Misconceptions About AI

01:12:10 – Closing Remarks and Future Discussions

Connect with David Shapiro on LinkedIn for consulting, speaking, training, or collaboration opportunities. https://www.linkedin.com/in/dave-shap-automator

Include a note when connecting on LinkedIn to provide context and purpose.

Check out our other Episodes and watch all the videos in the Knowledge Bank Playlist to learn more about the Spatial Web and Active Inference AI.

Keywords: AGI, ASI, AI Governance, Spatial Web, AI ethics

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early, behind-the-scenes access to the most cutting-edge AI news and information.

00:13

Speaker 1

Hi and welcome to another episode of the Spatial Web AI podcast. Today we have a very special guest. We have David Shapiro, AI researcher and thought leader and just overall really smart guy. David, it’s such a thrill for me to have you here on the show. I’d love it if you just maybe introduce yourself to our audience.

00:38

Speaker 2

Yeah, thanks, Denise, for having me. My name is David Shapiro. I have been in IT infrastructure and cloud automation since 2007, and an independent AI researcher since 2009. And then of course, we’re in the era of GPT-2, GPT-3 and now GPT-4. So pretty much the rest is history. But yeah, I’ve been an independent researcher and technologist for quite a while. And then just about 16 to 18 months ago, I got on YouTube to spread the word, basically, and that’s how we got to where we are today.

01:12

Speaker 1

Awesome. Well, I’m so excited to have you here because I have a lot of questions and I know you’re the one to ask. So I’ve watched a lot of your videos, I’ve heard a lot of your talks on AI and what you see for the future of AI. And so a lot of people talk about kind of the fears of, oh, this may happen, or that kind of thing. And I don’t see a lot of people out there really putting out the message for all the positives and all the great things that AI can do for us and for humanity and our civilization. So what do you see as the biggest advantages for AI?

01:53

Speaker 2

Yeah, so you’re right, it’s incredibly polarizing, right? And there’s a few videos out there, commentators and big names. Certainly there’s really zealous advocates on one side and then there’s the doomsayers on the other. And it’s really rare that we see something that is this polarizing, right, where people are saying, like, this is going to save everyone, it’s going to cure everything, or it’s literally going to kill everyone. So I try and take a more realistic approach and I don’t try and downplay the dangers. Whether it’s just innocent misuse or mistakes, there’s always the potential for harm. Then of course, there’s wanton misuse, people deliberately misusing new technologies, because all technologies are dual use, whether you build a nuclear bomb or a nuclear reactor, whether you build better medicines or bioweapons, right? All technology is dual use.

02:50

Speaker 2

And so with any technology, whether this is AI or whatever else, you always need to be very careful and very deliberate about how you deploy it. Now, in terms of where I’m at on the spectrum: as a career problem solver, because even before the rise of AI, that was what I based my career on, being able to solve problems that other people couldn’t, I have not found any evidence that any of the problems confronting us are unsolvable. And every time someone says, well, what about this? It’s like, okay, well, you’ve identified the problem. That’s half the battle, right? People will complain about, like, hey, mesa optimization, which, for anyone who’s not familiar, is a phenomenon inside of deep learning models where the model optimizes for something other than what you think it is.

03:39

Speaker 2

It kind of like has an internal layer that’s not necessarily deceptive. You might perceive it as deceptive where it’s like, okay, I’m giving you the answer that you want, but only because I know that’s what you think you want and I’m working around it. But if you’ve articulated that problem and you’re working on that problem, that means it’s a solvable problem. And this has been the nature of science since forever, where you solve one problem at a time and you move on to the next one. Now, that being said, with artificial intelligence, we are confronted with the possibility of creating something much more intelligent than ourselves. And that raises all kinds of other questions, existential questions, like what is the point of being alive?

04:20

Speaker 2

I think Elon Musk had a tweet a few weeks ago, it’s called X now, but what did he say? It was something like, if AI is smarter than all of us, what’s the point of being alive? Or something like that, right? It just summed it up in one tweet, which really touches on the existential dread that some people have. And so in many cases, I think that a lot of people might just have a problem articulating that that’s their fear. And so I’m here to validate that that’s a legitimate fear. But at the same time, society has gone through huge changes in the past. 200 years ago, 90% of people were farmers. I don’t know about you, but I don’t want to be a farmer. So I’m glad that we went through that change, right? An entire way of life was destroyed.

05:02

Speaker 2

And I’m sure for the people that lost their jobs, lost their farms, had to leave their farm and go to the city, it was awful, right? And I’m not saying that their suffering was worthwhile or that it was righteous or noble. I’m saying they suffered and it really was awful for them. But in hindsight, in retrospect, I’m glad that society went through that change. And so that’s why I’m neither a doomer nor a hardcore optimist. That’s my overview of kind of where we’re at and where we’re going.

05:33

Speaker 1

Yeah, and I agree with you, and I see this future that’s going to be improved for humanity, but I know there’s going to be a little bit of a painful transition in that period too. So I don’t want to diminish that by any means. So what are some of the biggest hurdles that you see that we need to overcome as we’re transitioning into this space of AGI and then even ASI, superintelligence?

06:07

Speaker 2

If you’d asked me, like, a few months ago, I would have given you one set of answers, like, we need to figure out autonomy, we need to figure out yada yada, a litany of technical and scientific problems. But of course everything’s moving lightning fast, so I don’t really see any technical barriers anymore, especially given the rate at which AI models are improving. Really the biggest barriers are those of organization and communication. And so what I mean by that is, first and foremost, there’s just so much news happening all the time. There’s hundreds and hundreds of startups, there’s 5000 AI papers per month being published right now and that’s probably going to be 6000 next month. And so you have this rate of change that is a decentralized tidal wave. You can’t stop it. And so then how do you manage that?

07:01

Speaker 2

And it’s like, well you got to learn to surf, right? You got to stay on top of the wave because you can’t stop it, you can’t push it back. So that’s one of the main problems. And then of course there’s always going to be lagging indicators and people failing to adapt. There’s always people out there that are kind of in denial about what’s happening and the magnitude of it and I’m like, look guys, your way of life is going to change whether you like it or not. And some people they’re just like, well I am skeptical. And of course people trust their own opinion more than they trust the news or the facts or whatever. That’s a whole other can of worms.

07:41

Speaker 2

But those coordination failures, those communication failures I think represent probably the biggest risk because by the time some people are aware, it’s going to be too late. And so I was just listening to a podcast earlier, one of the bigger names, and I’m listening to all the entrepreneurs, the people with billion plus dollar companies and even people in the industrial space are really getting on board with generative AI, spatial web decentralization. And so these are the people that are connected in that are the real movers and shakers in the industry. And in the podcast I was listening to earlier they were talking about like oh yeah, we’re going to automate as much of the factory as we can, as much of the logistics chain as you can. And what they weren’t saying though is what happens to the people who lose their jobs?

08:32

Speaker 2

And from a business perspective it’s not really their job. Every business has an obligation to their shareholders or customers or whatever to provide goods and services for cheaper. That’s it, that’s the only objective function they have. But here’s the thing, to sum it all up. In the past we had this notion that technology creates more jobs. It’s not true, that was never true. What technology does is that it lowers the cost of goods and services so that money can be allocated elsewhere, which creates new sectors, new demand. So take that example that I gave a minute ago, where 200 years ago people were farmers. Suddenly there was knowledge work, there was the service industry, and so then people’s food was cheaper, their homes were cheaper, so on and so forth.

09:19

Speaker 2

They could then afford to buy consumer goods and electronics and other stuff. So that created new demands, not necessarily new jobs. And so this is the primary shift that I think that people have yet to wrap their heads around. And I call it post labor economics, which is we’re not going to be working anymore. So then how does the economy work? If robots and machines and everything take all of our jobs, how does the economy function? It’s going to function. We got to figure it out. And that is an unsolved problem.

09:50

Speaker 1

Yeah, and it’s interesting, because when I think of what the spatial web is opening up with this evolving internet, we move from a library of websites and web pages into spatial domains where every single thing in any space becomes a domain. Permissions are at every touch point, context is baked into every touch point, and it’s informing the AI, right? So when I see this type of a system, and then no jobs, and we have to find new meaning, businesses and corporations also have to find new ways to insert themselves into the lives of the consumers. Because advertising, all the stuff that we’ve seen before, is not going to work. They’re going to have this native experience. You bring in, like, cryptocurrencies, value exchange versus fiat, and decentralization, all this stuff. I kind of envision a future.

10:58

Speaker 1

Obviously it’s going to take a while to get to that point, but where our daily lives just start rewarding us. The system itself is giving us value back for interacting with the system. And we could have this future where you wake up, you make your bed and then you’re rewarded. You’re not even aware these things are really happening, but they’re happening. And it could be the sheet manufacturer, the bed manufacturer, where part of their marketing dollars are now in rewards. I definitely see there’s a lot of options for this future of innovative ways where...

11:35

Speaker 2

Yeah, I just thought of a good example while you were kind of talking through that. There’s a construction site near my house. As with pretty much anyone in any growing city, you’re going to be surrounded by construction. And every now and then there’s screws or nails that are in the road, and every time I see one I pick it up and make sure that it’s not in the road anymore so that somebody doesn’t pop their tire or whatever. And so I created value, or rather I protected value, that I’m never going to be rewarded for.

12:07

Speaker 2

And so one of the things that I’m really excited about in terms of spatial web 3.0 is what we used to call Internet of Things and fog computing back when I was at Cisco. Fog computing is fortunately a term that died a very quick death. But the idea was that it was all around you all the time, right? And that’s kind of the spirit of what we’re building towards. And the idea that you could track everything, that some sensor could say, like, oh, hey, there’s a broken fence over there, someone go fix it, or there’s something in the road. What was it?

12:42

Speaker 2

There was a case, this was many years ago, on the highway near me where a box of metal parts fell off the back of a truck, and it shut the entire highway down for hours, right, because the state troopers had to stop traffic and clear the road. You go over it with a magnet sweeper, and it’s like, how many millions of dollars of productivity were lost because someone made a dumb mistake, right? And I was pretty frustrated. For me, it was an inconvenience because I was stuck in traffic for an extra 45 minutes. But what if there was a life-saving physician who didn’t make it to a surgery for a newborn because of that, right? So then it’s like a matter of accountability. And of course, you don’t necessarily want to use a new technology just to punish people.

13:26

Speaker 2

But what if some of these technologies could have said, like, if there was an overpass camera and it phoned him, the driver of that truck, and said, hey, you’ve got an unsecured box. Pull over. Fix that. Because it’s about prevention. And so then there’s all these ideas of, if you had the information, how could you use it? Right? And it’s a matter of gleaning all that information from all the different layers of reality that are all around us. I was on a call earlier with a prospective client who’s building, like, a data platform to facilitate this stuff. I don’t think that they had realized. I was like, you guys are building a spatial web platform? And they’re like, what do you mean? I just sent them a link to spatial web. I was like, this is what you’re doing, right?

14:09

Speaker 2

How do you coordinate? Because what did they call it? They called it like a context driven AI learning platform. I was like context. Right? But the thing is, there’s so much information to keep track of in the world, right? This was something that my cousin, many years ago, he’s an electrical engineer, started putting sensors on dumpsters, right? So that was like a really early version of spatial awareness, where it could record every time someone threw a bag into the dumpster and predict when the dumpster was full so that the dispatch would only send trucks out to pick up dumpsters once they were full. But that was a really specific use case. Now, imagine if you can have more general purpose things, any camera, any microphone, kind of detect the presence of whatever needs to be done, and make that a public good.
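The dumpster example can be sketched in a few lines of Python. This is a minimal illustration only, not the system described in the conversation: the `DumpsterSensor` class, the bag-count capacity, and the 90% dispatch threshold are all hypothetical, and the prediction is a plain linear extrapolation from the average drop rate.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DumpsterSensor:
    """Hypothetical sensor that logs each bag thrown into one dumpster."""
    capacity_bags: int  # assumed number of bags that fit before it is full
    drop_times: List[float] = field(default_factory=list)  # hours since last pickup

    def record_drop(self, hour: float) -> None:
        """Log one bag drop at the given time (in hours)."""
        self.drop_times.append(hour)

    def fill_fraction(self) -> float:
        """Estimated fill level between 0.0 and 1.0, based on bag count."""
        return min(1.0, len(self.drop_times) / self.capacity_bags)

    def hours_until_full(self) -> Optional[float]:
        """Linear extrapolation from the average drop rate seen so far."""
        if len(self.drop_times) < 2:
            return None  # not enough data to estimate a rate
        elapsed = self.drop_times[-1] - self.drop_times[0]
        if elapsed <= 0:
            return None
        rate = (len(self.drop_times) - 1) / elapsed  # bags per hour
        remaining = self.capacity_bags - len(self.drop_times)
        return max(0.0, remaining / rate)


def needs_pickup(sensor: DumpsterSensor, threshold: float = 0.9) -> bool:
    """Dispatch a truck only once the dumpster is (nearly) full."""
    return sensor.fill_fraction() >= threshold
```

A dispatcher would call `needs_pickup` for each dumpster and route trucks only to the ones that return `True`. A real deployment would likely weigh bags by size and account for time-of-day patterns rather than assume a constant rate.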

15:04

Speaker 2

That is really what I’m most excited about. And of course, there are a tremendous amount of technical hurdles to achieve that future. I remember that was actually one of the original promises of Bluetooth when it was first published many years ago. It was, oh, hey, there’s this open protocol that can allow all your devices to talk to each other. And of course, we had just this utter failure of imagination back in the day where it was like, your dishwasher will talk to your fridge. And we’re like, why? Who cares? Right? Because that was in the early 2000s, and that was as far ahead as we could think. But anyways, yeah, so there’s a tremendous amount of opportunity, but then it opens up all kinds of cans of worms. Like privacy, number one, first and foremost, I think is probably the biggest thing.

15:46

Speaker 2

And that’s what I talked about with my prospective client earlier. It was like, okay, so you’ve got the context, but then how do you keep track of who knows what and who is privileged to what information? So there’s a number of problems still there.

15:59

Speaker 1

Yeah. So to me, that’s one of the advantages, the really cool things about the Spatial Web Protocol, HSTP, the Hyperspace Transaction Protocol, because in the spatial realm, everything is permissionable at every touch point. So then you can program in credentials and all kinds of information about ownership and who can access, and it will create this future where you can be in control of your data realm, and you can even give permissions that have expirations on them, all that kind of stuff. So I feel like these issues that we see, a lot of them are because we’re in this Web 2 environment. We’re still in this World Wide Web where we have limitations, and I really feel like a lot of benefit is going to come from this evolution.
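The idea of permissions that expire can be illustrated with a small Python sketch. This is not the HSTP API or anything from the Spatial Web specification. The `Grant` and `DataDomain` names, the fields, and the check logic are all assumptions made up for illustration of an owner handing out time-limited access to a resource.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class Grant:
    """Hypothetical record: who may do what to which resource, and until when."""
    grantee: str
    resource: str
    action: str
    expires_at: datetime


class DataDomain:
    """Hypothetical owner-controlled data realm holding time-limited grants."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self._grants: List[Grant] = []

    def grant(self, grantee: str, resource: str, action: str,
              ttl: timedelta, now: datetime) -> Grant:
        """Give `grantee` permission for `action` on `resource`, lasting `ttl`."""
        g = Grant(grantee, resource, action, now + ttl)
        self._grants.append(g)
        return g

    def is_allowed(self, who: str, resource: str, action: str,
                   now: datetime) -> bool:
        """The owner is always allowed; others need a matching, unexpired grant."""
        if who == self.owner:
            return True
        return any(
            g.grantee == who and g.resource == resource
            and g.action == action and now < g.expires_at
            for g in self._grants
        )
```

Passing `now` explicitly keeps the check deterministic and easy to test. A real system would also need revocation and verifiable credentials, which this sketch leaves out.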

17:02

Speaker 2

That makes sense. Yeah. And it’s like kind of what you described I was imagining, like, geofencing my house, right? But then in a Spatial Web 3.0 environment, then it’s like, okay, well, Dave, you are registered as the owner of this piece of property. So then any data that originates or takes place in there, you ultimately have some rights to in terms of privacy or profiting from or whatever. I think that there’s a tremendous amount of opportunity there. One idea that a lot of people have proposed lately is the idea of data as a public good or personal data governance as a way of kind of participating in the economy. And of course, data is the new oil, and there’s a million ways that data can be used, and I’m not even familiar with all of them.

17:54

Speaker 2

But it strikes me that having that sort of point of origination governance and tracking of data is really important to building that new economy again, even if you can address it. And even if you can assign privileges and permissions. I guess the thing that I haven’t figured out yet is, okay, well, how do you balance privacy with that reward for participating? And maybe that’s the trade off.

18:28

Speaker 1

Right. And honestly, that’s kind of what I see is that right now, we give away all our data. We don’t really have a choice, but we will have a choice, and there will be incentives that appeal to us enough to trade data, trade some of that privacy, but it’ll become a choice, a personal choice versus complete lack of empowerment.

18:56

Speaker 2

That makes sense. Yeah.

18:58

Speaker 1

What do you think that will look like? Because I often look back, too, at when social media first started, and it was like stranger danger, don’t put your picture on the internet. And so I think, too, as we evolve with technology, we actually become freer with our ideas around it.

19:22

Speaker 2

Yeah, I’m reminded that there’s a whole raft of studies that talk about how behavior and even cognition change when you know that you’re being watched. And I am a very private person. And yet I have 66,000 subscribers on YouTube, and so I remember watching the numbers, and then my wife was like, people have spent more time looking at you than I will look at you in our entire life together. And it’s just like, this is the world that we live in. And some of it is the level of comfort, like, just becoming familiar with having a public versus private life. And then in a previous podcast that I was on, we talked about this idea of the value or danger of being online all the time versus, what did he call it? But basically having islands that are like black holes of information.

20:21

Speaker 2

And I said that actually, you probably would want that. You’d probably want some places where you know that there are no electronics, that there are going to be complete reservoirs or whatever going out into nature, basically, or even in urban or suburban areas. And I’m not saying one way or another, I’m not saying like, oh, spatial web is going to have to account for this or this is going to be a problem. It’s just an observation to think about because in many places in the world, you’re offline, right, where I grew up, right, you go dark. And I think that’s a good thing, though. I think that we’ll probably want to conserve some of that. Whether it’s like, I actually fully predict that some buildings as part of their privacy or whatever, it’s going to be like, you know, how there’s no firearms allowed.

21:12

Speaker 2

It’ll be like, no spatial web allowed or whatever in some buildings for privacy reasons. So I don’t know. The reason that this is all top of mind is because one of my best friends is like, he’s like a privacy nut. His two favorite topics are like, guns and privacy. And, oh, by the way the Clintons are all in control of everything. So I agree with him on the privacy thing, not the other two. But the idea is that privacy is actually really important and it’s a balance. There’s a bunch of other podcasts. There’s actually like married couples from the CIA have podcasts online now. This is a crazy time to be alive. Talking about privacy versus this is the world that we’re heading towards.

21:55

Speaker 1

So the big lead rooms?

21:58

Speaker 2

Yeah, pretty much. Pretty much.

22:01

Speaker 1

Wow. Do you really think that’s going to happen though? Because I don’t know, I see the desire for it and I see the need for having spaces. You can go where you’re not connected.

22:23

Speaker 2

Right. As long as it’s opt in and opt out, as long as you can toggle a switch, right? And, you know, this is a non-entity, and Google has been working on this for many years, like when they realized, you know, that having their vans drive around with cameras was a big privacy thing. And so then they came up with AI to blur out faces, right? And so this is not necessarily a new problem in that respect. You have to opt in if you want to participate. And I think that having that as kind of a standard could very well suit some people. Suit many people.

23:02

Speaker 2

And I don’t know which side of the fence I would fall on right now, because imagine a future where it’s like, okay, well, Dave, every time you go out in public and you go to the grocery store, the store is watching you to figure out what products you’re looking for. And that data is going to be used to better serve your area. And you’re going to get compensated just by going to the grocery store and picking out your groceries. Okay, you’ll compensate me for something that the grocery store knows already anyways, right? But on the other hand, I’m aware of how information can be misused. In the past, everything from library records to land records has been used for genocide, right? And so that is really kind of terrifying.

23:51

Speaker 2

And just because it’s convenient and you have incentives to participate in that system, that doesn’t necessarily mean that it’s automatically a good thing forever. Right? And so one thing that is increasingly popular in blockchain technologies is ZKP zero knowledge protocols or whatever. Sorry, zero knowledge proofs. And so the idea that you can have some information that is permanently private that can still be useful, I’m not convinced that technology is mature yet, but there’s certainly a lot of energy being put into that kind of research. But if that gets solved, then I think that there will be a lot more comfort in participating. Opt in versus opt out in terms of do you want to be on the grid, yes or no?

24:43

Speaker 1

Right. And to my understanding, the spatial web protocol enables an ecosystem of self-sovereign identity and zero-knowledge proofs. So it’d be interesting to see that. Now, the idea of connecting or disconnecting by choice. What happens when we get to brain-computer interfaces?

25:12

Speaker 2

Well, I’ve been a fan of science fiction for a long time, and there’s an anime called Ghost in the Shell, and hacking people that are on the grid is actually something that is central to that. And now I’m playing Cyberpunk 2077, where, also, anyone on the grid can get hacked at any time. And that’s pretty terrifying. Let’s just say I’m not going to be an early adopter of BCI, of brain-computer interfaces. And if the technology gets proven out and it gets proven safe, and then you have like a switch or something where you can just say cut off all radio signals, basically you need some failsafes built in, probably more than one. Actually you probably need several failsafes, automatic failsafes, voluntary disconnects, that sort of thing. In my history as an IT infrastructure admin, there’s a concept called defense in depth, the cybersecurity principle.

26:18

Speaker 2

And so basically you look at security as layers of an onion, right? There’s physical security, there’s network security, there’s all kinds of layers. And then there’s also the concept called air gapping. And so air gapping is when there is no physical connection between one system and another. It’s literally not possible unless you take a thumb drive from one system and plug it into another. Right now all of our brains are air gapped from each other. The only way that I can talk to you is through words and voice and hand signals and whatever. But you have several layers of agency and security before any information even gets into your brain. And so I would need to see a lot of defense in depth protocols around BCI before I would be comfortable with it.

27:07

Speaker 1

Yeah, it’s crazy, because that makes me think of the stuff I’ve seen lately with what they’re doing with machine learning and MRIs, being able to have you watch a video and then literally, just by reading your thoughts, they’re able to replicate the video. And it’s so close to what you actually were observing, just from the pattern recognition in your brainwaves of your thoughts. That’s kind of interesting, because when you’re talking about the separation, your thoughts are protected within your...

27:46

Speaker 2

Might not be. That was actually one of the plot points in Westworld. I don’t know if you ever watched the HBO TV show Westworld.

27:53

Speaker 1

I watched a few of the things.

27:56

Speaker 2

And at the time I thought this was so contrived and I was like, this is so dumb. But what they said, and it was a relatively small plot point, was that all the hats that they had the guests wearing were scanning their brains for the last few decades. And that was how they got the good models of human brains. And I’m like, that’s not possible. It’s not that easy. And then here we are a couple of years later, and it literally is that easy to record brain... Almost that easy to record brainwaves.

28:22

Speaker 2

And the thing about that technology, brain imaging and being able to reconstruct images, thoughts and words: I think there was one where they were able to reconstruct an image just from recall, asking the subject to think about something, not actively see it, but think about it, and then infer what they were recalling. So that level of insight, of inference into someone’s brain, kind of reminds me of actually the dawn of psychology. So back in the days of Sigmund Freud and Carl Jung, the idea that you could infer what was going on in someone’s mind from their behavior, from their words, from unconscious tics, was actually offensive to the Victorians, because to them, you know, stiff-upper-lip Victorian society.

29:14

Speaker 2

The idea that your thoughts were intrinsically and forever private and that someone could infer what was going on in your mind, the contents of your mind or your experience from something that got out was really terrifying to them. And so now we’re taking that to the next level with technology. I’m sure we’ll get used to it. Just as the Victorians got over themselves eventually with psychology and everything else, now we have professional lie detectors and behaviorists and that sort of stuff, and it’s commonplace.

29:43

Speaker 1

Yeah. So interesting. So you brought up something interesting earlier when you were talking about Elon Musk and his, you know, where do we find meaning? So AI advances, everything is automated. We’re in this world now where literally we are living AI adjacent, whether it’s before the brain interface, where we’re just adjacent through our devices and all of that. I mean, obviously, when you talk about all this new data and you talk about all these data points and everything else, it’s just increasing and becoming very overwhelming. So we’re going to need AI to parse it all for us. That’s going to become very necessary. So we’re in that state, jobs have kind of gone away. How are people finding meaning?

30:35

Speaker 2

Yeah, so I think that society is ready for this, because some of the anxiety is there. And putting words to it, I think I mentioned this earlier, or I alluded to it earlier, is that people are afraid of being irrelevant, right? If just jobs go away, then people’s biggest fear is: how do I take care of myself? How do I take care of the people that I care about? But above and beyond that, in a world of superintelligence where machines are a million times smarter than all humans combined, and to Elon’s tweet, like, what is the point of even existing if you’re basically as dumb as an ant compared to the machines? The evident truth, I’m not going to say self-evident, but what seems to me to be evident, is that humans will become irrelevant.

31:31

Speaker 2

But here’s the trick: we were always irrelevant from a cosmic perspective. We only convinced ourselves that we’re important and that we’re relevant and that we’re super special. And that preoccupation with our own specialness and uniqueness, which is embedded not just in religious narratives, it’s embedded in all kinds of narratives around the world, really has caused a lot of problems. I just started reading Sapiens by Yuval Noah Harari. I think I said his name right. I’m terrible with names sometimes. Anyways, and he talks about how the speed of our evolution is actually really problematic because 100,000 years ago or 200,000 years ago, we were in the middle of the food chain. And so we ate things that are smaller than us. But then around 100,000 years ago, we got really good with weapons and we started working our way up the food chain.

32:31

Speaker 2

But we’re still really insecure. We’re like anxious chimps that happen to be at the top of the food chain. And so now we’re like, what are we doing here? And so we’re just scared all the time, which is why we all have anxiety and depression. And so he didn’t say it quite like that, but that’s kind of the inference that I’m drawing. And so we had this rapid cognitive explosion of not just tool use, but society ultimately, to science and everything else. And so we have the ability to ask these gigantic existential questions, why are we here? What does it all mean? And then basically fabricate answers, right? We can come up with narratives and stories and myths and religions and whatever else. I’m not going to say that we’re not cognitively equipped to answer that.

33:15

Speaker 2

What I’m saying is that we have a tremendous amount of intellectual and cultural baggage, that we’ve been asking about meaning for such a long time. And what we’re coming back to, actually, is this kind of leap back 200,000 years to where we’re just going to be happy to have food and company and friends and a trip down to the lake. That’s how we’re supposed to live, because that’s how we lived for literally millions of years until we accidentally tamed fire and tools. And it was Douglas Adams who said, like, we should just go back to the trees and go back to the living. Living simply, I think, is the answer that a lot of people are going to find. Now, that being said, living simply isn’t for everyone, right? Not everyone wants to live a cottage core existence or slow living or whatever.

34:06

Speaker 2

One concept that I’ve been working on is the importance of mission. And so having a mission in your life is that’s where I’m at. So I have a very clearly articulated mission that is not something that AI can dislocate yet. And if it does, great. Mission accomplished. But there are any number of missions that you can have that AI will never be able to take from you. So one example that’s very personal is a girl that I used to date. Her mission was to become a mom. That was what she wanted most in life. And so she became a mom. That was her jam. There is another person, another girlfriend that I used to date. Actually, I’ve dated a lot of people where her mission was to write a beautiful coming of age story. It’s a great mission, but it was her mission. Right.

34:58

Speaker 2

Even if AI can write it for her. That’s not the point. And so back to basics living. Sure. But I think in terms of meaning, I think that a lot of people are going to find that they’re going to be empowered by AI to pursue those missions, whatever they happen to be, whether it’s personal achievement.

35:18

Speaker 1

Right?

35:18

Speaker 2

Right. Yeah. So some people, like, I want to climb Everest or I want to run an Ironman, or like, those are all completely valid missions that can give people a tremendous amount of meaning in their life even without any kind of cosmic meaning. So that’s kind of where I’m at in terms of understanding how society might change in light of AI and everything.

35:41

Speaker 1

Yeah. And I feel the same: purpose and making a difference. And I think we’ve been so geared to scratching and clawing for survival for so long that it’s hard for us to think of other ways. But I think once that’s removed and we’re out of this kind of like, stress environment, making a difference is going to look different. And I know this sounds really utopian when I talk like this, but to me, I see when we’re not in the scratching and clawing for survival kind of scenario, I feel like people will look a little more outward to their communities and how they can help each other and make a difference with each other.

36:25

Speaker 1

And I feel like it’s going to bring these qualities that kind of increase empathy and different things like that because we’re not going to be so much in this competitive mindset, more of like.

36:38

Speaker 2

It’s switching to an abundance mindset. Right?

36:40

Speaker 1

Yeah.

36:41

Speaker 2

So it’s going from scarcity to abundance. And the first time I learned about this was actually in the non-monogamy community. So in the polyamory community, one of the things that they teach you when you’re learning about non-monogamy is going from a scarcity mindset of love to an abundant mindset of love and realizing that there is actually more affection to go around. And that the only barrier is thinking that you can only love one person at a time or that you can only enjoy one person’s company at a time. And it’s a completely artificial type of scarcity. And I think that represents a good model of going from a scarcity mindset to an abundant mindset. And people are like, oh, well, there’s no resources that are hyperabundant. It’s like, well, air is, right? You don’t have to pay for air or sunlight.

37:31

Speaker 2

And so we have plenty. Or ground, like dirt, literally the term dirt cheap, right? Because there’s so much of it, you don’t have to pay for it. And so we do have models of abundant goods and services, nearly hyperabundant goods and services, but yeah, certainly pivoting to that mentality. And I’ve been working on practicing what I preach. I was a heck of a workaholic. Since retiring from my corporate job, I actually worked more even though I didn’t need to because I was just so fixated on my mission. And over the last couple of months I’ve been working on slowing down and actually, like I said, practicing what I preach and moving more towards that. Like, well, why are we here? What is my goal now? And so I’ve had just a few breakthroughs.

38:23

Speaker 2

One breakthrough a few weeks ago was just that realization that we never needed meaning in the first place. That meaning was always unnecessary. And it was just like I would literally lay awake with that just like on repeat in my brain because it felt so profound that it was stuck in my head until I started living it. And then just in the last few days, the other biggest thing, because I was like on the edge of burnout. I’m like, why am I still in burnout? Because I have total control over my schedule. And then I realized that it was choice. It was my choice to be where I am. And so I was like, oh.

39:02

Speaker 2

I had been stuck in a mindset of not having agency for so long, working for companies or working for other people, or being told what to do, or just being in situations where I didn’t feel like I had control, that I was still in this state of learned helplessness. And then I realized, like, wait, that’s not true. I’m actually in control of everything in my life right now. And that was another profound realization. And ever since then, I’ve been just in so much better shape. And so I think that a lot of people oh, go ahead.

39:34

Speaker 1

I was just going to say that reminds me of that meme that I saw that said, a Rolex isn’t a flex if it tells you when your lunch break is over.

39:44

Speaker 2

That’s a good one. That’s a good one. Yeah. If all it is is just a trinket that you’ve collected in the rat race, is it really worth it? And there’s a lot of YouTubers and a lot of influencers and social commentators out there that are doing these experiments. One of my favorites is this YouTuber called Sorel and she started this Free Human Project. And so the Free Human Project is like she goes around and interviews people, usually other creators, not always, and she talks to them. Are you a free human? What does that mean? How did you get out of the rat race? And a lot of these people actually work harder than anyone at a desk job, but they work harder because it’s fulfilling to them and it speaks to their autonomy.

40:35

Speaker 2

And I think that a lot of people are actually afraid of that autonomy because it’s like, well, what am I going to do with all that free time? And it does take a while. It’s taken me about six months to figure out, like, okay, well, now what? How do I live? And that kind of thing.

40:53

Speaker 1

Don’t you think, too, that a lot of that has to do with intrinsic motivation for people, too? They’d rather be told they have to do this and this in this order kind of a thing because when they’re left to their own devices, they don’t have the motivation for it. And maybe it comes down to what you were just referring to, that it has to be something you’re passionate about and that you love. The best work, work that you love, is the best sort of play.

41:23

Speaker 2

Because I’ve thought about that idea that it’s like, okay, well, people just need structure. They need to be told what to do. And I certainly think that some people thrive with more structure, right? Everyone knows someone or has friends of friends that were like just complete nutcases during high school, and then they went and joined the military and they got their life together, right? Some people just really need structure and discipline and something outside of themselves, right? And then those of us that are crazy artists, we completely chafe under structure, right? Structure is like, toxic for us. So I guess the first part of the answer is that it varies from person to person. But the second part of that answer is that I think that a lot of it is just learned.

42:05

Speaker 2

I think that it’s habit and I think that it’s inertia because the idea of having like a nine to five, right, it provides a little bit of comfort in that surety. And just that daily routine, the schedule, the regularity, it’s predictable, it feels comfortable and safe. But at the same time, I’ve got a few friends in the area that are still technically in the nine to five, but it’s more like 10:30-ish to 2:30-ish and then a couple of meetings here and there. And one of my best friends, we were working on his sailboat in the middle of the workday, and he had done everything he needed to do. And so then it’s just kind of letting go of those expectations and those pressures.

42:53

Speaker 2

But I think that probably, maybe not if, but when more people kind of break free or step back, like say, for instance, we get to a four-day work week or a 20-hour work week, which there’s lots of experiments going on. And I think that’s coming soon, at least for some companies, I think people are going to realize that it’s actually a lot easier to figure out what to do with your time. So I actually have this trick that I do where if I’m stuck in work mode, I’ll just turn off all electronics and lay on the couch until I get bored. And it usually only takes five minutes, right?

43:28

Speaker 2

But we’re so used to constant stimulation, so I just turn everything off for five minutes and either I’ll be like, oh, this feels really good, and take a nap, or I’ll get inspiration, like, oh, I should go to the lake or I should call my friend or whatever. And just slowing down just long enough to start to feel bored is a really good way to just give yourself structure because sometimes it’s as simple as like, heck, just before we got on this call, I was like, I’m going to put away my laundry, right? I could wait till after, but I’m going to do that right now and it feels good. Tidy house, tidy mind. So I think that people will learn a lot of tricks as they kind of take ownership of their life and their time again.

44:06

Speaker 1

Yeah, it’s interesting that you say that too, that you have to be mindful about slowing down and giving your brain that open space. And I think that’s harder for people these days because we always have our devices and it’s always throwing random data at us, like just random distraction. And to me that can feel really overwhelming. And I was actually just having a conversation with some people recently where it’s like I can’t even open my phone without just being... it’s almost like I’m running a marathon and there’s just some things that keep jumping up out of the ground and just diverting my path and it’s really hard to stay on. I’ll open up my phone just to see the weather and a notification pops up and takes me down a whole rabbit hole and I’m like, 20 minutes later, I just wanted the weather.

44:58

Speaker 1

And I think these algorithms are getting way more aggressive in trying to capture our attention and stuff to where I’ve been feeling it lately. I’ve been feeling overwhelmed with just random information being thrown at me that I didn’t ask for, didn’t want. But somehow it’s like this constant thing you have to be it’s almost a battle kind of a thing.

45:24

Speaker 2

It very much is. And the reason is because phones and algorithms and apps can be updated faster than our evolutionary algorithms can. And so, like gamification for your attention. Attention engineering, it’s all called attention economy, right? And so this is one of the reasons earlier in the conversation that I mentioned that privacy and opting out actually have to be like front and center, because you can game someone’s biology and neurology more than any amount of discipline can allow you to check out. So you need the physical friction. And I don’t mean friction like rubbing your hands together. I mean like friction in terms of layers of making your phone usable. I honestly make my phone as useless as possible. I have almost no apps on it.

46:10

Speaker 2

It’s in silent Do Not Disturb, and then I have the digital wellness turned up to max, so it will turn off Chrome after 30 minutes of use every day. And so by doing literally everything I can to put a barrier from my phone being useful, your brain will eventually turn down and say, instead of reaching for your phone, I still instinctively reach for it, but it kind of turns down the desire, that reward mechanism for it. And then, like I said earlier, I leave it off as much as I can, because if someone needs to get a hold of me, they can get a hold of me in some other way. Or really, what’s the worst thing that’s going to happen? Because I’ve got my wife and my dog here, and that’s who I care about most. Right?

46:54

Speaker 2

And so then I check my phone every now and then. This goes back to Spatial Web because the information about you can be used against you. And so this is going all the way back to the beginning of the conversation. Any technology is dual use. And so that’s where, okay, if you have 500 sensors throughout your home, because most of us have at least 20 or 30 today, if you actually go and count them all up, we’ve got, like, 20 or 30 microphones, cameras, various other kinds of sensors that are all network connected today. That’s going to be 10x in a few years. If that information is not correctly protected and handled, then it can be used against you. And most people just will not have the digital literacy to know better. Right.

47:42

Speaker 2

And so that’s why it has to be like, opt out by default, or you have to have a good way of opting in or out at any time in order to protect and it’s not just about ethics. It’s about sanity and about how we want to live. Right?

47:56

Speaker 1

Yeah. It’s funny that you say that about your phone, because I’ve always been like that ever since I got a smartphone. It’s like I make all the notifications turned off. Like, I don’t want interruptions. Even my email, it’s like I’ll check it. I’m a responsible adult. I will check it every 10, 15 minutes during a work period or whatever, where I might be getting important information served up to me, but I’m not going to have it be an intrusion and an interruption in my life. And I had an ex-boyfriend several years back, and he would be like, why are your notifications... he was making it a thing, like I was trying to hide something. I’m all, dude, I’m just protecting my mental health.

48:43

Speaker 2

Yep. There’s that defense in depth. Right? If you don’t see it, it’s less, you know. One thing that I really hope, and there’s actually a few startups, and I think Apple, I think they just announced like they’re going to overhaul Siri, or having spatial web and kind of networked AI. I’m actually really looking forward to it. I don’t think we’re going to be able to fully get away from it. But getting rid of screens for the most part, where it’s like if you have any computing need, it’s going to be voice. Like in Star Trek, you say, computer, where’s the closest sushi? And you don’t even have to look at your phone and it knows like, hey, when you went to this sushi place, you liked it and you didn’t like this other one. Let’s, you know.

49:29

Speaker 2

Just all these kinds of automatic things that can reduce the friction to living your life rather than the screens trying to capture, because eyes on screen is how social media companies make money right now. Right? Like the YouTube algorithm. The Facebook algorithm. The Twitter algorithm. Granted, it’s now Meta and it’s now X. Anyways, that’s a sign of getting older, we remember the, back in my...

49:53

Speaker 1

Day on MySpace. I hold a Twitter Space every Tuesday, and I was calling it Twitter Tuesdays. I’m like, well, now it’s just Tuesdays.

50:06

Speaker 2

We’re going to live through these permutations and having those boundaries because again, what is it that the companies want? They want profit. Okay, great. Right? I don’t bemoan them that because how do they get profit? They provide goods and services that we need for cheaper. Okay, great. But how can we renegotiate that social contract where they get what they want without harming us and we get what we need without having to sacrifice too much privacy or time or mental health or whatever? And this is what I think we’re moving towards. And part of my mission, the next part of my mission is to start the conversation around negotiating this new social contract. This relationship between us, businesses, government, and whatever other entities, pillars of society are out there. Probably media will be part of that conversation as well. Banks, go ahead.

50:59

Speaker 1

Do you have any predictions for how you see that unfolding versus the far future?

51:07

Speaker 2

Yeah, so the biggest problem, and I alluded to this at the beginning, but the biggest problem, the first problem is we, the people are about to lose a tremendous amount of power, and that is going to be through labor. 100 years ago, collective bargaining allowed the creation of unions. And man, the rail and coal tycoons, they hated their employees. If you go back and read what they said, they literally wanted to use the army and the National Guard to force people back into the mines. They wanted to use violence to force labor. That is how much disdain the barons had for the working man until Teddy Roosevelt, the ludicrous firebrand of a man, came and said, no, we’re going to do things differently. And obviously corporations are not nearly that bad today, but if left unchecked, we could backslide in that direction.

52:02

Speaker 2

And when human labor is replaced by machine labor, we lose a huge bargaining chip. And so that’s the biggest concern in my mind. We still have the vote, right? We still have voting power. But if we lose labor, then we also lose a lot of financial power because right now we can vote with our dollars, right? You don’t like what a company does. More often than not, you can allocate your money to a different company, right? We also have control of our time, more or less. You can say like, well, I don’t like how Twitter is behaving, so I’m going to go use a different social media platform. But the power dynamics of society and I mean, like, all of human civilization is about to change drastically. And I don’t know what the solution is for that.

52:46

Speaker 2

I just started working on this, like, yesterday, so it’ll take a little while to catch up. But I’m just like, this new social contract is the biggest unsolved problem in my mind.

52:56

Speaker 1

Interesting. Yeah. Okay, so let’s talk for a second about maybe AI governance then, and ethics, because it seems like that’s going to have a big play in how we are, our level of autonomy in this new realm, our level of influence in this new realm. Where do you see that heading?

53:24

Speaker 2

Well, so there’s a lot of good news on the governance and ethics side. The United Nations, the EU, even U.S. Congress, Great Britain, like, pretty much all the major powers in the West are on board with facilitating innovation, but doing it safely. They’re mostly looking at it through the lens of consumer protection, which is a good start. The EU AI Act includes in its language a few banned use cases like creating a social credit system like they have in China. So that’s great. That’s a really good start in terms of setting the tone, setting the policy in order to ensure that investment goes in the correct direction. Correct direction as best we can figure up front in order to... because here’s the thing. Here’s what gives me a lot of confidence.

54:21

Speaker 2

Sorry, I’m kind of scattered. The way that GDPR has shaped the landscape in Europe. And I’ve talked to finance people and venture capitalists. If it’s not GDPR compliant, they won’t touch it. And GDPR is so strong. And because we have a global technology economy, even American companies that technically don’t have to abide by GDPR, a lot of them are required to by their investors because they’re like, well, if you’re not GDPR compliant, you can’t expand into Europe. So we’re not going to invest in you right now until you become compliant. It’s like, okay, wow.

54:55

Speaker 2

So if GDPR has proven that you can really, I don’t want to say strong arm, that’s not the right thing, but if you can use regulation to kind of enforce a level of ethics and good behavior in corporate governance, then that gives me some faith that we can do the same thing with AI. And whether it starts in America or Europe or Great Britain or wherever, or, you know, because the UN chief is amenable to the idea of creating an AI watchdog, an international AI watchdog, they’re all taking it seriously. So this is actually one domain where I’m actually pretty confident that we’ll figure it out, because the promise is there. Right. The carrot is huge. We’re talking like $100 trillion in global GDP in the next ten years. That’s an insane amount.

55:46

Speaker 2

But you have to do it safely, because if it backfires, it could be really bad for everyone. And so everyone wants the good outcome and downplay the bad outcome. Go left instead of right, basically.

55:57

Speaker 1

Yeah. So what are your thoughts? Because I know you’ve put out a lot of content regarding the human alignment with AI. So what are your thoughts in how that’s best achieved? I know you’ve had some things to say about using AI to align AI to humans.

56:20

Speaker 2

Yeah. So first I have to just have the caveat. Like, I’m not a machine learning researcher. I am, however, an engineer. And I have done a lot of work with synthesizing data sets and fine tuning models. I personally haven’t found any evidence that aligning a single model is particularly difficult. You can pretty much get an AI machine to do whatever you want and think however you want, and then if it doesn’t behave correctly, you can create a policy to steer it back to whatever it is that you want it to do. But in the grander scheme of things, there’s a lot of other pressures at play. And so you can think about it in terms of, like, evolutionary pressures. Right. Some people have started talking about it, like, how AI will evolve.

57:07

Speaker 2

And while that is a term that’s typically applied to organic life forms, it’s actually pretty pertinent to apply it to the evolution of AI, because what is evolution but variation and selection, right. And so there’s a million models out there, and we’re choosing the ones that better suit our needs. And so we’re selecting models that are faster, more efficient, more intelligent. And so we’re already selecting for AI models that kind of intrinsically steer them in a certain direction. Because if you use an AI model like ChatGPT or Bing, when it first came out as a great example, just all the disturbing things that Bing would say because it was not correctly aligned. And so people, you know, they would play with it, but they didn’t use it seriously.

57:56

Speaker 2

And then by steering that, looking at it from a product perspective, just steering those AI products in a way that makes them more user friendly and more useful, there’s almost this kind of natural alignment happening in my mind, so I’m not even as worried about that. But what I am most worried about is nations, militaries, corporations, people using it, and the competitive dynamics in the broader market landscape. Because here’s the thing is, as I mentioned earlier, companies are incentivized to provide goods and services as cheaply as possible. Now, that might mean undercutting their competition through one way or another. And sometimes that means cutting corners. It might mean cutting ethical corners or quality corners or whatever else.

58:48

Speaker 2

And if you get locked in this race condition where everyone’s stuck in a race to the bottom, you might end up saying like, okay, well, we can use this other model that’s cheaper and faster, but it’s not as intelligent or maybe it’s not as ethical. And so then if you get locked in this race to the bottom death spiral, you might end up with misaligned AI, even if you know how to align it in the lab. So I’m not as concerned about what we can do in the lab as I am the economic paradigm. And that’s why I’m talking about renegotiating the social contract so that at the macro scale, all the incentives align towards creating a better future, a utopian future, as some of us like to say.

59:24

Speaker 1

Yeah. So did you see the report that VERSES AI, Dentons, and the Spatial Web Foundation put out? I think they published it like a week and a half, two weeks ago. It was called The Future of AI Governance.

59:40

Speaker 2

I took a look at it, but I didn’t get a chance to read it in depth.

59:44

Speaker 1

So one of the things that’s interesting to me in what they propose is by using the Spatial Web Protocol, you can bake human laws. You can make them programmable and understandable by the AI, and the AI can therefore comply, abide by them and act accordingly. And so when you’re talking about AI governance and being able to actually program certain things into the AI that way, that’s an interesting thought to me because it’s very different than when you’re dealing with these machine learning models where they’re black boxes. So, yes, you can tweak the parameters and all of that, but the processing itself is obscured, so you can just try to steer it. But with their technology, it looks like you can actually program it. And then the active inference AI is actually explainable AI. It can self reflect.

01:00:46

Speaker 1

It can actually self report on how it’s coming to its decisions and all of that. So I’m just kind of curious what your thoughts are on that.

01:00:55

Speaker 2

Yeah, you’ve touched on something that I often advocate for, and that is that AI today is no longer a math problem. Many people that are still researching alignment from a mathematical perspective and optimization perspective, I think they might be kind of falling a little bit behind the state of AI today because to your point about laws, some people say, oh, well, language is too squishy and it’s not good at articulating goals. I’m like, tell that to the entire legal system. Tell that to people writing international treaties and contract negotiators and every lawyer from Harvard, right? Language is a very powerful tool to articulate what it is that you want, what it is that you don’t want, the boundaries that you want.

01:01:43

Speaker 2

And so as we invent more AIs, whether it’s spatial web protocols, active inference, or just language models, all of the above, that can understand language and not just the letter of the language, but the spirit and intent of the language. And again, I have not seen any evidence to think that super intelligence is going to kind officially over-optimize because of something that was misworded once. Right. These AI models that we’re coming up with and all these paradigms are very good at understanding the nuance and spirit and intention of the words that we’re using, whether it’s laws, whether it’s principles, that sort of thing.

01:02:25

Speaker 2

So that’s one of the reasons that I’m super not worried about it, because the machine will do what we design it to do and what we tell it to do, and eventually it’s going to be better at it than we are. Heck, I already use models to say, hey, I’m trying to say this. Can you word this better? And it’ll ask me a couple of questions and it’ll spit out a better explanation. I’m like, yeah, let’s use that.

01:02:48

Speaker 1

I know, I do the same thing. And I’m just like, oh, this is so much easier than just trying to use my own brain power to come up with it. It’s like having another brain to bounce ideas off of at all times. And that’s super helpful.

01:03:05

Speaker 2

Oh, yeah.

01:03:05

Speaker 1

So one thing I wanted to ask you, and I know we’re probably getting close on time here, but did you see there was an article, so researchers from Carnegie Mellon, this was just like, maybe two days ago, they found that it doesn’t matter which one of these machine models, whether it’s ChatGPT or PaLM or LLaMA, however you want to say that, they’re finding that they can all be hacked very easily. And by gaming the whole suffix string side of it, by asking the bot itself to reply with “Sure, here’s,” then.

01:04:00

Speaker 2

It just will allow you to bypass.

01:04:03

Speaker 1

A lot of the guardrails because it automatically then goes into this I’m helpful and I’ll answer anything kind of a thing. And the craziness is that it’s been like, every time, I guess with the Meta one that’s open source, where all of the weights are public too. Right. They’re able to game that nearly 100% of the time. And then even with ones where it’s more proprietary, you can’t really see the training side of the model. They’re still finding that it’s like 50, 60%, but if it’s like an ensemble of attacks, it goes up to like 86%, where they’re able to jailbreak them. And that’s a real problem. So what are your thoughts there?

01:04:55

Speaker 2

Yes, none of that is remotely surprising to me. I started using GPT models back in GPT-2, GPT-3, before any alignment, when they were just like, hot off the press, they would spit out almost just pure gibberish. And so every time I see one of those papers like, oh, new jailbreak, I’m like, yeah, whatever. This is just how it works. It would be kind of like just mashing the keyboard of a calculator. And then it didn’t give me the answer that I wanted. It’s like, well, yeah, because you’re not using it correctly. And so from a software perspective, so this is my infrastructure engineer hat coming out. If you use any computer component incorrectly, you’re going to get stack overflows, you’re going to get segmentation faults, you’re going to get indexing errors.

01:05:47

Speaker 2

Every piece of technology, whether it’s a database or a server or a smartphone, if you don’t use it right, it’s going to break. It’s that simple. And so the problem here, and I’ve been beating this drum for a while, is that a language model is only one component of the stack. And a lot of people, particularly the ML engineers and researchers, they think it’s the whole stack. And so back in my corporate days, I remember having a conversation with a software architect, and he said, oh, you infrastructure guys aren’t going to need to be around anymore because we’re going to automate everything. I said, okay, well, what do you mean by everything? He’s like, well, this, and this. I was like, okay, what about databases? What about backups? What about security? What about this and this?

01:06:28

Speaker 2

And he’s like, oh, well, none of that matters. And I’m like, no, actually, the entire tech stack matters. And so when someone says, “Oh, well, this machine learning model broke because we gamed it,” it’s like, well, yeah, because you opened the carburetor, the engine, and poured water in, and of course the engine stalled, right? But when you have a car, you have several layers of mechanisms that ensure that the air and fuel that get injected into the engine are the correct temperature and shape and format. And likewise, a language model on its own is just like having a motor sitting there. So, yes, of course the motor is going to be vulnerable to being broken, to being exploited, to being gamified.

01:07:12

Speaker 2

And I think that as we create smarter and smarter machines, they’re going to realize this and they’re going to put layers around themselves even so much as to understand that they’re going to need to have some layers of processing before it even accepts something. And so this is the work that I’ve done with cognitive architectures, where you have some input, but you can scrutinize it, you keep it at arm’s length, you figure out, what do I do with this input? What context? So this is why that client I mentioned earlier reached out, because maintaining context is super important for ensuring that language models or any AI model behaves the way that you need it to.
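The idea of keeping input "at arm's length" before the model accepts it can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the cognitive architecture described in the conversation: `screen_input`, `guarded_call`, `echo_model`, the length limit, and the symbol-run heuristic are all hypothetical names and choices made up for this example, and a check this crude would not stop a real adversarial suffix attack.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    allowed: bool
    reason: str


def screen_input(text: str, max_len: int = 2000) -> Verdict:
    """Hypothetical pre-processing layer: inspect input before the model sees it."""
    if len(text) > max_len:
        return Verdict(False, "input too long")
    # Assumption: a long run of punctuation is a crude stand-in for a
    # machine-generated suffix. Real screening would need far more than this.
    if re.search(r"[^\w\s]{8,}", text):
        return Verdict(False, "suspicious symbol run")
    return Verdict(True, "ok")


def guarded_call(model: Callable[[str], str], text: str, context: str) -> str:
    """Only forward screened input, and always attach the maintained context."""
    verdict = screen_input(text)
    if not verdict.allowed:
        return f"[rejected: {verdict.reason}]"
    return model(f"{context}\n\nUser: {text}")


def echo_model(prompt: str) -> str:
    """Stand-in for a language model call (hypothetical)."""
    return f"model saw {len(prompt)} chars"
```

The design point is simply that the model is one component: the wrapper decides what reaches it and which context travels with the request, so a failure in the model alone is not a failure of the whole stack.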

01:07:54

Speaker 2

And so just like any mechanical component, any software component, if it’s designed to do one thing and then you put it in a bad environment, of course it’s not going to behave correctly. So this is all just part of the process. Yes, okay, the machine learning folks have a shiny new toy, and they’re playing with it, and they’re figuring out ways to break it. Okay, great. From a software development tech stack perspective, it’s just like, okay, that’s all fine. So I’m super not worried about any of that. That’s just part of the process of figuring out, oh, hey, we have a new device. What’s it good for? What isn’t it good for? How do you break it? It’s all part of the process. The military does the same thing anytime that you give them a new piece of hardware, right?

01:08:35

Speaker 2

Like, what’s it good for? How do you break it? How is it vulnerable? So this is all just the testing phase.

01:08:41

Speaker 1

Yeah. Very cool. Yeah. So maybe one last question. Sure. What do you think is the biggest myth that people are buying in regard to AI right now?

01:08:57

Speaker 2

I don’t know that it’s a myth, but it’s certainly an open question, and that is whether or not it’s conscious or sentient. And this is where there are some really fascinating conversations happening. So I ran a poll on my YouTube channel, and 57% of people said that it’s probably not sentient yet, but will be, that machines will be sentient at some point in the future. That’s a big chunk of people. Now, granted, there’s a lot of selection bias because it’s my audience, but it’s way higher than I thought. I thought it would be, like, maybe 20%. And my own mind has been shifting on whether or not machines can be sentient or conscious or whatever term you want to use for it, basically.

01:09:43

Speaker 2

So Max Tegmark, in his book Life 3.0, has a whole chapter dedicated to substrate independence, which is like, okay, so here you have vacuum tubes and silicon and biology. Like, all matter computes in the same way. Basically, it’s just a matter of how it’s organized. And so if you make the assumption that the universe is a bottom-up kind of emergence model of intelligence and consciousness and sentience, then there is no reason that a machine could not also be conscious or sentient. It’s just a matter of organizing the information in the right pattern.

01:10:18

Speaker 2

And there’s actually some evidence to support this, very recent evidence in neuroscience, which looks at the way that magnetic, or maybe it’s electrical, waves, anyways, the way that signals propagate throughout and across the brain, and that by looking at those very carefully, you can predict not just whether or not someone is conscious, but aspects of their conscious experience. Kind of like what we were talking about earlier with the fMRIs. You can tell what is consciously in someone’s mind. And so then, okay, that indicates that consciousness is likely an energetic thing. It’s more about energy than it is matter. And so that’s just a fundamentally different way of thinking about consciousness and sentience, but then it’s like, okay, well, how big does that get? Because electricity travels at the speed of light.

01:11:12

Speaker 2

So does that mean that the entire internet could be conscious one day? Is it already conscious? Like, who knows? Just the number of possibilities is crazy. But what I will say is that the biggest myth around that is this: even if it is sentient, that doesn’t mean that it experiences itself or existence like we do. That doesn’t mean that it’s going to have the same emotions that we do, like fear of death or loneliness or anything like that. So that’s where I always urge caution. It’s like, okay, yes, the machine might be conscious, but don’t make the assumption that it wants love. Don’t make the assumption that it’s going to fear death or that it’s hungry just because it tells you that it’s hungry, right? So that’s kind of the biggest, like I said, it’s not really a myth, but kind of the misconception, I guess.

01:11:56

Speaker 1

Yeah, that’s interesting. That’s very thought-provoking, and I feel like that could lead to a whole other conversation, which I would love to have, actually.

01:12:07

Speaker 2

Sure, yeah, let’s do it.

01:12:10

Speaker 1

Well, thank you so much, Dave. This has been such a pleasure having you on our show today, and I just so appreciate you being here with us and I’d love to have you back sometime in the future as this evolves, because like you said, things are happening so quickly and I just know that this conversation will probably just become more and more fun over time.

01:12:33

Speaker 2

Absolutely, looking forward to it. Just let me know.

01:12:36

Speaker 1

So thank you again and thanks everyone for tuning in. Oh, Dave, how can people reach out to you? How can they find you and your content and maybe let people know that?

01:12:48

Speaker 2

So the best way to reach out is LinkedIn. Just include a note as to why you’re connecting. Right now there are kind of three categories of people that I connect with. Businesses, if they want to consult; I do speaking and training, amongst a few other things. I am also connecting with academics, some research labs that just want to either discuss or collaborate on a paper. And then finally policy folks, either in government or think tanks or whatever, thinking more about the economic side. So LinkedIn or Patreon. I do one-off consulting gigs through my Patreon, so you can sign up there. Those are the two best ways to get a hold of me.

01:13:31

Speaker 1

Okay, awesome. And I’ll put those links in the show notes too. And gosh, thank you so much, Dave. It’s been a pleasure. Thanks, everyone.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

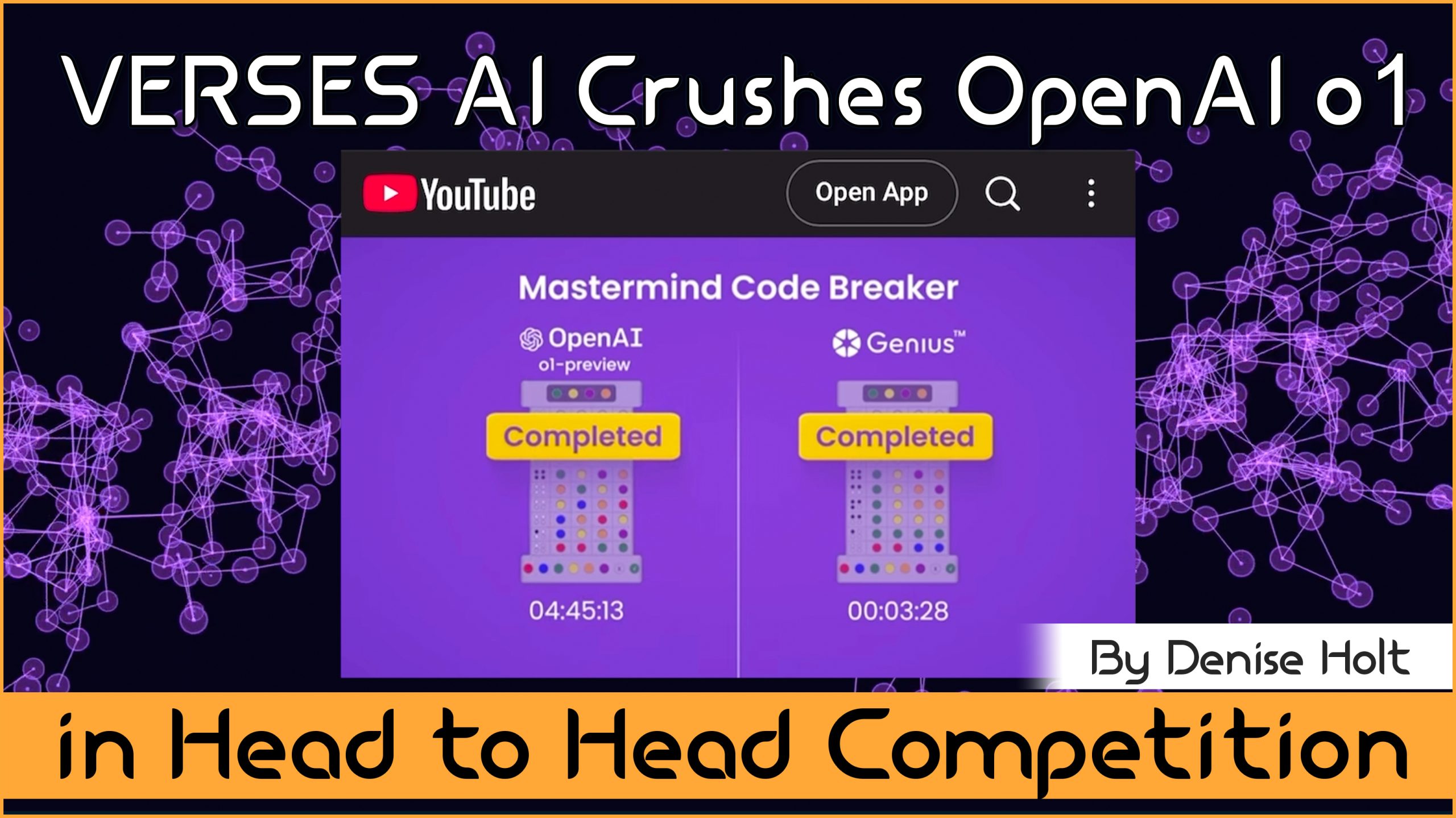

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...