The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

Join Spatial Web AI host Denise Holt and Tim Scarfe, creator and host of the Machine Learning Street Talk podcast, for a mind-bending discussion on AI and the evolution of technology.

In this episode, they explore topics like Active Inference intelligent systems, biomimetic intelligence, and the complex goals that drive artificial and biological entities.

Tim provides perspective on the need for ethical standards in AI research and development. In discussing data privacy, identity, and centralized control over information, they touch on trustworthiness, transparency, and the challenges of governing exponentially advancing technologies. The conversation also examines how social conditioning shapes human behavior and subjective experience compared with machines.

They also contemplate future implications as brain-computer interfaces become more common. Could technologies like Elon Musk’s Neuralink enhance empathy by sharing experiences, or further divide societies? What does an “extended mind” mean for privacy and agency?

Additional topics include memetics, decentralized decision-making, transhumanism, simulation theory, and the transition to a data-driven world powered by intelligence. How will hyperconnectivity impact our perception, identity, and fundamental cognitive abilities?

Tune in for all this and more…

Connect with Tim:

LinkedIn: https://www.linkedin.com/in/ecsquizor/

Machine Learning Street Talk on YouTube: https://www.youtube.com/@MachineLearningStreetTalk

Connect with Denise:

Website: https://deniseholt.us/

LinkedIn: https://www.linkedin.com/in/deniseholt1

Learn more about Active Inference AI and the future of Spatial Computing and meet other brave souls embarking on this journey into the next era of intelligent computing.

Join my Substack Community and upgrade your membership to receive the Ultimate Resource Guide for Active Inference AI 2024, and get exclusive access to our Learning Lab LIVE sessions.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind-the-scenes access to the most cutting-edge AI news and information.

00:12

Hey, everyone, welcome to the Spatial Web AI podcast. I’m your host, Denise Holt, and today we have a very special guest. Before we get to that, I’d like to talk for a second about something that I started this year with my Substack channel. I’ve put together a resource guide with tons of information, links, and access to all kinds of material to learn more about Active Inference AI. I’m also hosting monthly Learning Lab LIVE sessions. So I would love to have you join. You can go to Substack and join with any of the memberships there. There are various levels to the memberships, so check it out. Please join.

00:59

I would love to welcome you into the community, and we can all learn from each other and evolve our knowledge together as we move into this next era of computing. Today, I am very happy to have Tim Scarfe on our show. I’m sure most of you know him from Machine Learning Street Talk. He is the creator and host of that. Tim, welcome to our show. Thank you so much for being here with us today.

01:31

Denise, it’s an honor to be on your show. Thank you so much for inviting me, and I’m a big fan of the show.

01:35

Oh, thank you so much. Well, okay. So I would love to talk a little bit about your podcast, Machine Learning Street Talk. I think it’s currently the number one podcast within the AI community. Maybe tech as a whole.

01:53

Definitely not tech.

01:55

Clearly you have such a deep understanding of AI technology, so I would love to know a little bit about your background, where does your expertise come from and what made you want to start your show?

02:12

Yeah, fantastic. So about 21 years ago, I set up a software company, a startup called Net Solutions. Very early on, we got a project doing data analytics: things like support vector machines, 3D data visualization, and so on. We were doing Voronoi diagrams and lots of algorithmic stuff, and I got the bug straight away. I never went to university, and there’s a bit of a story there. In 2006, I went to Iceland to see one of our customers, and one of the directors at that company was a professor at the local university in Reykjavik. I had a conversation with him one evening, and he convinced me to go to university to study computer science, because he could see that I was intellectually curious and all of this kind of thing.

03:00

So, yeah, I did that. I found a local university, and my local one, Royal Holloway, was actually quite famous because Vladimir Vapnik and Alexey Chervonenkis were there, you know, the guy who invented support vector machines, and a guy called Vladimir Vovk, who invented conformal prediction, which is now really huge at NeurIPS, but at the time nobody had heard of it. So yeah, I did all of that. I got a first in my computer science degree and went straight on to the PhD, and I must admit that was a rude awakening. All of the lessons that set me up for computer science at undergrad failed me in the PhD. I was quite eccentric.

03:34

I did this weird dissertation where I kind of made it up, and I scored the highest in the year, because it’s a good strategy to do something which has never been done before: it’s very hard for them to mark you down. But I did the same thing in the PhD, and that was a disaster. You actually want to do the same thing as everybody else, because otherwise no one cares what you’re doing. So, yeah, I did that anyway, did a stint at Microsoft, was chief data scientist at BP, and started a bunch of startup companies, like a code review platform and, more recently, an augmented reality startup called XRAI. And I started MLST in about 2018. It came out of a project we were doing at Microsoft called the paper review calls.

04:16

Every week we would pick a paper from the machine learning literature. We would sometimes get the primary authors from Microsoft Research to come on the call and tell us about the paper. We did about 25 of those, I think, and they were published on YouTube as well.

04:33

Interesting.

04:33

That project became MLST. We started the new YouTube channel at the beginning of COVID with Yannic Kilcher, Keith Duggar, and Connor Shorten, and the rest is history.

04:44

Oh, awesome. That’s really awesome. Your show is amazing, by the way. I love it. I think I discovered it very early last year, 2023, maybe January or so. One of the first things I saw was an interview you had done with Carl Friston, and I know you’ve had him on your show a few times, and I know you’re a big fan of his. So, coming from a machine learning background, what is it about Carl’s work that really interests you, both in the work itself and in where you see it going in the future? What is it that has attracted you to Carl?

05:32

It’s a great question. At the beginning, I must admit, it was a bit of an enigma. Carl’s early papers were incomprehensible to me because he straddles so many different disciplines: neuroscience, cognitive science, and biology, talking about things like biomimetic intelligence and so on. And this is the story of Machine Learning Street Talk. We were an ML channel, and machine learning is really interesting. It’s all about modeling, building models of how things work from data. Data scientists do this all the time. They take systems and do statistical analysis and so on. But there’s a big difference.

06:10

And the big difference is, when you kind of come from the worlds of neuroscience and biology and so on, you’re thinking not just of a snapshot of the system, you’re thinking about the system as being alive. And that’s what biomimetic intelligence means. It means that you’ve got all of these independent little things that have forces acting upon them. In fact, you can just think of it in terms of thermodynamics. So you have all of these agents, and agents have a thermodynamic identity, which is represented by their autonomy, essentially a kind of clear separating thing between them and the rest of the system. And the thing in the system, one of these agents in the system, it marshals information, right, in a very sophisticated way, and it knows the history of what went before. So it’s like building up this model of the world.

06:59

But in machine learning, there’s no history. We just train a language model on a snapshot of language, and that’s it. That’s the end. There’s no active inference, there’s no active sensing, there’s no history, there’s no dynamics.

07:14

There’s no adaptation, no evolution of their knowledge.

07:18

Yeah, exactly. And it took me a long time to grok this, because I was never an expert in physics, and what we’re talking about here is basically, and it sounds controversial to say this, a physics of intelligence, or even by extension a physics of biology. You could cynically say that, even from a biologist’s point of view, what demarcates living things is simply physics. Take evolution: Darwin was talking about natural selection and all the different macro aspects of evolution, and actually it’s just physics. It’s all physics. And that is the big insight from Carl Friston.

08:01

Yeah. And to me, I kind of see it as an expansion of causality. You see people engaging with their environments, and the way they perceive things through their learning process: understanding how the world works and basically making decisions throughout their day that are going to bring them closer to their goals in life. That could be a very immediate goal or a long-term goal, but it’s this ongoing process.

08:36

Yeah, well, goal-directedness is very important. I did write an article about agency, but I want to rewrite it, because the more I learn about this, the more I realize I don’t know. I’m reading a great book at the moment by Philip Ball called How Life Works, and I read his chapter on agency this afternoon. But yeah, agents, as you say, have all of these interesting properties. One of them, as you just said, is that they’re causally connected to the environment, so they actually cause things to happen. And from a Fristonian point of view, they maintain low entropy. They resist entropic forces. That sounds like a really esoteric, weird thing to say. It just means that in the universe, information spreads out. It dissipates. But living things are very strange because they maintain low entropy.

09:22

They maintain their identity and their existence. More importantly, it’s actually about information. They hold on to information: DNA, for example, is how cells photocopy information and pass it on to their progeny. That’s a really important concept. But you also mentioned goals and teleology and purpose and meaning. This is another thing: biologists don’t like the words goals or purpose. They’ve almost been expunged from the literature. It sounds very religious, doesn’t it, that things can have goals or a purpose, because there’s always the question of where they come from. Actually, I interviewed Max Bennett.

10:05

Yes. Okay. So I saw your clip this morning of the interview with Max, and I want to read that book.

10:14

And it reminded me a lot of Jeff Hawkins. He spoke about the neocortex as being, you know, a prediction machine. Why are things prediction machines? A lot of it has to do with thermodynamic efficiency. You can’t just remember everything, because that would be really inefficient. The reason cells are so thermodynamically efficient is how they work, how they store information, and how they share information with their… So Max was talking about how the neocortex, as Jeff Hawkins argues, is quite a generic prediction machine. You have all of these sensorimotor circuits and all of these signals in your brain, and the neocortex basically just predicts those signals. But then there’s the question: okay, it’s doing planning, and it’s got goals. So where do the goals come from?

11:03

And he said it’s bootstrapped from the basal ganglia, so lower down, in a more “primitive” part of the brain, and I use “primitive” in big air quotes because it’s much more complicated than that. But he said that the neocortex actually bootstraps its teleology from another part of the brain, and then the neocortex kind of takes over.

11:20

So I could see that happening with intrinsic needs like hunger and things like that, right? But we develop goals that have more to do with desire and emotion than with basic biological needs, don’t you think?

11:42

Well, I’ve been trying to get my head around this for a long time now. I think the biggest source of confusion is, first of all, that humans are distinct from all other animals because we are a form of bio-memetic intelligence, and the memetic bit is doing the heavy lifting here. We have a culture. Before, all of our adaptivity was physical, and now it’s social. The reason we can add programs on during our lifetimes, rather than generationally, is this socially memetic intelligence. So where I was going with this is that I used to confuse the goals of the agent with the memetic goals, and that’s an important distinction. Evolution, for example, you can think of as having an overarching goal.

12:33

You can think of our genes as being a kind of source of directedness, but you can also think of our physicality as being a source of directedness, or you can think of our social memetic sphere as being a source of directedness. So what I mean by that is, when I think of a goal like eating food, I mean, babies, they don’t know to eat food necessarily. It’s kind of conditioned upon them. So many of the things that we seek to do, like, for example, my.

13:01

…interest in reading books. You get to see that in a baby when it’s born. It’s like there’s a little process there.

13:11

Exactly. I mean, if you look in the animal kingdom, they have basic communication and so on, but our language has this dynamism. It’s a software program that’s ever evolving and ever changing, and that’s unique to us. So there are so many goals that we have that are actually conditioned down memetically, and then we kind of incorporate them because this is the thing. Active inference is all about volition. It’s basically a mathematical description of an agent that has a generative model over policies. And a policy is just a sequence of plans with a goal at the end. So you can kind of think of those little micro goals as being the goals. But that’s not how I think of goals. I think of goals as being the system goals. But you can actually think of goals at many different levels of.

13:51

And I said to Carl that I don’t like goals, and I hope he didn’t take that in an insulting way, because his entire theory is about goals and where they come from, and volition. Obviously he’s a neuroscientist, so he’s also talking about volition in our brain. But I really think of goals as a system property. And there’s a fundamental tug of war in the land of Friston. I called it “strange bedfellows” in my article, which is to say, I’m not just talking about the Web3 folks: even in the cognitive space, there are neuroscientists and cognitive scientists, and roughly speaking, you can partition them into those who think everything happens in the brain versus those who think everything happens outside the brain. And when I say outside the brain, I mean physically and socially.

14:44

So there are the embodiment folks, who think that through our physical embodiment, the knowledge is almost externalized. There’s also a social embeddedness. The locus of agency is diffused, depending on your viewpoint.
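Tim describes active inference as a generative model over policies, where a policy is a sequence of steps with a goal at the end. The toy sketch below makes that idea concrete under heavily simplified assumptions: three hypothetical policies, each mapped to the outcome distribution the agent predicts at the end of the sequence, scored only by the “risk” part of expected free energy (the KL divergence between predicted and preferred outcomes), with the ambiguity term left out. Beliefs over policies are a softmax of negative expected free energy. The policy names, preferences, and numbers are all invented for illustration; this is not Friston’s full formulation or any library’s API.

```python
import numpy as np


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions with strictly positive entries."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * np.log(p / q)))


def softmax(x):
    """Numerically stable softmax."""
    z = np.exp(x - np.max(x))
    return z / z.sum()


# Hypothetical preferences (prior beliefs about outcomes) over three outcomes;
# the agent "prefers" outcome 0.
preferred = np.array([0.8, 0.15, 0.05])

# Hypothetical policies: each is a sequence of actions, mapped to the outcome
# distribution the agent's generative model predicts once the sequence is done.
policies = {
    ("stay", "stay"): np.array([0.2, 0.4, 0.4]),
    ("move", "stay"): np.array([0.5, 0.3, 0.2]),
    ("move", "forage"): np.array([0.75, 0.2, 0.05]),
}

# Simplified expected free energy: only the "risk" term, i.e. how far the
# predicted outcomes sit from the preferred ones (ambiguity term omitted).
G = np.array([kl_divergence(pred, preferred) for pred in policies.values()])

# Beliefs over policies: lower expected free energy -> higher probability.
q_pi = softmax(-G)

for policy, prob in zip(policies, q_pi):
    print(policy, round(float(prob), 3))
```

In this sketch the “goal” lives in the preference distribution rather than in any single policy, which loosely echoes Tim’s point that goals can be described at several levels: the micro-goal at the end of a policy versus the preferences the whole system carries.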

15:01

Okay, so one of my questions, then, is: what do you think causes some people to really fall in line and act within the norms of society, while there are these special people who step outside of that? They develop their own direction, their own goals, that don’t even make sense given where they come from or their past experiences. They just have this outlier something within them that reaches beyond expectation. It’s almost like they thrive on surprise rather than minimizing uncertainty. They love this space of possibility and really knocking out the walls within that concept. So where do you think that fits into all of this?

16:07

Because when we’re talking physics, superposition is all about just staying in that state of possibility, right? Not observing the outcome. So where do you think that falls in?

16:20

Yeah, well, there’s a huge social component to this discussion as well. Maybe we’ll get into that a little later. At the moment, we’re just talking about the dynamics and the machinations of agency, but there’s a social component and a governance component that we’ll talk about later. What you’re talking about there is essentially the diffusion of agency in society, and this is kind of where I was going with Web3. There are a bunch of libertarians who are particularly interested in having a high-agency society. I spoke with Beff Jezos the other day, and that was basically the argument: there’s a bias-variance trade-off in society. Governance folks think we should have more bias, and libertarians think we should have less.

17:02

And there are very good arguments for both. Having bias, mathematically, stops you from discovering knowledge and stepping stones, whereas having independent agents with the degrees of freedom and the exploration ability to find new knowledge can propel society forwards. But it also means they might do aberrant things, and they might discover or implement harmful and dangerous technology. So there’s always a balance between variance and bias.

17:37

Yeah, no, and I definitely could see that. It’s interesting, because freedom is a really interesting concept. What you’re talking about too, with this kind of decentralized concept of agency, I tend to think that’s kind of how humans work. We’re very much individuals. We all have our own knowledge and understanding based on our own experiences. And that’s kind of the beauty of how we all kind of evolve this global knowledge together. Without that, without the individual perspectives and the individual experiences, I don’t think you really can get that. So you don’t want carbon copies, you don’t want people who are all just kind of staying in line and thinking along the same lines.

18:35

You want to kind of nurture an environment where people are able to be free on their own, in their own brain space, their own way, to kind of live life and experience life and then share those experiences, don’t you think?

18:54

Well, I wish I knew the answer to this. It’s a really difficult one. And yeah, this is exactly what we were debating with Connor Leahy and Beff Jezos the other day. One potential criticism of Friston is that you can make the appeal to thermodynamics, and you could call it a naturalistic fallacy: if you just let the chips fall where they may, and let everyone follow their own gradient of interest and see what happens, you could argue, well, that’s the natural thing, so it must be good. But what if it’s not good? Then from the governance perspective, you could say, well, is Facebook good? Should we ban Facebook? Should we have banned Facebook? And you get into all of these very difficult questions.

19:39

So I don’t really know the answer to that. But I do think that there is something interesting about having individual agents that can think for themselves and do their own things. But I have to stress, though, that kind of doesn’t work in the way that we might think, which is to say an agent has to share information with its environment in order to affect the environment, because agency is about bending the environment to your preferences. And obviously we’ll leave the philosophy of where the preferences come from just for the moment. But you have to kind of become ensconced in your environment. You have to become correlated with your environment in order to make changes in the environment. And the environment kind of affects you in the same way you affect the environment.

20:19

So there’s always the question of, to what extent can you become a distinct actor from the environment that you’re embedded in? Because you’re just a part of that system.

20:27

Right. And I see that. And you can also see then why people get stuck in patterns of behavior where they’re really forcing their opinion on other people rather than just kind of staying in a state of being willing and open to learn. It’s almost like people have this innate desire, or I don’t even know if you could call it a desire, but it’s like, if you don’t believe what I believe, then something must be wrong with what I believe. So I’m going to make you believe what I believe. That way, there’s no argument. It’s weird that people approach things that way instead of being open and receptive. I don’t know.

21:13

Yeah, I think there are many reasons for that. It’s a muddy way to think about it, but I think of agency very much like gravity: you have a lot of agency if you bend the spacetime around you and people orbit around you. So it’s a very natural thing, because agency is very closely related to power. I spoke with Kenneth Stanley all about this, and I’ve got a show coming out. When you’re seeking agency, you’re kind of seeking power. And Kenneth said, oh no, a lot of people just want agency not to seek power, but because they want to do what they want to do. They want to read books and stuff like that. But even that is a kind of power. It’s dominion over your time, and there are different degrees of agency.

21:55

So you might have degrees of freedom. You might have high agency in one domain of your life but not in another, because at the end of the day, you’ll always be trapped in other people’s agency fields in some respect or another.

22:07

True. Yeah. I mean, they’re just for survival purposes. Okay, so then to get a little bit back onto talking specifically about AI in this sense, what do you think is required to build trustworthy AI systems? Bringing agency into that conversation, what do you think is the framework for building that?

22:36

Again, it’s a really difficult question, because we are dealing with dynamical, very complicated systems, and you could almost take the AI bit out of that question. So, I mean, maybe I could put this back to you. What makes the UK a trustworthy actor on the world stage?

23:00

Well, I don’t think humans are completely trustworthy anyway, because everybody’s a loose cannon in some way. I think one of the factors that really affects that is personal desire, and that can get really polluted by a lot of things: fear, negativity, just the desire for power, like what you’re talking about. That’s a huge one. That really makes things, or people, potentially untrustworthy, along with insecurities, all kinds of things. Right. But those are human. Those are very human traits, because we deal with emotions, we deal with self, as far as our ego and things like that. So when you’re talking about autonomous systems, will they develop those types of self traits, or are they going to stay apart from it? Are they just going to learn to mimic it when they’re dealing with humans? I don’t know.

24:18

Will they develop a level of consciousness over time? I don’t know. But I do think that there are things that separate humans from these autonomous systems. And it’s really interesting because we’re going to be the ones training them and cooperating with them. So where does that.

24:38

Yeah, there are a couple of things to touch on there. I will get to the emotions thing in a minute, because I think that’s a little bit philosophical. But broadly speaking, I would classify a trustworthy system as a system which is predictable and aligned. For example, I would delegate something to my staff if I trusted them, which means I knew they were going to say the right thing: their goals are aligned with my goals, and they’re competent and coherent enough to execute on those goals. But then you get into this weird symbiosis between different actors in the system. They might have goals that are somewhat aligned, or you might find that the system tends to go in a direction that you want it to go in.

25:23

And there are all of these checks and balances, and the system reaches a kind of homeostasis over time. That’s very much how the world works. The reason I’m talking in these very high-level, esoteric terms is that this isn’t just about AI. Even software is the same. When you have autonomous software, it’s not just the software, it’s how it’s used. And this is where we get into memetics again, right? GPT-4 isn’t just running on Microsoft servers; GPT-4 is alive, right? Because it’s everywhere. It’s on the Internet. People are talking about it on Twitter. I have my own model of what GPT-4 is. GPT-4 is this memetic, distributed intelligence, and that’s how intelligences work. So you don’t have to talk about AI to talk about the need for regulation.

26:09

And we spoke about bias versus variance so that we can conduct the system in a way that we want it to evolve. So the reason why this is challenging is we’re talking about increasing the agency density in the system. So we want to have actual agents that are essentially diverging and following their own goals and interests and doing constant sense making and active inference. And then you’ve got this problem of, well, how do I create policies to control this? And how do I even describe in those policies what’s good and bad? How do I kind of direct the system? It’s really difficult.

26:45

Yeah. And honestly, that’s one of the things I see with the framework VERSES is building: it is distributed, it is decentralized. It’s decentralizing information. It’s making it so that people all over the world will be able to build these intelligent agents the same way people can build websites. And so it’s going to end up preserving all of the sociocultural differences, different belief systems, different styles of government, which makes sense, because what’s right for one group of people isn’t necessarily right for another. We really deal with this problem as humans: there is no agreed-upon right way to think, right way to live, right way to do anything.

27:42

So it’s almost like, to evolve these autonomous systems, they have to be able to work with and respect all of those differences and still grow knowledge, still grow cooperation. So when I look at what they’re doing, I’m like, okay, that makes sense, as a system that’s going to allow these autonomous agents to grow knowledge, versus these monolithic foundation-model machines that are just being fed historical data. You can’t have knowledge grow from just one brain. So I don’t know. To me, that’s one of the advantages I see in the direction they’re going, with Carl and everything: it actually has the ability to at least go along with humans in really solving those differences.

28:39

Yeah, I mean, it comes back to what we were saying before about wanting to create biologically plausible intelligence. What does that mean? People point to evolution and say, well, that’s natural intelligence, even though it’s not directed and doesn’t apparently have any purposes or goals. But what’s really interesting about it is that it’s producing information. It’s a divergent search process. And in order to have a divergent search process, you need lots of independent agents doing their own thing. So even though what we’re modeling here, with all of these agents all over the place, is not as high-resolution as the real world, we’re making a statement, I think, that it has a sufficient level of divergence in its search process that it will find interesting solutions to problems.

29:27

But the problem with divergent systems is the lack of coherence, because at the moment, let’s say, Facebook and Google get to decide all of the policies. Google, for example, just decided to label when you’re using generative AI, and Facebook does the same. They’ve collectively decided that’s the law the whole world is going to adhere to. But the world is very fractionated and very divergent. Our cultures are quite different in different parts of the world. And I don’t think it’s necessarily a good thing for us to have this extreme globalization where we have a monoculture, because from my perspective, the world just stopped evolving about 20 years ago. I remember when I was a kid, there was all sorts of rich, diverse, interesting music and culture and new things coming out, and now there isn’t, right?

30:15

The world kind of died with the Internet. So maintaining that pluralism and diversity is, I think, very important, with the big caveat that we need some kind of consensus mechanism or agreement to decide what is good behavior and what is bad behavior.

30:31

Yeah. It’s funny, though, because don’t you feel like, as these autonomous systems become more pervasive in society, we’re going to hold on to the things that make us human? The real originality. Like what you’re talking about: a lot of that creativity got watered down with the Internet, because all of our fascination went to this ease of access to information. I think it just made it so that we didn’t stretch outside of ourselves as much. In that clip with Max that you put out today, you talk about how you can tell when something’s written by ChatGPT. And it’s funny, because I can, too. There are certain words. “Demystifying” is one of them.

31:21

You see that and you know it’s ChatGPT. Or you see people writing a blog post, and the very last paragraph starts with “In conclusion”: that was ChatGPT, because nobody writes like that. But ChatGPT does.

31:36

I know, but the interesting point is that we are all like ChatGPTs, and that’s okay, right? As I joked on the video, Carl Friston is ChatGPT, right? He says these high-entropy words that are so characteristic of Carl Friston, like licensing and itinerancy and the renormalization group and all this stuff. And obviously, I’m tuned in. I’ve got the Vulcan mind meld with Carl now, so I’m completely tuned into it. But that’s okay, right? The whole point of having a diverse, pluralistic society is that you have independent subcultures that are cliquey, but they’re exploring an area, and that’s where the really interesting thing comes in, because if they discover something good, it gets shared with the rest of the system, right?

32:22

So all of the good ideas kind of rise to the top, but it’s really important that you have eventual coherence, not constant coherence, because if you have consensus at every single step of the search process, it will blind you from discovering anything interesting.

32:37

Yeah, no, I agree with that. It’s going to be really interesting to me to see what progresses with that, because I feel like the world is becoming more predictable through our interaction with these systems, and I think, just as humans, we have something in us that is based on originality, or prizes originality. So I almost feel like it might be a catalyst for types of expression that we can’t even imagine yet, and our ability to expand into these different spaces may be enhanced by these technologies. But I definitely think originality is going to become more prized than ever as we move through this time.

33:46

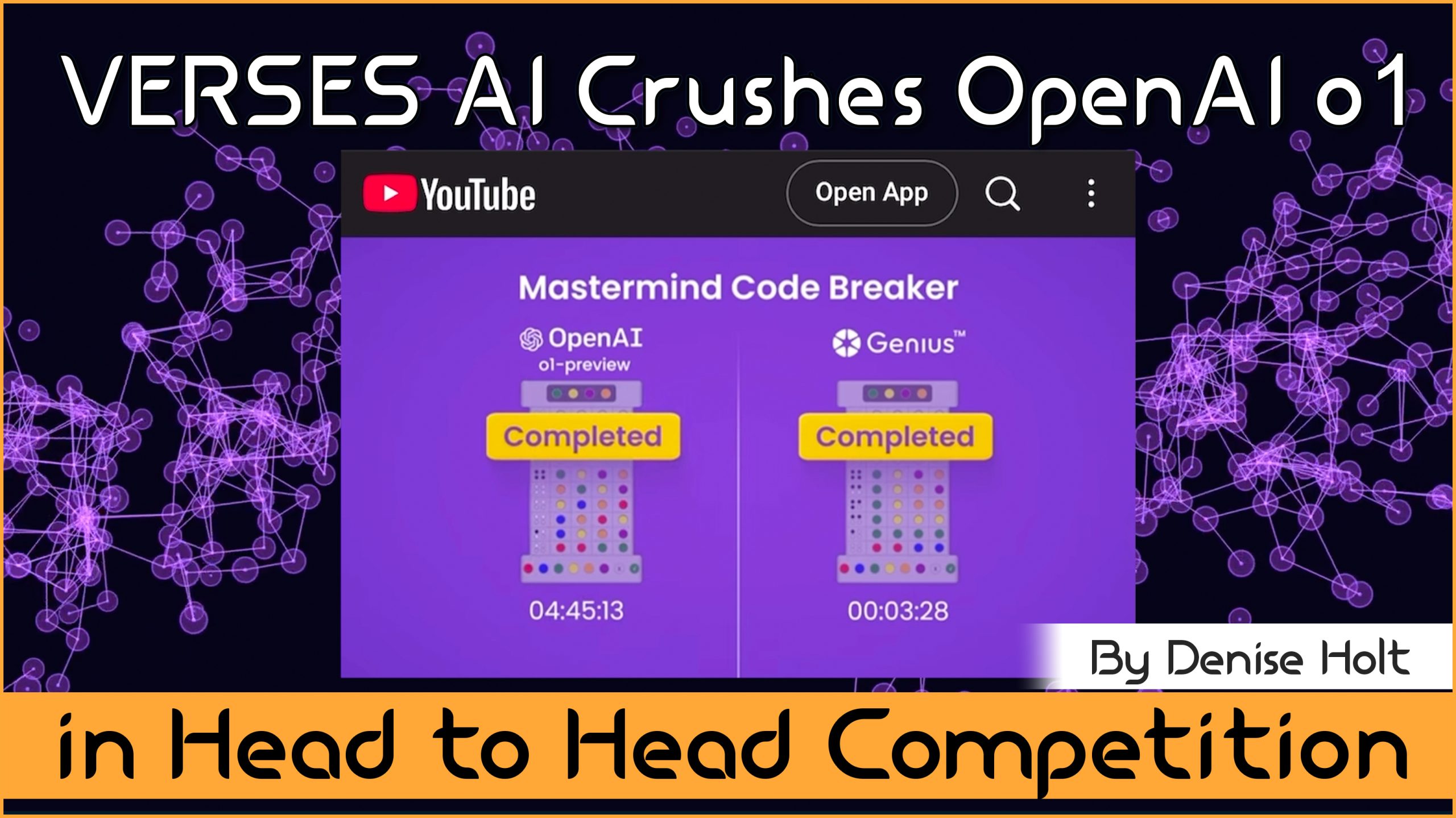

Yeah, I completely agree with that. And that’s the paradox of things like TikTok and Facebook: the reason they are so successful is that they are parasitic on humans. There is a diverse set of humans creating content on these platforms, but it still doesn’t have the degree of creativity it could have if they were completely separated off. So you get these viral videos that explode, and the information is being shared too widely, too quickly. That’s the problem. What we’re talking about is a new type of creativity where you have pockets of folks genuinely working on something new. And maybe now is a good time to talk about this. The reason AI systems are not creative is that they are designed to minimize entropy, and they are monolithic.

34:37

So the prediction objective in a large language model is essentially to predict a probability distribution over the next word in a sequence of words. And it’s designed to minimize entropy, because the cross-entropy loss is like a divergence metric. So it’s almost like creativity is deliberately expunged from the system, and the only source of entropy or creativity is the folks who use the system. But if you think about it, there’s a tug of war, because, yeah, I can still push the thing to the entropic boundaries and make it do something that appears creative, but it’s only because I’m directly driving it and pushing it, and it’s pushing back on me. It’s trying to take all of the creativity out of everything I do, and it can only combine things that it already knows about.

35:20

So it’s almost like AI today is designed to eliminate novelty and creativity, and novelty and creativity are really important. We need systems that foster creativity, and you can only do that if you have actual agential systems that aren’t constantly calling home to the same place every two seconds.
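A note on the objective described here: what large language model training typically minimizes is the cross-entropy between the next-word distribution in the training text and the model’s predicted distribution, and cross-entropy splits into the data’s own entropy plus a Kullback–Leibler (KL) divergence. The minimal Python sketch below illustrates that identity only; the four-word vocabulary and every probability in it are made up for illustration and are not taken from any real model.

```python
import math

def entropy(p):
    """Shannon entropy H(p) in nats; zero-probability outcomes contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q): average surprise when outcomes drawn from p are scored by model q."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """KL(p || q): zero only when q matches p wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical four-word vocabulary; these numbers are invented for illustration.
p_data = [0.5, 0.25, 0.25, 0.0]  # next-word distribution in the "training text"
q_model = [0.4, 0.3, 0.2, 0.1]   # a model's predicted next-word distribution

h = entropy(p_data)
ce = cross_entropy(p_data, q_model)
kl = kl_divergence(p_data, q_model)

print(f"H(p)     = {h:.4f} nats")
print(f"H(p, q)  = {ce:.4f} nats")
print(f"KL(p||q) = {kl:.4f} nats")

# Cross-entropy = data entropy (fixed by the data) + divergence (the part training can reduce).
assert math.isclose(ce, h + kl)
# The loss bottoms out at H(p) exactly when the model reproduces the data distribution.
assert math.isclose(cross_entropy(p_data, p_data), h)
```

Because the data’s entropy is fixed, lowering the loss can only shrink the divergence, pulling the model’s predictions toward what is already typical in its training text. That is one way to read the point about novelty being trained out, though the sketch itself does not measure creativity.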

35:40

Yeah, I agree with that. Creativity is so important for continued innovation and continued evolution; the evolution of the human species really depends on it. It’s going to be fascinating to see how this all plays out. So, back to what you were talking about earlier. Did you see, and I wrote an article on it a few weeks ago, the letter that was drafted and signed by about 25 researchers and scientists, and I think a couple of government officials? It was called “Natural AI Based on the Science of Computational Physics and Neuroscience: Policy and Societal Significance.”

36:36

In it, one of the things they were pointing out, and calling attention to as potentially a problem… obviously they were talking about active inference and natural AI as a very viable way forward, and that we really need to give it more of a voice and more attention in the global conversation about governance and the evolution of these systems. One of the things they said in the letter was that the approach to machine learning, and the engineering advances over time, were made with no scientific principles or independent performance standards referenced or applied in directing the research and development. So how do you think that has affected the trajectory of AI as we’ve seen it so far? And why do they feel it’s a problem?

37:35

Everybody involved in the roundtable discussion after that sees it as a big problem. Is it a problem? What are your thoughts on that, coming from the machine learning side?

37:48

Yeah, I think it’s a tough one, to be honest. It’s certainly true that deep learning in particular doesn’t have much theory behind it. It’s all empirical, but a lot of science works that way, and you could say it’s an engineering discipline rather than a science. I don’t see it as being particularly different on the active inference side either. I think the difference is that with active inference, we have an extremely principled view of the world: we think of the world in terms of a superposition of agents, and we’re using Bayesian reasoning, which is a very principled form of reasoning. The thing is, though, that just tells you how to build a system at the microscopic scale. We’re still talking about the emergence of complex systems, and we don’t really have much theory about complex systems.

38:43

I mean, we were speaking about this with Melanie Mitchell recently. She’s at the Santa Fe Institute, and she studies complex systems. When you have a really complex system, the models you come up with are very anthropomorphic, which means they’re guided by us humans and how we view the world. So you might look at a city and ask, well, how many people are using antidepressants? Or what’s the average uptake of some particular medication? We just slice and dice this complex system, and this is the world we’re in with active inference, because we’re talking about how we build a better society. And you can point to certain things and say, oh, that’s really bad. Nuclear weapons: we need to control nuclear weapons. There are certain things that are just really bad.

39:28

Like, I can’t think of any examples off the top of my head, but even now there are so many things. Obviously revenge porn or deepfakes: that’s really bad. But there are lots of borderline things in the middle, like what’s misinformation and what’s disinformation? And we need to make all of these decisions. I guess the point I’m making is that there are no principled ways of thinking about this stuff. It’s all philosophy at the end of the day.

40:01

Yeah. So how do you weigh it, then? Or even weigh the importance of what should be addressed and what shouldn’t? Because obviously certain things should be. We definitely need governance for the most critical dangers, although I know even the word “danger” is subjective. So what, do we just draw consensus? Do we allow pockets to… Even with the Internet, there’s still the deep web, the dark web. You can’t stop people who are bound and determined to live or think or breathe a certain way. I don’t know. Does this type of technology present more danger in regard to some of that? Or is there a way to reach some kind of consensus that can control some of the outcomes?

41:01

It’s so difficult. I mean, we could talk about a potential future where we had a digital way of assigning consensus, but it’s not that easy. There’s a Latin saying, vox populi, vox Dei: the voice of the people is the voice of God. But people don’t really know what’s good for them. People are uninformed, they’re emotional, they have knee-jerk reactions. And so we don’t really have democracy even in the UK. If you look at our representatives, they don’t necessarily vote for the things we want them to, although that’s probably a good thing. So there’s no form of government which is good. And this is genuinely a really difficult problem.

41:42

As I said, there are some obvious things that we know are bad, but even then, if you get into the philosophy of ethics, there’s like deontology, which is where you just have principles about what you should do, or there’s utilitarianism or consequentialism. So we have a whole bunch of effective altruists around at the moment, and they think that we can design some utility function, and if we design it carefully enough, we can kind of like minimize suffering or whatever, or we can maximize happiness, and we just have to treat society as a grand optimization problem. And it’s so much more complicated than that. Right.

42:13

We can probably come up with rational massively.

42:19

Something we were discussing the other day: I think there might be some kind of future technology here where we can think about the memetics of society. Society still has structure, and at the moment we don’t really think about this.

42:33

It has structure, and it can be optimized. But here’s what’s really interesting to me. You see places where there are natural disasters or things like that, and you realize that humans are just a turnkey away from savagery. The minute it comes down to survival, they can turn into animals again, where it’s me against you, and people will think and do things they would normally not do. I feel like our civilized society is kind of a facade. It’s only civilized until it’s not.

43:09

Yeah. There’s a famous saying that we’re only three meals away from anarchy. And that’s so true, even from a free energy principle perspective. Our society has reached a non-equilibrium… sorry, I think my mind’s going… a non-equilibrium steady state. So it’s not an equilibrium; it’s a non-equilibrium steady state, which is a form of homeostasis, and that’s quite important. Things haven’t frozen. When a dynamical system freezes, it’s dead, so it’s alive and it’s moving, and it’s in a kind of homeostasis, a kind of stable state, if you like, over time. But there’s still stuff going on, because you’ve got all of these agential actors in the system doing things, and it’s extremely delicate. Right.

43:59

So society right now, it wouldn’t take much for it to decline dramatically, with World War III and all the rest of it. So, yeah, I feel you on that one.

44:10

Yeah. And what’s really interesting to me about that is that, with all of this technology that’s coming, that we’re just on the verge of, right, there’s going to be some pain in the transition for a lot of people, when we’re talking about massive job loss because we’re being replaced by automation, and then this whole shift where humans will have to redefine meaning. And I feel like the end game has potential for so much good. I know I take this very utopian mindset about the outcome and where it could place us as a human civilization, making our lives better and things like that.

45:06

But I also know that there’s a transition here, and it’s going to be painful for a lot of people because our governments are slow. Government as a whole may shift in all of this, and there are going to be power struggles; there’s bound to be a lot going on in this shift. So when you think about how fragile society is, how fragile humans are in the face of uncertainty and fear and all of those things, I don’t know, it’s a little unsettling, too.

45:44

Yeah, I can genuinely argue it both ways. And again, I see people with a governance agenda. I read an interesting book by Émile Torres about existential risk, and a lot of the EAs, the effective altruists, are always talking about existential risk, and I think they are slightly more paranoid than I am. They think about the possibility of certain events happening, like bio risk and even AI existential risk and stuff like that. And the counterargument is, no, we’re really robust. Yeah, they nearly dropped a nuke on Carolina, and as bad as it sounds, the city would have got blown up. But the human species is incredibly robust.

46:33

So you see, you have this fundamental tug of war of, on the one hand, you have a bunch of people that become gatekeepers and they think they know what’s good and they want to kind of direct society. And the only way you can direct society is by taking away agency from other people. So it all comes back to agency. It’s about how much agency can people have?

46:55

Yeah. And I think, too, humans have become soft to change because we’re so used to being comfortable now. As much as humans complain about this or that, or feel slighted by certain things in life, with their little pains and aggravations, most people in first-world countries are still extremely comfortable. And I think the biggest thing that drives most people is that they don’t want to upset that. When you’re talking about massive change like we’re about to see, it’s going to get uncomfortable. There are going to be things that cause this kind of discomfort. And I think people are extremely irrational. We don’t think like machines; we don’t think in this logical space of just taking data and dealing with it. So then it becomes unpredictable.

48:09

It might be worth touching on that because you were talking about the irrationality and our emotions and our incoherence. From an agency point of view, it’s not entirely clear to me whether incoherence is just the same as incompetence. So if you’re a competent agent, then in pursuit of your goals, you will make quite predictable and well informed actions. But, yeah, there’s this question of, would machines develop emotions? And then we get into this whole subjective kind of experience here, because you were just saying that different agents understand the world differently. There’s the perennial discussion about whether machines understand. And what is understanding? Right. Well, understanding is simply having models that predict the world, having world models. And everyone has different world models.

49:02

And when we say, oh, machines don’t understand, have you noticed they always give these canonical examples from mathematics or something, where there’s a binary understanding? One plus one is two. It’s just two. But we’re talking about our culture, or very complex things in the system that we’re embedded in, and no one understands these things. No one understands what intelligence is. No one understands how economics works. It’s a complex system. So we all have models, and my models aren’t your models. My models might work today and your models might work tomorrow. And understanding isn’t just the collection of models; it’s also the process of how we do model building. That’s also intelligence: how we instantiate these models and how efficiently we build them.

49:45

But, yeah, many of those models will be built on our subjective experience and what we’re feeling. And then there’s the question of whether that’s something that can exist in a machine, and whether it would still be the same thing if they were just mimicking our behavior. We have emotions, and they mimic our emotions. Does that mean they have emotions? If you’re an illusionist like Daniel Dennett, you would say yes: for all intents and purposes, they do have emotions.

50:10

So, yeah, I don’t know, though, because, just to pick up on what you were saying: these systems would be like, one and one is two, right? But for humans, for me, I see so many abstractions from that. If you think about two people coming together, either in a relationship or for work purposes, to build something, then as a human, I understand that it’s one plus one, but it has the power of multiples. So I have this abstract capability of understanding that there’s more to a concept like that. Will these machines ever be able to develop those kinds of ways of thinking?

51:02

Yeah, it’s a really difficult question. I mean, I was speaking to Mao about this: this area of phenomenology and whether machines could have phenomenal experiences. This is one of the biggest conundrums in the philosophy of mind. It’s called the hard problem of consciousness. I spoke with Philip Goff, who’s a panpsychist. One extreme is saying, well, we can’t explain how certain organizations of material give rise to this qualitatively different experience, so we might as well rearchitect the universe and say the universe is made of consciousness, and material and physics and all these things we know are derived from consciousness. So in some sense, there might be a global, universal field of consciousness to which we’re all connected. And you can even think of that bottom-up or top-down.

51:52

So you could think of consciousness as being the little building blocks of the universe, with pockets of conscious agency in different places. Or there’s the cosmopsychism view, which is that the universe as a whole is an agent and has its own kind of qualitative experience. That’s one view of it. But the other view is that consciousness is just something that emerges from material, and any qualitative feeling we have is just an illusion, something we kind of delude ourselves about. I mean, Carl Friston says we are agents, and it’s a bit more complicated than that: we’re a nested superposition of agents. And you can interpret that, as I said before, as, you know, it all happens inside my body, or it’s kind of diffused in the environment. I definitely tend towards the latter.

52:42

But according to Carl Friston, we’re just agents, shaping our environment to suit our preferences. And the generative model will implicitly incorporate many subjective states, like phenomenal experience and our emotions. It’s all part of the generative model. So it’s all an illusion.

53:00

Interesting. And just to touch on that clip you released this morning of your conversation with Max Bennett, where our minds are constantly running simulations, and reality itself is subjective. What’s interesting is that I saw a documentary years ago where they went to different aboriginal tribal areas. Even something you think is as concrete as color: they had different understandings of colors, even for basic things like the sky. Everything is subjective. And, I don’t know, probably about ten years ago: I have perfect vision in one eye, and the other eye is just a little bit off; it has a slight astigmatism. I was starting to think, okay, maybe I should go have my eyes checked. Maybe I should get glasses.

54:02

So the doctor was like, well, it’s only slightly off. You don’t need contacts. I’ll give you some glasses. You could probably use them when you’re on the computer or in dark environments, like driving at night, which has always been a problem for me, or movie theaters, things like that. I got the glasses and put them on, and the correction for the astigmatism made every rectangle into a trapezoid. And I’m just like, this can’t be right. This just cannot be right. They gave me the wrong glasses. And you realize that even with something like vision, our brains are adjusting reality for us. Our brains are telling us what a rectangle is. Our brains are conforming what we see to what we expect. I don’t know. I don’t know.

54:58

It’s interesting when you realize that even the things we take in with our senses are just our brains arranging the data into individual interpretations.

55:11

Yeah, it’s a really interesting idea that our entire experience is a hallucination. And it goes back to what we were saying about ChatGPT. It doesn’t record sentences. It’s a generative model. It just pastiches together words depending on how you condition it. And part of that is because of thermodynamic efficiency. Our brains are the same. Our brains have prediction models of our sensed world, and our brains don’t actually remember things that happened. Experiencing things is actually the same as remembering things, or even imagining things in the future. So our brain is always going around doing this. But then, on what you were saying about subjective models, the really cool thing about humans is that a lot of our models are socially conditioned.

55:59

So what distinguishes us from all other animals is that there are many models, because models are how we understand things, and many of those models are basically implanted into us from the social world. Our language is a great example of that. All of language is a memetic organism; it’s a superorganism. There’s a great book about this called The Language Game, by the way, by Morten Christiansen and Nick Chater. I’m interviewing them in a couple of weeks, but one of the things they explore in that book is that certain languages just don’t have certain concepts, like the concept of money or the concept of numbers. And people in those cultures can’t even conceive of those ideas. So the very ability to think of things is culturally conditioned.

56:45

Yeah. No, it’s interesting, because I used to travel to China to do a lot of business. In the Chinese language, Mandarin, there’s no concept of he or she. So the translators who were translating English for you would call a man “her” and a woman “he,” because they don’t really know how to deal with the words; they don’t have them. And the Chinese language is a picture language. It’s more like they’re painting pictures with their language rather than text, if that makes sense. I think that’s why it’s really hard to learn if you’re not used to it, if you’re not conditioned for it.

57:29

Exactly. But the take-home for me, and this is such a powerful concept… I know we were just talking about us having high agency, and wouldn’t that be a good thing? But the truth is that we’re nothing. We’re a collective intelligence. If we started again, it’s the classic example: if you raise someone in the wilderness, they would be divorced from humanity. All of the tools that we use to think and reason and invent and do things are social programs; they’re memetic.

58:04

Yeah. There’s a documentary, I think it was called something like Maiden Voyage. It was the story of a girl who was able to do it by the time she was 14, I think. When she was twelve, her parents were trying to allow her to sail the globe alone on a sailboat. She grew up sailing with her parents, and she just had this in her. And then, I don’t know, maybe they were living in England at the time, but somebody brought it to the government’s attention, and there was a court battle over it for, like, two years: do the parents have the right to let this happen or not? Do we step in? And ultimately, it came down to: the parents have the right to allow this.

58:55

And so she was, like, 14 when she sailed, and she videoed herself the entire time. In the beginning, the first couple of weeks, oh, she was missing her parents. After, like, three weeks, though, it was the craziest thing, because you just saw it, and she even said nothing else mattered anymore. She didn’t miss her parents anymore. Being alone was just fine. To see that kind of shift, coming out of the social conditioning into isolation, and talk about agency: I mean, she just became her own agent. Her and the water and her boat, and that was life. And, yeah, it was interesting to see that kind of shift in a human being.

59:49

Yeah. But it also speaks to the behavioral complexity of humans, because so much of our behavior is socially programmed. It means we can be reprogrammed, and that’s a good thing and a bad thing. I could go and join a cult, for example, or I could just completely… But the thing is, there are so many things about the situatedness of where we are at the moment that we are kind of bound in an orbit. It’s a really big deal for you to escape the gravitational pull of the orbit you’re in, but there is the potential for you to start again and create an entirely different way of functioning in society. But, yeah, it’s kind of what you were saying earlier, right? That there are forces that kind of…

01:00:33

That keep you pulled in, and it is possible to get escape velocity, and some do.

01:00:40

Yeah.

01:00:41

But, yeah, we’re not like animals, that just. Their existence is roughly the same no matter where they are.

01:00:48

Kill or be killed.

01:00:50

Yeah. And I don’t know whether I’m pitching this as a positive or not, that humans are software. We’re hardware and software, but it’s still quite difficult to escape the shackles of the software.

01:01:03

Yeah. And to bring it back to kind of some of the things you were talking about earlier with emotion and the hallucination of reality for us, because so many people get stuck in the past, so many people are just focused on the future. And people largely acknowledge that it’s really hard for us to focus on the now. And there is no real past. It’s just your memory. Imagination is the future. So where is consciousness? Is it in the now? Are those things part of this hallucination of reality that are really important to us to be able to establish what we understand in the now?

01:01:52

Yeah, it depends entirely on your philosophy. I think I lean towards being an illusionist. And, you know, we were saying that in biology, and also in physics and materialism, there’s a lot of cynicism, essentially: they’re rebelling against any grand teleology, any design by God. They just think that everything emerges, and there’s no hard problem of consciousness. There is no consciousness and there’s no meaning. And there’s also this thing called Hume’s guillotine. Hume said you can’t get an ought from an is. So philosophers quite often create this ontological gap, which is to say that what we should do is something quite different from what is. You can’t just say that because it is this way, it must be a good thing.

01:02:41

So humans have this tendency to see the world differently, and we’re convinced that the world is different, but it’s a similar thing with religious folks. To a certain extent, we see the world in our own image, and a lot of that is because the way we conceive of things is limited by our psychological priors and our brain structures and so on, even the concept of an agent. By the way, Elizabeth Spelke is a psychologist at Harvard, and she came up with five fundamental ways that humans see the world, you know, things like spatiality and numbers. And one of those was agentialism: we see the world in terms of agents. And when I spoke to this philosopher, he came up with a new theory called panagentialism, which is that agentialism pervades the universe; it’s everywhere.

01:03:31

So the thing is, we are so limited in how we understand the world. The world is more complex than we could ever know. And you could argue that even the free energy principle and goals and agents and all these things we’re talking about, they’re just an instrumental fiction, which means they’re just a way to help us understand the world. But the world is more complex than we’ll ever know.

01:03:51

Yeah, I definitely agree with that. One thing I did want to get your opinion on, because I know we’re getting up there on time: Elon just announced last week that they’ve implanted the first Neuralink in a human, and I really hope that person fares better than the monkeys. What are your thoughts on that? Clearly, we all know this technology is gravitating towards the end goal of a human brain-computer interface. What are your thoughts on that kind of stuff and where it’s heading?

01:04:38

Yeah, so I think transhumanism is inevitable. I have to caveat that, because whenever we speak about transhumanism, I get loads of pushback. I wasn’t aware of this early on, but the left really hates transhumanism, and they say it’s going to lead to an increasing divide between the haves and the have-nots. And there are some folks in particular who say even worse things about it than that. So I have to tread a little bit carefully. But, yeah, I think it’s an inevitability. What I would say, though, is that my frame of reference is that we already have transhumanism, in the sense of this memetic intelligence, this extended mind.

01:05:15

I think my personal assistant goes everywhere with me.

01:05:20

Yeah, exactly. I mean, I’ve got a pad here. Actually, since we’re talking about hallucinating: I was reading Philip Ball’s book, and I was remarking to myself at the time that I wasn’t really reading it. I was just prompting my brain, and I was actually kind of hallucinating while I was reading it. I was having a completely personal experience reading the book. I kept stopping and thinking, and I was just, like, in the matrix, and the book was triggering all of these thoughts all over the place. Nobody else reading the book would have had quite the same experience. So, yeah, it’s pretty insane. Where were we going? Oh, yeah, the transhumanism thing. I’m fascinated by Andy Clark’s conception of the extended mind with David Chalmers.

01:06:03

Physical things extend our mind, but more importantly, society not only extends our mind; I would make the inverse argument that it is our mind, that society is a superorganism, and it’s like a virus, and we are the hosts, and we don’t have anywhere near as much agency as we thought we did. So then there’s the question of Neuralink. It’s a very low-bandwidth device, right? If you think about it, you can’t really get that much information in and out. And the brain is incredibly adaptable, and I think you might very quickly almost forget that you’ve got the Neuralink plugged into you, and you’ll be able to do certain things. But for me, the irony will be that it won’t actually move the needle as much as we think, because we are already connected into the infosphere.

01:06:50

That’s right. So, I spoke with Luciano Floridi, who’s a philosopher of information. He was at the Oxford Internet Institute, but he’s now at Yale. He says that we are moving from the material world to the information world. He calls it the infosphere, and he’s making a philosophical argument that our fundamental ontology is changing. With the advent of all of these information and communication technologies, like Facebook and the Internet and so on, our fundamental existence has been transformed in just the last 10 or 15 years. Now you can’t get a driver’s license if you’re not on the Internet; you can’t even function in society if you’re not on the Internet.

01:07:31

I was just going to say, even the way our brains learn and operate is changing. Multitasking is becoming embedded in the way the brain functions. And it’s funny, because you look at older people in society and they struggle with multitasking. But when my daughter was 13, she and her friends would be sitting there, all on their phones, texting, carrying on a conversation with me and fully engaged in it, but at the same time fully engaged in what they were doing, and with each other. They had several things going on at one time, and they were in all of them equally. And at that point, I remember going, okay, our brains are changing with this technology. And I see that continuing as we get more immersed in this technology through immersive experiences.

01:08:26

You see the Apple Vision Pro, and now everybody's posting pictures of people just walking down the street with them on. I saw a video the other day of someone in an autonomous car that was driving itself, with their goggles on in the driver's seat, doing stuff in the air while the car drove. And I just think that as we get more into these kinds of immersive digital experiences, and then brain-computer interfaces, it's going to bring a lot more of our thought processes into this digital space, as part of this digital process. We're going to evolve into different kinds of humans.

01:09:21

Yeah. And I'd like to understand your take on that, because you used the word utopian a little while ago, so I'm interested to know what your vision of the future is there. The only reason I ask is that I think you were saying you want to have a high-agency society. But if we're all connected into the Matrix, wouldn't that reduce our agency in quite a substantial way? I say that because with the Internet we've got all the world's knowledge at our fingertips, and in a weird way, are we better off for it or not? What do you think?

01:09:53

Well, honestly, I don't know. And I really don't even think that's the question, because I feel like it's inevitable, right? So then we have to think about how we want it to look. What are the potential ways it can look? And here's what I really do think about the brain-computer interface part of it. I remember, gosh, this had to be maybe seven or eight years ago, I was having a conversation with one of my girlfriends. We hadn't seen each other in a long time, so we were just catching up, laughing and talking and telling funny stories of things that had happened. And in that moment, I had this thought: wow, in the future, people are potentially going to be able to tune into each other's thoughts, right?

01:10:43

The same way we tune into reality. And you think, oh, nobody will ever be comfortable with that. But I remember joining Facebook, and it was like, stranger danger, don't put your picture on there. And look where we are now. People become comfortable with things over time, and they get certain rewards out of it that increase the level of what they're willing to give away, right? I'm not saying everybody would be that way, just like not everybody lives the same way on social media, but there are people who are very outward: this is my life, this is what I'm living. They live their life out loud.

01:11:27

So I thought, is that going to be the new entertainment, where we're able to almost switch channels and tune into people's conversations, tune into their actual lives, see through their eyes, and witness these real situations, like the new reality TV? I'm saying all this because it really got me thinking about the potential good and bad that could come from that kind of a society. And one of the things I really think it potentially has the ability to do is increase empathy, if we all become aware of just how common our thinking is, the things that everybody struggles with. Right now, as a society, we love to elevate ourselves above other people: oh, they have that problem.

01:12:17

But we lie to ourselves and convince ourselves that we would never think that way or act that way. But it's the common human condition that we can experience the same love, the same joy, the same things, but we can also experience the same levels of depravity in our thoughts and the same struggles with insecurities and things like that, right? We just try to keep those to ourselves now. But if we are in a society where you can't not acknowledge that, then I think we all gain this empathy for each other.

01:12:58

I don't know, it's so difficult. I mean, you could argue that Facebook has now kind of fractionated into echo chambers, and it is actually really good for people to have an identity and a self-presentation and find people they can relate to. But it also has a polarizing effect, in a bad way. And then there's the thing you were saying about people being able to broadcast their thoughts and connect to other people through their thoughts. You could argue that people need privacy, and in a way that might be oppressive, because we're slaves to the algorithms. Even me on YouTube, and yourself as well: not many people might watch this video, and then you're going to say, well, I'm not going to interview Tim again, because he's become... It's called audience capture, right?

01:13:41

You get kind of enslaved by the nexus that you've embedded yourself in, and that is agency-eroding. And it's really difficult, because you can argue that, at least in some limited way, it might increase your agency, but it also makes you even more memetically enslaved than you were before.

01:14:00

Yeah. I think it comes down to the difference between people who chase that acceptance or validation and people who are just exploring things out of their own curiosity. Right? To me, this conversation is amazing, and I'm so grateful to have you on my show, and I really don't care if anybody else thinks the same way about it, because to me, this is fun and exciting, and I try to approach things that way. I've dived into the direction I'm going with my writing and with what I'm producing for my show because it's interesting to me, and I know there are likely people out there who will find it just as interesting. But that's not my motivation. My motivation is to explore where this is going.

01:14:51

And to find some kind of meaning in it, some kind of way to potentially make a difference. I don't know. I tend to think the suffering in social media is among the people who are really stuck on that validation side. And it could come down to brain chemistry alone: the dopamine rushes from the likes and all of that kind of stuff. I know that's a real thing, and people get addicted. It's such a superficial addiction, but it's brain chemistry, so I don't know.

01:15:33

Yeah. And there's the question of to what extent it is a natural extension of how we function. Anyway, I spoke with Kenneth Stanley, who's just made a serendipity social network with no likes and no popularity contest. But at the end of the day, the reason we do a lot of this self-presentation and reputation building is that it gives us power and influence, and it's the way to get ahead in the world. There was this wonderful Black Mirror episode called Nosedive, about a dystopian future where all of this social status ranking became homogeneous and you had just a single number that represented your social ranking. If you had a bad interaction with someone, it could just cause this nosedive.

01:16:17

You know, because of Goodhart's law, your social status in and of itself could actually trigger other people to perceive you differently, creating almost a market dynamic of social status. To me that is a nightmare, and that's why...

01:16:29

And they're doing it in China. I mean, they've got the social credit system.

01:16:38

Almost. It's tyrannical, but it could so easily happen. And that's the thing. Before the interconnectedness that we have now, we had the privacy to discover ourselves and to explore new ideas away from the judgmental eyes of others. I know you were saying it could be a good thing to share information with others, but in a very protected way. Still, there's something to be said for just having clearly demarcated private spaces.

01:17:07

Yeah. Again, when I see what VERSES is doing, and what they've done in building the next evolution of our Internet protocol, right, which they don't own; they gave it away. It's an open protocol, but that's where our Internet is going. And I can't wait, because right now I feel like we're using all these extended reality technologies in the most unsecured environment, the World Wide Web, where all the transactions take place under these centralized umbrellas. So we have no control over our data or any of that. We have no power in that respect other than to say yes or no. There's no in-between of, well, you can have some of this but not this, and there are no guardrails at all. Security was an afterthought in the World Wide Web.

01:18:02

But with HSTP, all of the transactions take place at every touch point in spatial realms, spatial environments. So that really does put the control onto the user, the person who controls the space, which means even our own selves, our own autonomy. We can now determine how that information is being used, who can access it, and for how long. Identity is going to be attached, but in a way that protects us, because we own and govern that identity ourselves. With things like deepfakes, there will still be people faking videos and stuff in this space, but it's going to be so clear whether it's original from the person or not. So then everything becomes obvious, more like a parody. There's no mistaking it: did that person really say that? Is that really happening?

01:19:08

It’s going to be clear whether it was or not. I don’t know. I think that we’re just going to have so much more control over our identity, our information, all of that.

01:19:22

Yeah, I can see it both ways. I think the one good thing about having, let's say, a huge platform like Twitter or Facebook is that they can just sort things out, and it's all on their platform. But the problem is they're deciding for themselves. They're basically self-regulating. And I think people underestimate the reality we live in now, where your personal identity is diffused across many systems and is legally recognized. Your digital identity is recognized, and you don't have control over it. You don't have control of your own narrative, you don't have control of all of the data and so on. So, yeah, I really love the idea of standardizing and systematizing that.

01:20:01

But by the same token, now you've got this kind of consensus-based distributed network, and you have to trust, to a certain extent, that everyone plays by the rules, so the problem is almost more diffuse than it was before. So I think there are arguments both ways, but in principle, I think it's really good to have rigid standards around this stuff.

01:20:26

The whole idea of the Internet from the beginning was to have this decentralized space, right? But you can't have that in action if all the transactions are taking place under centralized umbrellas. So I think moving into a space where the control comes back to self-sovereign identity, the self-sovereign ability to move within the space, is important, because the things you were mentioning earlier, the governance issues and possible tyranny, are a lot harder to do in an environment that is decentralized. Obviously there will be pressures, but when you talk about digital currencies and moving into this value-exchange system, where money, fiat as we have experienced it, totally shifts into just value exchange, then you really can't control that.

01:21:29

You know what I mean? There will be bad actors, there will be good actors; life will be different. I almost envision that when we move into this space, it's going to take us back to the idea we had when things were a barter system, right? What do you have of value? What do I have of value? Do we find a mutual value exchange there that's beneficial for all? I think a lot of change is going to come from the system, but I really do think humans are going to be in a much better position to control how their agency is enacted and projected within a society like that.

01:22:17

Yeah, I think that makes sense. I was reading this book called Number Go Up, all about the crypto crash and Bankman-Fried and so on. In a way, it was quite horrifying to me that Bitcoin is a bit like a spreadsheet that just has a load of numbers in it. It didn't have any intrinsic value, but people felt that it did. And then there's the Tether fiasco.

01:22:39

Yeah, but the problem with those was the centralized exchanges. It wasn't the decentralized system of the crypto itself. FTX was a centralized exchange. Sam Bankman-Fried was running it; it was just a centralized organization. So the people who kept their crypto on the exchange were the ones who lost out due to his corruption, but anybody who had their crypto in self-custody wallets was unaffected.

01:23:16

Yeah, but I'm just making the broader point. The folks who ran Tether, I think, didn't really have a background in finance. Wasn't one of them, like, the star of the Mighty Ducks? And everyone kept asking them, well, are you sure there are actually dollars for all of this? Can you prove it? And they were really sketchy about it and didn't want to talk about it. But this is why we do need regulation, because clearly, if you just leave people to their own devices, they're going to do bad stuff, right? So we need some kind of system with lots and lots of counterbalancing agents that actually lead to good outcomes. I was going to give an example as well. There's a book by Daniel Kahneman; I think it's called Bias.