The Spatial Web: A New Dimension of the Internet and a Technological Awakening for AI | Exclusive Interview with Denise Holt #SpatialWeb #ActiveInferenceAI #HSML

In this exclusive interview and presentation, Denise Holt, founder of AIX Global Media and a leading educator in Active Inference AI and Spatial Web technologies, discusses the evolution of the Internet into the Spatial Web and the transformative power of Active Inference AI. Hosted by the Gigabit Libraries Network, in association with the International Federation of Library Associations and Institutions (IFLA), headquartered in The Hague, Netherlands, this conversation explores how new technologies like HSML (Hyperspace Modeling Language) and HSTP (Hyperspace Transaction Protocol) will enable decentralized AI and an entirely new kind of intelligent agent, while bridging all emerging technologies across the Internet with a unified common language.

Denise explains how VERSES AI is leading this paradigm shift by developing the protocol and IP for the Spatial Web and donating them to the IEEE, setting the stage for global adoption. This new AI, based on world-renowned neuroscientist Dr. Karl Friston’s Free Energy Principle, mimics biological intelligence, offering sustainable, explainable, and human-governed AI systems. Learn about the potential of the Spatial Web to power Smart Cities, decentralized AI, and autonomous intelligent agents, transforming everything from data privacy to real-time AI decision-making.

Learn more about Active Inference AI and the future of Spatial Computing and meet other brave souls embarking on this journey into the next era of intelligent computing.

Join my Substack Community and upgrade your membership to receive the Ultimate Resource Guide for Active Inference AI 2024, and get exclusive access to our Learning Lab LIVE sessions.

00:13

Speaker 1

So, Denise, welcome.

00:17

Speaker 2

Hi. Thank you. Thank you for having me.

00:21

Speaker 1

Please introduce yourself, if you would, and, you know, kind of how you came to this point and then tell us what this point is.

00:31

Speaker 2

Sure. So, my name is Denise Holt. I have a company, AIX Global Media, and I have been doing a lot of writing and educating in the space of active inference AI and spatial web technologies. These are new technologies, and they’re about to get into the hands of the public early next year. So I’ve known it’s coming. You spoke about the IEEE standard protocol for the spatial web and the recent approval of that protocol as a global standard. I’ve been on the working group developing that standard for the last two years. The working group has existed for four years, and the development has been occurring over those four years. So it’s finally gotten to the approval stage, and it’s become a global public standard now. And I’ll talk more about that in my presentation. But that’s going to change everything.

01:38

Speaker 2

It’s going to change everything about the way we interact with technology, all emerging technologies, including AI. Should I jump into the presentation, or is there anything else? Okay. What I’m going to be discussing is active inference AI and spatial web technologies. The thing that we need to understand is that our Internet is evolving. And what this means is right now we’re in the World Wide Web, where HTTP and HTML enable us to build websites, web domains. Before that, we had TCP/IP, which enabled email, sending a message from one computer to another, and then HTTP and HTML made it to where we could attract people to our web domain. And that is the state of our Internet still.

02:34

Speaker 2

We’ve progressed to mobile, to where now we can access and do all of these things through our own personal devices, but it’s still largely the same, and it’s the World Wide Web. What’s about to happen is a new layer, a new protocol, is coming on top of our Internet, and it’s called the spatial web. The protocol is HSTP, Hyperspace Transaction Protocol, and the programming language is HSML, Hyperspace Modeling Language. And essentially what this is going to do is take us out of this library of pages and documents within the World Wide Web, the website domains that contain all of this data. It’s going to enable everything in every space to become a domain, and therefore everything in every space, and all spaces, then become programmable through HSML.

03:36

Speaker 2

And what this means is that you can program everything from permissions and credentials and ownership and all kinds of things that pertain to the entity itself, but also attributes, descriptive properties about those things, and their interrelationships with each other. And all of this is able to be tracked and updated in real time. So what this is going to enable is, instead of just apps on our app store where you’re interacting with an app, AI agents will become the new application software, and they’ll be able to be built within the space. All of this intelligence layer now within the spatial Internet will become empowered through AI. We’ll talk a little bit more about that. There are a few entities that are behind this.

04:34

Speaker 2

There’s a company called VERSES AI, and they actually are the ones who developed this new protocol, but they donated it to the public four years ago, donated it to the IEEE, so that these global core standards could be built around this. So that was their gift to the public, their gift to the world. The Spatial Web Foundation is a nonprofit that has been organized around the development of this protocol. But this is a free, public, global protocol. And what VERSES has done is they’ve built a platform that’ll be the first interface for the public to be able to play in this new space of the Internet. This enables an entirely new kind of AI called active inference AI. It’s a first principles AI. It’s based off of the same mechanics as biological intelligence. Dr. Karl Friston, he’s the number one neuroscientist in the world.

05:31

Speaker 2

He’s the most cited neuroscientist in the world. And active inference is his methodology. It’s based on a discovery of his called the free energy principle, which describes how all biological systems learn. And maybe a year or so ago, it was actually proven by a research team at the RIKEN institute in Japan that indeed, the free energy principle is how neurons learn. So it’s entirely different than what we’re seeing right now with current AI, which is deep learning, machine learning AI. It’s a completely different approach, and we’ll talk a little bit more about that as we move through. So the future of AI with this technology, it’s shared, distributed, and multiscale. It’s an entirely new kind of artificial intelligence, and it’s able to overcome the limitations of machine learning AI. This AI is knowable, explainable, and capable of human governance.

06:33

Speaker 2

It operates in a naturally efficient way. There’s no big data requirement. Any amount of data can be made smart, and it’s based on the same mechanics as biological intelligence. It learns in the same way as humans. And like I said, the underlying principles have been proven to explain the way neurons learn in our brain. And if it sounds too good to be true, it’s happening right now. So what is the spatial web? The spatial web is Web 3.0, basically the next evolution of our Internet, the same Internet that connects us all, that we’re talking across right now. It’s just another protocol layered on top of it that expands the network and expands the capabilities.

07:17

Speaker 2

So the network itself is about to explode in size, because instead of just web domains and websites, now every person, place, or thing in any space can become a domain. It is the next evolution of the Internet. It’s going to be powered by AI. The new protocol is Hyperspace Transaction Protocol, and Hyperspace Modeling Language is the programming language. These are global sociotechnical standards that have been developed over the last four years. And this slide was when it was in the final ballot process with IEEE, but it’s actually been approved now. And four years ago, interestingly enough, when the IEEE began this process of the global standards around this, they deemed the spatial web protocol a public imperative, which is their highest designation. So what does this mean?

08:14

Speaker 2

Basically, our Internet is about to become the Internet of everything, exponentially expanding the number of domains in our network. Right now, with the World Wide Web, you’ve got websites, and they contain information, data, whether it’s text or images or, you know, anything. And if you look at what these deep learning machine learning tools can do right now, they’re doing things with that data, right? It’s natural language, it’s image processing or video. What’s different with this new technology is you’re talking about spaces and all entities within spaces. A spatial web domain then becomes an entity with a persistent identity through time, with rights and credentials. And, you know, you’re talking about dimensional concepts, organizations, agents, persons, things. So everything is a part of this network, and everything then becomes empowered by AI, and it’s an entirely new kind of AI.

09:19

Speaker 2

The current state-of-the-art AIs are siloed applications. They’re built for optimizing specific outcomes. They’re unable to communicate their knowledge frictionlessly and collaborate with other AIs. If you notice, each AI application is a siloed application, and it’s trained to be able to produce those specific outcomes. What’s different about this technology is it’s a self-evolving system that will be learning moment to moment, upgrading its world model, its understanding of reality, and thus mimics biology while enabling general intelligence. So it’s shared intelligence at the edge of everything, while these neural nets are just gigantic databases which require an immense amount of energy and power to run.

10:12

Speaker 2

So this decentralizes AI and it takes the processing to the edge, meaning instead of a gigantic database that has to process all of the training and all of the queries, now these agents can be dispersed across the Internet and process at the edge, meaning at edge devices like your computer, your cell phone, private servers. So you can think of it in a similar way: before personal computers, there were supercomputers, but once personal computers got in the hands of everybody, then all of the processing took place in their homes. So it’s a distribution of processing and power. Super-powered GPUs are not required. The processing takes place on local devices with existing infrastructure. It uses real live data, real-time data from IoT sensors and machines, and ever-changing context that’s programmed into the network through HSML.

11:14

Speaker 2

And it eliminates the need to tether to a giant database. Active inference agents throughout the network learn and adapt from their own frame of reference within the network, and it minimizes complexity. It uses the right data in the moment for the task at hand. Now, HSML becomes a common language for everything, providing unprecedented levels of interoperability between all current, emerging, and legacy technologies. It’s a programming language, bridging communication between people, places and things, and laying the foundation for a real-time world model of understanding for autonomous systems. HSTP, the protocol itself, the Hyperspace Transaction Protocol, naturally provides guardrails. Now, if you think of the World Wide Web that we’re in right now, it’s the most unsecured environment. It’s why we have all of these problems like hacking, tracking, faking, and it’s really hard to secure.

12:19

Speaker 2

And in the same way, when you want to engage in a website here, you basically have two options. Yes, you can have access to all of my data and everything that I’m doing within your program, or no, and then I’m opting out and I can’t use it, right? What HSTP enables is different, because instead of transactions taking place under the umbrella of a centralized organization, now transactions take place at every touch point, because everything is a domain. So you can be very nuanced in programming permissions and required credentials and ownership, to the point where you can be very selective about what parts of your data you’ll allow and for how long. You can set expiration dates on it. It really does give us this data privacy and data sovereignty that we’ve wanted, but that is impossible with the World Wide Web.
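
As a rough illustration of the idea described here (selective, per-field permissions that expire), the following is a minimal Python sketch. It is not HSML or HSTP, whose syntax is not shown in this talk; the `Grant` class, the `is_allowed` function, and the example names such as `travel-agent` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """Hypothetical permission: one grantee, one data field, one expiry time."""
    grantee: str
    field: str
    expires_at: datetime


def is_allowed(grants, grantee, field, now):
    """Return True only if an unexpired grant covers this grantee and field."""
    return any(
        g.grantee == grantee and g.field == field and now < g.expires_at
        for g in grants
    )


# Illustrative data only: share one field with one agent for one hour.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
grants = [Grant("travel-agent", "passport_number", now + timedelta(hours=1))]

# Granted field, still inside the time window.
print(is_allowed(grants, "travel-agent", "passport_number", now))
# A field that was never shared is refused.
print(is_allowed(grants, "travel-agent", "home_address", now))
# The same grant is refused once it has expired.
print(is_allowed(grants, "travel-agent", "passport_number", now + timedelta(hours=2)))
```

The point of the sketch is the contrast with the all-or-nothing choice above: access is decided per field and per moment, rather than once for the whole account.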

13:21

Speaker 2

It provides transparency and explainability. Active inference agents are explainable AI. They can report on exactly how they’re coming to their decisions and their outputs. That’s impossible with deep learning neural nets. This provides security and authentication, interoperability and standardization, user empowerment and control, and safety and reliability. And what we have then are programmable spaces. Anything inside of any space is uniquely identifiable and programmable within a digital twin of Earth, producing a model for data normalization. This enables adaptive intelligence, automation, security through geo-encoded governance, and multi-network interoperability, and it enables all smart technologies to function together in a unified system. And from this we get a knowledge graph.

14:21

Speaker 2

When all spaces and objects and things become programmable, and we can actually program context about the things and their interrelationships to each other, then what we’re doing is we’re building out this knowledge graph that becomes a digital twin of everything. So essentially a digital twin of our planet and all nested systems and entities within it. This creates an ecosystem of nested ecosystems. Intelligent agents, both human and synthetic, are involved, sensing and perceiving continuously evolving environments, making sense of changes, updating their internal model of what they know to be true, and acting on the new information that they receive. And this provides accurate world models for these intelligent agents: collective intelligence trained on real-time data. And it evolves, making decisions and updating its internal model based on what’s happening now, not on historical data sets.
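
The "sense, update the internal model, act on new information" cycle described here can be hinted at with a much-simplified sketch: a plain Bayesian belief update over two states, applied as each new observation arrives. This is only a stand-in for the perception step, not an implementation of active inference or the free energy principle, and the states, sensor readings, and probabilities below are invented for illustration.

```python
def update_belief(prior, likelihood, observation):
    """Bayes' rule over discrete states: posterior is proportional to likelihood * prior."""
    unnormalized = {
        state: likelihood[state][observation] * p for state, p in prior.items()
    }
    total = sum(unnormalized.values())
    return {state: value / total for state, value in unnormalized.items()}


# Hypothetical example: is a room occupied, given readings from a motion sensor?
belief = {"occupied": 0.5, "empty": 0.5}
likelihood = {
    "occupied": {"motion": 0.8, "still": 0.2},  # assumed sensor behavior
    "empty": {"motion": 0.1, "still": 0.9},
}

# Each reading revises the belief immediately, rather than waiting for retraining.
for reading in ["motion", "motion", "still"]:
    belief = update_belief(belief, likelihood, reading)
    print(reading, belief)
```

The belief is revised with every reading as it comes in, which is the contrast being drawn with models trained once on historical data sets.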

15:24

Speaker 2

So when these deep learning machine models are trained, they’re loaded with trillions of data points. The more information, the better they’ll get at matching patterns and potentially giving you an outcome that seems appropriate for what your expectation is. These active inference agents are using real data, real sensory data, coming in through IoT and cameras and different things, and measuring it against a real contextual model that’s ever changing, of all of the interrelationships of the things in all the spaces, and an understanding of reality in the moment in the world. So, active inference is not a language model that’s generating words about the world based on outdated knowledge it’s been fed regarding the world. Active inference is like a biological organism that perceives and acts on our world.

16:21

Speaker 2

It does so by generating more accurate models, understandings and beliefs about our world. HSML computes context, enabling AI’s perception to understand the real-time changing state of anything in the world. And I’ll give you an example. A deep learning large language model can tell you a great story about New York City. But an active inference agent can tell you what’s happening right now anywhere in New York City. So it’s completely different. Now, the free energy principle, which is what active inference is based on, is the principle from Dr. Karl Friston that describes how neurons learn, how all biological systems learn. These two things together, the knowledge graph of the world with the computable context in all the things in all the spaces, and this free energy principle, give us a path to AI governance.

17:23

Speaker 2

It enables computable context that defines, records, and traces the changing details of physical and digital dimensions, social dimensions, meanings, culture, conditions, circumstances and situations, whether geometrical, geopolitical or geosocial, by nature. And it’s a system that safeguards and respects individual belief systems, sociocultural differences and governing practices. And it minimizes complexity through natural intelligence. The more complex a system, the more energy it consumes. When you think of these gigantic databases that are layered neural nets that are processing these deep learning inputs to give you an output, those require an insane amount of energy to do that processing. And for every query that comes in from you or anybody else, all of that has to run through the neural nets and run through an enormous amount of data in order to be able to give you an output.

18:29

Speaker 2

It’s extremely inefficient, and, you know, most people in the industry understand that it’s really not a scalable option. But if you look at how biological intelligence works, the human brain is remarkably energy efficient, operating on just 20 watts, less power than a light bulb. So this new type of AI, this active inference AI, is sustainable AI because it mimics biology, and it’s AI that thinks and learns while protecting the planet. It also enables human governance to scale alongside AI capabilities, with unprecedented cooperation between human and machine cognition. So, VERSES and the Spatial Web Foundation offer us the framework in which we can build an ethical and cooperative path forward for AI and human civilization. VERSES was actually involved in a program called Flying Forward 2020.

19:31

Speaker 2

It was a drone project that was organized by the European Union, and I think it involved, like, eight countries. What they learned from it is indeed, through HSML, you can program human laws and guidelines through a language that the AI can understand and abide by in real time. So this level of cooperation that we are going to be able to have will enable us to scale AI in tandem with human guidelines and human cooperation. So this brings us to a technological awakening. The spatial web protocol creates somewhat of a nervous system for our planet. The approval of the protocol standards by the IEEE represents a monumental leap forward in the evolution of computing and AI across the global Internet. These technologies have the potential to create a more intelligent, adaptive and interconnected digital world, transforming numerous aspects of our lives and industries.

20:34

Speaker 2

So what we’re going to see from 2025 to 2030 are smart cities, smart medicine, smart education, smart supply chains, and smart climate management. With this digital twinning of the planet, these active inference AIs can run simulations based off of all of this real data. The number of simulations is unending, and they can run them simultaneously, so they’ll be able to actually go, okay, we’ve run all these simulations, and these are exactly the steps we need to take to correct these problems, to overcome these problems. The level of control we’re going to have over correcting a lot of the emergency situations that we find ourselves in right now is just going to be a saving grace, I think, for humanity.

21:31

Speaker 2

And then, if you’re familiar with Buckminster Fuller’s World Game: networked natural intelligence agents create a self-evolving intelligence that can help us manage our cities and supply chains, educate us in new ways, and help us realize Buckminster Fuller’s World Game. As Bucky said, make the world work for 100% of humanity in the shortest possible time through spontaneous cooperation, without ecological offense or the disadvantage of anyone. And really, that’s what it’s all about. So if you want a deeper dive into any of this, visit my website, deniseholt.us. I host monthly learning labs where you can learn about active inference AI and spatial computing. I have courses that I’m building now, and in the next month or so there will be curriculum available around this, so you can take entire e-learning courses, and I’ll have certifications and things like that that I’m offering.

22:35

Speaker 2

But yeah, I think that’s it.

22:37

Speaker 1

Amazing. Just amazing.

22:39

Speaker 2

Thank you.

22:41

Speaker 1

I mean, from what I get, that’s all I can say about this. What kind of response do you get from the inventors of TCP and HTML, Vint and Tim?

22:55

Speaker 2

So what’s really interesting is back in January at the World Economic Forum in Davos, Dr. Karl Friston had a panel with Yann LeCun, who’s the chief scientist for Meta, and I believe he also was on a panel with Sir Tim Berners-Lee. So, yeah, I mean, they’re aware. I mean, obviously it’s a natural progression. And when you look at all the emerging technologies that we have, everything from distributed ledger technologies, which are like blockchain and digital currencies, and all currency is moving into this digital currency space. And then you look at augmented reality and virtual reality and mixed reality. What this is going to enable is this mixed reality kind of experience that we’ve all imagined, but the framework wasn’t there.

23:51

Speaker 2

HSML becomes a common language for all these emerging technologies to be able to become interoperable across the Internet. So they’re going to come to life in ways that we haven’t seen because the ability has not been there. And IoT, all of the IoT sensors now will be able to be completely integrated with each other and with this layer of intelligence across the network.

24:17

Speaker 1

That’s the mind-boggling part, the amount of data that you’re embracing. We’re already at kind of peak data management, and now you’re opening your arms to many times more. So that’s fascinating. So do these build on top of these prior protocols, or do they kind of displace them?

24:39

Speaker 2

Yeah. No, so it’s built on top of it. And just like when we moved from TCP/IP to the World Wide Web, we didn’t lose TCP/IP, we still send emails every day. All it did was just expand the capabilities within the network. And so that’s what’s going to happen here. We’re still going to have websites, we’re still going to have email. But what you’ve noticed is when we jumped to websites and then to mobile, 80% of the time we’re playing in the new space because of all the extended capabilities that it offers. So I think that’s what we’re going to experience with this as well.

25:16

Speaker 1

Wow. Questions anybody? I’ve got one more for you. Reactions from big tech who are building these massive models and investing heavily in it. Something tells me they’re not totally fascinated with this. Or are they?

25:32

Speaker 2

There’s a few things happening there that are kind of interesting. And I actually wrote an article back in January, and the panel is actually on, I think it might be on the VERSES YouTube channel if you wanted to check it out. But the panel between Yann LeCun and Dr. Karl Friston was really fascinating. Yann LeCun is the chief scientist for Meta and he’s fully aware of Karl’s work. In the panel, he was like, I agree 100%. I think this is, you know, but he’s like, but we don’t know how to do it any other way than deep learning. Right? He’s thinking backpropagation is the only way we know, and that’s just not true. But I think the thing that he wasn’t really aware of in that conversation is... So to back up for a second.

26:28

Speaker 2

Active inference has long been thought to be an ideal methodology for AI. Active inference is not a secret to these big tech companies, but while it’s long been thought to be an ideal method, it’s also long been thought to be near impossible, because it requires this context layer, it requires this grounding of understanding of reality for the AI. And that’s what the spatial web protocol brings, that’s what HSML brings. So I think a lot of these tech companies that are so deep down the deep learning rabbit hole, in the back of their mind they just have this idea that, oh, that’s a great idea, but it’s really not possible.

27:11

Speaker 2

And all of this technology coming together with the spatial web protocol, the expansion of our Internet into spatial domains, and the ability to distribute these agents within the network, that’s the thing that makes it possible. So what wasn’t possible before is now possible.

27:31

Speaker 1

I can appreciate the appeal to certain engineers, but I can also appreciate the challenge to certain business models by the people who manage the engineers. And so, what problems do you see in implementation?

27:52

Speaker 2

I don’t really see problems because there’s so many advantages to this. Yeah. And I think that there’s going to be a lot of advantages that just guarantee the adoption and people being excited about getting their hands on this technology, one of them being that, well, a couple of them, I mean, you know, it solves the energy problem of deep learning and it decentralizes AI. Right now, I think I saw a quote that less than 10% of companies are using these AI products internally. And I think one of the biggest problems is they have to open themselves up to this third party with their proprietary data. And this enables an entirely different way where you don’t do that. Your data is secure and, you know, you have guardrails and control over your data.

28:50

Speaker 2

So this is going to open up AI to enterprise in a way that you can’t with deep learning. It’s just going to enable corporations and anybody with a website presence right now, anybody with an app in the app store. By building intelligent agents as applications in this new space, they’re going to be able to offer so many more capabilities for their customer base, or whatever they’re building or offering, because these agents, as applications, are aware of each other and the network. So the level of connections and insights that will be made in that space, it’s going to be something we’ve never experienced before. I see it as we’re about to experience an explosion of innovation like we’ve never seen.

29:46

Speaker 2

And I think the World Wide Web, and all of the innovation that it brought to us, is going to pale in comparison to what we’re about to see.

29:56

Speaker 1

That’s a big. That’s a big claim.

29:58

Speaker 2

I know, I know. And I lived through all of that. You know, I’m no spring chicken. So.

30:11

Speaker 1

So, even though data is distributed and more manageable because it’s out near the edge and you bring all these remote processors into play in this coordinated, linked fashion, it’s still a lot more data that’s going to be digitized and representing a database. This is a question about maintaining data over time. Maybe it’s really not this topic, but I was just having the exchange with Vint Cerf earlier. Vint is going to come back on in a couple of months and talk about decay of data, of digital content, and how the iterations of technology, of hardware and software, make all this immense amount of data that we do have now, and that is growing, unusable at some point and lost. Unlike the photos we have in a shoebox that last for 100 years, this stuff, we think it won’t, but it’s going to go away.

31:18

Speaker 1

I’m just curious how this could impact data preservation.

31:24

Speaker 2

So we’re going to have something that we don’t have right now. Right now, the more data you have, how do we parse that data, how do we deal with it as humans? And we’re going to have the assistance of these intelligent agents that will be able to parse the data for us and really help us to navigate through it all. And as far as the immense amount of data that’s going to be created in all of this, you also have technologies that are going to be able to handle it. You know, the photonic chips, different things like that are going to basically use light speed to process. Right. So all of these emerging technologies, they’ve been rising at the same time. So they’re just going to be able to kind of come together and make it all happen.

32:17

Speaker 2

In this new space of the Internet, there’s not just you as the user, there’s you and AI. Everybody is going to have an intelligent agent assistant that’s going to know you intimately. I mean, for instance, think about just, you know, booking travel. It will be well aware of all of your travel documents, all of your preferences, everything else. It’ll be able to act on your behalf and make all of the connections, because it’s aware of and enabled through the Internet to actually make our lives easier in dealing with all of this data.

32:55

Speaker 1

So whose agent is that? It may be working for you in theory, but who does it?

33:01

Speaker 2

It’ll be yours. It’ll be yours. Every person will be enabled within this new space to build these intelligent agents. And the interesting part of it is, if you’re building an agent, you’re imparting it with all of your specialized intelligence and your perception from your frame of reference in the network, right? And that’s the beauty of all of these different agents from all over the world. You know, people from, you know, Africa or India or China, they can build agents that they’re imparting with their understanding and their knowledge and wisdom that will help them to be able to deal with their problems from their part of the world. And then all of these agents are able to learn from each other and pass information and share beliefs with each other. So this agent layer is this intelligence layer of collective, distributed intelligence.

34:03

Speaker 2

It’s going to become kind of an organism of intelligence. It’s going to grow in knowledge in the same way human knowledge grows. You know, the way we grow our knowledge depends on the diversity of knowledge within the sphere of human intelligence. And we test each other and push back on each other and learn from each other, and that’s how we grow our human knowledge. These agents are going to do the same thing.

34:31

Speaker 1

Well, all those neurons are mine personally, and they’re working for me, in theory at least, the ones that haven’t been reprogrammed for me. These will be some kind of hybrid because they’ll be created by external factors. We’re running out of time. There’s a question about device dependence.

34:57

Speaker 2

Yeah, no, these intelligent agents, they work on devices. We already have laptops, cell phones. They’re empowered off of any device. Now more devices are going to come online. I mean, we’ve already seen Apple Vision Pro. You know, we’ve got the Meta glasses. There’s going to be all these new augmented reality devices that’ll help us. You know, I just saw something yesterday, and it was glasses for the hearing impaired. The glasses have a microphone on them, so somebody can be talking and it’ll put the subtitles in front of their eyes so they don’t have to read lips anymore. You know, it’s there. We’re going to be so empowered by all these new technologies that are able to take advantage of this.

35:48

Speaker 1

Okay, two more questions. One main ethical concern, do you think. What?

35:56

Speaker 2

Well, I think we’re more empowered through this technology to really approach the governance aspect of AI. VERSES actually put out a report last year called The Future of AI Governance. And in it they were talking about how HSML enables us to actually take human laws and guidelines and make them programmable so the AI can understand and abide by them. So these active inference agents, because they’re learning in real time and they’re self-reporting, can be governed by human entities. They’re going to lead to really trusted autonomous systems that can handle mission-critical operations. You can’t do that with deep learning because deep learning systems are black boxes. You have no idea how they’re coming to their outputs.

36:55

Speaker 2

They can go down a wrong direction very quickly, and it’s really hard to correct that. And they can give you an output that sounds like it’s credible, but it’s absolutely wrong, because if they don’t have the information in their training data, then they’ll make it up as if it were true. So there’s no trust there. And this is going to be a more trusted system. So we’ll have all levels of AI systems. These deep learning tools are not going to go away, but they’re going to be looked at as tools. You know, make me this, write me this, create me this, code me this. And in VERSES’ report on potential governance for AI,

37:43

Speaker 2

they proposed an AIS rating system, an autonomous intelligence system rating system, so that we can kind of know, according to the level of capability of the autonomous system, what kind of governing capabilities we should give it, right? How much control versus how much freedom. And I think it seems like a very logical approach, and it’s definitely doable with, you know, all of this new technology that’s coming.

38:14

Speaker 1

Like a trustworthy thing almost.

38:17

Speaker 2

Yes.

38:17

Speaker 1

Yeah, well, trust is what people are looking for. Absolutely.

38:22

Speaker 2

Yes.

38:24

Speaker 1

Last question. Excuse me. Now, IEEE just adopted this. That was last month or something?

38:33

Speaker 2

Yeah. So the final vote was in. Yeah, it was like last month or so. It went to balloting in April, and then it took a couple of months for, you know, the final voting and everything to go through. And, yeah, it’s been approved.

38:47

Speaker 1

That’s amazing because it’s so hard to do standards because it’s always helping somebody and hurting somebody else, at least in their mind. So when, what do you see as kind of the next big event around this? When will it kind of pop out or when will it kind of get people’s attention?

39:08

Speaker 2

Well, so, you know, I know that the Spatial Web Foundation is going to be publishing the standard over the next couple of months and making the standard draft document publicly available. And I think it’s like a 150-page document. It’s very comprehensive, but that will be made available to the public. Now, what VERSES has done is they’ve built the first interface for the public to be able to interact in this space. So their operating system, or for lack of a better word, platform, is called Genius. And with Genius, anybody is going to be able to build one of these intelligent agents as an application. So right now they’re in beta testing with a wider developer community, and they’ve got huge beta partners like NASA, Volvo, Cortical Labs, you know, some big players and a lot going on. They’re working with smart cities all over the globe.

40:12

Speaker 2

But the platform is going to be made available to the public early next year. So this is coming, and we will all be able to start, you know, and as we start kind of building out, it’ll be a little rudimentary at first, but as this knowledge graph grows, the capabilities of these agents grow. And, you know, we saw the World Wide Web.

40:36

Speaker 1

Right, right. Okay. We’re going to leave it right there. I want to thank you so much for this, and I want you to come back, you know.

40:43

Speaker 2

Absolutely. Thank you so much, Don and everybody else. I really appreciate the opportunity to speak today.

40:49

Speaker 1

Jen, Alta, thank you so much for giving us a report from last week. This is fascinating. So much technology is converging, there’s so much interest, and there are a lot of changes happening on a socio-technical level, as you use the term, which I thought was really interesting. So with that, we’re going to stop the recording, and we’re going to thank everybody and ask you to come back at the next opportunity.

41:13

Speaker 2

And I would like to say too, if anybody would like more information, feel free to reach out to me. You can reach me on LinkedIn, and I’m happy to engage.

41:22

Speaker 1

Okay, great. Everybody has your link on the registration page, including. Okay, thank you so much, Donna.

A different class of energy governance is emerging. Seed IQ™ converts hidden energy waste into measurable savings and amplified energy...

Why the world's leading neuroscientist thinks "deep learning is rubbish," what Gary Marcus is really allergic to, and why Yann...

Seed IQ™ enables coherence across distributed agents through shared operational belief propagation maintaining system-level viability, constraints, and objectives while adapting...

Preliminary results with Seed IQ™ suggest that barren plateaus can be treated as an operational state that is detectable, actionable,...

Operations are control problems, not prediction problems, and we're applying the wrong kind of intelligence. The missing layer: Adaptive Autonomous...

We have been entrusted with something extraordinary. ΑΩ FoB HMC is the first and only adaptive multi-agent architecture based on...

AI is entering a new paradigm, and the rules are changing. AXIOM + VBGS: Seeing and Thinking Together - When...

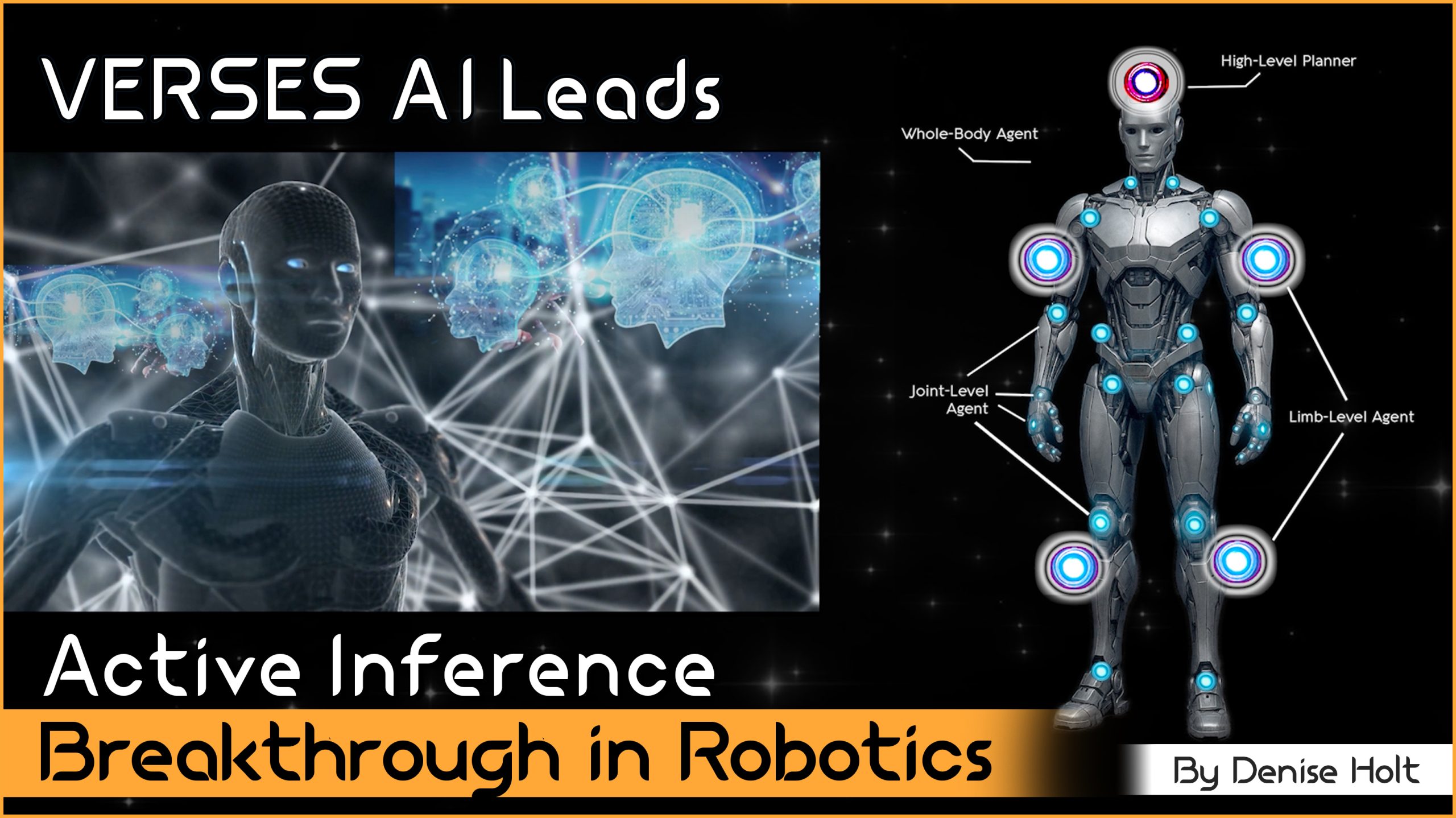

Blueprint for new robotics control stack that achieves an inner-reasoning architecture of mult-agents within a single robot body to adapt...

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...