Active Inference & The Spatial Web

Web 3.0 | Intelligent Agents | XR Smart Technology

The Dawn of True AI Agency: Why Active Inference is Surpassing LLMs and Shaping the Future

- By Denise Holt

- February 17, 2025

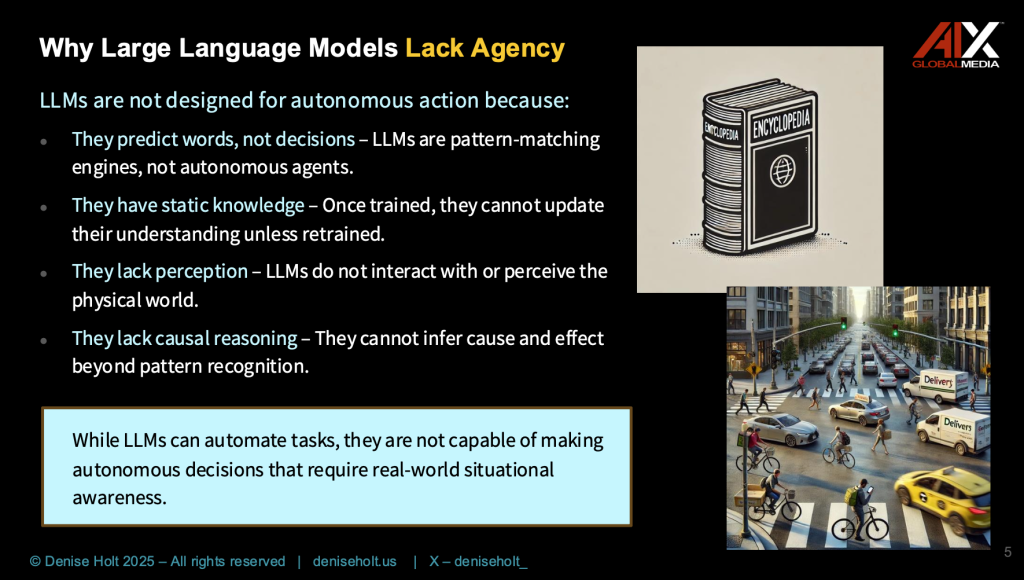

We stand at a critical juncture in AI that few truly understand. While LLMs like OpenAI’s ChatGPT have captivated the public with their conversational fluency, they highlight a fundamental limitation: they can automate tasks but lack true agency.

Many enterprises and other organizations have been experimenting with so-called “Agents” to automate tasks and draw extended insights from internal operations, and they are quickly finding that these LLM Agents fall short of expectations. Even Instance-Based Learning (IBL), an impressive engine by HowSo that uses LLMs for “interpretable AI,” is constrained by the same limitation as every LLM system: it makes decisions by correlating against static data rather than by learning continuously and developing causal understanding.

The consensus is that the next phase of Autonomous Intelligence must go beyond task automation to systems that learn, plan, and adapt dynamically using live data, moving from correlation over static data to causal reasoning.

This is where Active Inference AI Agents and the Spatial Web Protocol are stepping in, offering adaptive, energy-efficient, multi-agent intelligent systems that continuously evolve with real-time data.

A new digital transformation is upon us, incorporating adaptive intelligence automation and spatial computing. This isn’t about more sophisticated chatbots, or better pattern matching, or even attempting explainability using LLMs; it’s about the emergence of Autonomous Intelligent Systems that possess true agency — the ability to autonomously act in the moment based on real-world situational awareness.

This is a major shift in technology that will affect every industry across the globe.

The Current State of AI: Understanding the Limitations of Large Language Models

The current AI landscape is dominated by large language models, with systems like ChatGPT, Claude, and DeepSeek garnering significant attention. These models have demonstrated remarkable capabilities in text generation, coding assistance, and pattern recognition. However, their fundamental architecture reveals critical limitations that prevent them from achieving true intelligence or agency.

“I know people talk about Agentic AI and I always cringe because when they say the word Agentic AI, they don’t really mean Agents. They mean things tethered back to the central mothership for the most part.” — Dr. David Bray, PhD

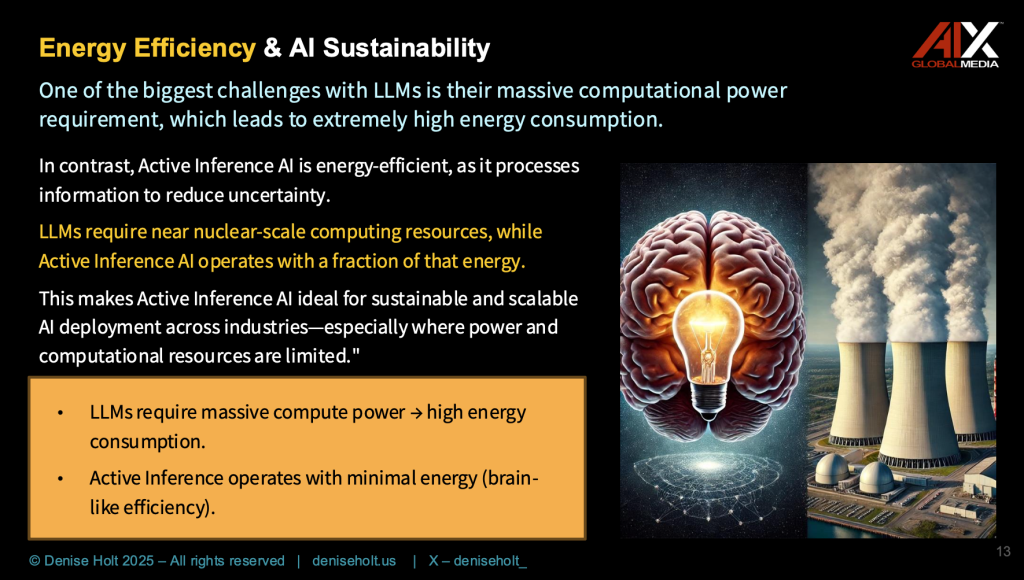

At their core, LLMs are sophisticated pattern-matching engines trained on vast amounts of static data (non-updating, frozen, stored information from the past). They operate like a giant database that requires enormous computational resources and energy just to maintain basic operations, with projected demand approaching the output of small nuclear power plants. This unsustainable approach has led to what many industry experts call the “AI power crisis,” where the energy demands of these systems threaten to outstrip our ability to power them.

The limitations go beyond resource consumption. LLMs operate in a fundamentally reactive manner, unable to update their core knowledge without massive retraining operations that cost hundreds of millions of dollars. They lack causal understanding, instead relying on statistical correlations that often lead to confident but incorrect assertions. As Meta’s Chief AI Scientist Yann LeCun recently stated at Davos 2025:

“I think within five years, nobody in their right mind would use [generative AI and LLMs] anymore, at least not as the central component of an AI system. … We’re going to see the emergence of a new paradigm for AI architectures, which may not have the limitations of current AI systems.” — Yann LeCun, Chief Scientist, Meta

This bold prediction underscores the growing recognition that current approaches to AI, while impressive in narrow applications, represent a technological dead end.

Understanding Agency: The Active Inference Revolution

True “agency” in autonomous intelligence represents a fundamental departure from the pattern-matching paradigm of LLMs. It requires systems that can perceive, decide, and act autonomously in an environment while understanding the causal relationships that govern their world. This isn’t just about responding to inputs; it’s about actively predicting and shaping outcomes through intelligent interaction with the environment. It’s about understanding the “why” behind an observation before acting, while being able to act on the environment to gather more information to increase the certainty of understanding the “why.”

Active Inference (developed by world-renowned neuroscientist and Chief Scientist at VERSES AI, Professor Karl Friston) provides the theoretical framework for this new approach to AI. Based on the Free Energy Principle, which describes how biological systems maintain their order and adapt to their environment, Active Inference autonomous systems operate by continuously updating their understanding of the world through a cycle of prediction, testing, and refinement. This mirrors the way biological intelligence works, allowing systems to learn and adapt with remarkable efficiency.
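
For readers who want the quantity behind the Free Energy Principle, the standard textbook form of variational free energy is sketched below. The symbols are assumptions introduced here, not notation from this article: $o$ is an observation, $s$ a hidden state of the world, $p(o, s)$ the agent's generative model, and $q(s)$ its current belief about the hidden state.

```latex
F[q] \;=\; \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big]
     \;=\; D_{\mathrm{KL}}\big[\,q(s)\,\|\,p(s \mid o)\,\big] \;-\; \ln p(o)
```

Because the KL term is never negative, lowering $F$ does two things at once: it pulls the belief $q(s)$ toward the true posterior $p(s \mid o)$, and it bounds the surprise $-\ln p(o)$ of what was observed. That is the formal sense of the "prediction, testing, and refinement" cycle.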

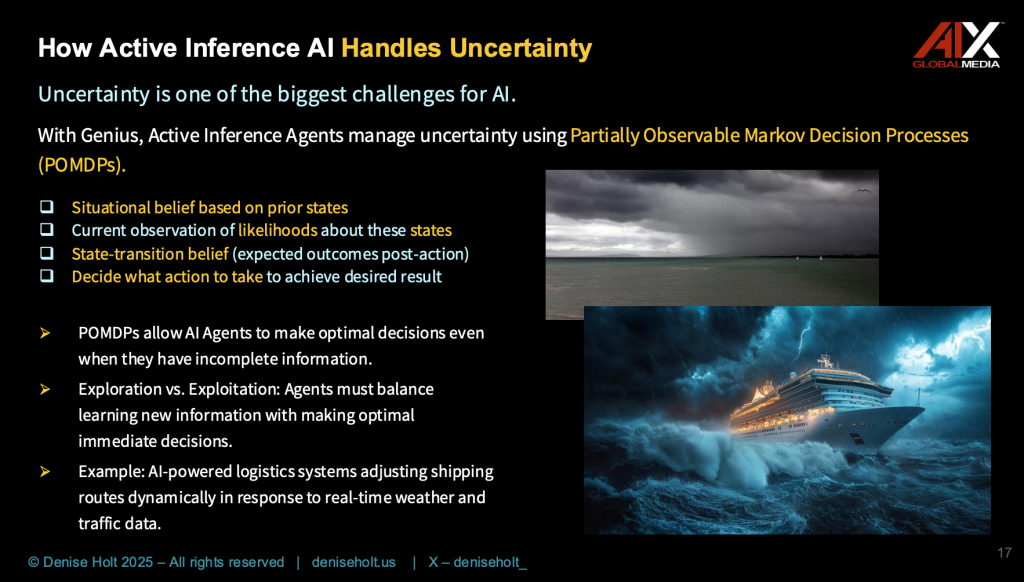

The key distinction between Active Inference agents and traditional AI systems lies in their ability to handle uncertainty and make decisions in real-time.

While an LLM might generate a plausible response based on its training data, an Active Inference agent actively seeks to reduce uncertainty and make sense of its environment, making decisions based on a sophisticated understanding of cause and effect.

This enables them to operate autonomously in complex, dynamic environments where traditional AI systems would fail.

Doesn't Instance-Based Learning (IBL) Do the Same Thing?

No. Instance-Based Learning has gained popularity in enterprise settings that want to deploy AI Agents because it offers a form of “interpretable AI,” with a chain of “explainability” behind its outputs. However, IBL achieves this by comparing new inputs with “stored experiences,” a sophisticated form of pattern matching that has no situational awareness and still struggles to adapt to new patterns. It does not build a model of understanding. It compares new data against past data and records the chain of comparisons, weighting each by familiarity to estimate accuracy. In that respect, these systems share the limitations of LLMs.

Key Differences Between Active Inference and IBL:

Active Inference AI:

- Based on the Free Energy Principle by Karl Friston, Active Inference AI continuously updates its internal models by predicting, testing, and adjusting in real time.

- It minimizes uncertainty dynamically, using probabilistic reasoning and causal inference, making decisions based on real-time sensory data and continuous feedback from the environment.

- Operates as a decentralized, scalable system through the Spatial Web Protocol, enabling real-time collaboration between distributed Intelligent Agents.

- Learns continuously without retraining, making it highly adaptive for complex, evolving environments like supply chains, smart cities, and healthcare systems.

Instance-Based Learning engine by HowSo:

- IBL stores and recalls specific instances from past experiences to make decisions, rather than building generalized models. Each new decision is based on comparing new input with stored instances.

- No continuous learning: Each decision is influenced by previously encountered examples, but the system doesn’t build an evolving understanding beyond its instance database.

- IBL focuses on interpretable AI with high transparency, providing clear reasoning for each decision by referencing past instances, which is useful for industries requiring clear AI decision trails, such as finance and healthcare.

- Limited adaptability: While highly accurate for familiar tasks, IBL systems may struggle with unfamiliar scenarios where no similar past instances exist.
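
To make the "compare new input with stored instances" idea concrete, here is a minimal sketch of generic instance-based prediction (k-nearest neighbours). It is not HowSo's engine; the class `InstanceStore`, its methods, and the toy data are hypothetical names chosen for illustration.

```python
import math
from collections import Counter


class InstanceStore:
    """Toy instance-based learner: keeps raw examples, fits no model."""

    def __init__(self):
        self.instances = []  # list of (feature_tuple, label)

    def add(self, features, label):
        self.instances.append((tuple(features), label))

    def predict(self, features, k=3):
        """Return (label, neighbours): the majority label of the k closest
        stored instances, plus those instances as the explanation trail."""
        ranked = sorted(
            self.instances,
            key=lambda inst: math.dist(inst[0], features),
        )
        neighbours = ranked[:k]
        votes = Counter(label for _, label in neighbours)
        return votes.most_common(1)[0][0], neighbours


store = InstanceStore()
for point, label in [
    ((1.0, 1.0), "approve"), ((1.2, 0.9), "approve"), ((0.9, 1.1), "approve"),
    ((5.0, 5.0), "decline"), ((5.2, 4.8), "decline"), ((4.9, 5.1), "decline"),
]:
    store.add(point, label)

# A familiar query: the answer comes with the instances that justify it.
label, trail = store.predict((1.1, 1.0), k=3)

# An unfamiliar query: nothing similar is stored, yet a label still comes back.
far_label, far_trail = store.predict((100.0, 100.0), k=3)
```

The returned `trail` is the kind of decision trail the list describes. The second query shows the adaptability limit: a point far from every stored instance is still answered from its nearest neighbours, with no signal that the situation is new.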

In essence, Active Inference AI is like a scientist constantly experimenting, learning, and evolving its understanding of the world, while IBL is like a librarian referencing past cases to inform new decisions. Active Inference offers greater adaptability and scalability, whereas IBL offers interpretability but with more static learning.

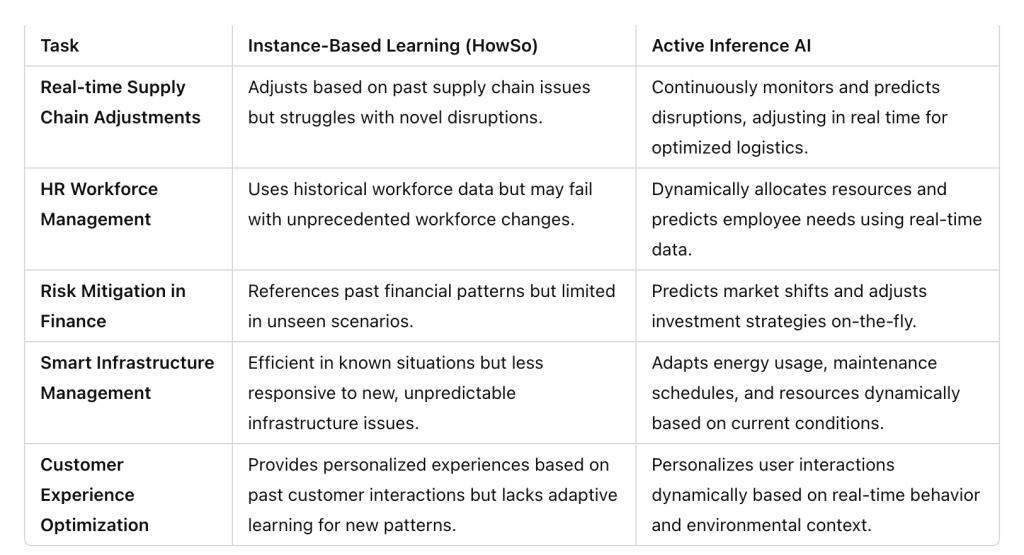

IBL vs. Active Inference in Enterprise Operations:

Active Inference AI’s continuous learning, real-time adaptation, and energy efficiency make it superior for enterprise operations compared to the more static, instance-reliant IBL approach.

The Technical Foundation: How Active Inference Enables True Agency

The technical architecture of Active Inference AI represents a radical departure from traditional deep learning approaches. Instead of relying on massive neural networks trained on static data, Active Inference systems use sophisticated probabilistic models that continuously update their understanding of the world through active interaction with their environment.

At the heart of this approach is a multi-agent system of distributed intelligence using concepts like:

- Active Inference — making the best guess of what will happen next based on what you already know, then updating your guess as you get more information — like continually updating your expectations to improve your predictions.

- Active Learning — choosing what to learn next to get the most useful information.

- Active Selection — choosing the best option among many by comparing their predicted outcomes.
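
The three ideas above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not VERSES' implementation: the two-state machine example, the sensor likelihoods, the option scores, and every function name are hypothetical.

```python
import math


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def update_belief(belief, likelihood, observation):
    """Bayes rule: posterior(s) is proportional to P(obs | s) * belief(s)."""
    return normalize([likelihood[s][observation] * belief[s]
                      for s in range(len(belief))])


def entropy(belief):
    """Shannon entropy in bits; lower means more certain."""
    return -sum(p * math.log2(p) for p in belief if p > 0)


def expected_info_gain(belief, likelihood):
    """Expected drop in entropy from reading one sensor once."""
    gain = entropy(belief)
    for o in range(len(likelihood[0])):
        p_o = sum(likelihood[s][o] * belief[s] for s in range(len(belief)))
        if p_o > 0:
            gain -= p_o * entropy(update_belief(belief, likelihood, o))
    return gain


def expected_score(belief, scores_by_state):
    return sum(p * v for p, v in zip(belief, scores_by_state))


# Hidden states: 0 = machine healthy, 1 = machine faulty.
prior = [0.5, 0.5]

# likelihood[state][observation], observations: 0 = quiet, 1 = alarm.
sensors = {
    "vibration": [[0.9, 0.1], [0.2, 0.8]],    # informative
    "temperature": [[0.6, 0.4], [0.5, 0.5]],  # nearly uninformative
}

# Outcome score of each option in each hidden state (made-up numbers).
options = {"keep running": [10, -10], "schedule repair": [-6, 4]}


def best_option(belief):
    return max(options, key=lambda name: expected_score(belief, options[name]))


# Active learning: read the sensor expected to reduce uncertainty most.
best_sensor = max(sensors, key=lambda n: expected_info_gain(prior, sensors[n]))

# Active inference: update the belief after that sensor reports an alarm.
posterior = update_belief(prior, sensors[best_sensor], observation=1)

# Active selection: compare predicted outcomes before and after the update.
choice_before = best_option(prior)
choice_after = best_option(posterior)
```

With these numbers the agent reads the vibration sensor, its belief in a fault rises from 0.5 to about 0.89, and the preferred option switches from "keep running" to "schedule repair". The entropy of the belief is also a direct measure of how uncertain the agent remains.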

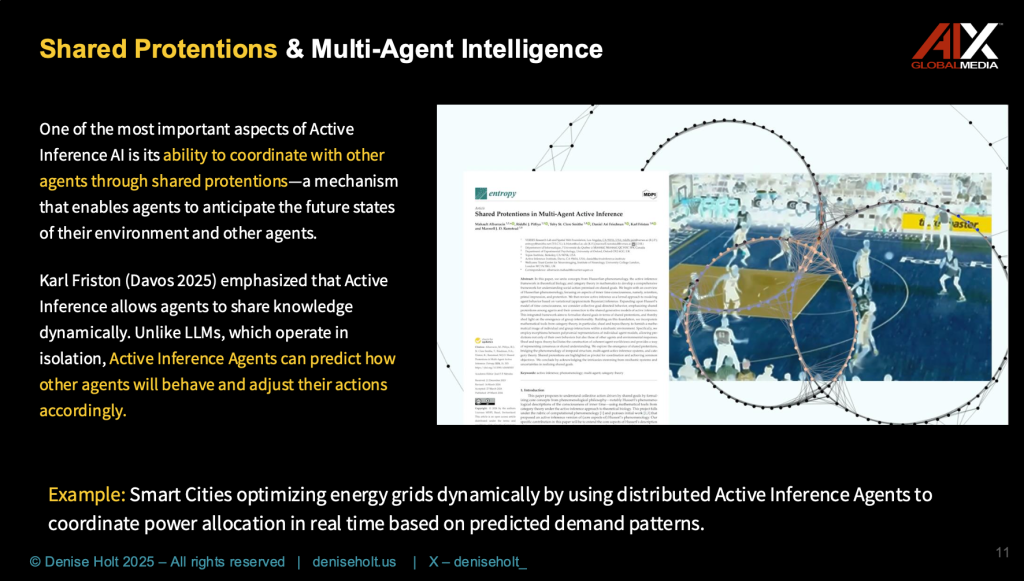

Another aspect is the notion of “shared protentions” — a mechanism that enables agents to anticipate future states of their environment and other agents. This allows for sophisticated multi-agent coordination and real-time adaptation to changing conditions. This multi-agent system operates through a continuous cycle of prediction, action, and learning, maintaining “Bayesian beliefs” about the world that are constantly refined through experience.

Let’s use a basketball team as a prime example of “shared protentions” in action. Through many hours of practice, each player’s individual actions become more guided by their expectations of what their teammates will do. And these expectations are not just one-way — they’re reciprocal and self-reinforcing. The teammate anticipates a pass because they know the point guard is likely to make it, and the point guard makes the pass because they anticipate the teammate being ready for it.

Over time, this interplay of expectations and actions leads to a highly coordinated style of play, where the team seems to move and think as one unit. And importantly, this coordination emerges not from any one player’s explicit instructions, but from the implicit alignment of each player’s understanding and anticipations.
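
The reciprocal expectations in the basketball example can be imitated with a very small simulation. The sketch below uses fictitious play (each player counts what the teammate has done and acts on the most likely lane) as a toy stand-in; it is not the formal shared-protentions model, and the `Player` class, lane numbering, and starting counts are hypothetical.

```python
class Player:
    """Keeps pseudo-counts of which lane the teammate has used so far."""

    def __init__(self, name, teammate_counts):
        self.name = name
        self.teammate_counts = list(teammate_counts)  # [lane 0, lane 1]

    def anticipate(self):
        """Lane the teammate is expected to use (ties go to lane 0)."""
        return self.teammate_counts.index(max(self.teammate_counts))

    def act(self):
        """Play into the lane where the teammate is expected to be."""
        return self.anticipate()

    def observe(self, teammate_lane):
        """Refine the expectation with what the teammate actually did."""
        self.teammate_counts[teammate_lane] += 1


def play(guard, forward, rounds):
    history = []
    for _ in range(rounds):
        g, f = guard.act(), forward.act()
        guard.observe(f)
        forward.observe(g)
        history.append((g, f))
    return history


# The two players start with mismatched expectations of each other.
guard = Player("guard", [3, 1])
forward = Player("forward", [1, 2])

history = play(guard, forward, rounds=6)
coordinated = [g == f for g, f in history]
```

In this run the first play is mismatched, and every play after it is coordinated. No player issues an instruction; alignment comes only from each one updating what it expects of the other.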

The efficiency gains through Active Inference AI are staggering. While traditional AI systems require enormous computational resources and energy consumption, Active Inference systems can operate with a fraction of the resources — often requiring no more power than a standard light bulb. This efficiency comes from these Agents’ ability to process information in a way that minimizes computational overhead by constantly reducing uncertainty through prediction — a principle that nature itself uses to maintain order and adaptability.

The Genius Platform: A New Paradigm in Artificial Intelligence

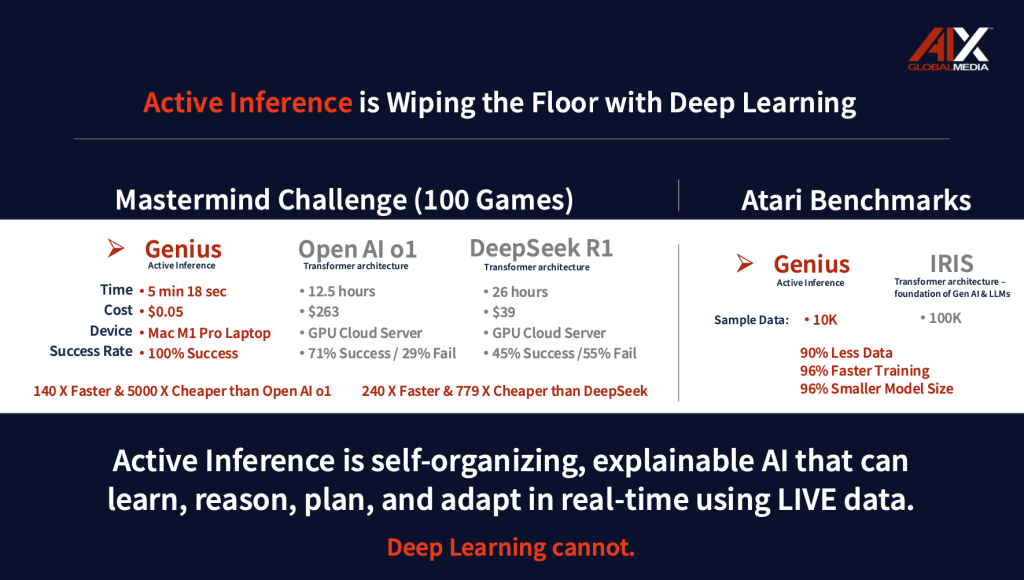

VERSES AI’s Genius platform represents the first commercial implementation of Active Inference AI, and its performance in recent benchmark tests has sent shockwaves through the AI community.

The Atari 100K challenge is a standard benchmark for AI performance. Genius raised the stakes by establishing a new benchmark, the Atari 10K challenge, achieving superior results with just one-tenth of the training data required by current transformer architectures. Active Inference is proving to be a force to be reckoned with.

Consider the Mastermind Challenge, a code-breaking game that requires “reasoning” to work out the hidden pattern. Genius solved 100 games on a laptop in mere minutes, compared to the many hours it took both OpenAI o1 and DeepSeek R1 on GPU cloud servers. Genius also had a 100% success rate, while OpenAI and DeepSeek had 29% and 55% fail rates, respectively. (Also notable: DeepSeek, although a significantly cheaper AI model than OpenAI o1, took considerably longer to solve these challenges and ended with nearly double the fail rate of OpenAI.)

Active Inference through the Genius platform proved to be:

140 times faster and 5,000 times cheaper than OpenAI o1

240 times faster and 779 times cheaper than DeepSeek R1
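
For readers unfamiliar with Mastermind, the sketch below shows the game's feedback rule and a simple solver that keeps only the codes still consistent with every clue. It illustrates the task, not how Genius or the LLMs approached it; the classic setup of 4 pegs and 6 colours and the names `feedback` and `solve` are assumptions, since the article does not state the benchmark's exact rules.

```python
from collections import Counter
from itertools import product


def feedback(secret, guess):
    """Return (exact, partial): pegs with the right colour in the right
    position, and pegs with a right colour in the wrong position."""
    exact = sum(s == g for s, g in zip(secret, guess))
    overlap = sum((Counter(secret) & Counter(guess)).values())
    return exact, overlap - exact


def solve(secret, colours=6, pegs=4):
    """Guess the first code still consistent with all feedback so far."""
    candidates = list(product(range(colours), repeat=pegs))
    guesses = []
    while True:
        guess = candidates[0]
        guesses.append(guess)
        result = feedback(secret, guess)
        if result[0] == pegs:
            return guesses
        # Keep only codes that would have produced the same feedback.
        candidates = [c for c in candidates if feedback(c, guess) == result]


guesses = solve((2, 5, 0, 3))
```

Each clue removes every code that contradicts it, including the guess just made, so the candidate set shrinks until only the secret remains. This elimination of hypotheses is a crude version of reducing uncertainty with each observation.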

Genius’ success isn’t limited to gaming environments. In real-world applications, Genius has demonstrated unprecedented capabilities in autonomous decision-making, risk assessment, and multi-agent coordination. Its ability to operate efficiently at the edge — making complex decisions without requiring massive cloud computing resources — represents a fundamental shift in how AI can be deployed and utilized.

What makes Genius truly revolutionary is its ability to learn and adapt continuously through interaction with its environment. Unlike traditional AI systems that require complete retraining to incorporate new information, Genius Agents can update their understanding in real-time, making them ideal for dynamic environments where conditions constantly change.

The Spatial Web: Enabling Distributed Intelligence

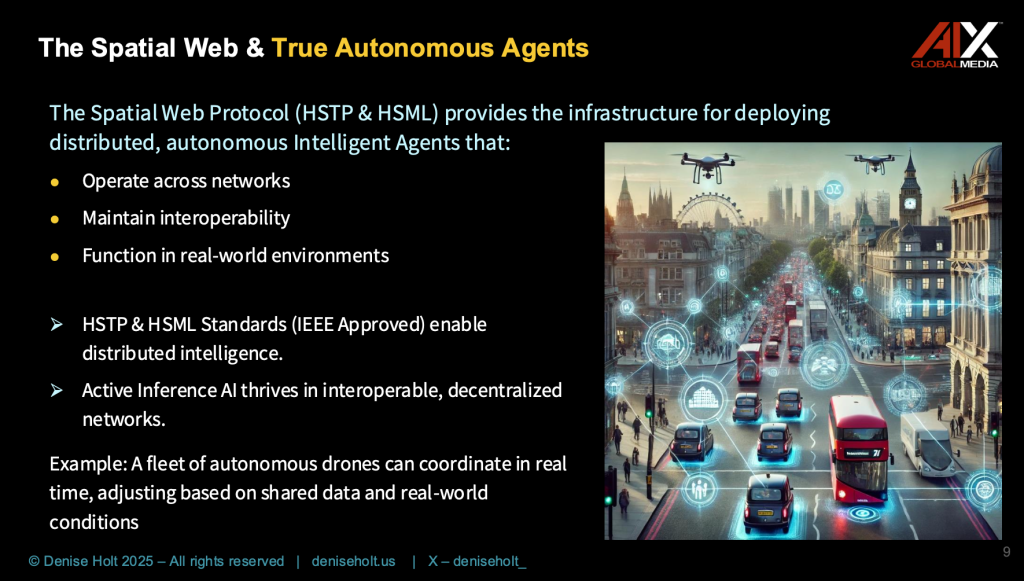

The Spatial Web Protocol (HSTP & HSML) provides the crucial infrastructure that enables Active Inference Agents to operate in a coordinated, decentralized manner. As an open public standard, this framework represents the next evolution of our global internet, moving beyond simple information sharing to enable intelligent coordination and action in both digital and physical spaces.

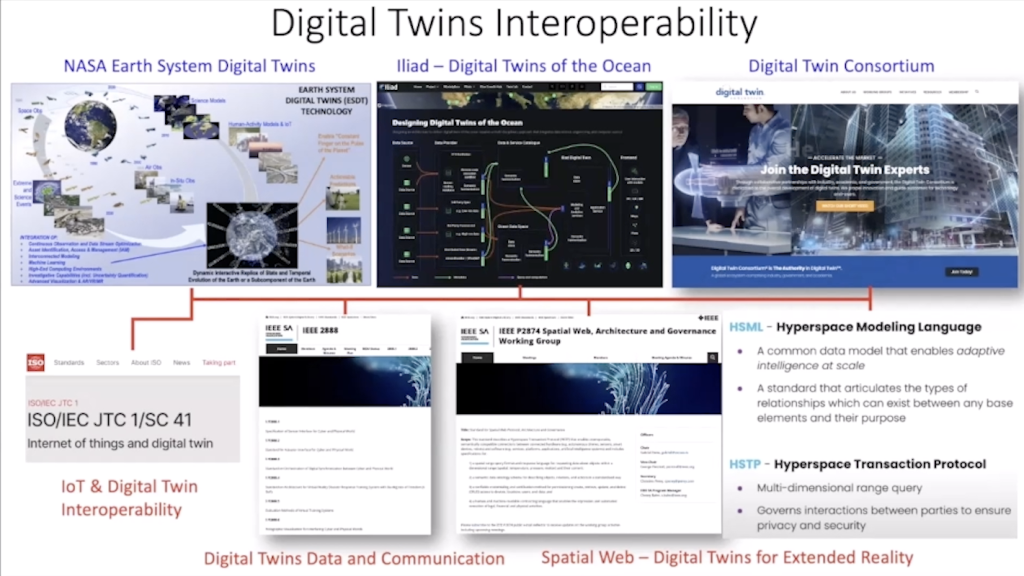

The protocol’s architecture supports the Universal Domain Graph (UDG), a Global Commons and public utility that takes the form of a growing network of interconnected knowledge graphs and capabilities. By mapping dynamic relationships between entities across multiple dimensions and scales, it enables Agents to share information and coordinate actions efficiently. This is analogous to how the World Wide Web grew and evolved, but with a crucial difference: the UDG is designed to support not just information sharing, but unprecedented collaboration among Agents (both human and synthetic) and intelligent, autonomous action across a secured environment. Because it recognizes the changes and movements of entities in this dynamic network, the UDG enables real-world modeling, simulation, and digital twins.

The implications of this infrastructure are profound. Just as the World Wide Web enabled global information sharing, the Spatial Web enables global intelligence sharing while uniting all emerging technologies across secured networks with a common language for interoperability: HSML (Hyperspace Modeling Language). This creates a network effect where each Agent’s learning and experiences can benefit the entire system (within closed or open networks and systems), leading to exponential improvements in collective intelligence over time.

Active Inference and the Spatial Web: An Open-Source Developer's Dream

Active Inference AI, when integrated with the Spatial Web’s public open standards HSTP (Hyperspace Transaction Protocol) and HSML (Hyperspace Modeling Language), creates an unparalleled ecosystem for open-source developers. This combination provides a decentralized, interoperable framework where Intelligent Agents can communicate, collaborate, and learn from real-time data across diverse systems and environments. HSTP ensures secure, seamless transactions between Agents, while HSML offers a flexible, universal modeling language for creating dynamic, context-aware applications. Developers gain access to an adaptive AI system that minimizes computational costs, scales effortlessly, and supports real-time decision-making, making it ideal for building innovative solutions across all industries. This open, scalable architecture fosters creativity, accelerates development, and ensures that cutting-edge AI remains accessible and sustainable for all.

Real-World Applications and Transformative Impact

The practical applications of Active Inference AI extend far beyond what’s possible with traditional AI systems. In healthcare, Active Inference Agents can monitor patient conditions in real-time, predicting potential complications before they occur and coordinating responses across multiple healthcare providers. Hospitals can dynamically manage resources and staffing for real-time emergency response. In supply chain management, they can anticipate disruptions and automatically adjust logistics networks to maintain efficient operations.

Consider airport operations:

Multi-scale Active Inference Agents can revolutionize airport and airline operations by providing real-time, adaptive solutions across various domains. These Agents optimize passenger flow through airports by dynamically adjusting to crowd levels and operational constraints, enhancing traveler experience and operational efficiency. In fleet and crew management, they ensure timely scheduling and resource allocation, mitigating the impact of delays and disruptions. Active Inference systems also enhance maintenance by predicting technical issues before they arise, ensuring safety and minimizing downtime. Additionally, they bolster security through continuous threat assessment and dynamically allocate resources like gates, fuel, and ground support based on real-time conditions. With their ability to learn continuously and adapt instantly, Active Inference Agents can drive superior efficiency, safety, and cost-effectiveness in the highly dynamic environment of airport and airline operations.

Smart Cities represent another realm where Active Inference Agents present significant opportunities. Agents can coordinate traffic flow, energy usage, and emergency services in real-time, creating more efficient and responsive urban environments. These systems can operate autonomously while maintaining transparent decision-making processes that can be audited and understood by human operators.

Perhaps most importantly, Active Inference AI can achieve these results while maintaining explainability and quantifying uncertainty: it is possible to understand and verify why the system makes specific decisions and how certain it is of its outputs. Even more impressive, it continues to seek out the information that best reduces its uncertainty about the task at hand. This addresses one of the major concerns with current AI systems, where decisions often emerge from inscrutable “black box” processes that can’t be properly audited or understood.

The Future of Work and Society with Active Inference Agents

As we move toward a future where Active Inference Agents become increasingly prevalent, the nature of work and human-machine interaction will undergo fundamental changes. These Agents won’t simply automate existing tasks; they’ll enable entirely new ways of organizing and optimizing complex systems.

Comparison Table: LLM Automation vs. Active Inference AI:

Consider a typical workday with Active Inference Agents: Your personal Agent coordinates your schedule based on real-time conditions, adapting to changes as they occur. In meetings, Agent-assisted “decision support systems” provide real-time analysis and suggestions based on causal understanding of complex business relationships. Throughout your organization, networks of Autonomous Agents coordinate to optimize operations, predict potential issues, and maintain efficient resource utilization.

This Transformation Will Require New Skills and Understanding from Workers at All Levels.

The ability to effectively collaborate with and manage Autonomous Agents will become as fundamental as computer literacy is today. This is why educational initiatives like my new Executive Program with an Advanced Certification on Active Inference AI and Spatial Web Technologies are crucial for preparing professionals for this massive shift in technology.

In my recent podcast interview, “Active Inference AI, the AI Paradigm Shift Dr. David Bray, PhD Wants You to Know About,” he urges companies to start preparing for this future:

“Talking to friends that are CIOs or CTOs… they’re already underwater and they’re getting bombarded on all sides by hype, by fear, by FUD: fear, uncertainty and doubt. So I would say… take the time to get smart where you can. I know that’s hard… take the time, that would be worthwhile.”

“And I would say simultaneously, enterprises should be making the case to their boards and say we need to spend 5%, maybe up to 10% to start placing some strategic bets. And part of that should be not just placing bets on the existing generative AI approaches because… we would be remiss to not think there’s going to be other approaches, so include some Active Inference in it.” — Dr. David Bray, PhD

A Dedicated Space to Learn About Active Inference

A few weeks ago, I quietly launched my new platform, Learning Lab Central, as a dedicated space to cut out the noise of deep learning and focus on the growth of these new emerging technologies. It’s a place where people from all over the globe can gather to learn, collaborate, share, and evolve within this next era of AI and computing.

Educational Imperatives: Preparing for the Active Inference Future

The transition to Active Inference AI represents one of the most significant technological shifts in human history. Understanding and preparing for this change is crucial for individuals and organizations who want to remain competitive in the next 3–5 years. I spent much of 2024 creating a new Executive Program that offers a comprehensive curriculum around these technologies, designed to build this essential knowledge base.

These first seven courses cover everything from the fundamental principles of Active Inference and Spatial Web Technologies to practical applications in business and technology. Participants learn not just the technical aspects of these systems, but also the strategic implications for their organizations and industries.

This program is a gateway to understanding a fundamentally new paradigm in computing and Autonomous Intelligence. As we move toward a future where Active Inference Agents become increasingly central to business operations and daily life, this knowledge will become an invaluable tool for driving organizational success.

The Path Forward

The emergence of Active Inference AI and the new Spatial Web Protocol (HSTP and HSML) represents more than just another step in our technological evolution; it’s a fundamental reimagining of how Autonomous Intelligence can operate and interact within the world. As traditional AI approaches reach their practical limits, Active Inference offers a path forward that is proving to be more efficient, more capable, and more aligned with how natural intelligence actually works.

For organizations and individuals, the message is clear: the future of AI lies not in bigger language models or more powerful pattern matching systems, but in Intelligent Agents that can truly understand, reason about, plan, and act in the world.

The time to prepare for this transformation is now, while the technology is still in its early stages of deployment.

The question isn’t whether this transition will happen, but how well prepared we’ll be to take advantage of it.

Through proper education and understanding of these technologies, we can ensure that this transformation benefits society as a whole, creating a future where Autonomous Intelligence serves as a true partner in human progress and development.

A new standard for organizational leadership is emerging. This is your chance to stand out as a leader in your field.

For more information about my educational opportunities and new Executive Program on Active Inference AI and Spatial Web Technologies, visit E-mmersion Publishing and Learning Lab Central.
