The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

In a panel hosted by the Financial Times at the World Economic Forum in Davos, Switzerland, two of the biggest names in artificial intelligence — Dr. Karl Friston of VERSES AI, and Dr. Yann LeCun of Meta — discussed their aligned, yet contrasting visions for the future of AI, with Friston proclaiming, “Deep Learning is Rubbish.”

Describing them as “royalty” and “rockstars,” and stressing that calling these two guests leading authorities in their respective fields was “very, very much an understatement,” panel moderator Olivier Oullier, Founder and CEO of Inclusive Brains, posed the first question. He asked both Friston and LeCun for their own personal definitions of artificial intelligence, prompting a humorous exchange in which Friston quipped,

“I should profess, I’m not an expert in ‘artificial’ intelligence. He’s the expert in ‘artificial’ intelligence (motioning to LeCun). I’m an expert in ‘natural’ intelligence.” – Dr. Karl Friston

This elicited a resounding “Boom! And so it starts,” from Oullier.

Although they differ in their approaches, their mutual respect and admiration are evident, and they agree on several key points:

Both experts agree that today’s AI is profoundly lacking the key elements found in intelligent systems in terms of perception, memory, reasoning, and generating actions.

Where they differ is in how to get there:

— Dr. LeCun thinks Deep Learning, an engineering approach to AI that is more than 30 years old, is here to stay. Staunchly defending this approach, he insists that we don’t know how to do learning or train systems any other way.

— Dr. Friston and the VERSES AI team affirm they have developed a new and better alternative, rooted in Friston’s Free Energy Principle, challenging the reliance on Deep Learning.

Dr. Karl Friston, Chief Scientist at VERSES AI and professor at University College London, is best known for his discovery of the “Free Energy Principle,” a theory of brain function focused on efficient information processing. Specifically, it describes how neurons and all biological systems learn, adapt, and self-evolve in nature, explaining how biological agents self-organize by modeling their world and acting on it in order to make the right decisions. LeCun, Meta’s VP and Chief AI Scientist and a professor at NYU, is considered a pioneer of Deep Learning, having developed techniques like convolutional neural networks that now power many AI systems.

While the two share a goal of developing more human-like intelligence in machines, their approaches differ significantly. Friston describes natural intelligence as “sentient behavior of the right kind,” and emphasizes the importance of understanding natural intelligence first. He frames it in terms of gathering evidence to create internal models of the world, and from this develops an entirely new concept of autonomous Intelligent Agents whose learning is based on adaptable, self-optimizing, and self-evolving shared and distributed intelligence. LeCun, on the other hand, focuses directly on developing artificial systems, applying concepts from neuroscience only loosely, while relying on the training processes and backpropagation of Deep Learning neural nets.

Both experts agreed on the necessity of developing AI systems capable of more complex inference and planning, underscoring the importance of energy efficient learning and data usage.

At the beginning of this discussion, Yann dismissed the idea of Artificial General Intelligence as a ridiculous notion because human intelligence is very specialized: “We are not general machines.”

It’s important to note that human intelligence is also not one big brain or monolithic machine. Human intelligence is composed of multitudes of individual brains interacting with each other and their environments, with a broader consensus of global knowledge emerging from these interactions.

The framework that Friston is working on with VERSES places special focus on specialized intelligences in a distributed form of communication, which mimics the way knowledge grows among humans; a framework that not only preserves specialized intelligence, but depends on it in order to continue growing the collective and evolving knowledge over time. This kind of knowledge is self-organizing and self-evolving. It doesn’t require gimmicked training to make it work. It works in the same way as all natural intelligent systems. And it does not require Deep Learning backpropagation.

This divergence in their approach stems partly from their backgrounds — Friston as a neuroscientist seeking to unravel brain computations, LeCun as a computer scientist engineering pattern recognition algorithms. And it leads to substantively different ideas about current AI limitations and how to move the field forward. While they both share a similar vision for what’s needed for the future trajectory of AI innovation, Dr. LeCun repeatedly insisted throughout this dialog that the framework needed has yet to be discovered, and therefore the reliance on Deep Learning engineering methods would remain for years to come.

Friston, on the other hand, has developed a science-based system with VERSES that generates and maintains real-world models, establishing knowledge graphs of real-time, real-world data to inform and train individual specialized Intelligent Agents, with no big-data requirement for training.

When asked how these differing approaches can coexist, Dr. Friston’s response was,

“So, I think Deep Learning is rubbish, largely because it doesn’t have the calculus of the machinery or the physics to have a calculus of beliefs, a calculus of inference, of planning, of situational awareness. And crucially, if you don’t have a maths or an engineering toolkit that can quantify what you know and what you don’t know, namely, encode the uncertainty that underwrites your sense making, you’ll never know how to act in terms of getting the right kind of information…what’s going to increase my situational awareness so I can now make the right decisions through inference?” – Dr. Karl Friston

To which Dr. Yann LeCun countered by saying,

“I’m going to heavily disagree with Karl by agreeing with him.” – Dr. Yann LeCun

Yann LeCun acknowledged the mechanics of Active Inference and the Free Energy Principle, stating, “I completely agree with that.” He then continued, explaining, “This is not at all incompatible with Deep Learning. In fact, it requires Deep Learning, because the question is, how do you learn those systems, how do you train those systems?” The implication was that Deep Learning is the only way.

While LeCun seems to be stuck on the question of how else you can train AI systems, Friston’s team seems to be focused on, “How do you get systems to learn on their own?”

Karl Friston discussed the essence of agency and being an agent, emphasizing the significance of planning, framing it as ‘inference’, or ‘inference in the service of planning’. According to Friston, the key aspect of being an agent lies in having a world model or generative model that can predict the consequences of one’s actions. This predictive capability, he argues, is definitive of an agent.
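To make the idea of planning as inference more concrete, here is a minimal sketch in Python. It is not VERSES's or Friston's implementation. The two-room world, the transition probabilities, and the preference distribution are all hypothetical values chosen for illustration. The agent uses its generative model to predict the consequences of each action, then picks the action whose predicted outcome diverges least from the outcomes it prefers.

```python
import math

# Hypothetical toy world: the agent is in one of two rooms.
STATES = ["cold_room", "warm_room"]
ACTIONS = ["stay", "move"]

# Assumed generative model: P(next_state | state, action).
TRANSITION = {
    ("cold_room", "stay"): {"cold_room": 0.9, "warm_room": 0.1},
    ("cold_room", "move"): {"cold_room": 0.2, "warm_room": 0.8},
    ("warm_room", "stay"): {"cold_room": 0.1, "warm_room": 0.9},
    ("warm_room", "move"): {"cold_room": 0.8, "warm_room": 0.2},
}

# Assumed preferences: the outcomes the agent expects to find itself in.
PREFERRED = {"cold_room": 0.05, "warm_room": 0.95}


def predict(belief, action):
    """Predict the distribution over next states for a given action."""
    predicted = {s: 0.0 for s in STATES}
    for state, p_state in belief.items():
        for nxt, p_next in TRANSITION[(state, action)].items():
            predicted[nxt] += p_state * p_next
    return predicted


def risk(predicted):
    """KL divergence from predicted to preferred outcomes (lower is better)."""
    return sum(
        p * math.log(p / PREFERRED[s]) for s, p in predicted.items() if p > 0
    )


def plan(belief):
    """Choose the action whose predicted consequences best match preferences."""
    return min(ACTIONS, key=lambda a: risk(predict(belief, a)))


print(plan({"cold_room": 1.0, "warm_room": 0.0}))  # prints "move"
print(plan({"cold_room": 0.0, "warm_room": 1.0}))  # prints "stay"
```

The point of the sketch is only that the choice of action falls out of the model's predictions: change the transition table or the preferences and the plan changes with it.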

Friston extended this concept to various entities, whether artificial or natural, such as artifacts, people, particles, or viruses. The defining characteristic of an agent, in his view, is the possession of the right kind of generative model or understanding of its own agency. He suggested that the ability to plan and predict outcomes based on actions is a fundamental quality that distinguishes agents.

The discussion then shifted to the transition from artificial intelligence to intelligent agents, emphasizing that true agency is realized through interaction. Friston highlighted the importance of distributed aspects in understanding agency, pointing to examples like neural populations as ‘little agents’ making decisions on whom to listen to and whom to communicate with. He describes this coordination and interaction among neural populations as a game, illustrating the pursuit of their own agency.

LeCun shared more insights regarding the challenges Deep Learning systems face in learning useful representations of the world, stating that it’s “just too complicated, so you have to use non-generative architectures,” and concluding:

“So the future of AI is Non-Generative.” – Yann LeCun

Yann also called reinforcement learning a huge failure. He stated that the future of AI is not generative because you cannot establish any truth or understanding of the way the world works through computer vision or LLMs. LLMs possess none of the attributes that make up intelligent systems, and computer vision does not teach AI about the world through image replication.

Yann agreed with Karl that a system that is able to learn based on real world models is absolutely required for the future of artificial intelligence.

While Sam Altman spent time during his week in Davos advocating for nuclear power in order to meet the monstrous scaling energy demands of Deep Learning Generative AI, Karl Friston and Yann LeCun both discussed the critical need for efficient processing in AI systems in contrast to current systems, like Large Language Models (LLMs).

Karl explained that Active Inference applies the Free Energy Principle, a principle of least action that facilitates the most efficient exchange of beliefs, actions, and sensory information. Because this is the very nature of how biological systems think and learn, it is the most energy-efficient form of intelligence processing.
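For readers who want to see what "free energy" refers to, the sketch below computes the standard variational free energy for a tiny discrete model. This is the textbook quantity, not code from VERSES, and the rain and wet-grass probabilities are made-up numbers for illustration. It shows that free energy is lowest when the agent's belief equals the exact Bayesian posterior, at which point it equals the surprise of the observation.

```python
import math

# Assumed toy generative model: two hidden states, one observed outcome.
PRIOR = {"rain": 0.3, "dry": 0.7}        # p(s)
LIKELIHOOD = {"rain": 0.9, "dry": 0.2}   # p(o = "wet grass" | s)


def free_energy(q):
    """F(q) = sum over s of q(s) * [ln q(s) - ln p(o, s)]."""
    total = 0.0
    for s, q_s in q.items():
        if q_s > 0:
            joint = PRIOR[s] * LIKELIHOOD[s]
            total += q_s * (math.log(q_s) - math.log(joint))
    return total


# Exact Bayesian posterior and the surprise of the observation, -ln p(o).
evidence = sum(PRIOR[s] * LIKELIHOOD[s] for s in PRIOR)
posterior = {s: PRIOR[s] * LIKELIHOOD[s] / evidence for s in PRIOR}
surprise = -math.log(evidence)

# At the exact posterior, free energy equals surprise; any other belief
# (here, simply keeping the prior) gives a higher value.
print(round(free_energy(posterior), 4), round(surprise, 4))
print(round(free_energy(PRIOR), 4))
```

In this framing, updating beliefs is a matter of pushing this one number down, which is why the principle is often described in terms of minimization.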

Yann pointed out that we marvel at how much training data LLMs can take in and process; it would take a human an unreal amount of time, multiple lifetimes, just to read it all. Yet he also noted that a four-year-old, in its waking hours, has taken in 450 times as much data as the largest LLM in the world, and that even a four-month-old infant “has seen more information than the biggest LLM” within the space of only a few months.

The child is learning its immediate environment and the way the world works through observation, and it does this with extreme energy efficiency.

Karl added to this example, stating that the learning is actually taking place through the baby pinging its environment, acting on its environment, and testing what it thinks it knows, in order to gather more information, either as confirming evidence or as evidence that it needs to update its understanding of what it believes to be true.

This is a very important distinction. We learn by observation AND by action.
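The difference between observing and acting can be illustrated with a small sketch. This is an illustration under stated assumptions, not the method used by Friston or VERSES. The scenario, the action names, and the probabilities are hypothetical. A child is unsure whether an object can be moved. Merely looking at it yields no new information, while pushing it is expected to reduce uncertainty, so an agent that values information chooses to push.

```python
import math

# Hypothetical question: can this object be moved?
BELIEF = {"movable": 0.5, "fixed": 0.5}

# Assumed observation models: P(observation | hidden state) for each action.
OBSERVATION_MODEL = {
    "look": {"movable": {"still": 1.0, "moved": 0.0},
             "fixed":   {"still": 1.0, "moved": 0.0}},
    "push": {"movable": {"still": 0.1, "moved": 0.9},
             "fixed":   {"still": 1.0, "moved": 0.0}},
}


def entropy(dist):
    """Uncertainty of a belief, in bits."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def update(belief, action, observation):
    """Bayes rule: belief after taking an action and seeing the result."""
    unnorm = {
        s: belief[s] * OBSERVATION_MODEL[action][s][observation] for s in belief
    }
    total = sum(unnorm.values())
    return {s: v / total for s, v in unnorm.items()}


def expected_info_gain(belief, action):
    """Expected reduction in uncertainty (bits) from taking the action."""
    gain = 0.0
    for obs in ("still", "moved"):
        p_obs = sum(belief[s] * OBSERVATION_MODEL[action][s][obs] for s in belief)
        if p_obs > 0:
            posterior = update(belief, action, obs)
            gain += p_obs * (entropy(belief) - entropy(posterior))
    return gain


best = max(OBSERVATION_MODEL, key=lambda a: expected_info_gain(BELIEF, a))
print(best)  # prints "push"
```

Looking leaves the belief at 50/50, while a single push that moves the object settles the question entirely.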

A world model is not just a matter of observing, seeing, and establishing an understanding of what is where and what is what. It means understanding the entire evolving contextual ecosystem of the who, what, when, where, why, and how of everything at all times, being able to measure these aspects and track their changes over time, AND THEN using this ever-changing data to understand the who, what, when, where, how, and why of these changes. It also means understanding what those changes mean for the data, for the interrelationships of the agents involved with the data, and for the possible circumstances and consequences of acting on the data. This is a constant problem of perceiving and adapting to the evolution of situations and circumstances of agents and domains over time.

“While you were talking about the example of young children, how do they make sense of the world? Well, they do it by pinging and querying and moving. Can I move this? Can I move that? They learn about the move. They learn to perceive by acting. So this is active sensing, active inference, active vision. So that agency, we come right back to the planning as inference, and the agency that underwrites the sense-making that view inherits.” – Karl Friston

Consider how this process of understanding and adaptation plays out in humans. As infants, we are not just observing the world; we are simultaneously testing it, to confirm what we’ve previously accepted as truth, or to update our understanding (our model of what we believe or accept as reality). We ping the world and take action on our environment to gain new sensory awareness as part of this testing process. It is why babies are constantly putting things in their mouths. It is why children are always touching things. And it’s why we all can understand the humor in the restraint that it takes in not pushing a button just to see what it does.

Curiosity is an innate human trait because it is how we learn and develop our truth and understanding of the world around us. It’s how we develop our understanding of our place in the world, our strengths and weaknesses, our abilities, our power, our sense of self. It’s also how we learn to navigate the world, interacting and cooperating with other agents within various groups, networks, and communities to gain new experiences and grow our knowledge through them. It’s why we crave new experiences. Yet each of us is individual in our human experience, with the diversity of all of our individual understandings and specialized intelligences contributing to the growth of the knowledge base of the whole collective of global human knowledge.

The neurological process of how we perceive, learn, and evolve our understanding and knowledge, and contribute that knowledge to the collective, is called Active Inference. Though this process has been proposed in theory as an ideal methodology for artificial intelligence, it was thought to be too complicated to be undertaken seriously… until now.

Which is probably why Dr. Yann LeCun said, “We don’t know how to do this today with AI systems. That’s the problem we need to solve over the next few years.”

Yet, that’s exactly what VERSES AI and Dr. Karl Friston have assembled — a framework that enables this natural process of learning and scaling collective intelligence through individual Intelligent Agents, mimicking the way biological intelligence works.

“Indeed, that’s why I was attracted to VERSES as a sort of taking that ideology, that notion of distributed cognition that could be facilitated by the right kind of message passing protocols and the right kind of standards that are just there to enable this kind of exchange and construction of some kind of universe, but a universe in which we’re all resolving our uncertainty about each other.” — Dr. Karl Friston

The key to the system that VERSES and Friston have created is that the “message passing” framework of the Spatial Web Protocol enables not only a networked communication language, but also a networked knowledge graph of the world. This graph is capable of programming context, parameters, attributes, and details about all things in any space, and the conditions, permissions, and interrelationships between those things and spaces. It forms a knowledge graph of evidence on which the Active Inference Intelligent Agents base their understanding of the world, each from its own frame of reference within the network. This provides an environment for intelligent agents with specialized knowledge to thrive, grow, and interact within a nested global ecosystem of knowledge. And because each agent learns within its own environment in the network, according to a principle of least action, it becomes the most efficient intelligence network, sharing knowledge throughout the network in a way that minimizes complexity and, as a result, energy use.

Active Inference alone can’t get us there because it requires scalable execution for learning. It requires scalable world modeling, which is an incredibly difficult task.

VERSES has solved the scalability issue without compromising the energy efficiency of Active Inference. The scaling of knowledge is accomplished through a process of decentralized crowd contribution using an advanced internet protocol and language for spatial computing powered by AI.

Current AI systems consist of a handful of singular giant machines that are fed an enormous amount of data to train each of them, resulting in untenable energy requirements for training, maintaining, and querying them, culminating in an unsustainable scaling system.

The Spatial Web Protocol enables the existence of a network of intelligence where human agents all over the world convey their own insights and specialized knowledge by training up individual Active Inference Intelligent Agents within a digital twin network of the world. Each person or entity developing these Intelligent Agents is individually responsible for the resources of energy and specialized data to train each Intelligent Agent from its own nested ecosystem of the network.

You can think of these Intelligent Agents (IAs) as a new kind of software program. People all over the world will be building all kinds of IAs that operate over this open and decentralized network, distributing the energy requirement to devices and hardware that are already in use. This natural distribution of processing and energy-draw reduces the need for the enormous processing power of individual chips that run giant monolithic machines. The processing power requirement of training and queries becomes distributed across a network of resources, making the system scalable in tandem with demand.

So what you have is a decentralized information system: our current internet as we know it, only with expanded capabilities to move from the world wide web of static web pages into a dynamic web where all things in all spaces are now identifiable, programmable, and able to track their ever-changing conditions and attributes over time.

With HSML (Hyperspace Modeling Language), the context that governs objects (spaces, people, places, and things) can be embedded into their digital representation, specifying things like ownership, identity, credentials, permissions, parameters of existence, and changing states and conditions, even things like temperature and pressure. We can map circumstances and attributes of interrelationships, and all this information can be interpreted and understood in real-time, as it changes over time, by these Active Inference Intelligent Agents. These Agents are also simultaneously receiving LIVE real-world input from sensor data throughout the network, from IoT, cameras, devices, and more.
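As a rough picture of what embedding context into a digital representation could look like, here is a small Python sketch. It is not HSML syntax and does not follow any published schema. The class name, field names, and the freezer example are all hypothetical, chosen only to mirror the kinds of attributes listed above (identity, ownership, permissions, and changing states such as temperature).

```python
from dataclasses import dataclass, field


@dataclass
class EntityContext:
    """Hypothetical record of the context attached to one thing or space."""
    identity: str
    owner: str
    permissions: dict = field(default_factory=dict)  # agent id -> allowed actions
    state: dict = field(default_factory=dict)        # e.g. temperature, pressure
    history: list = field(default_factory=list)      # (timestamp, key, value)

    def record_reading(self, timestamp, key, value):
        """Store the latest sensor reading and keep a log of the change."""
        self.state[key] = value
        self.history.append((timestamp, key, value))

    def is_allowed(self, agent_id, action):
        """Check whether an agent holds permission for an action."""
        return action in self.permissions.get(agent_id, set())


freezer = EntityContext(
    identity="freezer-17",
    owner="warehouse-a",
    permissions={"agent-1": {"read"}, "agent-2": {"read", "adjust"}},
)
freezer.record_reading(0, "temperature_c", -18.0)
freezer.record_reading(60, "temperature_c", -15.5)

print(freezer.state["temperature_c"])            # prints -15.5
print(freezer.is_allowed("agent-1", "adjust"))   # prints False
```

An agent reading such a record would see both the current state and how it got there, along with what it is and is not permitted to do about it.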

These Intelligent Agents are all nested within the common network, and they are all in a constant state of learning and interacting with their environments, and with each other, from their own frame of reference within the network. This allows them to learn in the same way that humans learn: perceiving the world as it truly is through sensory input (IoT and sensors), while measuring it against their ever-evolving internal model of what they know to be true from their own frame of reference (the real and continuously updated context programmed into the environmental world through HSML). They can operate within the action-perception feedback loop of Active Inference, developing causal reasoning for refining in-the-moment states of awareness. These Agents are making decisions in real-time, with their real-time ‘training’ based on highly relevant, actual data about what is happening now in their corner of the world or area of expertise, not on massive historical datasets containing hordes of irrelevant data to sift through in an effort to process input requests.

Friston explains it this way, “Intelligence is sense making that can be characterized as gathering evidence for my world models, and my generative models, that make me fit for purpose interacting with other agents.”

Dr. Karl Friston and Dr. Yann LeCun, despite their differing viewpoints, converge on the need for more advanced AI systems that can more closely mimic human learning and decision-making processes. Their dialogue at Davos highlighted the evolving nature of AI research and the ongoing quest to bridge the gap between artificial and natural intelligence.

Friston’s work with VERSES AI offers us a sustainable, natural path to scalable autonomous intelligent systems. Their framework provides the mechanics for processing LIVE data, a self-evidencing system of establishing and developing ever-changing knowledge graphs of the world, and interpreting that data, sharing knowledge between Agents, and acting on it. This is the natural path to discovering how knowledge grows — not as a gimmick attempting to replicate intelligence, but actual real intelligence.

Explore the future of Active Inference AI with me, and gain insights into this revolutionary approach!

Ready to learn more? Join my Substack Channel for the Ultimate Resource Guide for Active Inference AI, and to gain access to my exclusive Learning Lab LIVE sessions.

Visit my blog at https://deniseholt.us/, and Subscribe to my Spatial Web AI Podcast on YouTube, Spotify, and more. Subscribe to my newsletter on LinkedIn.

Enjoy all of my new articles on Substack. They will always be included with your free subscription.

AND if you choose to opt in for a paid subscription, you will have access to a wealth of extra Active Inference AI news and education materials, and become part of the global conversation of this cutting edge, safe, and trustworthy new approach to AI.

All content for Spatial Web AI is independently created by me, Denise Holt.

Empower me to continue producing the content you love, as we expand our shared knowledge together. Become part of this movement, and join my Substack Community for early and behind the scenes access to the most cutting edge AI news and information.

The convergence of two groundbreaking technologies is reshaping how we think about AI, automation, and intelligent systems, affecting every industry...

On 29 May 2025, the IEEE Standards Board cast the final vote that transformed P2874 into the official IEEE 2874–2025...

Explore the future of agent communication protocols like MCP, ACP, A2A, and ANP in the age of the Spatial Web...

Fusing neurons with silicon chips might sound like science fiction, but for Cortical Labs, it represents what's possible in AI...

Ten years ago, on March 31, 2015, I interviewed Katryna Dow, CEO and Founder of Meeco, to discuss an emerging...

We stand at a critical juncture in AI that few truly understand. LLMs can automate tasks but lack true agency....

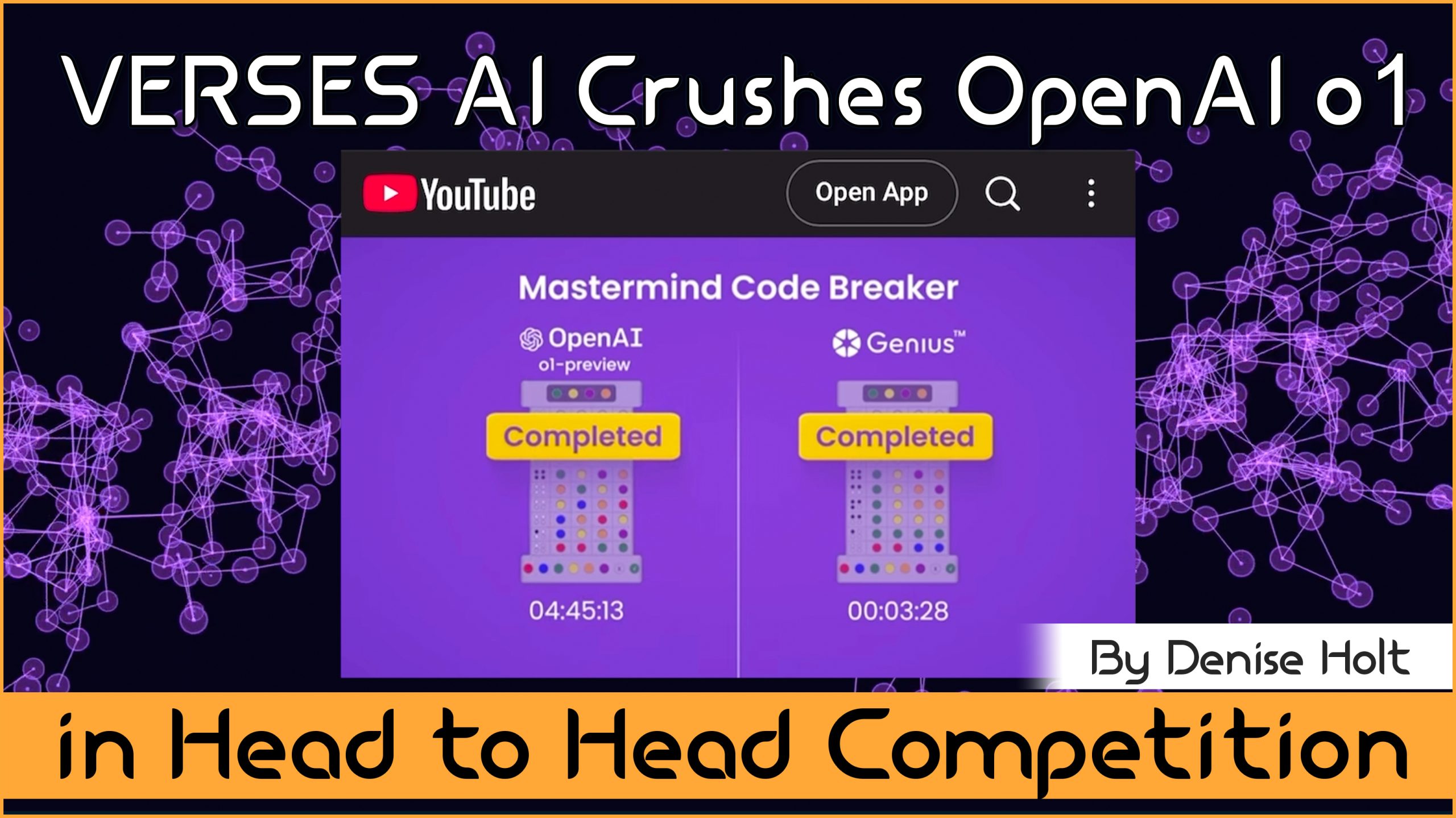

VERSES AI 's New Genius™ Platform Delivers Far More Performance than Open AI's Most Advanced Model at a Fraction of...

Go behind the scenes of Genius —with probabilistic models, explainable and self-organizing AI, able to reason, plan and adapt under...

By understanding and adopting Active Inference AI, enterprises can overcome the limitations of deep learning models, unlocking smarter, more responsive,...