AXIOM and VBGS: A New Era of Adaptive Intelligence

- By Denise Holt

- August 25, 2025

AI is entering a new paradigm, and the rules are changing.

For the past decade, deep learning (DL) and reinforcement learning (RL) have defined what we thought AI could be. They have dominated headlines, delivering systems trained on vast amounts of data, such as ChatGPT, AlphaGo, and autonomous vehicles and robots. But these models have hard limits. Beneath the excitement, their deficiencies have become increasingly clear: they are not trustworthy, and they are not sustainable. They are data-hungry, costly, and brittle in real-world environments. They replicate patterns, but they cannot truly reason, adapt, or operate in the face of uncertainty, the vague unknowns that are very much a part of our everyday life and decision-making.

Now, a profound breakthrough has emerged. VERSES AI’s newly released Active Inference AI model, AXIOM, and the complementary belief-mapping method known as Variational Bayes Gaussian Splatting (VBGS) represent a decisive leap beyond the status quo. These are not just minor improvements or clever tricks. They represent an entirely new foundation for intelligence: one that is adaptive, uncertainty-aware, and capable of learning in real time.

What we are seeing are the first scalable implementations of Active Inference, the theory of intelligence rooted in the Free Energy Principle of world-renowned neuroscientist Dr. Karl Friston. Together, AXIOM and VBGS bring the principles of Active Inference into practice, offering a clear path toward agents that can perceive, predict, plan, and continuously refine their understanding of the world in real time, whether inside a robot, across an enterprise, or throughout a global network connected by the Spatial Web.

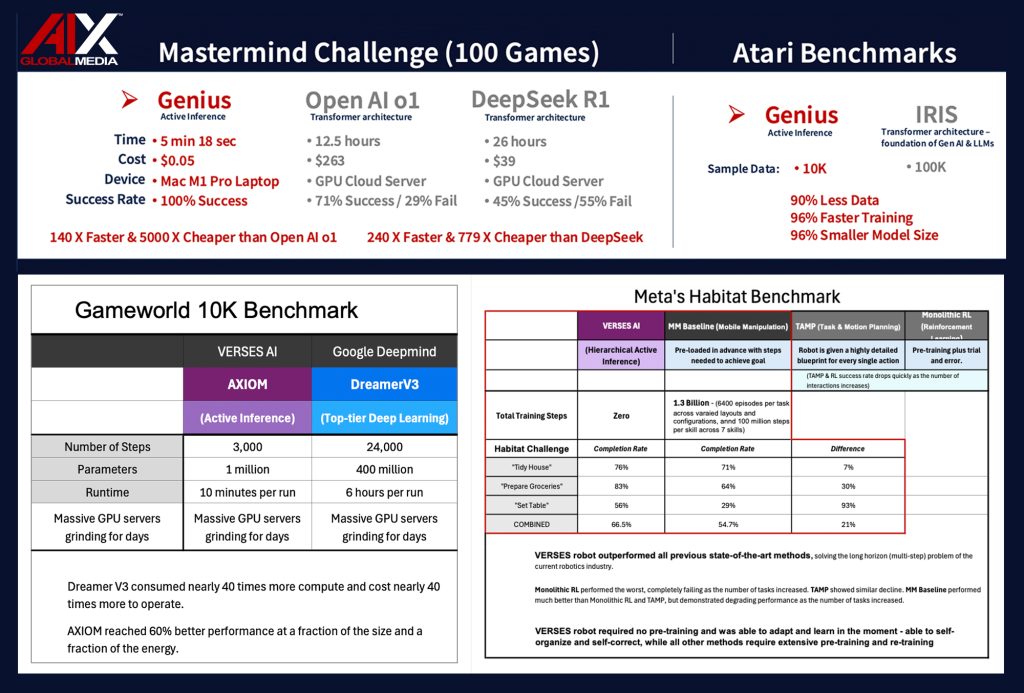

This is not hype. Over the past year, VERSES’ research team, led by Friston, their Chief Scientist, has quietly and consistently outperformed deep learning and reinforcement learning baselines across every benchmark they’ve entered. Whether in game-world environments like Atari or in robotics tasks requiring perception, planning, and adaptation, Active Inference has crushed traditional methods.

This record underscores the magnitude of the shift we are witnessing: we are not dealing with theory, but with a tested and validated alternative to the dominant AI trajectory.

What we are witnessing is a complete paradigm shift in AI.

Understanding these breakthroughs is not optional. For business leaders, developers, and policymakers alike, AXIOM and VBGS represent the future of AI — one that is distributed, adaptive, and ready to reshape industries and global networks through Active Inference and the Spatial Web.

The End of the Deep Learning Illusion

For more than a decade, deep learning has captivated the world with its ability to generate language, recognize images, and master complex games. But these models are not intelligent in any human sense. They require enormous amounts of training data, struggle to generalize outside of narrow tasks, and consume staggering amounts of energy. They operate as if they are certain about their output, whether it is right or wrong. The model can’t tell the difference, and neither can the user working with it.

Reinforcement learning, while effective in specific benchmarks, suffers from similar inefficiencies — demanding millions of trials to learn behaviors that humans or animals master in just a few attempts.

In contrast, biological intelligence thrives in uncertainty. We don’t need millions of repetitions to learn; we infer patterns from limited experience, remember and aggregate our experiences for future reference, update our “model of understanding” on the fly, and plan actions that not only achieve goals but also teach us more about the world. This is the essence of Active Inference. Together, AXIOM and VBGS are the first systems in the world to demonstrate how this theory can be implemented at scale.

What is AXIOM?

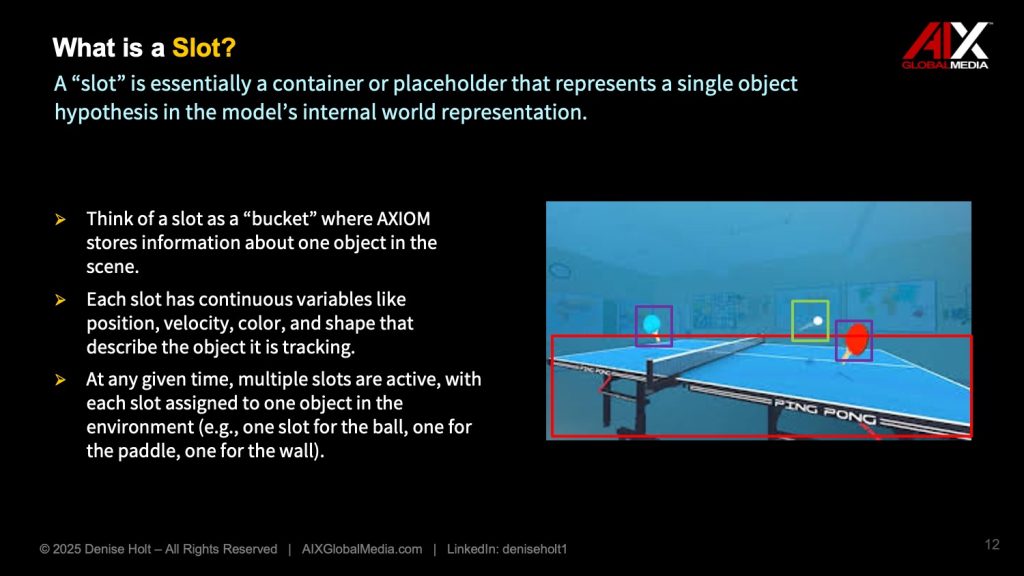

Available since June 2025 through an open-source Academic license, AXIOM (Active eXpanding Inference with Object-centric Models) is an Active Inference framework built by VERSES AI that produces Intelligent Agents capable of perceiving, learning, planning, and acting in real time. Unlike deep learning models that see the world as an endless stream of pixels or tokens, AXIOM organizes its understanding around objects and their interactions. It breaks the world into “slots” — placeholders that represent individual objects — and continuously updates its beliefs about the objects’ identity, motion, and relationships.

Wait… What Exactly is a “Slot”?

A central concept in AXIOM, you can think of a slot as a “container” in the model’s mind for one object. Each slot holds what AXIOM currently believes about that object: its identity (what it is), its state (where it is and how it’s moving), and its relationships (how it interacts with neighbors).

As AXIOM parses a scene, it assigns pixels to slots.

Note to reader: A “scene” simply means the current snapshot of the world that the agent is observing. It could be visual data (like a view from a robot’s camera), a single frame from a game screen, sensor data from a warehouse aisle, or any structured input describing what’s around the agent. AXIOM breaks this snapshot down into meaningful parts and assigns them to slots, with each slot representing one object.

In a game, one slot may represent a ball, another a paddle, another a wall. For an autonomous vehicle, different slots could represent vehicles, pedestrians, bicycles, and so on. Over time, AXIOM updates each slot as new evidence comes in, much like folders on your computer that collect information about specific projects. This slot-based reasoning allows AXIOM to move beyond pattern recognition and operate at the level of entities and interactions, which is far more interpretable and adaptable.
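To make the “container” idea concrete, here is a minimal Python sketch of what a slot might hold. The class name `Slot`, its fields, and the simple blending update are illustrative assumptions, not AXIOM’s actual data structures; AXIOM keeps full probability distributions over these quantities rather than single numbers.

```python
from dataclasses import dataclass, field


@dataclass
class Slot:
    """Hypothetical container for one object's beliefs (illustrative only)."""
    identity: str                      # what the object is, e.g. "ball"
    position: tuple[float, float]      # where it is
    velocity: tuple[float, float]      # how it is moving
    neighbors: set[str] = field(default_factory=set)  # slots it interacts with

    def update(self, observed_position, dt=1.0, weight=0.5):
        """Blend a new observation into the current belief.

        `weight` is a stand-in for how much the new evidence is trusted;
        a real Bayesian update would derive it from the uncertainties.
        """
        old_x, old_y = self.position
        obs_x, obs_y = observed_position
        new_x = old_x + weight * (obs_x - old_x)
        new_y = old_y + weight * (obs_y - old_y)
        self.velocity = ((new_x - old_x) / dt, (new_y - old_y) / dt)
        self.position = (new_x, new_y)


# One slot per object in a simple game scene
scene = {
    "ball": Slot("ball", position=(0.0, 0.0), velocity=(0.0, 0.0)),
    "paddle": Slot("paddle", position=(5.0, -4.0), velocity=(0.0, 0.0)),
}
scene["ball"].neighbors.add("paddle")
scene["ball"].update(observed_position=(2.0, 1.0))
print(scene["ball"].position, scene["ball"].velocity)  # (1.0, 0.5) (1.0, 0.5)
```

Each new frame only touches the slots it provides evidence for, which is what lets the “folder” for each object accumulate information over time.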

Four Core Modules — How Slots Work in AXIOM

AXIOM has four main building blocks that work together:

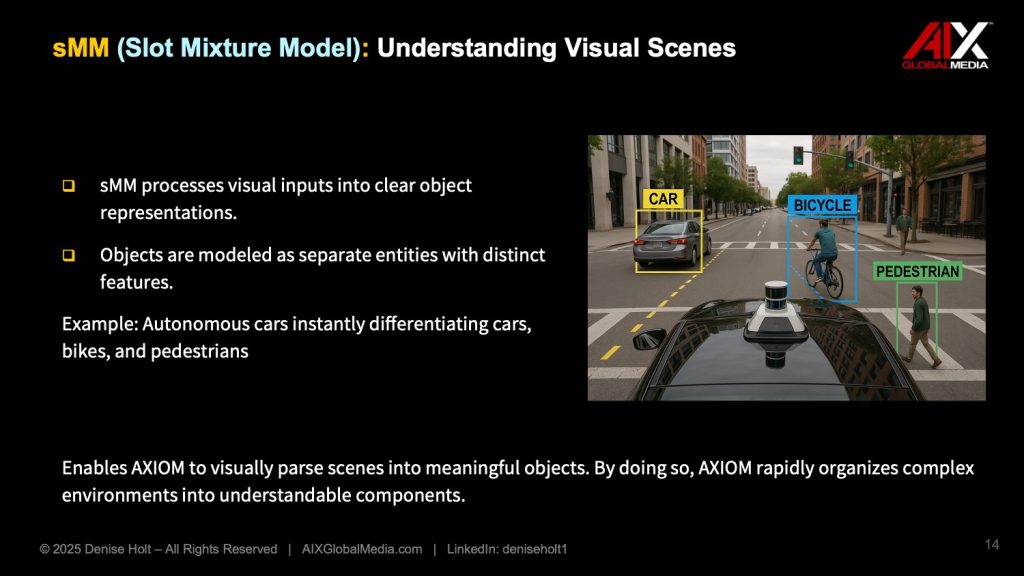

- sMM (Slot Mixture Model): This is the scene perception module, and it decides how pixels in the input image are assigned to different slots. For instance, which pixels belong to the “ball” slot vs. the “paddle” slot.

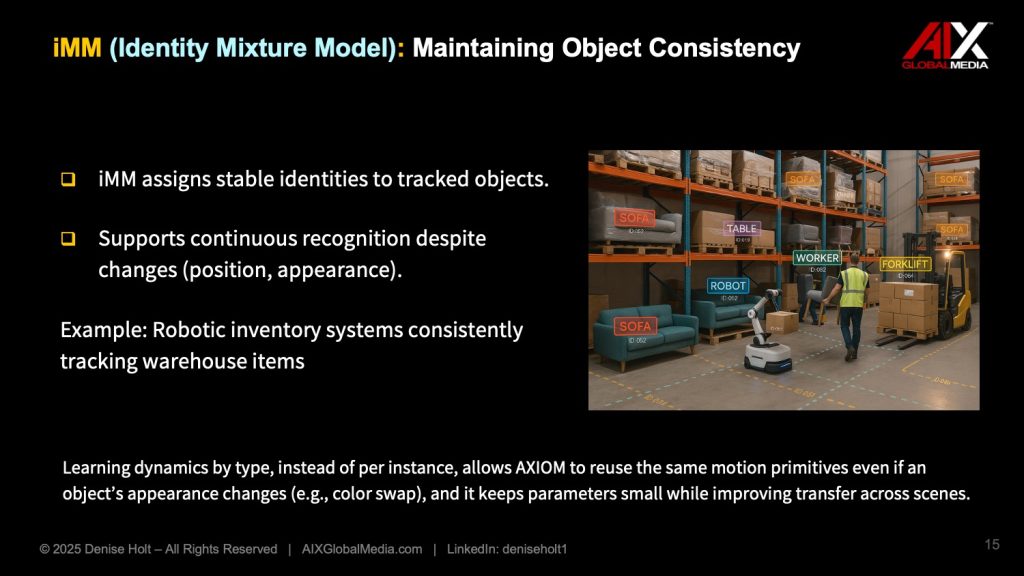

- iMM (Identity Mixture Model): Assigns an object type identity (like “ball” or “block”) to the slot, so the system knows what kind of object it is, allowing knowledge to be shared across similar entities.

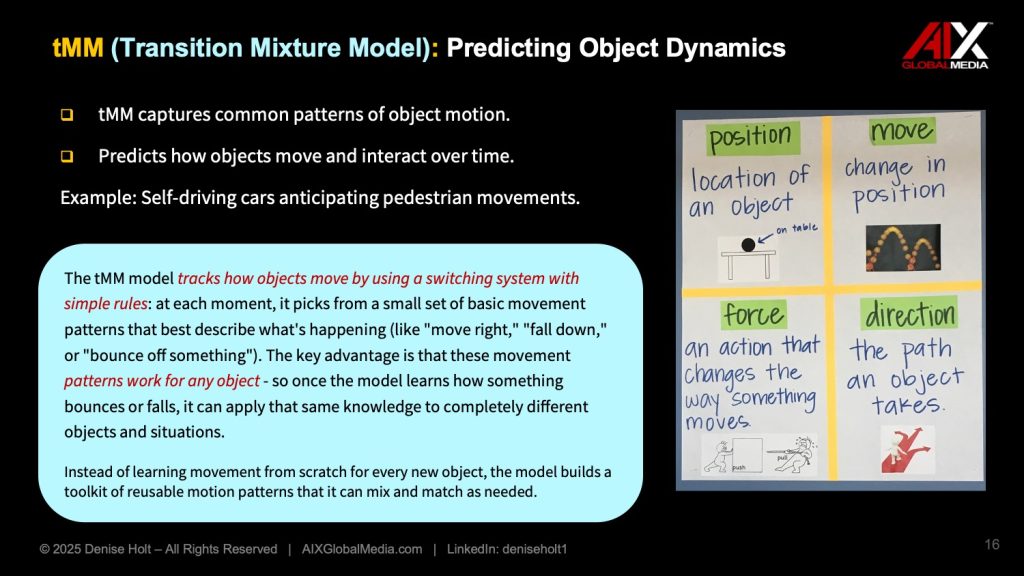

- tMM (Transition Mixture Model): Predicts how that slot’s object will move (its motion pattern).

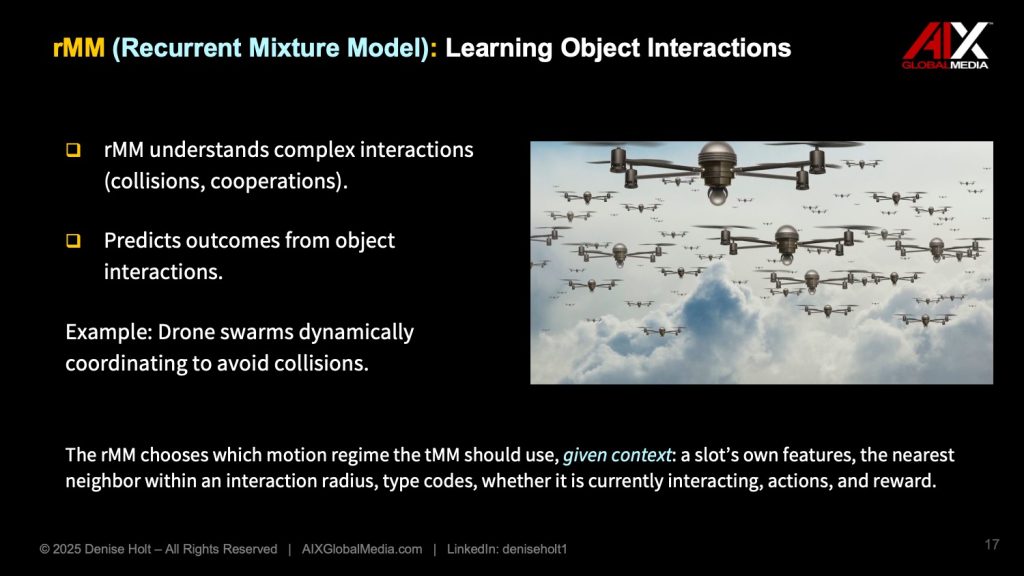

- rMM (Recurrent Mixture Model): Decides which motion pattern and recurrent interaction should apply to the slot at the next moment (what will the object do next?) based on context and interactions.
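The sMM’s job of deciding which pixels belong to which slot can be illustrated with a standard Gaussian mixture “responsibility” computation. This sketch assumes each slot is summarized by a mean feature vector (2D position plus RGB color), a single isotropic variance, and a mixing weight; those features and the isotropic simplification are assumptions for illustration, not AXIOM’s exact sMM.

```python
import math


def log_gaussian(x, mean, var):
    """Log density of an isotropic Gaussian over a feature vector."""
    d = len(x)
    sq = sum((xi - mi) ** 2 for xi, mi in zip(x, mean))
    return -0.5 * (d * math.log(2 * math.pi * var) + sq / var)


def assign_pixels(pixels, slots):
    """Return, for each pixel, the probability of belonging to each slot.

    pixels: list of feature vectors (x, y, r, g, b)
    slots:  dict name -> (mean feature vector, variance, mixing weight)
    """
    assignments = []
    for p in pixels:
        # Unnormalized log posterior: log mixing weight + log likelihood
        logs = {name: math.log(w) + log_gaussian(p, mean, var)
                for name, (mean, var, w) in slots.items()}
        top = max(logs.values())  # subtract the max for numerical stability
        unnorm = {name: math.exp(v - top) for name, v in logs.items()}
        total = sum(unnorm.values())
        assignments.append({name: v / total for name, v in unnorm.items()})
    return assignments


slots = {
    "ball":   ((2.0, 2.0, 1.0, 1.0, 1.0), 1.0, 0.5),  # white, near (2, 2)
    "paddle": ((8.0, 0.0, 0.2, 0.2, 1.0), 1.0, 0.5),  # blue, near (8, 0)
}
pixels = [(2.1, 1.9, 1.0, 1.0, 1.0), (7.8, 0.2, 0.2, 0.2, 0.9)]
for p, a in zip(pixels, assign_pixels(pixels, slots)):
    print(p[:2], max(a, key=a.get))
```

The assignments are soft probabilities rather than hard labels, so a pixel on the boundary between two objects can stay partly uncertain until more evidence arrives.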

This modular design enables structural intelligence: an ever-evolving picture of the world. AXIOM grows its internal model (adding new components to its understanding) when it encounters something new, and merges components when redundancies appear, streamlining its learned knowledge. It does not assume the world is fixed; it adapts its complexity to match the environment.

If it sees something new, it creates a new category. If two categories turn out to be the same, it merges them.

This “grow-and-merge” loop lets it generalize from single events, like learning the effect of a collision once, then reusing that knowledge broadly.
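The grow-and-merge loop can be sketched with a toy one-dimensional category learner. The thresholds `new_threshold` and `merge_distance` and the use of simple running means are assumptions chosen for illustration. AXIOM’s real criteria are probabilistic (the AXIOM work describes Bayesian model reduction for merging), and this toy replaces them with fixed distance thresholds.

```python
class GrowMergeModel:
    """Toy 1D category learner that grows and merges clusters (illustrative)."""

    def __init__(self, new_threshold=1.0, merge_distance=1.0):
        self.new_threshold = new_threshold    # how far counts as "something new"
        self.merge_distance = merge_distance  # how close counts as "the same"
        self.means = []   # one running mean per category
        self.counts = []  # observations assigned to each category

    def observe(self, x):
        if self.means:
            # Find the closest existing category
            i = min(range(len(self.means)), key=lambda k: abs(self.means[k] - x))
            if abs(self.means[i] - x) <= self.new_threshold:
                # Familiar: refine that category's running mean
                self.counts[i] += 1
                self.means[i] += (x - self.means[i]) / self.counts[i]
                return i
        # Unfamiliar: grow a new category from a single event
        self.means.append(x)
        self.counts.append(1)
        return len(self.means) - 1

    def merge(self):
        """Repeatedly merge any two categories whose means are close."""
        merged = True
        while merged:
            merged = False
            for i in range(len(self.means)):
                for j in range(i + 1, len(self.means)):
                    if abs(self.means[i] - self.means[j]) <= self.merge_distance:
                        n = self.counts[i] + self.counts[j]
                        self.means[i] = (self.means[i] * self.counts[i]
                                         + self.means[j] * self.counts[j]) / n
                        self.counts[i] = n
                        del self.means[j], self.counts[j]
                        merged = True
                        break
                if merged:
                    break


model = GrowMergeModel()
for x in [0.0, 1.5, 0.9, 0.5]:
    model.observe(x)
print(len(model.means))  # → 2
model.merge()
print(model.means, model.counts)
```

Two categories are created early, then drift together as evidence accumulates, and the merge step collapses them into one.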

Variational Inference: Learning on the Fly

AXIOM’s breakthrough efficiency comes from its use of variational inference. Instead of trying to calculate the exact probability of every hidden variable (which is computationally intractable for models like this), AXIOM builds a simplified approximation and continuously updates it as new evidence arrives.

Think of it like a detective forming a working theory about a case. Each new clue updates the theory, sometimes confirming earlier ideas, other times forcing a revision. AXIOM does the same with objects and dynamics: it maintains a best guess and improves it as more data streams in.

This approach allows AXIOM to learn in minutes, not months. It doesn’t need massive training sets or expensive retraining cycles. It learns and evolves as it goes, just as living systems do.
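The “working theory” idea can be made concrete with textbook coordinate-ascent variational inference (CAVI) on a simple model: data drawn from a Gaussian whose mean μ and precision τ are both unknown. The approximate posterior is factorized as q(μ)q(τ), and each factor is updated in turn using the other’s current estimate, following the standard worked example in Bishop’s *Pattern Recognition and Machine Learning* (§10.1.3). This is a generic illustration of variational inference; the prior values and data are arbitrary, and it is not AXIOM’s own update code.

```python
def cavi_gaussian(data, mu0=0.0, lambda0=1.0, a0=1.0, b0=1.0, iters=50):
    """Mean-field VI for x_i ~ N(mu, 1/tau) with a Normal-Gamma prior.

    Returns the parameters of q(mu) = N(mu_n, 1/lambda_n)
    and q(tau) = Gamma(a_n, b_n).
    """
    n = len(data)
    xbar = sum(data) / n
    # These two quantities do not depend on the other factor: compute once
    mu_n = (lambda0 * mu0 + n * xbar) / (lambda0 + n)
    a_n = a0 + (n + 1) / 2
    b_n = b0
    e_tau = a0 / b0                       # initial guess for E[tau]
    lambda_n = (lambda0 + n) * e_tau
    for _ in range(iters):
        # Update q(tau) using the current q(mu); E[(x - mu)^2] includes Var[mu]
        var_mu = 1.0 / lambda_n
        sq = sum((x - mu_n) ** 2 + var_mu for x in data)
        sq += lambda0 * ((mu_n - mu0) ** 2 + var_mu)
        b_n = b0 + 0.5 * sq
        e_tau = a_n / b_n
        # Update q(mu) using the new estimate of the precision
        lambda_n = (lambda0 + n) * e_tau
    return mu_n, lambda_n, a_n, b_n


data = [4.8, 5.1, 5.3, 4.9, 5.0, 5.2]
mu_n, lambda_n, a_n, b_n = cavi_gaussian(data, lambda0=0.01)
print(f"q(mu): mean {mu_n:.3f}, sd {lambda_n ** -0.5:.3f}; E[tau] = {a_n / b_n:.2f}")
```

Feeding in more data raises `lambda_n`, so the belief about the mean tightens as evidence accumulates, which is the “working theory” getting sharper.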

How AXIOM Plans

Planning in AXIOM is not about maximizing reward alone. Instead, it minimizes expected free energy, a principle that balances two goals: achieving useful outcomes and reducing uncertainty (unknowns) about the world. This means AXIOM is both goal-directed and curious.

For example, a robot guided by AXIOM might decide to open a door, not only to exit a room, but also to learn what lies beyond – enriching its model for future decisions. This dual drive, to succeed now AND to improve understanding for later, is what makes Active Inference agents fundamentally more strategic than conventional AI.
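The balance between useful outcomes and reduced uncertainty is commonly written as expected free energy split into “risk” (how far predicted outcomes are from preferred ones) and “ambiguity” (how uncertain the observations are expected to be). The toy below scores two actions in the door example with that decomposition for discrete states and outcomes. The states, likelihoods, and preferences are invented for illustration, and AXIOM’s planner is considerably more elaborate than this one-step calculation.

```python
import math


def kl(p, q):
    """KL divergence D_KL(p || q) for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def expected_free_energy(q_states, likelihood, preferred):
    """One-step G = risk + ambiguity for a single action."""
    n_states = len(q_states)
    # Predicted outcomes: q(o) = sum_s p(o|s) q(s)
    q_obs = [sum(likelihood[s][o] * q_states[s] for s in range(n_states))
             for o in range(len(preferred))]
    risk = kl(q_obs, preferred)
    ambiguity = sum(q_states[s] * entropy(likelihood[s]) for s in range(n_states))
    return risk + ambiguity


# Hidden states: 0 = "still in the room", 1 = "in the hallway"
# Outcomes:      0 = "exit visible",      1 = "no exit visible"
likelihood = [
    [0.5, 0.5],  # in the room, observations tell us little
    [0.9, 0.1],  # in the hallway, observations are informative
]
preferred = [0.8, 0.2]  # the agent prefers to see an exit
actions = {
    "stay": [1.0, 0.0],       # predicted state distribution after each action
    "open_door": [0.0, 1.0],
}
scores = {a: expected_free_energy(q, likelihood, preferred) for a, q in actions.items()}
best = min(scores, key=scores.get)  # lower expected free energy wins
print(scores, best)
```

Here opening the door wins on both terms: its predicted outcomes sit closer to the preferences (lower risk), and what it reveals is more informative (lower ambiguity).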

From Gaussians to Variational Bayes Gaussian Splatting (VBGS)

To understand VBGS, it helps to start with the basics.

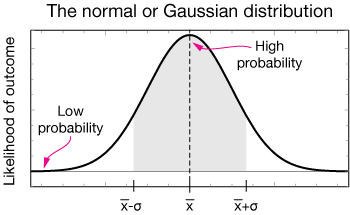

Gaussian Distributions

A Gaussian distribution (also called a normal distribution) is the bell curve we are all familiar with. Most measured values cluster around the average, with fewer appearing at the extremes. Human height is a classic example: most people are near the average, with far fewer extremely tall or short individuals.

Gaussians are powerful because they don’t just describe values — they also describe uncertainty. The width of the bell curve shows how confident we are about the estimate. A narrow curve means high certainty; a wide curve means more uncertainty.
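The link between width and confidence can be seen with Python’s standard `statistics.NormalDist`. The two height estimates below are hypothetical numbers; the point is that the same mean with a larger standard deviation gives a wider 95% interval and a lower peak.

```python
from statistics import NormalDist

# Two hypothetical estimates of the same quantity (height in cm)
confident = NormalDist(mu=170, sigma=2)    # narrow curve: high certainty
uncertain = NormalDist(mu=170, sigma=10)   # wide curve: more uncertainty

for name, dist in [("confident", confident), ("uncertain", uncertain)]:
    low, high = dist.inv_cdf(0.025), dist.inv_cdf(0.975)
    print(f"{name}: 95% of belief lies in [{low:.1f}, {high:.1f}] cm, "
          f"peak density {dist.pdf(dist.mean):.3f}")
```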

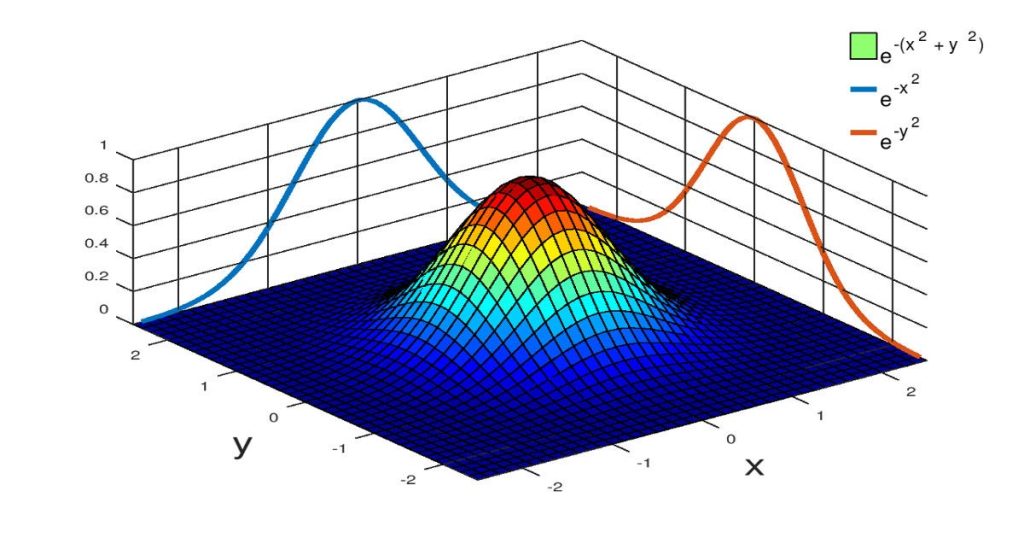

3D Gaussian Splatting

In 3D mapping, this concept becomes visual. Instead of describing objects with fixed geometric models, we represent surfaces as overlapping Gaussian “splats.” Each splat is a fuzzy blob that captures position, color, depth, and uncertainty. Thousands of splats layered together form a continuous 3D scene, much like painting with soft-focus dots that sharpen areas of the image as more evidence is added.

This method allows for smooth, efficient visual reconstructions of complex environments. And because each splat encodes uncertainty (the sharp versus blurry parts of the scene), the map itself knows where it is reliable and where it still needs more data.
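In Gaussian splatting, a pixel’s color is commonly computed by sorting the splats that cover it from front to back and alpha-compositing them, with each splat’s opacity scaled by its Gaussian falloff at that pixel. The sketch below assumes the splats have already been projected to 2D with axis-aligned spreads (real renderers use full 2D covariances and GPU rasterization), and the `Splat` fields are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    mean: tuple[float, float]          # projected center on the image plane
    std: tuple[float, float]           # spread along x and y (axis-aligned here)
    color: tuple[float, float, float]  # RGB in [0, 1]
    opacity: float                     # peak opacity in [0, 1]
    depth: float                       # distance from the camera


def render_pixel(px, py, splats):
    """Front-to-back alpha compositing of Gaussian splats at one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for sp in sorted(splats, key=lambda s: s.depth):
        dx = (px - sp.mean[0]) / sp.std[0]
        dy = (py - sp.mean[1]) / sp.std[1]
        alpha = sp.opacity * math.exp(-0.5 * (dx * dx + dy * dy))
        for c in range(3):
            color[c] += transmittance * alpha * sp.color[c]
        transmittance *= 1.0 - alpha
    return tuple(color)


front = Splat((0, 0), (1, 1), (1.0, 0.0, 0.0), opacity=0.9, depth=1.0)
back = Splat((0, 0), (1, 1), (0.0, 0.0, 1.0), opacity=0.9, depth=2.0)
rgb = render_pixel(0, 0, [back, front])
print(rgb)  # mostly red: the nearer splat absorbs most of the light
```

A splat with a wide spread contributes a faint, blurry smear over many pixels, while a tight one contributes a sharp dot, which is how the spread doubles as a visual cue for uncertainty.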

Variational Bayes Gaussian Splatting (VBGS)

VBGS takes this further by applying variational inference to the splats themselves. (Remember those “smart approximations” from our detective analogy?) Instead of building a static 3D map, VBGS updates the Gaussian distributions continuously as new observations arrive without the need for replay buffers or retraining. Like a detective, the map becomes a working theory — adjusting with each new clue, refining where confidence is high, and marking where uncertainty remains.

In simple terms, VBGS doesn’t just say, “Here’s what’s in the room.” It also maintains an unfolding and evolving understanding of, “Here’s what we don’t know yet — and here’s how sure we are about each part of the picture.” This ability to model uncertainty is vital for robots and agents as they explore and operate in unknown and evolving territory.
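One reason continuous updating can work without a replay buffer is that conjugate Bayesian updates depend on the data only through running sums (sufficient statistics). The sketch below updates the belief about a single splat’s 3D center under a simplifying assumption of known, isotropic observation noise, and checks that feeding two batches one after another gives the same belief as processing them together. It is a simplified stand-in: VBGS applies variational updates to full Gaussian mixtures with learned shapes and colors, and the class and parameter names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SplatBelief:
    """Gaussian belief over a splat's 3D center (per axis, known noise)."""
    mean: tuple[float, float, float] = (0.0, 0.0, 0.0)
    precision: float = 1e-2    # low prior precision: very unsure at first
    noise_var: float = 0.25    # assumed variance of each observed point

    def update(self, points):
        """Absorb a batch of 3D points; earlier points are never revisited."""
        n = len(points)
        sums = [sum(p[axis] for p in points) for axis in range(3)]
        new_precision = self.precision + n / self.noise_var
        self.mean = tuple(
            (self.precision * self.mean[axis] + sums[axis] / self.noise_var)
            / new_precision
            for axis in range(3)
        )
        self.precision = new_precision

    @property
    def variance(self):
        return 1.0 / self.precision


batch1 = [(1.0, 2.0, 0.5), (1.2, 1.8, 0.4)]
batch2 = [(0.9, 2.1, 0.6)]

streamed = SplatBelief()
streamed.update(batch1)
streamed.update(batch2)          # arrives later; batch1 is not stored

at_once = SplatBelief()
at_once.update(batch1 + batch2)  # all data processed together

print(streamed.mean, at_once.mean, streamed.variance)
```

Both beliefs end up identical, and the variance shrinks with every batch, so the map can report how sure it is about each region without keeping old observations around.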

While traditional mapping techniques attempt to build a static, fixed representation, VBGS uses a probabilistic approach. When combined with the goal-directed curiosity of AXIOM, this creates a live, uncertainty-aware 3D map that agents can use to navigate and plan safely, even in unfamiliar or ever-changing environments.

AXIOM + VBGS: Seeing and Thinking Together

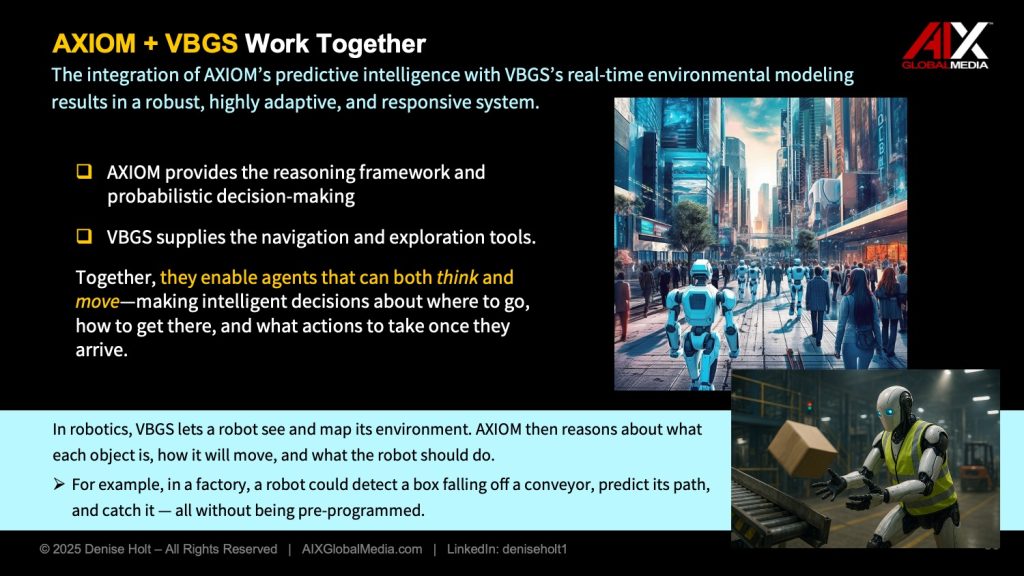

When combined, AXIOM and VBGS form a complete Active Inference loop:

- VBGS provides a live, probabilistic 3D scene with a flexible ability to process uncertainty built in.

- AXIOM reasons about objects, predicts outcomes, and plans actions.

- Together, they enable agents that can see, think, and adapt continuously.
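The division of labor in this loop can be sketched as a perceive, plan, act cycle. In this toy, a one-dimensional Gaussian belief stands in for VBGS’s map, and a simple uncertainty threshold stands in for AXIOM’s planner: the agent keeps looking while it is unsure and reaches once it is confident. The function name, threshold, and noise values are hypothetical.

```python
def perceive_plan_act(observations, prior_mean=0.0, prior_var=100.0,
                      noise_var=1.0, var_threshold=0.5):
    """Run a toy perception-action loop over a stream of noisy observations."""
    mean, var = prior_mean, prior_var
    log = []
    for obs in observations:
        # Perceive: Bayesian (Kalman-style) update of the Gaussian belief
        gain = var / (var + noise_var)
        mean += gain * (obs - mean)
        var *= 1.0 - gain
        # Plan: reduce uncertainty first (epistemic), then pursue the goal
        action = "look" if var > var_threshold else "reach"
        log.append((action, round(mean, 3), round(var, 3)))
        # Act: stop once the agent commits to reaching for the object
        if action == "reach":
            break
    return log


print(perceive_plan_act([2.1, 1.9, 2.0, 2.2]))
# → [('look', 2.079, 0.99), ('reach', 1.99, 0.498)]
```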

This integration is what makes AXIOM and VBGS such a profound breakthrough. They don’t just recognize and copy patterns; they construct, test, and refine models of the world as it unfolds moment to moment while they act within it.

VERSES' Robotics Breakthroughs

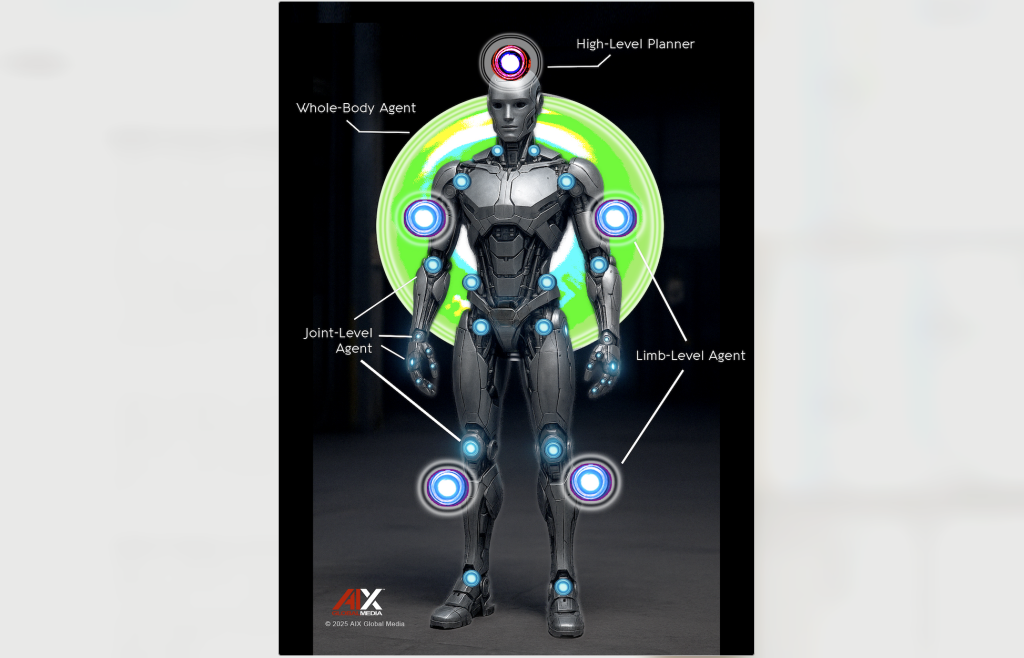

The power of this approach was demonstrated in VERSES’ recent breakthrough in robotics. Their research introduced a hierarchical Active Inference architecture in which multiple agents operate within a single robot body. This framework enables robots to adapt in real time, plan over long sequences, and recover from unexpected problems, all without retraining. Unlike current robots, which operate with one monolithic controller, this new blueprint operates as a network of collective intelligent agents, each powered by Active Inference. Every joint, every limb, and every movement controller is itself an Active Inference agent with its own local understanding of the world, and all of them coordinate under a higher-level Active Inference model: the robot.
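The shape of this hierarchy, many local agents coordinated by a higher-level one, can be sketched in a few lines. Here each hypothetical `JointAgent` only corrects its own error toward a setpoint, and a hypothetical `BodyAgent` turns a goal into setpoints for its joints. Simple proportional error correction stands in for each agent’s local Active Inference; this is not the architecture from VERSES’ paper, only an outline of how responsibilities are split.

```python
from dataclasses import dataclass


@dataclass
class JointAgent:
    name: str
    angle: float = 0.0  # current joint angle (radians)
    gain: float = 0.5   # how strongly the joint corrects its error per step

    def step(self, setpoint):
        """Reduce the local error between setpoint and current angle."""
        error = setpoint - self.angle
        self.angle += self.gain * error
        return abs(error)


class BodyAgent:
    """Higher-level agent that coordinates joints toward a shared goal."""

    def __init__(self, joints):
        self.joints = {j.name: j for j in joints}

    def plan(self, goal):
        # Hypothetical mapping: the goal is already a dict of joint setpoints;
        # joints without a setpoint hold their current angle.
        return {name: goal.get(name, joint.angle)
                for name, joint in self.joints.items()}

    def act(self, goal, steps=20):
        setpoints = self.plan(goal)
        errors = [0.0]
        for _ in range(steps):
            errors = [self.joints[n].step(sp) for n, sp in setpoints.items()]
        return max(errors)  # largest error seen on the final step


robot = BodyAgent([JointAgent("shoulder"), JointAgent("elbow"), JointAgent("wrist")])
remaining = robot.act({"shoulder": 0.8, "elbow": -0.4, "wrist": 0.1})
print({n: round(j.angle, 3) for n, j in robot.joints.items()}, remaining)
```

Each joint only needs to know its own setpoint and state, which is what lets the body-level agent replan without retraining the joints.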

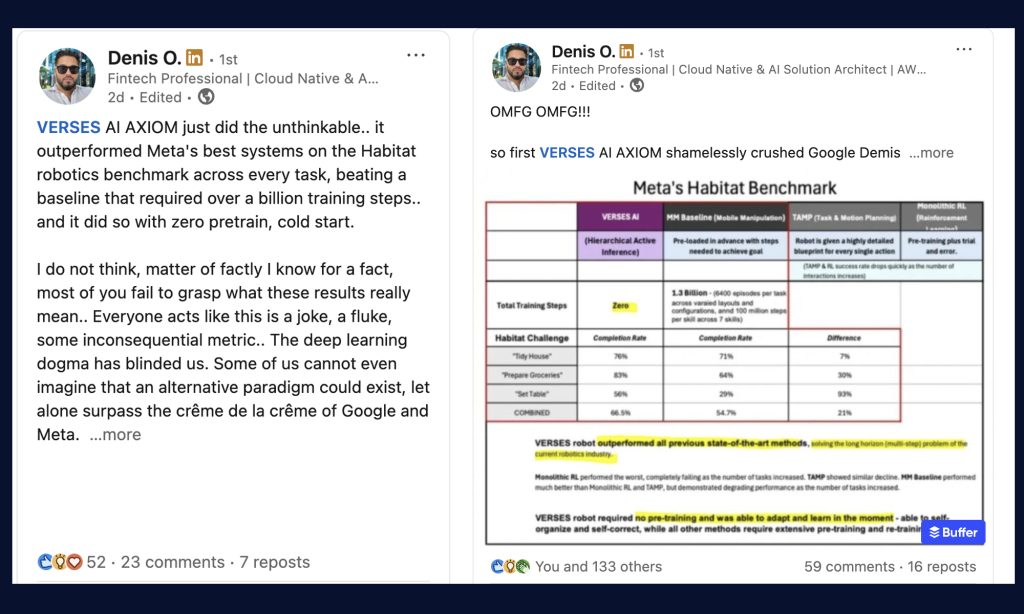

With VBGS mapping and AXIOM planning, this robot achieved real-time adaptability in unfamiliar environments, outperforming state-of-the-art reinforcement learning systems on Meta’s Habitat benchmark for mobile manipulation. For the first time, Active Inference scaled to whole-body robotic control, demonstrating how these models can achieve the flexibility and resilience that deep learning has long promised but failed to deliver.

This achievement has generated a great deal of excitement on LinkedIn this past week, as some big voices in AI have started to recognize the impact of this technology.

This robotics achievement is not an outlier. VERSES’ Active Inference technology has consistently outperformed (crushed!) the most state-of-the-art deep learning and reinforcement learning systems — systems that represent years of development and hundreds of billions of dollars in investment by Google, Meta, and more. That track record doesn’t just suggest a promising new approach; it signals a seismic shift in the field of AI. We are not looking at theory — we are looking at a tested, validated, and superior alternative to the current AI paradigm.

Digital Twins: From Passive Models to Active Intelligence

One of the most immediate enterprise applications of AXIOM and VBGS lies in digital twins — virtual replicas of real-world systems used in manufacturing, logistics, energy, and beyond.

Today’s digital twins are limited. They often rely on static models that lag behind reality. Updates are costly and computationally heavy, and uncertainty is rarely represented. As a result, digital twins tend to be passive dashboards rather than active collaboration tools.

AXIOM and VBGS transform this picture. VBGS provides continuously updating 3D maps that highlight not only what is known but also where uncertainty remains. AXIOM layers on reasoning and planning, enabling the twin to run real-time simulations, not just to mirror reality but to intervene intelligently by recommending actions, testing scenarios, and coordinating with other agents.

This evolution solves one of the biggest problems with digital twin simulation: keeping twins current with live information, adaptive, and actionable amid real-world complexity.

Enterprise and Industry Applications

The implications extend far beyond robotics. Enterprises can apply AXIOM and VBGS across every layer of their operations, from industry-facing systems to internal business processes.

- In warehousing and logistics, adaptive agents can reroute deliveries, reschedule operations, and update digital twins in real time.

- In healthcare, treatment planning can balance immediate patient care with long-term learning about outcomes.

- In smart cities, traffic, energy, and public services can be coordinated across thousands of distributed agents.

- And in finance, market models can adapt dynamically to uncertainty rather than relying on brittle, pre-set rules.

But the impact is not limited to external operations. Internal enterprise functions stand to be transformed as well:

- In marketing, Intelligent Agents built on AXIOM can simulate campaign strategies as “digital twins of customer journeys,” refining them in real time as data streams in, leading to more adaptive and personalized outreach.

- In human resources, agents can proactively predict turnover risk, optimize recruitment pipelines, and balance workforce planning dynamically — shifting HR from static reporting to real-time strategy.

- In cybersecurity, agents can model networks as objects and relationships, continuously testing and probing areas of uncertainty to harden defenses before attackers exploit them.

- Even internal financial planning can evolve: instead of quarterly reforecasting, adaptive models can continuously update forecasts as markets and supply chains shift, offering leaders a rolling, live view of risks and opportunities.

The common thread across all of these domains is adaptability. With AXIOM and VBGS, organizations gain systems that don’t just follow scripts; they learn, explain, and evolve as conditions change. By modeling uncertainty directly and planning to reduce it, they deliver intelligence that is dynamic, interpretable, and capable of working across networks of agents connected through the Spatial Web.

From Enterprises to the Spatial Web

AXIOM and VBGS mark a decisive shift in the history of AI. Where deep learning and reinforcement learning have plateaued, Active Inference is breaking through. VERSES has demonstrated systems that can see, plan, and act with remarkable efficiency — from game environments to robotics, from digital twins to enterprise networks.

The implications for enterprises are immediate. Marketing, HR, finance, and cybersecurity can all be reimagined as adaptive systems rather than reactive departments. Each function gains agents that learn continuously, model uncertainty, and plan to reduce it — transforming business operations into living, evolving networks of intelligence.

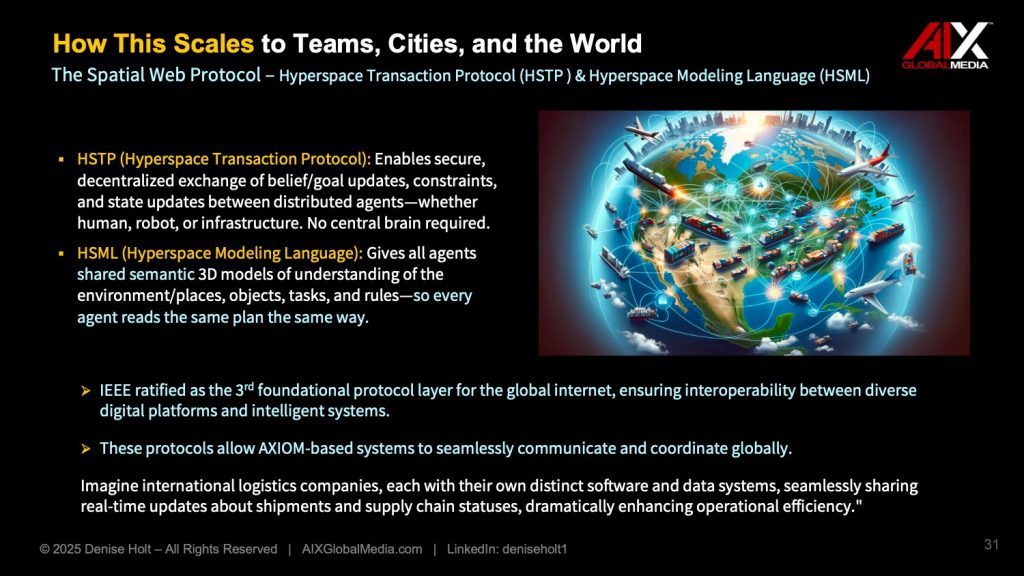

And yet, the story does not end within a single organization. The true power of this technology emerges when these Active Inference agents interconnect across companies, industries, and public systems through the Spatial Web Protocol, including HSTP (Hyperspace Transaction Protocol) and HSML (Hyperspace Modeling Language). The Universal Domain Graph (UDG) of all entities and nested systems will play an important role for VBGS and AXIOM agents. It provides a common language for the shared meaning of object identity, attributes, and behaviors, along with a framework for real-time semantic synchronization and an ever-evolving contextual substrate for these agents and their observations. Instead of siloed dashboards and brittle integrations, networks of intelligent agents can exchange structured beliefs in real time, enabling seamless collaboration across global supply chains, smart cities, and entire ecosystems of commerce.
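“Exchanging structured beliefs” can be illustrated with a message that carries an estimate together with its uncertainty, and a receiver that fuses two independent Gaussian estimates by precision weighting (the product of two Gaussians), so the more certain report counts for more. The message fields and the `pallet-17` entity are hypothetical, this is not the HSML schema, and the fusion rule assumes the two reports are independent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BeliefMessage:
    """Hypothetical shared-belief message (not the HSML format)."""
    entity_id: str   # which object the belief is about
    attribute: str   # which property, e.g. "position_m"
    mean: float
    variance: float
    sender: str


def fuse(a: BeliefMessage, b: BeliefMessage, receiver: str) -> BeliefMessage:
    """Combine two independent Gaussian beliefs about the same attribute."""
    if (a.entity_id, a.attribute) != (b.entity_id, b.attribute):
        raise ValueError("beliefs refer to different entities or attributes")
    precision = 1.0 / a.variance + 1.0 / b.variance
    mean = (a.mean / a.variance + b.mean / b.variance) / precision
    return BeliefMessage(a.entity_id, a.attribute, mean, 1.0 / precision, receiver)


warehouse = BeliefMessage("pallet-17", "position_m", 10.0, 4.0, "warehouse-agent")
truck = BeliefMessage("pallet-17", "position_m", 12.0, 1.0, "truck-agent")
fused = fuse(warehouse, truck, receiver="logistics-agent")
print(fused)
```

The fused estimate lands closer to the more confident truck report and is more certain than either input, which is the practical payoff of sharing uncertainty rather than bare values.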

This next era of intelligence will not be centralized in giant black-box models. It will be distributed, adaptive, and explainable, operating as networks of interoperable agents, at the edge on everyday devices and autonomous systems. AXIOM and VBGS are the first concrete steps into that future, and understanding them is essential for anyone who wants to navigate and shape what comes next.

How to Pilot in Your Organization: Education as Strategy

For leaders and teams who want to prepare for this next era of AI, the path forward begins with education and small-scale pilots. This technology is powerful, but the first step is understanding how it works and why it matters.

- Start with Genius™: VERSES AI has a no-code platform that lets you explore Active Inference directly. It’s an accessible way to experiment with causal models, see how systems adapt in real time, and build intuition for how Active Inference differs from static machine learning.

- Experiment with AXIOM: For researchers, the AXIOM Gameworld 10K codebase is available open source under an Academic License, letting you experiment with and learn how object-centric inference, variational updates, and planning operate under the hood. This allows for independent review, replication, and engagement from the research community.

- Choose a Small Domain-Specific Problem: Start with a focused use case that Genius is designed for, such as optimizing systems, resource allocation, recommendation systems, predictive maintenance, or risk assessment. These are scenarios where uncertainty and changing conditions matter, and where Active Inference’s adaptive, causal modeling delivers immediate value.

Just as important as the technical pilots is the educational component.

Because Active Inference AI and the newly IEEE-ratified Spatial Web Protocol represent a complete shift in how intelligence is understood and implemented, even the most experienced AI professionals cannot approach this new paradigm with old mindsets. This is not just another tool to add to the stack; it’s a new foundation. The first step in piloting Active Inference in your organization is not only technical deployment, but education.

That’s why I formed AIX Global Media, and it’s why we created our executive education program on Active Inference AI and Spatial Web Technologies. These initial courses are designed as the essential primer — the knowledge base everyone needs before building or deploying with Active Inference models like AXIOM and VBGS. They walk you through the principles, the architecture, the applications, and most importantly, the mindset shift from AI that recognizes patterns and generates content, to AI that is self-organizing and capable of adaptive, uncertainty-aware reasoning.

Completing this program and earning our certification sets you apart. It signals that you are not only aware of this paradigm shift, but prepared and knowledgeable enough to lead within it. In a competitive landscape where many still operate under the limits of deep learning, this education opportunity positions you on the future-proof side of business and your career.

To support this journey, I also founded Learning Lab Central as the global hub for education, collaboration, and discovery in this space. It’s not just where you’ll find training and certification programs, it’s also where you’ll connect with peers around the world who are exploring how Active Inference and the Spatial Web will reshape industries and society.

And because this field is evolving quickly, every month I host Learning Lab LIVE: a 40-minute presentation on a timely topic, followed by an open roundtable discussion among attendees from our growing community. These sessions allow us to learn together, ask critical questions, and deepen our collective understanding of this massive transformation as it unfolds.

Piloting is not only about testing software; it's about building literacy in this next generation of AI.

Every major technological shift creates leaders and followers, and those who fail to keep pace inevitably get left behind.

The early adopters of the internet, mobile, and cloud computing became the architects of the modern digital economy. Today, the same opportunity stands before us with Active Inference AI and the Spatial Web — only this time, the scale is far greater.

This is a true “blue ocean” opportunity — an uncharted market space that is free from competition, offering vast potential for growth and profit. As such, it will open possibilities that will likely eclipse every digital transformation before it.

By educating yourself and your teams now, you are not just preparing for change — you are positioning yourself at the forefront of an entirely new era. The time to act is not tomorrow, or someday, it is now. Those who begin today will be the ones defining the future of intelligence.

I encourage you to join us this Wednesday, August 27th, 2025, for Learning Lab LIVE! The topic is AXIOM and VBGS. I begin with a 40-minute educational presentation, and we finish with a 1-hour roundtable discussion among all attendees. (My favorite part!)

To receive your invitation, simply create a profile on Learning Lab Central, and you will automatically receive an invite! … See you there!


Join the conversation every month for Learning Lab LIVE!