Active Inference & The Spatial Web

Web 3.0 | Intelligent Agents | XR Smart Technology

Ten Years Later: Katryna Dow Reflects on Data Privacy & Decentralized Identity

Spatial Web AI Podcast

- By Denise Holt

- March 30, 2025

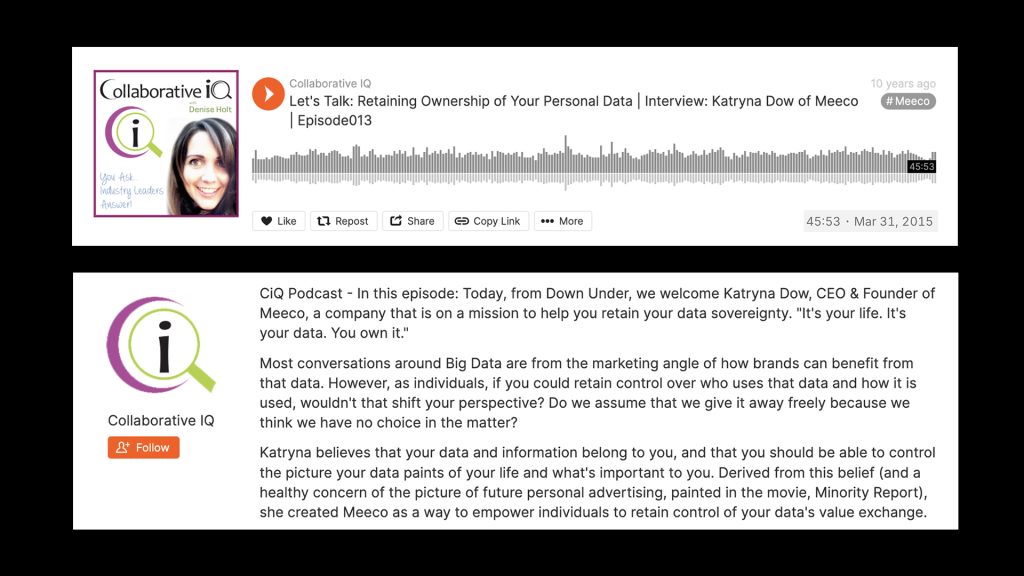

Ten years ago, on March 31, 2015, I hosted Katryna Dow, CEO and Founder of Meeco, on my former podcast Collaborative IQ to discuss an emerging concern: personal data privacy.

Katryna is one of the earliest pioneers tackling data privacy and personal digital identity, and her company, Meeco, “helps organizations build compliant, future-proof solutions that give their customers the power to access, control and exchange data on their own terms.”

That episode ten years ago was titled “Let’s Talk: Retaining Ownership of Your Personal Data,” and in it, we addressed themes that, at the time, seemed prescient but also somewhat abstract to many listeners. A decade later, Katryna and I reunited on my current podcast, Spatial Web AI, to revisit these topics, examining how data privacy, digital identity, and decentralized architectures have dramatically evolved, often validating Katryna’s early concerns.

Watch Now:

YouTube: https://youtu.be/eCHXr7mk3LY

Spotify: https://open.spotify.com/episode/4FPOGe6njbgaSdnieVe82S?si=YLCVRXOSQf-l0nYfEa0mqw

Early Warnings and the Cambridge Analytica Moment

In our original conversation, Katryna expressed deep unease about the unchecked collection of personal data and the potential for misuse—a sentiment powerfully validated by the Cambridge Analytica scandal just a few years later in 2018. This event became a watershed moment, demonstrating the tangible risks Katryna had warned about years prior: mass data harvesting, psychological profiling, and targeted influence campaigns.

From Centralization to Decentralization

A significant change between the initial interview and today, in 2025, has been the shift from centralized databases (the so-called “honeypots” for hackers) to decentralized architectures. Katryna described this transformation vividly, emphasizing Meeco’s commitment to decentralized technology since its inception. She highlighted how decentralization reduces the risk of large-scale data breaches by returning control of data to the individuals it belongs to. One important development in this area is Meeco’s collaboration with DNP in Japan, which leverages decentralized identity (DID) solutions to provide secure, privacy-oriented data exchanges.

The Evolution of Regulatory Frameworks

The regulatory landscape has changed drastically over the past decade. At the time of our 2015 discussion, privacy regulations were fragmented, limited, or altogether absent. Fast forward to today, and frameworks like the GDPR in Europe, Australia’s Consumer Data Right, and Japan’s stringent privacy laws represent profound progress. Katryna explained how these regulations, which initially appeared ambitious or overly stringent, now serve as essential protections for digital identities and consumer rights.

Predictive Analytics to Intelligent Agents

One intriguing thread from our 2015 conversation was predictive analytics, framed through the lens of the film Minority Report. Today, Katryna and I noted that reality has, in many ways, surpassed fiction. Predictive analytics have evolved into sophisticated AI-driven agents that not only predict behaviors but also actively assist in everyday decision-making. While discussing the benefits of personalized AI, Katryna emphasized the ongoing ethical and practical challenges of creating trusted systems that genuinely serve individual users, highlighting the critical importance of data consent and control.

Consent and Trust: The Heart of Privacy

The notion of consent has evolved significantly over the last decade. Katryna observed that while younger generations are increasingly comfortable with what I referred to as “living out loud” digitally, awareness is growing around the need for clearly defined consent and context-specific data sharing. She cited Apple’s incremental steps toward privacy by design, such as giving users granular control over location sharing, as examples of progress toward more nuanced consent models.

Looking forward, Katryna suggested that the next decade will be defined by one central theme: trust. Trust, she argued, is foundational to the future of digital interactions, encompassing cryptographic verification, transparency of data origins, and ethical AI applications. Establishing robust digital trust mechanisms will be key to ensuring that technology genuinely serves humanity rather than exploiting its vulnerabilities.

The Road Ahead: Challenges and Opportunities

Despite remarkable progress, the road ahead is filled with complex challenges, including geopolitical differences in privacy standards, rapid technological advances outpacing regulations, and the increasing sophistication of cyber threats. However, Katryna remains optimistic, driven by collaborative global efforts toward standardized decentralized systems and enhanced digital trust frameworks.

Reflecting on ten years of progress and pitfalls, this conversation I had with Katryna Dow underscores the urgency and excitement of navigating a rapidly evolving digital landscape. From early warnings to tangible progress, our dialogue is a compelling reminder of how quickly technology can evolve—and how crucial it is for individuals, businesses, and governments to stay vigilant and proactive.

See You in 2035!

Now the question remains: what technological shifts and societal impacts will the next decade reveal? Given the accelerating pace of AI and computing innovation, 2035 promises a technological landscape vastly different from today's. Katryna and I plan to reconvene in ten years to assess just how transformative the decade proves to be and to discuss the wild and crazy future yet to come.

To hear the full conversation and explore these critical themes in greater depth, tune into this special episode of the Spatial Web AI Podcast featuring my ten-year anniversary conversation with Katryna Dow. (Bonus: at the end of this current episode, I have attached the audio of our previous interview which originally aired on March 31, 2015.)

Huge thank you to Katryna Dow for being on our show!

Connect with Katryna Dow:

LinkedIn: https://www.linkedin.com/in/katrynadow/

To learn more about data privacy infrastructure, visit Meeco at https://www.meeco.me/


Episode Transcript: