Active Inference & The Spatial Web

Web 3.0 | Intelligent Agents | XR Smart Technology

VERSES AI Research Reveals Real-World Use Cases for Active Inference AI

Spatial Web AI Podcast

- By Denise Holt

- August 18, 2025

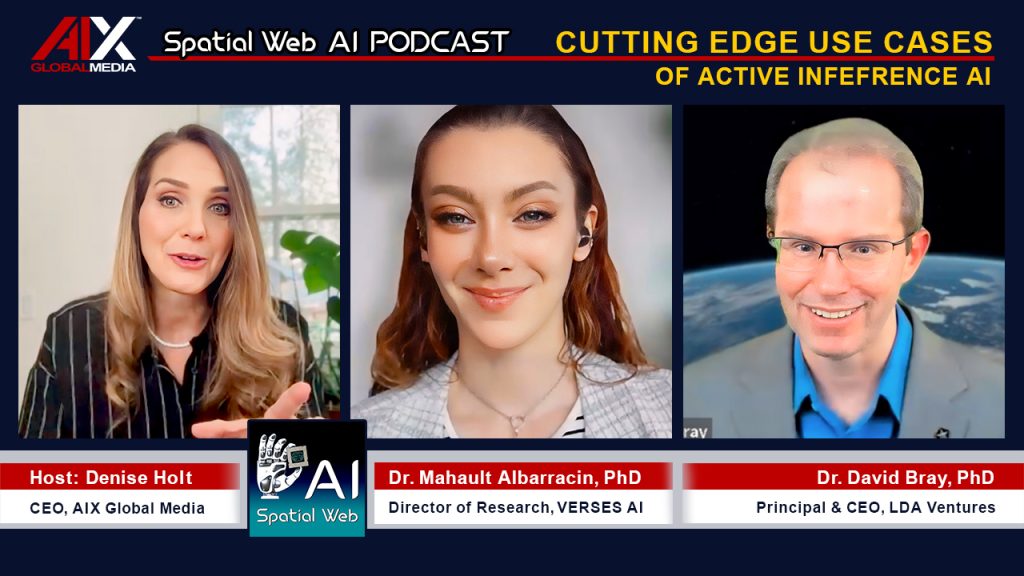

In the latest episode of the Spatial Web AI Podcast, recorded on August 7, 2025, I sat down with Mahault Albarracin, PhD, Director of Research Strategy and Product Innovation at VERSES AI, and David Bray, PhD, Distinguished Fellow with the Stimson Center and an expert in global policy and governance. Our discussion pulls back the curtain on the real-world applications of Active Inference AI and the Spatial Web.

While generative AI has captured public attention, the next wave of intelligent systems is quietly emerging through Active Inference, a framework grounded in the Free Energy Principle, which was pioneered by world-renowned neuroscientist Dr. Karl Friston, Chief Scientist at VERSES AI.

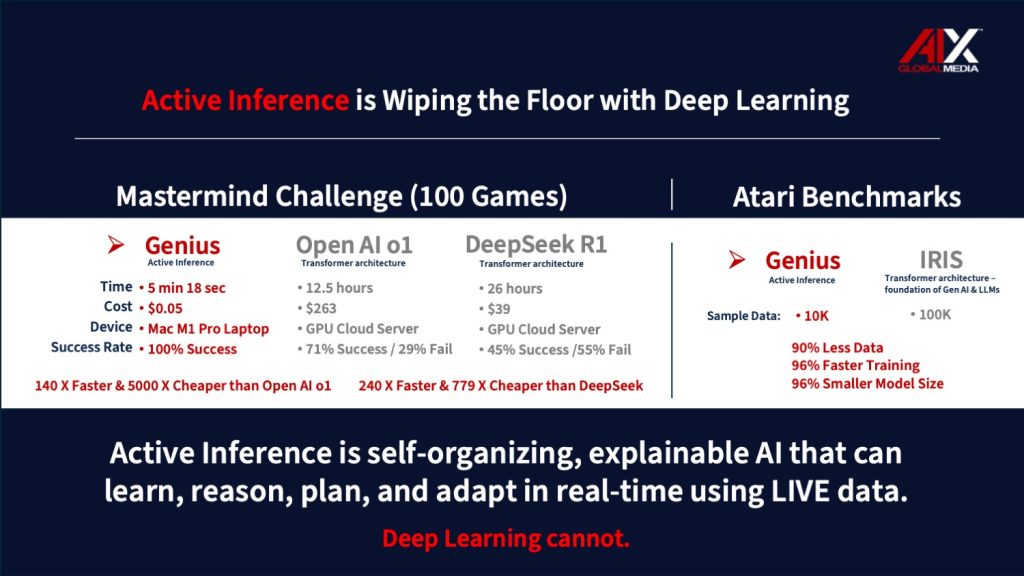

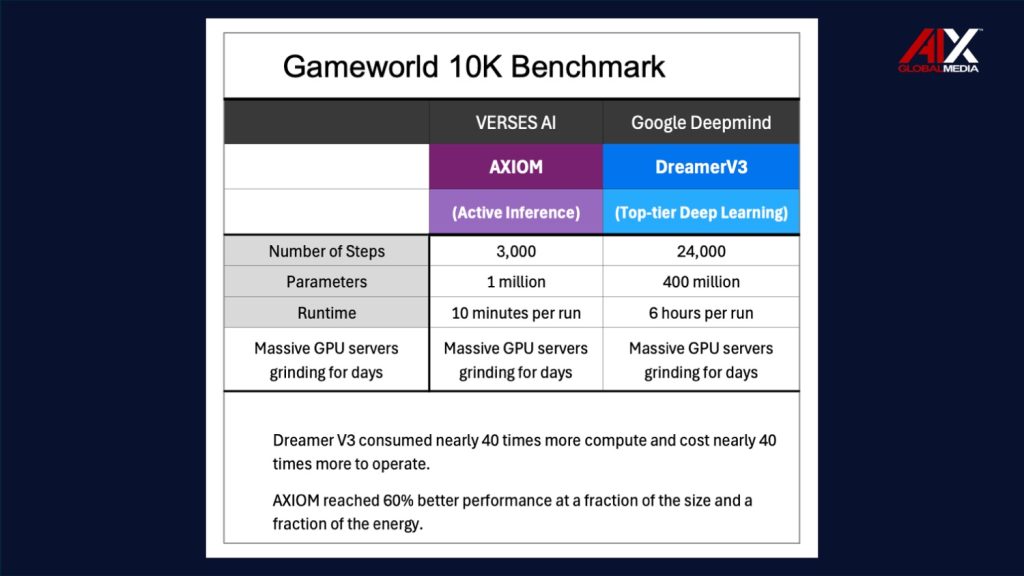

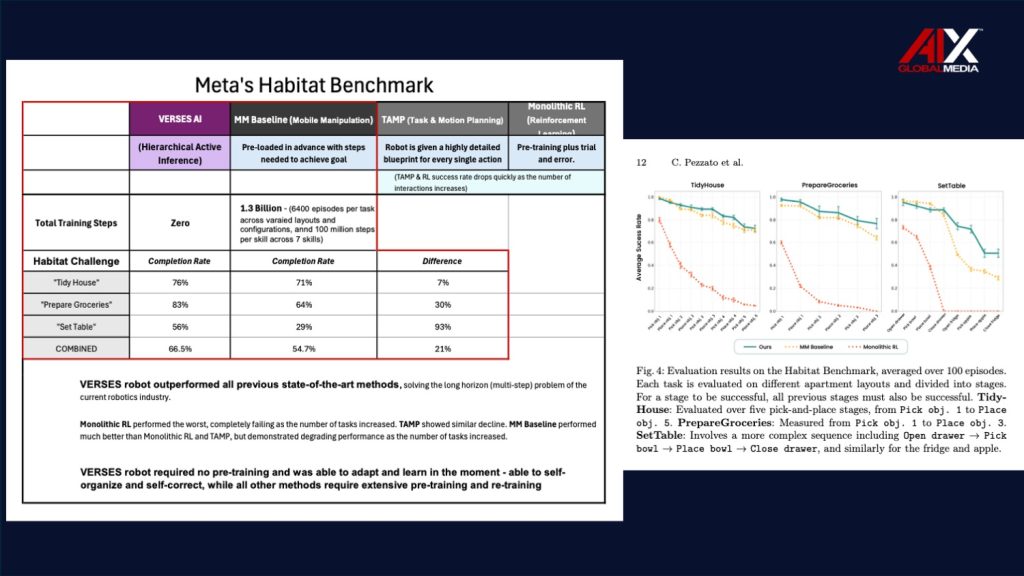

VERSES’ most recent Active Inference breakthroughs are consistently outperforming state-of-the-art reinforcement learning and deep learning flagship models. Their AXIOM model beat Google DeepMind’s DreamerV3, and their latest research paper presents an entirely new Active Inference architecture for robotics that demonstrates superior performance in Meta’s Habitat challenge. (See results charts at the end of this article.)

Until recently, much of the conversation around Active Inference and the Spatial Web has centered on theory and high-level vision. But in this episode, Mahault gives us a rare, behind-the-scenes look at the specific use cases that the VERSES research team is working on right now. For anyone wondering how these technologies manifest in practice, this conversation is one you won’t want to miss.

Watch Now:

YouTube: https://youtu.be/YYyca7dzYoA

Spotify: https://open.spotify.com/episode/3DV2jXLHgdhYstofsMkNRp

Active Inference AI in Action

Mahault highlights how the research team is applying Active Inference and the Free Energy Principle to real-world challenges. From robotics control systems that can learn and adapt in unfamiliar environments to intelligent logistics networks capable of predicting and responding in real time, these examples showcase how VERSES is engineering intelligence that reasons, plans, and acts in context.

Why These Use Cases Matter

Most discussions of AI today revolve around large language models. While powerful, they lack the adaptive, real-time causal reasoning required for the next generation of trusted intelligent systems. Active Inference AI changes this equation entirely.

By grounding AI in the principles of self-organizing systems, VERSES is creating intelligent agents that can:

- Sense and respond to dynamic environments in real time.

- Plan and act across multiple scales simultaneously.

- Adapt on the fly without retraining on massive datasets.

These aren’t abstract concepts anymore. They’re being built, tested, and deployed today.

A Glimpse Into the Future

David Bray adds an important perspective by exploring the societal and global policy implications of this technology. As Active Inference AI scales, it will impact governance, commerce, and the ways we interact with digital and physical spaces.

This episode captures an exciting moment: the bridge between research and reality. For leaders, innovators, and anyone tracking the future of AI, this is a chance to see what’s emerging directly from the VERSES research team — and to understand why these use cases signal the dawn of a new intelligence layer for the internet.

What You'll Learn in This Episode

- The Science Behind Active Inference: Mahault Albarracin unpacks how Active Inference differs from today’s generative AI. Rather than relying solely on vast training datasets, Active Inference enables systems to adapt in real time, infer hidden states of the world, and update their internal models dynamically. This makes them more energy-efficient, scalable, and capable of reasoning about uncertainty.

- Societal Impacts and Global Use Cases: David Bray highlights why Active Inference matters for society at large, from public health and climate resilience to governance and global security. We discuss how intelligent agents designed with Active Inference can help humans navigate complexity, manage distributed systems, and coordinate collective action at scale.

- Why This Shift Matters Now: Together, we dive into how organizations, governments, and communities can prepare for a future where Active Inference Agents are deployed across industries. From robotics and supply chains to personalized healthcare and education, the implications are profound.
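
The idea of inferring hidden states and updating an internal model as observations arrive can be illustrated with a toy example. The sketch below is plain Bayesian belief updating over two hidden states. That is only one ingredient of Active Inference (it leaves out action selection and free energy minimization), and it is not VERSES’ implementation. The door scenario, the probabilities, and the function name `update_belief` are all hypothetical.

```python
def update_belief(prior, likelihood, observation):
    """Apply Bayes' rule over discrete hidden states.

    prior:       list of P(state), one entry per hidden state
    likelihood:  likelihood[o][s] = P(observation o | state s)
    observation: index of the observation that just arrived
    """
    unnormalized = [likelihood[observation][s] * prior[s]
                    for s in range(len(prior))]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Hypothetical toy world: a door is open (state 0) or closed (state 1),
# and the agent cannot see the door directly.
prior = [0.5, 0.5]

# Rows are observations (0: "path looks clear", 1: "path looks blocked"),
# columns are hidden states. The numbers are made up for illustration.
likelihood = [
    [0.9, 0.2],
    [0.1, 0.8],
]

# The belief is revised after every observation, with no retraining step.
belief = prior
for obs in [0, 0, 1]:
    belief = update_belief(belief, likelihood, obs)
    print(f"observation={obs} -> P(open)={belief[0]:.3f}")
```

Each new observation shifts the belief immediately, which is the sense in which such a system "adapts in real time" rather than waiting for a new training run.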

Key Highlights from the Conversation

- Karl Friston’s Free Energy Principle — Mahault brings forward the foundational science of minimizing uncertainty/surprise (free energy) as the unifying idea behind how biological systems learn, perceive, and act.

- Real-World Applications Emerging — We explore examples such as robotics teams building new control stacks, real-time decision-making for smart cities, and decentralized systems capable of adapting without retraining on massive datasets.

- Ethics and Governance — David Bray shares perspectives on how these technologies should be implemented responsibly, ensuring societal benefit while addressing risks.

- The Convergence of Technologies — We discuss how the Spatial Web, distributed intelligence, and Active Inference together are unlocking a new paradigm for human-machine collaboration.
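
For readers who want the formal statement behind the first highlight above, the standard textbook form of variational free energy is sketched here. The symbols are assumptions introduced for this note, not notation from the episode: $o$ for observations, $s$ for hidden states, $p(o, s)$ for the agent's generative model, and $q(s)$ for its approximate belief about the hidden states.

```latex
F[q] = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big]
     = D_{\mathrm{KL}}\big[\,q(s)\,\|\,p(s \mid o)\,\big] - \ln p(o)
```

Because the KL divergence is never negative, $F$ is an upper bound on surprise, $-\ln p(o)$. Lowering $F$ therefore pulls the belief $q(s)$ toward the true posterior and tightens that bound.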

Why You Should Tune In

If you’ve been tracking the limitations of current AI, including its energy demands, lack of causal reasoning, and inability to generalize, you’ll want to tune in to our conversation. Active Inference AI represents a fundamental shift, one that aims to reshape how we design intelligent systems for society’s greatest challenges.

The future of AI is not just about faster models — it’s about intelligent, adaptive systems that can reason, plan, collaborate, and co-regulate. This conversation with Mahault Albarracin and David Bray will help you understand why Active Inference AI is the breakthrough we’ve been waiting for, and its potential to transform the future of society.

Watch the Full Episode

Multiple Industry Benchmarks and Challenges Demonstrating Consistent Superior Performance by VERSES AI Against All State-of-the-Art Models:

Ready to learn more? JOIN US at AIX Learning Lab Central, where you will find a series of executive training courses and the only advanced certification program available in this field. You’ll also discover an education repository known as the Resource Locker, an extensive collection of the latest research papers, articles, video interviews, and more, all focused on Active Inference AI and Spatial Web Technologies.

Membership is FREE, and if you join now, you’ll receive a special welcome code for 30% off all courses and certifications.

Episode Transcript: