Active Inference & Spatial AI

The Next Wave of AI and Computing

Energy Efficiency Isn't Enough Without Adaptive Real-Time System-Level Control

- By Denise Holt

- February 2, 2026

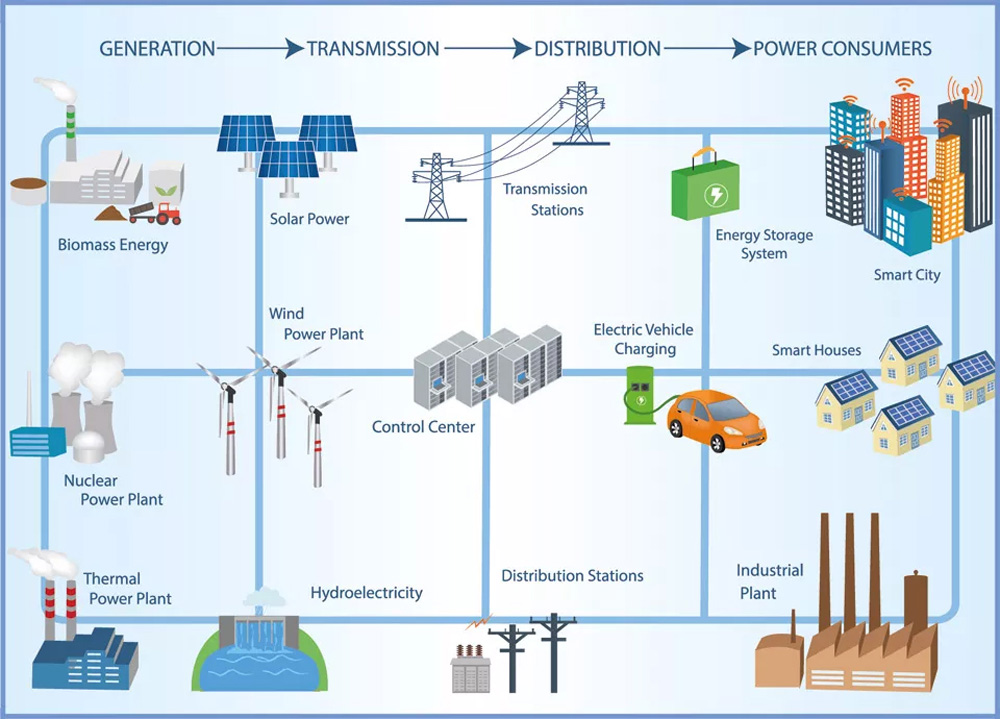

For decades, the energy conversation has revolved around supply. How do we generate more? How do we decarbonize faster? How do we build enough capacity to support electrification, digital infrastructure, and economic growth?

The implicit assumption has been that energy constraints are primarily a production problem.

Energy efficiency has been the primary lever for improving the performance of energy systems. More efficient turbines, better insulation, advanced cooling technologies, LED lighting, smarter scheduling, and increasingly sophisticated optimization software have all delivered measurable gains. These advances matter, and they will continue to matter.

But across modern infrastructure, from hyperscale data centers to stadiums, campuses, and distributed microgrids, efficiency gains are no longer translating cleanly into lower cost, higher capacity, or greater resilience. A quieter constraint is emerging. As systems grow more complex, more interconnected, and more volatile, energy is not simply limited by how much we can produce. It is limited by how coherently we govern what we already have.

Energy efficiency improves components. Adaptive, real-time system-level control determines outcomes.

Modern energy infrastructure is extraordinarily sophisticated at the component level. Cooling systems in hyperscale data centers are finely engineered. Power distribution networks are meticulously protected. Stadium electrical systems are designed to handle massive, synchronized loads under peak spectacle conditions. Each subsystem is optimized for its local objective: safety, uptime, comfort, reliability.

What is missing is coherent governance across those subsystems.

Energy systems today are operated as collections of optimized silos. They are not governed as unified, adaptive systems, and that fragmentation has measurable economic, operational, and increasingly civic consequences.

Where Energy Is Quietly Lost

Energy loss in modern infrastructure rarely appears as dramatic failure. It appears as persistent overcompensation.

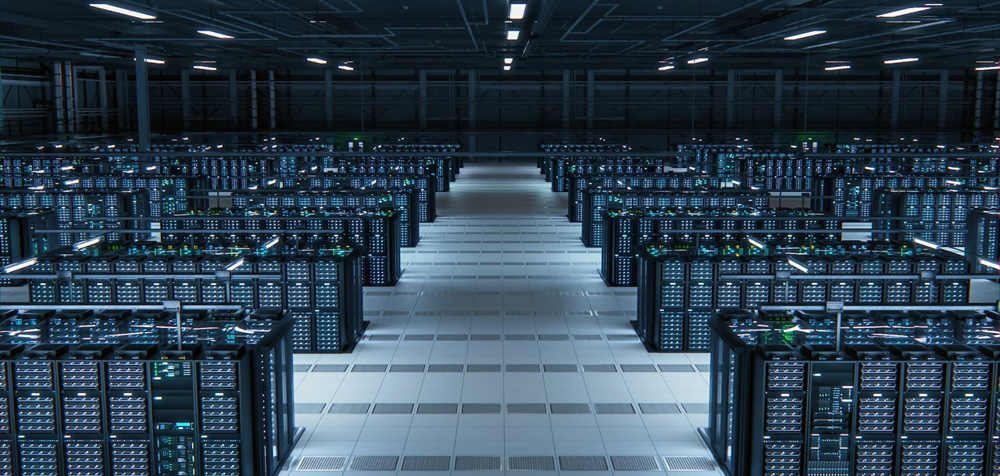

Consider a modern data center. Layers of redundancy protect uptime. Cooling systems anticipate thermal excursions. UPS systems buffer instability. Backup generators stand ready. Safety margins are embedded everywhere because failure is unacceptable.

Those margins are rational. They are designed to prevent catastrophic events. But they are also static. They are conservative by design, because the systems coordinating them lack continuous adaptive alignment.

When cooling loops react independently to localized thermal shifts, they overshoot. When electrical subsystems widen margins to absorb uncertainty, they operate below true capacity. When batteries and backup systems follow fixed rules rather than responding to a continuously updated view of system-wide state, energy is buffered rather than utilized.

Individually, these behaviors are rational. Each is locally optimized for safety. Each is designed to avoid failure.

But collectively, they create continuous misalignment.
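To make the overshoot mechanism concrete, here is a minimal Python sketch built on a deliberately simplified, hypothetical model: two cooling units act on one shared zone, each applying cooling proportional to the same temperature error. The per-unit gain of 0.8 is an assumption chosen so that either unit acting alone settles smoothly; the `simulate` function and all numbers are illustrative, not drawn from any real facility.

```python
def simulate(gains, error0=2.0, steps=20):
    """Return (error trajectory, total cooling effort) for units sharing one zone.

    Each unit applies cooling proportional to the shared temperature error;
    the zone responds to the *sum* of all units' actions (toy linear model).
    """
    errors = [error0]
    effort = 0.0
    e = error0
    for _ in range(steps):
        actions = [k * e for k in gains]          # every unit reacts to the same error
        effort += sum(abs(a) for a in actions)    # cooling work spent this step
        e = e - sum(actions)                      # zone sees the combined action
        errors.append(e)
    return errors, effort

# Each unit tuned in isolation (gain 0.8 is well damped when acting alone).
indep_errors, indep_effort = simulate([0.8, 0.8])
# Coordinated: the same total gain as one well-tuned unit, shared between the two.
coord_errors, coord_effort = simulate([0.4, 0.4])

print(f"independent: min error {min(indep_errors):+.2f}, effort {indep_effort:.2f}")
print(f"coordinated: min error {min(coord_errors):+.2f}, effort {coord_effort:.2f}")
```

With both units tuned in isolation, the zone swings past setpoint (the error goes negative) and total cooling effort roughly quadruples; splitting the same total gain between the units removes the overshoot. Real plants have lags and nonlinearities, but the coupling effect is the same in kind.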

The same pattern appears in stadiums and large venues. Massive load spikes occur during events through lighting, broadcast infrastructure, hospitality services, HVAC, and security systems. Infrastructure is built to accommodate peak spectacle, and control strategies are designed to avoid risk under extreme conditions.

Yet subsystems react to volatility independently, widening margins and overshooting to protect reliability. Cooling anticipates peak occupancy without coordinating with real-time electrical load behavior. Distribution systems maintain conservative limits even when transient conditions would allow tightening. Peak contracts are negotiated around worst-case coincidence rather than adaptive response.

Energy is not lost in dramatic failures. It is lost in quiet, continuous misalignment, particularly during short-duration peaks that lock in outsized economic and operational penalties.

Don't We Already Use AI to Optimize Energy?

Many organizations believe they are already addressing inefficiency through artificial intelligence. In most cases, what has been deployed is predictive modeling layered onto static optimization engines. These tools improve forecasts, detect anomalies earlier, and suggest incremental setpoint adjustments.

They do not fundamentally change system behavior under stress.

Optimization engines calculate optimal configurations under modeled assumptions. When real conditions diverge, they recalculate. They do not maintain coherent behavior across interacting subsystems in real time.

Why Forecasting Has Not Solved the Problem

The industry’s response to inefficiency has largely centered on prediction. If we forecast load more accurately, we assume, we can reduce buffers. If we detect anomalies earlier, we can tighten margins. If we optimize scheduling algorithms, we can increase utilization.

These efforts have produced incremental improvements. The tools are valuable, but they have not fundamentally changed how infrastructure behaves under uncertainty. They do not govern execution.

A forecast tells you what might happen in the next hour. It does not coordinate how cooling, storage, distribution, and load subsystems should dynamically interact as conditions evolve minute by minute. Governance determines how the system behaves as conditions change in real time.

Optimization engines solve for configurations under modeled assumptions. Real systems continuously diverge from those assumptions.

Energy infrastructure is not static. It is a coupled dynamical ecosystem operating under continuous uncertainty. Equipment degrades. Weather shifts. Compute loads spike unpredictably. Human behavior changes patterns. Grid conditions fluctuate.

When reality diverges from modeled assumptions, local controllers default to conservative behavior. Buffers widen. Oscillation increases. Margins remain static even when dynamic tightening would be safe.

Predictive forecasting improves anticipation. It does not, by itself, govern execution.

Energy infrastructure does not primarily need better prediction. It needs coherent control under uncertainty.

Data Centers: The Economics of Coordination

Nowhere is this more visible than in hyperscale data centers, where power density is increasing rapidly and grid interconnection limits are tightening.

Take a 50-megawatt IT data center operating at a PUE of 1.35. A modest improvement to 1.32 reduces continuous facility draw by approximately 1.5 megawatts. Over the course of a year, that reduction exceeds 13 million kilowatt-hours. At $0.09 per kilowatt-hour, that translates to direct savings of roughly $1.18 million annually.
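The figures above can be checked with a few lines of Python. The sketch assumes the IT load holds at 50 MW for all 8,760 hours of the year and that the $0.09/kWh price applies to every saved kilowatt-hour; `pue_savings` is a hypothetical helper written for this illustration.

```python
HOURS_PER_YEAR = 8760

def pue_savings(it_load_mw, pue_before, pue_after, price_per_kwh):
    """Facility-power reduction and annual savings from a PUE improvement at constant IT load."""
    facility_reduction_mw = it_load_mw * (pue_before - pue_after)   # facility = IT x PUE
    annual_kwh = facility_reduction_mw * 1000 * HOURS_PER_YEAR      # MW -> kW, over a full year
    annual_savings = annual_kwh * price_per_kwh
    return facility_reduction_mw, annual_kwh, annual_savings

mw, kwh, usd = pue_savings(50, 1.35, 1.32, 0.09)
print(f"{mw:.2f} MW, {kwh / 1e6:.2f} million kWh/yr, ${usd / 1e6:.2f}M/yr")
# prints: 1.50 MW, 13.14 million kWh/yr, $1.18M/yr
```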

Those gains do not require new cooling towers or redesigned switchgear. They emerge from tighter coordination between electrical distribution, thermal systems, and workload behavior. From reducing oscillation. From narrowing margins dynamically rather than statically.

But the strategic impact emerges when facility power is capped.

Under a 70-megawatt facility limit, that same improvement unlocks more than one additional megawatt of sellable IT capacity. In power-dense markets, that incremental capacity translates into millions in additional annual revenue potential — without expanding utility contracts or installing new hardware.
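Under a facility power cap, the relevant quantity is the largest IT load the cap can host, which is the cap divided by PUE. A minimal sketch, with `max_it_load_mw` as a hypothetical helper:

```python
def max_it_load_mw(facility_cap_mw, pue):
    """Largest IT load a facility can host when total facility power is capped."""
    return facility_cap_mw / pue

cap = 70
gain = max_it_load_mw(cap, 1.32) - max_it_load_mw(cap, 1.35)
print(f"IT capacity at PUE 1.35: {max_it_load_mw(cap, 1.35):.2f} MW")   # 51.85 MW
print(f"IT capacity at PUE 1.32: {max_it_load_mw(cap, 1.32):.2f} MW")   # 53.03 MW
print(f"additional sellable IT capacity: {gain:.2f} MW")                # 1.18 MW
```

The difference, about 1.18 MW, matches the "more than one additional megawatt" above. Any revenue figure built on top of it depends on local pricing for IT capacity, which the sketch does not model.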

What changed was not more production. It was adaptive, real-time coordination of existing infrastructure.

It is about converting wasted overhead into usable output.

Stadiums and Dynamic Energy Load Environments

Stadiums and large venues present a different but equally compelling case. During major events, these environments experience dramatic, synchronized load spikes. Lighting, broadcast systems, hospitality services, HVAC, and security infrastructure surge simultaneously.

A conservative three percent reduction in total facility draw during peak events can reclaim roughly half a megawatt of capacity. Direct annual energy savings may reach six figures. More importantly, reclaimed headroom can be monetized through expanded services or enhanced experiential infrastructure without renegotiating peak contracts.
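The article does not state the venue's peak draw, but the two figures it gives imply one: if three percent equals roughly 0.5 MW, peak draw is near 0.5 / 0.03, or about 16.7 MW. The sketch below inverts that relationship and then tabulates reclaimed headroom for a few peak loads; `reclaimed_headroom_mw` is an illustrative helper, and the peak values in the loop are hypothetical.

```python
def reclaimed_headroom_mw(peak_draw_mw, reduction_fraction=0.03):
    """Capacity freed at peak when total facility draw falls by `reduction_fraction`."""
    return peak_draw_mw * reduction_fraction

# Peak draw implied by the article's figures (about 0.5 MW reclaimed at a 3% reduction).
implied_peak_mw = 0.5 / 0.03
print(f"implied peak draw: {implied_peak_mw:.1f} MW")

# Hypothetical venue sizes, for comparison.
for peak in (10, 15, 20, 25):
    print(f"peak {peak} MW -> {reclaimed_headroom_mw(peak):.2f} MW reclaimed")
```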

The larger impact is operational flexibility. The ability to reclaim margin without altering the audience experience changes the economics of venue operations.

In these environments, experience quality is non-negotiable. Any control strategy that compromises perception, comfort, or reliability is unacceptable. This constraint sharply limits the applicability of blunt demand-response techniques or static efficiency measures.

Once again, the opportunity is not about generating more power.

AI Compute, Grid Stress, and the Capacity Wall

As AI workloads scale and high-performance compute expands, power demand is increasing at unprecedented rates. In many regions, grid interconnection queues are lengthening. Utilities face mounting pressure to approve high-load facilities while maintaining system stability.

The default response has been expansion — new substations, new generation, new transmission corridors.

Yet even before new capacity is built, many facilities are already operating near internal limits. Cooling envelopes are sized for worst-case thermal conditions. Electrical systems are tuned conservatively. Power contracts are capped.

Under these constraints, the value of each incremental megawatt rises dramatically.

Recovering even two percent of facility power from wasted overhead can support the deployment of additional compute clusters, expand operations, or delay costly upgrades. In regions where grid expansion may take years, yield amplification from existing infrastructure becomes strategically decisive.

The conversation about AI and energy often focuses on consumption. A more productive question is how intelligently that energy is governed.

Industry Recognition: Flexibility Requires Architecture

This reality is now being recognized at the industry level. Initiatives such as the Electric Power Research Institute's (EPRI) DCFlex (Data Center Flex) program are exploring how large loads, particularly data centers, can operate as flexible grid participants rather than static consumers. Utilities and operators are actively examining how high-demand facilities might modulate load, respond to grid stress, or align more dynamically with renewable variability.

This represents a meaningful shift in thinking. It acknowledges that future grid stability depends not only on generation capacity, but on adaptive load behavior.

But policy-level flexibility does not automatically translate into operational flexibility.

A data center cannot simply respond to grid signals without coordinating cooling, storage, electrical distribution, and workload behavior in real time. Rapid load shifts without coherent control can introduce instability. Conservative safety margins will widen under uncertainty unless infrastructure can dynamically manage constraint trade-offs.
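As a minimal illustration of facility-level coordination, the Python sketch below splits a grid curtailment request across subsystems, drawing on the cheapest flexibility first and never asking a subsystem for more reduction than it can currently deliver safely. The subsystem names, flexibility figures, and "cost" weights are all hypothetical, and a greedy one-shot allocation is a stand-in chosen for clarity; it is not a real-time controller and not how any particular product works.

```python
from dataclasses import dataclass

@dataclass
class Subsystem:
    name: str
    flexible_mw: float   # reduction it can deliver right now without violating its own limits
    cost_per_mw: float   # hypothetical relative cost (comfort, SLA risk, wear) of using it

def allocate_curtailment(request_mw, subsystems):
    """Split a curtailment request across subsystems, cheapest flexibility first.

    Returns the per-subsystem plan and any shortfall that cannot be met safely.
    """
    plan = {}
    remaining = request_mw
    for sub in sorted(subsystems, key=lambda s: s.cost_per_mw):
        take = min(sub.flexible_mw, remaining)   # never exceed the subsystem's safe flexibility
        plan[sub.name] = take
        remaining -= take
    return plan, remaining

subsystems = [
    Subsystem("cooling", flexible_mw=0.5, cost_per_mw=3.0),
    Subsystem("battery", flexible_mw=2.0, cost_per_mw=1.0),
    Subsystem("deferrable_compute", flexible_mw=1.5, cost_per_mw=2.0),
]
plan, shortfall = allocate_curtailment(3.0, subsystems)
print(plan, "shortfall:", shortfall)
```

When a request exceeds the total safe flexibility, the function reports a shortfall instead of pushing subsystems past their limits, which is the kind of behavior a facility needs before it can respond to grid signals without introducing instability.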

Grid-level flexibility requires facility-level adaptive governance.

Industry initiatives are defining the need for flexibility.

What remains is the architecture capable of operationalizing it safely and profitably.

The Missing Layer

Modern energy systems are layered with physical assets, local control loops, supervisory platforms, and human dashboards. What they lack is a continuous adaptive governance layer capable of coordinating behavior across domains under uncertainty.

Seed IQ™ operates as an adaptive multi-agent autonomous control layer that sits above existing EMS (energy management system), BMS (battery management system), SCADA (supervisory control and data acquisition), and PLC (programmable logic controller)-based control systems. It does not replace those systems or interfere with their safety-critical logic. Instead, it provides a continuous governance layer that coordinates how subsystems behave relative to one another over time.

Subsystems like cooling loops, power distribution nodes, storage assets, compute workloads, and large dynamic loads are treated as interacting Intelligent Agents within a shared execution field. Each Agent maintains beliefs about its own state, constraints, and uncertainty, while continuously negotiating with other Agents to align behavior across the system. Trade-offs between efficiency, safety, and capacity are evaluated in real time.
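The idea of agents negotiating over a shared resource can be illustrated with a classic technique, price-based coordination (dual decomposition). This is a stand-in chosen for clarity; the article does not say Seed IQ™ works this way. Each hypothetical `Agent` privately chooses its power draw in response to a shared price, and the coordinator raises the price while total demand exceeds a facility budget. The targets, stiffness values, budget, and step size are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    name: str
    target_mw: float   # draw the subsystem would choose if it ignored everyone else
    stiffness: float   # cost of deviating from target (higher = less flexible)
    max_mw: float      # local hard limit

    def respond(self, price):
        """Best local response to a shared price: minimise
        stiffness/2 * (p - target)^2 + price * p, clipped to [0, max_mw]."""
        p = self.target_mw - price / self.stiffness
        return min(max(p, 0.0), self.max_mw)

def negotiate(agents, budget_mw, step=0.3, rounds=100):
    """Iteratively adjust a shared price until total demand matches the budget."""
    price = 0.0
    for _ in range(rounds):
        excess = sum(a.respond(price) for a in agents) - budget_mw
        price = max(0.0, price + step * excess)   # raise price if over budget, lower if under
    return price, {a.name: a.respond(price) for a in agents}

agents = [
    Agent("cooling", target_mw=4.0, stiffness=2.0, max_mw=5.0),
    Agent("compute", target_mw=6.0, stiffness=1.0, max_mw=8.0),
    Agent("storage_charging", target_mw=2.0, stiffness=4.0, max_mw=3.0),
]
price, alloc = negotiate(agents, budget_mw=10.0)
for name, mw in alloc.items():
    print(f"{name}: {mw:.2f} MW")
print(f"total: {sum(alloc.values()):.2f} MW at price {price:.3f}")
```

No agent reveals its internal cost model; each only reports its best response to the current price, yet the total converges to the 10 MW budget, with the stiffest (least flexible) agent giving up the least. A production system would also have to handle uncertainty, timing, and non-convex constraints.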

Rather than issuing centralized commands, Seed IQ™ governs trajectories. It evaluates how actions taken by one subsystem propagate across others, and it adjusts coordination in real time to balance efficiency, safety, experience quality, cost, and contractual constraints.
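Evaluating an action by the trajectory it produces, rather than by the state it reacts to, can be sketched as a brute-force rollout over a short forecast horizon. The linear thermal model, its coefficients, the load forecast with a spike, and the candidate cooling levels below are all hypothetical. The sketch is centralized and single-variable, so it illustrates only the trajectory-evaluation idea, not a multi-agent architecture.

```python
def rollout(temp0, loads_mw, cooling_mw, heat_per_mw=0.1, cool_per_mw=0.2):
    """Predict zone temperature over the horizon for a constant cooling level (toy linear model)."""
    temps = [temp0]
    for load in loads_mw:
        temps.append(temps[-1] + heat_per_mw * load - cool_per_mw * cooling_mw)
    return temps

def choose_cooling(temp0, loads_mw, temp_limit, candidates):
    """Return the lowest cooling level whose whole predicted trajectory stays within the limit.

    Falls back to the strongest candidate if none is feasible.
    """
    feasible = [c for c in candidates if max(rollout(temp0, loads_mw, c)) <= temp_limit]
    return min(feasible) if feasible else max(candidates)

forecast_mw = [10, 10, 14, 14, 10, 10]   # hypothetical IT load forecast with a spike
best = choose_cooling(temp0=24.0, loads_mw=forecast_mw, temp_limit=27.0, candidates=range(8))
print("cooling level:", best, "trajectory:", [round(t, 1) for t in rollout(24.0, forecast_mw, best)])
```

Because the whole predicted path is checked, the forecast spike is accounted for before it arrives; in this toy case the smallest feasible cooling level is 4, while level 3 would breach the limit near the end of the horizon.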

This enables safety margins to be tightened dynamically rather than statically. Oscillatory behavior between subsystems is dampened. Curtailment and over-buffering are reduced. Usable capacity increases without compromising reliability. It reduces parasitic overhead. It converts conservative buffers into usable yield.
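One common way to make a safety margin dynamic is to size it from current uncertainty, for example k standard deviations of a load estimate, and to shrink that uncertainty as measurements arrive. The sketch below is an illustration under stated assumptions, not Seed IQ™'s method: a scalar Kalman filter with a random-walk load model tracks a hypothetical load, and the resulting 3-sigma margin is compared with a static margin sized for a fixed worst-case sigma. All limits, noise levels, and readings are invented for the example.

```python
import math

def kalman_step(mean, var, measurement, process_var, noise_var):
    """One predict/update cycle of a scalar Kalman filter with a random-walk model."""
    var += process_var                      # predict: load may have drifted since last step
    gain = var / (var + noise_var)          # weight given to the new measurement
    mean += gain * (measurement - mean)     # move the estimate toward the measurement
    var *= 1.0 - gain                       # uncertainty shrinks after the update
    return mean, var

def usable_capacity_kw(limit_kw, load_kw, sigma_kw, k=3.0):
    """Capacity left after reserving a margin of k standard deviations."""
    return limit_kw - (load_kw + k * sigma_kw)

LIMIT_KW = 1000.0
STATIC_SIGMA_KW = 50.0   # hypothetical worst-case uncertainty baked into a static margin

mean, var = 800.0, STATIC_SIGMA_KW ** 2   # start as uncertain as the static design assumes
readings = [805, 798, 802, 800, 796, 803, 801, 799, 800, 802]
for z in readings:
    mean, var = kalman_step(mean, var, z, process_var=25.0, noise_var=400.0)

static_usable = usable_capacity_kw(LIMIT_KW, mean, STATIC_SIGMA_KW)
dynamic_usable = usable_capacity_kw(LIMIT_KW, mean, math.sqrt(var))
print(f"sigma now {math.sqrt(var):.1f} kW")
print(f"usable with static margin:  {static_usable:.0f} kW")
print(f"usable with dynamic margin: {dynamic_usable:.0f} kW")
```

A statistical margin is only as trustworthy as the model behind it. In the architecture described above, hard protection limits remain with the underlying safety-critical systems, and a tighter, uncertainty-aware margin would sit on top of them rather than replace them.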

In data centers, this manifests as measurable PUE reduction and increased sellable compute capacity. In stadiums, it manifests as reduced peak draw and monetizable headroom. In grid-integrated environments, it enables coherent participation in flexibility programs without destabilizing local systems.

Flexibility without governance increases risk.

Flexibility with adaptive control increases yield.

The underlying physics does not change. The energy generation assets do not change. The transmission lines do not change.

What changes is how the system governs itself under uncertainty.

Energy infrastructure has reached a point where incremental gains from hardware alone are flattening. The next layer of performance will come from adaptive execution control.

Energy's Next Inflection Point

Electrification is accelerating. Compute demand is rising. Renewables are becoming more deeply embedded in the grid. Expanding physical infrastructure is becoming slower, more constrained, and more expensive.

Before building more infrastructure, organizations must ask a simpler question:

Are we fully utilizing what we already have?

In many cases, the answer is no.

Energy infrastructure does not simply need more production.

It needs coherent execution.

The next transformation in energy will not be defined solely by larger turbines or denser batteries. It will be defined by adaptive control layers that convert fragmented infrastructure into coordinated, high-yield systems.

The opportunity is not merely to expand capacity.

It is to govern it intelligently.

This article is the fourth in a series examining what enterprise operations require from AI.

In the next article, we turn to robotics and autonomous systems and explore why autonomy fails without execution governance.

If you’re building the future of quantum infrastructure, energy systems, supply chains, manufacturing, finance, or robotics, this is where the conversation begins.

Learn more at AIX Global Innovations.
