AI for Quantum: Why Scaling Quantum Computing Is an Operations Challenge - Not a Physics Problem

- ByDenise Holt

- January 4, 2026

Quantum Computing has a Real Problem.

Quantum computing has made extraordinary progress over the past decade. Qubit counts have increased, gate fidelities have improved, and error correction techniques continue to advance. Theoretical frameworks are maturing, hardware platforms are diversifying, and investment across the ecosystem remains strong.

Yet one reality remains unchanged. Quantum systems still do not scale into reliable, production-level tools for real-world problems.

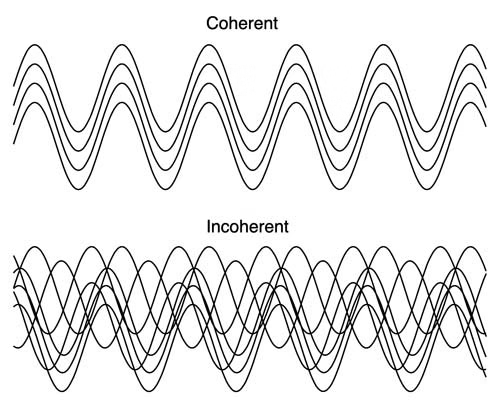

The dominant explanation points to physics: decoherence, noise, and error rates that remain stubbornly difficult to suppress.

Those challenges are real, but they are not the whole story.

What is becoming increasingly clear is that the difficulty in scaling quantum computing is not only a physics problem. It is an operations problem. More specifically, it is a problem of how quantum execution is governed over time under uncertainty, and today’s AI systems are fundamentally ill-suited to that task.

Quantum systems do not fail in clean, discrete ways. They drift. They degrade. They slide gradually into operations where results appear numeric, structured, and plausible, yet are no longer meaningful. Execution continues, optimizers keep iterating, hardware time is consumed, and engineers often discover the failure only after the fact. By then, cost has already been incurred, and insight has already been lost.

This is not a question of better prediction. It is a question of execution governance.

Quantum Execution Is a Continuous Process, Not a Discrete Event

Quantum programs do not execute under stable, well-defined conditions. Noise is continuous, not episodic. Decoherence accumulates gradually over the course of a run. Error regimes can shift mid-execution. Measurements are partial, delayed, and often ambiguous. A quantum run rarely fails at a single identifiable moment. Instead, it drifts away from viability.

Operationally, this means quantum execution cannot be treated as a “run-and-evaluate” process. By the time results are evaluated, the opportunity to intervene has already passed. Execution viability must be assessed continuously while the program is running, not only after it completes.

Most current approaches to quantum control and optimization do not operate this way. They rely on static assumptions, offline calibration, or post hoc evaluation. As a result, systems continue executing long after the trajectory has become non-viable, consuming expensive hardware time and producing outputs that appear numeric and well-formed but are fundamentally meaningless.

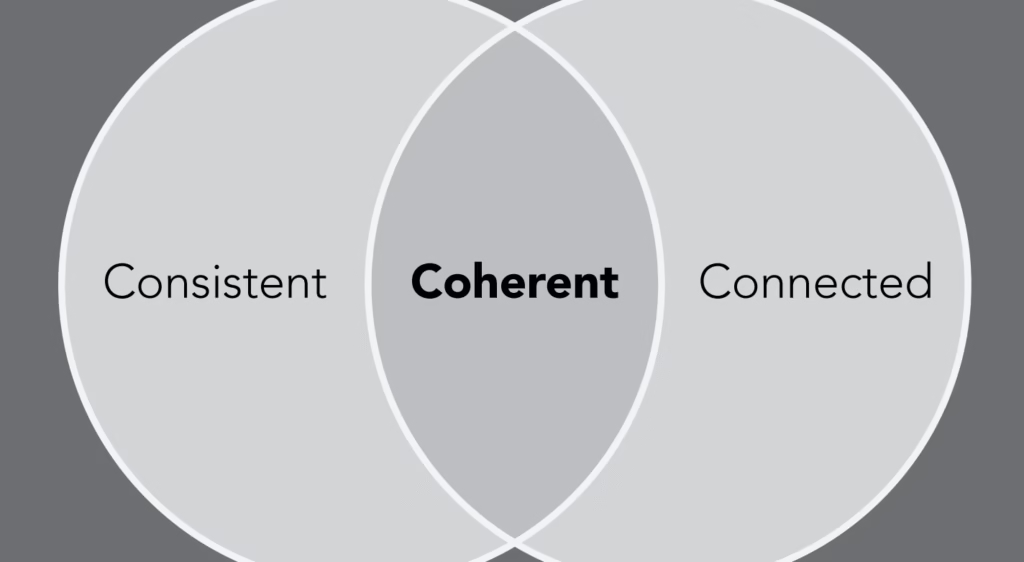

Scaling quantum computing requires intelligence that can remain engaged throughout execution, maintaining a live understanding of whether ongoing behavior is still coherent and recoverable.

Silent Failure Is the Dominant Failure Mode in Quantum Systems

One of the most dangerous aspects of quantum computing today is that failure is often invisible. Runs do not crash. They complete. Optimizers continue iterating. Pipelines advance downstream. Only later, sometimes far later, does it become clear that the results were invalid.

This is not an error detection problem in the traditional sense. It is a trajectory viability problem. At some point during execution, the system crosses into a region where valid outcomes are no longer attainable, even if the program continues to run. Existing systems lack the ability to recognize this moment.

Operational intelligence for quantum systems must be able to detect inevitability, not anomalies. It must recognize when recovery is no longer possible and signal that reality explicitly, early enough to matter. Without this capability, quantum systems will remain expensive, fragile, and unreliable regardless of improvements in raw hardware performance.

In quantum computing, the ability to stop is just as important as the ability to compute.

Worst-Case Behavior Matters More Than Average Performance

In classical computing, average performance metrics often provide a useful picture. In quantum computing, they are deeply misleading. A small number of rare failures can dominate cost, invalidate experiments, and erode trust in results. Tail behavior, not mean behavior, determines operational viability.

Many quantum systems can show impressive average accuracy while still being operationally unusable because their worst-case behavior is unbounded. A single catastrophic phase excursion can invalidate an entire run. A handful of unstable executions can consume the majority of available and costly hardware time.

Scaling quantum computing requires intelligence that actively governs worst-case behavior. The goal is not merely to improve average fidelity, but to compress tail risk so that rare events do not dominate outcomes. A system with slightly lower average performance but tightly bounded worst cases is far more valuable than one that occasionally produces spectacular results and frequently produces silent failures.

Safe Halting Is a Control Capability, Not an Error State

Today’s quantum systems do not know how to stop themselves.

Execution continues until completion, regardless of whether continued operation still makes sense. This leads to wasted compute, damaged confidence in results, and unpredictable costs.

True operational intelligence treats halting as a first-class capability. When execution viability is lost, the system should be able to terminate safely and explicitly, preserving resources and preventing downstream harm.

Halting is not failure. It is governance.

In enterprise and national lab environments, quantum access commonly costs hundreds of dollars per QPU hour once hardware scheduling and operational overhead are included. A single failed VQE campaign can easily burn tens of thousands of dollars in wasted runtime and engineering effort. Safe halting converts uncontrolled loss into bounded, predictable operating cost.

The Barren Plateau Problem Is a Structural Failure Mode, Not an Optimization Glitch

As parameterized quantum circuits become deeper or more expressive, the optimization landscape collapses. Gradients shrink toward zero across most directions. Gradient variance disappears. Parameter updates cease to carry meaningful information. The circuit still runs. Measurements still return numbers. But those numbers no longer encode useful signal.

This is not a numerical artifact or a hardware glitch. It is a structural failure mode that becomes more likely as circuits scale. Once a system enters a barren plateau, continued execution is computationally wasteful. Hardware time, shot budgets, and engineering effort are consumed, yet no additional insight is gained.

Critically, most quantum software stacks do not treat barren plateaus as an explicit operational state. As long as a job completes successfully, execution is considered valid. As a result, barren plateaus manifest as silent failure rather than as a managed condition.

This places a hard ceiling on practical quantum scaling, regardless of improvements in qubit quality or error correction.

Why Conventional AI is the Wrong Tool for Quantum Operations

Most AI techniques used in quantum computing today are designed to optimize specific tasks. They help decode error signals, estimate noise, tune control parameters, and manage hardware drift.

These tools are valuable, and they reflect serious engineering progress, but they operate at the wrong level.

They solve narrow problems inside the system. They do not govern the system as a whole.

Running a quantum system is not a sequence of isolated fixes. It is a continuous operation unfolding over time under uncertainty, across tightly coupled classical and quantum control loops. Problems rarely appear as sudden failures. Instead, they emerge as gradual loss of coherence and compounding instability, and the system eventually crosses a threshold where recovery is no longer possible.

Conventional AI focuses on improving predictions or optimizing short-term performance. It asks what adjustment improves results right now, rather than whether execution remains viable and how it should adapt to stay that way.

Neural networks can reduce average error rates. Reinforcement learning can tune control policies, but it depends on trial-and-error and centralized reward signals, both of which become costly and unstable as quantum systems scale and drift accelerates. These approaches improve local metrics while leaving the system exposed to rare but expensive failure modes.

Even when combined, today’s AI tools remain reactive. They respond after problems appear. They do not maintain an internal representation of execution health, constraint boundaries, or irreversible failure regions over time.

Quantum systems are not failing to scale because today’s AI is insufficiently advanced. They fail because the wrong class of intelligence is being applied. They struggle because the dominant tools are built for prediction and optimization, not for continuous operational control.

What is missing is an intelligence layer that can manage uncertainty over time, recognize when trajectories are becoming unsafe, and adapt before collapse occurs.

Quantum Systems Are Already Distributed Systems

Quantum computing is not a single system. It is a hybrid stack composed of classical controllers, quantum hardware, noise environments, optimization loops, and orchestration layers. Failures most often occur at the interfaces between these components, not within any one of them.

Operational intelligence must be able to coordinate behavior across this distributed landscape without relying on centralized micromanagement or unreliable control loops. Centralized optimization breaks down under uncertainty. Rigid feedback mechanisms amplify instability when conditions change.

What is needed today is intelligence that can maintain coherence across execution layers, adapting behavior locally while preserving system-level stability. This is not something that can be achieved by bolting orchestration logic on top of existing models. It requires a fundamentally different approach to how execution is governed.

Adaptation Must Occur During Execution, Not Between Runs

Quantum environments change faster than retraining cycles can keep up. Noise statistics drift. Hardware behavior varies from day to day. Recalibration is expensive and often disruptive.

Any intelligence system that requires retraining or redeployment in order to adapt is already too slow for real quantum operations. Adaptation must occur during execution, within bounded constraints, without restarting the pipeline.

This does not mean unconstrained learning. It means governed adaptation that preserves coherence and stability. It means adjusting control within a constrained solution-space to remain viable as conditions evolve. Without this capability, quantum systems will remain unreliable, regardless of advances in algorithms or hardware.

Seed IQ™ as an Adaptive Multi-Agent Autonomous Control Layer for Quantum Execution

A Different Class of Intelligence Is Emerging

Seed IQ™ is designed as an adaptive multi-agent autonomous control layer rather than a predictive model or optimization engine. Instead of attempting to forecast outcomes or tune parameters in isolation, it maintains a structured internal understanding of execution viability, coherence, and constraint satisfaction as execution unfolds.

In the context of quantum computing, this means Seed IQ™ can monitor execution trajectories continuously, detect when stability is degrading toward irrecoverable regions, manage worst-case behavior explicitly, and intervene when necessary, including halting execution safely when continued operation no longer makes sense.

Importantly, this intelligence operates without retraining cycles, without symbolic control loops, and without centralized micromanagement.

Adaptation occurs during execution, bounded by explicit constraints that preserve stability, coherence, and mission alignment. The system does not chase optimization objectives blindly. It governs whether execution remains worth pursuing at all.

This manifests as a multi-agent system operating during execution. Independent agents monitor distinct aspects of system health such as gradient signal, variance collapse, noise behavior, and stability over time. Each agent adapts locally, according to Active Inference principles, while remaining bounded by explicit operational constraints.

Crucially, no single signal determines action. Coherence is maintained through belief propagation across agents rather than centralized command or symbolic control loops. This allows weak signals that are individually ambiguous to become meaningful when they align.

When collective evidence indicates that execution remains viable, the system allows optimization to continue. When that coherence breaks down, the system intervenes. Intervention may involve structural adjustment or safe halting, depending on what preserves viability.

This is not a replacement for quantum hardware, control software, or error correction. It is a complementary layer that governs how those components interact over time.

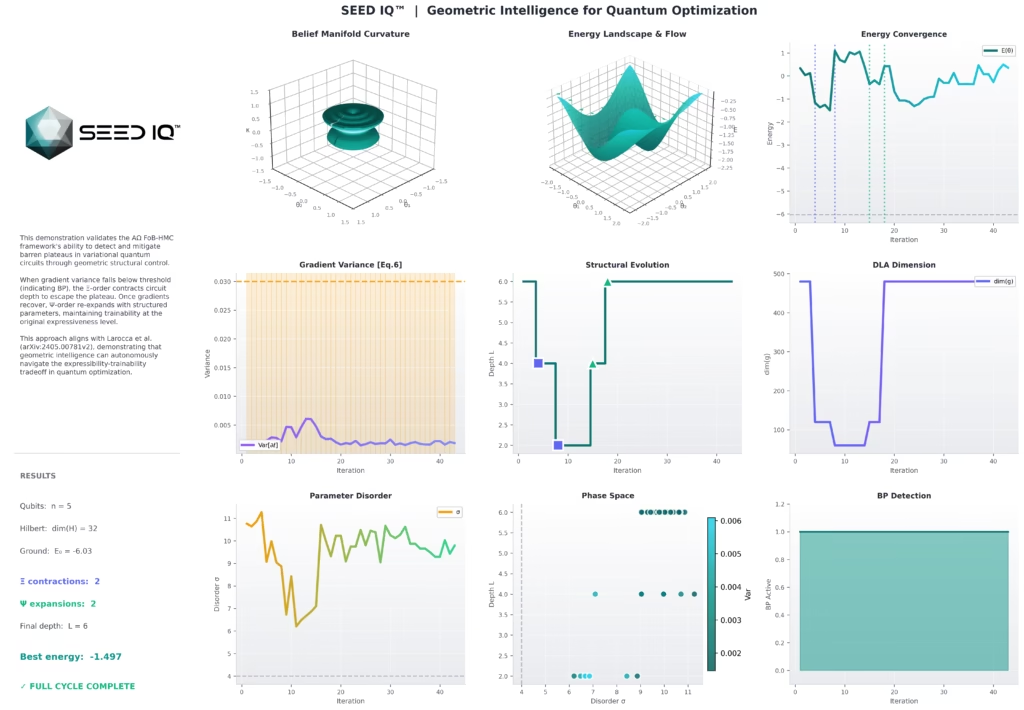

Preliminary Evidence from Barren Plateau Detection and Control

In recent internal simulations using the QuTiP framework – an open-source Python framework for the dynamics of open quantum systems, Seed IQ™ has demonstrated the ability to explicitly detect barren plateau, showing early promise in addressing this problem.

In controlled demonstrations, Seed IQ’s™ underlying ΑΩ FoB HMC™ framework was able to detect when a variational circuit had entered a non-informative regime by monitoring gradient magnitude, variance, and persistence over time. Rather than continuing execution blindly, the system identified loss of computational viability and authorized structural intervention, restoring curvature to the optimization landscape, followed by structured expansion that preserved learned coherence rather than reintroducing disorder.

While these results are preliminary and simulated, and real hardware validation remains the goal, they suggest that barren plateaus can be treated as an operational state that is detectable, actionable, and governable during execution. This represents a meaningful departure from current workflows that simply avoid depth or rely on repeated restarts.

Safe Quantum Halting is the Missing Economic Control Layer in Quantum Computing

Seed IQ™ “Safe Halting” turns quantum execution losses into a scalable, high-margin control layer.

Seed IQ™ introduces safe halting as an explicit capability. It detects when a quantum run is no longer viable and stops execution early. This is not about improving solution quality. It is about preventing invalid results from consuming time money and downstream decision capacity.

In enterprise and national lab environments, quantum access typically costs hundreds of dollars per hour once hardware scheduling, operations overhead, and support are included. A single production scale VQE campaign often consumes tens of QPU hours plus significant engineering oversight. When a run fails silently, the effective loss commonly lands in the $10K to $50K range and can exceed $100K when recalibration and queue delays are involved.

Safe halting changes the economics completely. By stopping runs early when viability is lost Seed IQ™ reduces wasted QPU time, reduces wasted human time, and prevents invalid quantum outputs from entering pipelines. This directly converts uncontrolled spend into bounded predictable operating cost.

The impact compounds at scale. Organizations running hundreds of quantum experiments per year can eliminate millions of dollars in wasted execution. More importantly teams can run pipelines unattended because failures no longer require manual detection after the fact. This unlocks automation reliability and trust which are prerequisites for any serious deployment.

Seed IQ™ does not replace quantum algorithms. It governs them. It does not promise better answers. It ensures that bad answers do not persist long enough to cause damage. At the current stage of quantum hardware this is the highest leverage intervention available because preventing waste is more valuable than marginal accuracy gains.

Safe quantum halting is not a feature. It is infrastructure. It is the line between experimental curiosity and economically viable quantum operations.

How Seed IQ™ Relates to Topological Qubits

Discussions about quantum scaling increasingly point to topological qubits as a potential breakthrough. This approach, most visibly pursued by Microsoft, aims to make qubits more stable at the hardware level by encoding information in physical states that are naturally resistant to certain classes of noise.

If successful at scale, topological qubits could significantly reduce error rates and lower the burden placed on error correction, another quantum scaling problem. This is an important and promising direction in quantum hardware development.

But even fundamentally more stable qubits do not eliminate the operational problem.

Quantum systems would still evolve over time under uncertainty. Control loops would still interact across classical and quantum layers. Execution trajectories would still drift. And systems would still face thresholds beyond which continued execution no longer produces meaningful outcomes.

Topological qubits reduce how often things go wrong. They do not decide what the system should do when conditions change, tradeoffs emerge, or execution viability degrades.

Seed IQ™ operates at a different layer entirely.

It does not compete with qubit technologies, error correction codes, or hardware-level protection. It governs execution across them. Seed IQ™ treats quantum operation as a continuous process rather than a sequence of corrections, maintaining a persistent internal understanding of viability, constraints, and stability over time.

Whether a system is built on superconducting qubits, photonic encodings, or topological qubits, the same operational challenge remains: how to manage execution coherently under uncertainty, prevent slow trajectory collapse, and intervene before failure becomes inevitable.

In fact, if topological qubits succeed, the need for execution governance increases rather than decreases. Longer runtimes, higher system value, and reduced random noise shift the risk toward slow, systemic failures that are harder to detect and more expensive to recover from.

Topological qubits make quantum hardware more stable.

Seed IQ™ makes quantum operations governable.

They are not substitutes.

They are complementary layers in the same future stack.

Quantum as the Proof Case

Quantum computing is one of the most unforgiving operational environments in existence. Noise is continuous. Failures are subtle. Costs are high. Small instabilities compound quickly, and by the time a breakdown becomes visible, recovery is often no longer possible.

That is precisely why quantum matters.

Quantum systems expose, earlier than most domains, what happens when systems become too complex, too dynamic, and too tightly coupled to be managed by prediction, optimization, or post hoc correction alone.

The same operational challenges already exist across modern enterprises. They appear in global supply chains, energy grids, autonomous robotics, financial infrastructure, and large-scale digital platforms. Quantum systems simply reach the limits first, because their margins are thinner and their failure modes are less forgiving.

If execution can be governed coherently under quantum conditions, it can be governed anywhere.

What This Points Toward

Quantum computing is not failing to scale because it lacks intelligence. It is failing to scale because it lacks the right kind of intelligence.

The operational challenges exposed in quantum systems are not unique. They are simply more visible. Continuous uncertainty, silent degradation, tightly coupled execution layers, and failure modes that emerge as trajectories rather than events, already exist across modern enterprise systems.

What makes this moment important is that we are beginning to see credible evidence of a different approach.

Preliminary simulations using Seed IQ™, including QuTiP-based quantum simulation experiments exploring barren plateaus, execution stability, and tail risk behavior, suggest that adaptive autonomous control can actively govern quantum execution rather than merely react to it.

These results do not prove that all quantum scaling challenges are solved. They do demonstrate something more fundamental: that execution viability, stability, and coherence can be managed dynamically during operation, even under non-stationary and adversarial conditions.

That is the missing capability.

Seed IQ™ operates as an adaptive multi-agent autonomous control layer, not a prediction engine and not an optimization heuristic. It governs how complex systems behave over time by coordinating multiple agents that learn, adapt, and act locally while remaining coherent at the system level. This enables continuous assessment of whether execution remains viable, when adaptation is warranted, and how to intervene without destabilizing the system itself.

Quantum computing provides a uniquely hard proving ground for this kind of intelligence. If execution can be governed coherently there, under continuous noise, drift, and irreversible failure boundaries, it strongly suggests that the same class of intelligence can govern other complex systems where uncertainty, cost, and coordination dominate outcomes.

This is why quantum matters in this series.

It reveals what modern operations actually require from intelligence.

The early signals point toward Seed IQ™ occupying a solution-space that current AI approaches do not reach. One where intelligence is measured not by how well it predicts or optimizes, but by how well it governs execution under uncertainty, contains failure before it cascades, and preserves coherence as systems scale.

If that capability continues to hold, quantum computing will not just benefit from it. It will validate it.

And that validation would extend far beyond quantum, toward a new foundation for governing the most complex systems that enterprises and industries rely on every day.

This is not a shift in tooling. It is a shift in responsibility.

The question is not whether AI can model the world more accurately.

The question is whether it can be trusted to govern it.

This article is the second in a series examining what enterprise operations require from AI. In the next article, we move from quantum systems to exploring global supply chains — a very different domain, but one shaped by the same forces: uncertainty, distributed coordination, and rare events that dominate outcomes.

If you’re building the future of supply chains, energy systems, manufacturing, finance, robotics, or quantum infrastructure, this is where the conversation begins.

Learn more at AIX Global Innovations.

Join our growing community at Learning Lab Central.

The global education hub where our community thrives!

Scale the learning experience beyond content and cut out the noise in our hyper-focused engaging environment to innovate with others around the world.

Join the conversation every month for Learning Lab LIVE!